A standard is a set of protocols or rules that define how a system operates. Standards provide a coherent platform from which information can be created, extracted, and exchanged. Without these protocols, there would be no consistency in the handling of information. Television is the conversion of light to electrical energy. The process by which this conversion takes place is referred to as a television standard or system. Standards are necessary so that video signals can be created and interpreted by equipment manufactured by different companies throughout the world. For example, video levels, broadcast frequencies, subcarrier frequency, and frame rates are all dictated by a specific standard.

Analog Standards

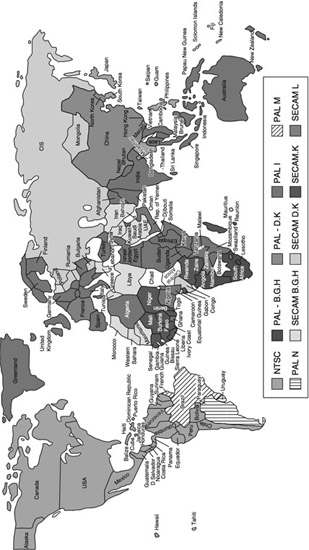

NTSC analog is one example of a video standard. Other world standards include PAL and SECAM. As you can see in Figure 12.1, each standard is used in many different countries.

In 1941, the first NTSC standard was developed. In 1953, a new NTSC standard added provision for color television. Because so many black-and-white receivers were still in use, the 1953 standard was designed so that color broadcasts remained compatible with those existing receivers. NTSC was the first widely adopted broadcast color system and remained dominant until the first decade of the 21st century, when it was replaced by digital standards.

Setting Digital Standards

Like the analog standards NTSC, PAL, and SECAM, digital standards are set by international agreement through various worldwide organizations, many of which fall under the auspices of the ISO, the International Organization for Standardization. Established in 1947, this nongovernmental organization, made up of over 165 member countries, sets technical standards for scientific, technological, and economic activity.

NOTE ISO’s official definition of a standard is: A document that provides requirements, specifications, guidelines or characteristics that can be used consistently to ensure that materials, products, processes and services are fit for their purpose.

Some of the video organizations under the auspices of the ISO include SMPTE, NTSC, the EBU (European Broadcasting Union), and the ATSC (Advanced Television Systems Committee). The ATSC is an international nonprofit organization formed in 1982 to develop voluntary standards for digital television. Its approximately 140 members represent the broadcast, broadcast equipment, motion picture, consumer electronics, computer, cable, satellite, and semiconductor industries. At a time when manufacturers were researching, developing, and vying for the best way to play, record, and broadcast digital video, it was imperative to set a standard that all manufacturers could adhere to. In 1995, the ATSC defined and approved the Digital Television Standards in a document called simply A/53. It included standard definition television (SDTV) and high definition television (HDTV), discussed later in this chapter.

Standards Parameters

Before defining specific standards, an understanding of the basic parameters of a standard is necessary. Analog television standards had specific criteria, while the ATSC’s recommended Digital Television Standard allows for a combination of various parameters. Some of the parameters used to define a standard include how many pixels make up the image (image resolution), the shape of the image (aspect ratio), the shape of the pixels that make up the image (pixel aspect ratio), the scanning process used to display the image, the audio frequency, and the number of frames displayed per second (frame rate or fps). A specific combination of these parameters is referred to as a standard format.

NOTE The term format may also be used to describe a video medium such as VHS, Blu-ray Disc, HDCAM, HDV, and so on. A given standard, or set of protocols, can contain several different formats.

Image Resolution

Image resolution is the detail or quality of the video image as it is created or displayed in a camera, video monitor, or other display source. The amount of detail is controlled by the number of pixels (picture elements) in a horizontal scan line multiplied by the number of scan lines in a frame. The combined pixel and line count in an image represents what is known as the spatial density resolution, or how many total pixels make up one frame. For standard definition ATSC digital video, the image resolution is 720 pixels per line with 480 active scan lines per frame (720 × 480).
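The spatial density resolution described above is simply pixels per line multiplied by lines per frame. The short Python sketch below does that arithmetic. The function name is hypothetical, and the 1920 × 1080 comparison uses the HD raster discussed later in this chapter.

```python
def pixels_per_frame(pixels_per_line: int, active_lines: int) -> int:
    """Spatial density resolution: total pixels that make up one frame."""
    return pixels_per_line * active_lines

sd = pixels_per_frame(720, 480)    # ATSC standard definition
hd = pixels_per_frame(1920, 1080)  # full-raster HD, for comparison
print(f"SD: {sd:,} pixels per frame")  # SD: 345,600 pixels per frame
print(f"HD: {hd:,} pixels per frame")  # HD: 2,073,600 pixels per frame
print(f"HD has {hd // sd} times as many pixels as SD")  # 6 times
```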

Increasing the number of pixels in an image increases its resolution, and with it the amount of detail the image can hold. If the displayed image is kept at the same size, the higher-resolution version shows more detail. Alternatively, a higher-resolution image can be displayed much larger while keeping the same degree of detail as the lower-resolution image.

For example, if a 720 × 480 image is displayed on a 21-inch monitor, and the resolution of that image is increased, the image would have more detail. The same image with increased resolution could be displayed on a larger monitor with no loss of detail.

Aspect Ratios

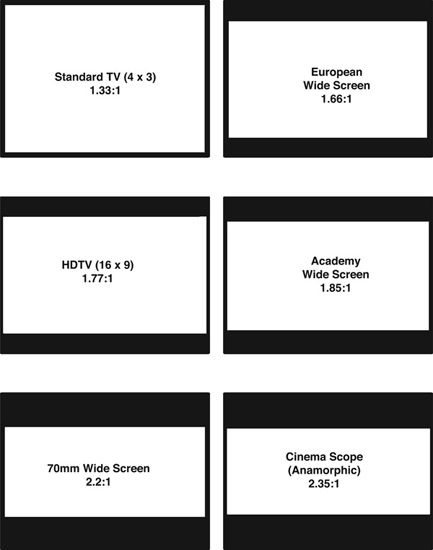

Video images are generally displayed in a rectangular shape. To describe the particular shape of an image, the width of the image is compared to its height to come up with its aspect ratio. This ratio describes the shape of the image independent of its size or resolution. Two common aspect ratios in video are 16 × 9 and 4 × 3. An image with a 16 × 9 aspect ratio would be, for example, 16 units across and 9 units tall. The actual ratio does not depend on any particular unit of measure. If a 16 × 9 image or display were 16 feet wide, it would be 9 feet tall. If it were 16 inches wide, it would be 9 inches tall. A display that is 9 yards tall would be 16 yards wide. Think of a stadium scoreboard at that size. Older standard definition displays were a bit closer to square. A display that is 4 feet wide by 3 feet tall would be referred to as a 4 × 3 ratio.

Another common way to note aspect ratio is to divide the width of the image by its height. In the 16 × 9 example, 16 divided by 9 is 1.777..., which is usually rounded to 1.78 and expressed as 1.78 to 1, or as the ratio 1.78:1. For each unit of height, the image is 1.78 units wide. The older 4 × 3 standard definition image is 1.33:1, because 4 divided by 3 is 1.333....
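The two ways of writing an aspect ratio are easy to convert between. The minimal sketch below converts a width × height ratio into the "units of width per one unit of height" form. It also shows that the ratio does not depend on the unit of measure. The helper names are hypothetical.

```python
def aspect_decimal(width: float, height: float) -> float:
    """Express an aspect ratio as units of width per one unit of height."""
    return width / height

def height_for_width(width: float, ratio_w: int, ratio_h: int) -> float:
    """Height of an image of the given width at a ratio_w x ratio_h aspect ratio."""
    return width * ratio_h / ratio_w

for w, h in [(16, 9), (4, 3)]:
    print(f"{w} x {h} -> {aspect_decimal(w, h):.2f}:1")  # 1.78:1, then 1.33:1

# Same shape regardless of units: 16 feet wide means 9 feet tall.
print(height_for_width(16, 16, 9))  # 9.0
```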

The film world uses a number of different image ratios, but they are almost always expressed as comparisons to 1 unit of height. For example, a theatrical release movie shown in CinemaScope has an aspect ratio of roughly 21 × 9, but it is more commonly referred to as 2.35:1. Another common film aspect ratio is 1.85:1, which is closer to the 16 × 9 aspect ratio of HD (Figure 12.2).

Pixel Aspect Ratio

The pixel aspect ratio is the shape of the pixel itself, expressed as its width compared with its height. In computer displays, pixels are square with an aspect ratio of 1 to 1. NTSC pixels have an aspect ratio of 0.91:1, which makes them tall and thin. When setting the standard for digitizing the NTSC video image, the intent was to digitize the image at the highest practical resolution. While the number of pixels in a horizontal scan line could be set to any amount, the number of scan lines could not be arbitrarily increased since they are part of the NTSC standard. Therefore, the pixels were made narrow, vertical rectangles, allowing more pixels per line and added image resolution.

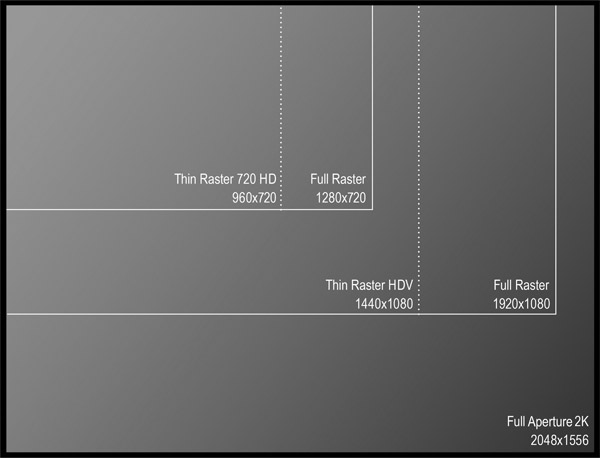

In the digital world, the aspect ratio of the pixels is sometimes changed so that fewer samples are taken on each line. (Remember, the number of pixels in a horizontal scan line can be set to any amount, but the number of scan lines cannot be arbitrarily increased since they are part of an existing standard.) Widening each pixel reduces the amount of data being stored and sent, but it also reduces horizontal resolution. For example, a camera may have a 1920 × 1080 full-raster sensor, meaning that the sensor itself really is 1920 × 1080 and its pixel aspect ratio is square, or 1:1. However, some recording formats are not full raster. By widening each pixel to an aspect ratio of 1.33:1, the HD 1920 × 1080 image can be reproduced at 1440 × 1080. This reduces the amount of data that has to be stored or transmitted by about a quarter. The 1440 × 1080 image is referred to as a thin raster format (Figure 12.3).
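The sketch below checks the thin-raster arithmetic with exact fractions. It assumes that the displayed shape equals the stored width divided by the stored height, multiplied by the pixel aspect ratio. The 1.33:1 pixel is exactly 4/3, which is 1920 ÷ 1440. The reduction is one quarter of the pixels. Put the other way, the full-raster frame carries one-third more pixels than the thin-raster frame. Function names are hypothetical.

```python
from fractions import Fraction

def display_aspect(stored_width: int, stored_height: int, pixel_aspect: Fraction) -> Fraction:
    """Shape of the displayed image: stored width stretched by the pixel aspect ratio."""
    return Fraction(stored_width, stored_height) * pixel_aspect

full = display_aspect(1920, 1080, Fraction(1, 1))  # square pixels
thin = display_aspect(1440, 1080, Fraction(4, 3))  # pixels 1.33 times wider than tall
print(full, thin)  # 16/9 16/9: both display as a 16 x 9 image

savings = 1 - Fraction(1440 * 1080, 1920 * 1080)
print(f"data saved per frame: {float(savings):.0%}")  # 25%
```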

Interlace and Progressive Scan Modes

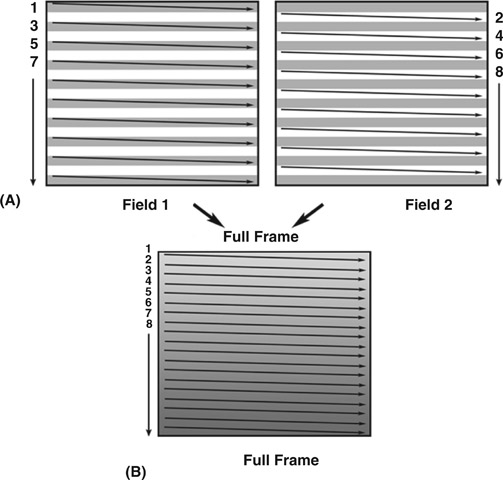

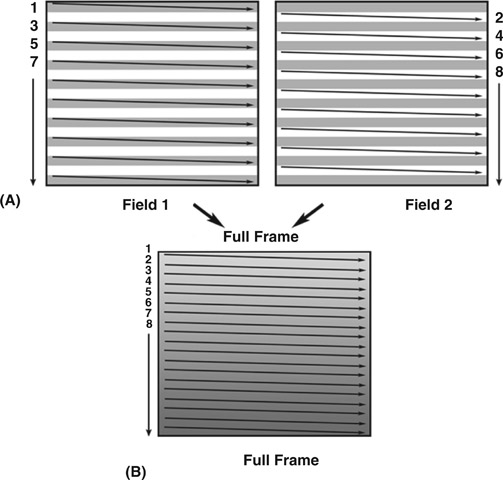

Interlace scanning is the process of splitting a frame of video into two fields. One field of video contains the odd lines of information while the other contains the even lines of the scanned image. When played back, the two fields are interlaced together to form one complete frame of video.
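The sketch below models the field split and its reassembly. A frame is represented as a list of scan lines, which is a hypothetical stand-in for rows of pixels. The code models only the line ordering. In a real interlaced camera, the two fields are also captured at slightly different moments.

```python
from typing import List, Tuple

Frame = List[str]  # one entry per scan line; a stand-in for rows of pixels

def split_fields(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a frame into an odd field (lines 1, 3, 5, ...) and an even field (2, 4, 6, ...)."""
    odd = frame[0::2]   # list index 0 holds scan line 1
    even = frame[1::2]
    return odd, even

def weave(odd: Frame, even: Frame) -> Frame:
    """Interlace the two fields back together into one complete frame."""
    frame: Frame = []
    for i in range(max(len(odd), len(even))):
        if i < len(odd):
            frame.append(odd[i])
        if i < len(even):
            frame.append(even[i])
    return frame

lines = [f"line {n}" for n in range(1, 7)]
odd, even = split_fields(lines)
print(odd)   # ['line 1', 'line 3', 'line 5']
print(even)  # ['line 2', 'line 4', 'line 6']
assert weave(odd, even) == lines
```

With a 480-line frame, each field would hold 240 lines.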

Whereas television historically used an interlace scanning process, the computer uses a non-interlaced, or progressive, scanning technique. Computer screens can be refreshed 72 times a second or faster. Because this rate is faster than the persistence of vision, the problem of flicker is eliminated, so there is no need to split the frame into two fields. Instead, the image is scanned from top to bottom, line by line, and the complete frame is captured or reproduced with each scan of the image (Figure 12.4).

Progressively scanned images have greater apparent clarity of detail than an equivalent interlaced image. The progressively scanned image holds a complete frame of video, whereas an interlaced frame contains images from two moments in time, each at half the resolution of the full frame.

The computer industry has always demanded far more detail and higher image quality than the television industry. Computer images usually require very fine detail and often include a great deal of text that would be unreadable on a standard television screen, whereas traditional television images did not require the same degree of detail. Whether the signal is analog or digital, the limiting factor is the bandwidth available to transmit the information. Interlace scanning allowed more information to be transmitted within the limited spectrum allotted for signal transmission.

Figure 12.4 (A) Interlaced Scanning and (B) Progressive Scanning

When listing the criteria for a particular standard, the indication of whether the scanning mode is interlaced or progressive appears as an “i” or “p” following the line count, such as 480p, 720p, 1080i, and so on.
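The labeling convention can be read mechanically. The following is a minimal sketch of a parser for labels such as 480p or 1080i. The type and function names are hypothetical. The parser deliberately accepts only the bare line-count-plus-letter form described here.

```python
import re
from typing import NamedTuple

class ScanFormat(NamedTuple):
    lines: int
    progressive: bool

def parse_format(label: str) -> ScanFormat:
    """Parse a label such as '480p', '720p', or '1080i' into line count and scan mode."""
    match = re.fullmatch(r"(\d+)([ip])", label.strip().lower())
    if match is None:
        raise ValueError(f"not a line-count/scan-mode label: {label!r}")
    return ScanFormat(lines=int(match.group(1)), progressive=match.group(2) == "p")

for label in ("480p", "720p", "1080i"):
    fmt = parse_format(label)
    mode = "progressive" if fmt.progressive else "interlaced"
    print(f"{label}: {fmt.lines} lines, {mode}")
```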

Frame Rate

The frame rate, regardless of the pixel or line count, is the number of full frames scanned per second. This rate represents what is known as the temporal resolution of the image, or how fast or slow the image is scanned. Frame rates vary depending on the rate at which the image was captured or created, the needs or capability of the system using the video image, and the capability of the system reproducing the image.

For example, an image may have been captured at 24 frames per second (fps), edited at 29.97 fps, and reproduced at 30 fps. Each change in the frame rate would denote a different format. Common frame rates include 23.98, 24, 25, 29.97, 30, 59.94, and 60 fps. Before color was added to the NTSC signal, black and white video was scanned at 30 frames per second, or 30 fps. When color was added to the signal, the scanning rate was slowed down slightly, to 29.97 fps, so that the new color information would not interfere with the sound carrier. That legacy rate is still used in countries that once broadcast in NTSC, to make it easier to incorporate older, previously recorded material. Projects that do not require a broadcast version can use the integer, or non-decimal, rate (24, 25, 30, or 60 fps) that best suits the source material.
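The decimal rates are the integer rates slowed by the same small factor. The text does not state that factor. The commonly cited value is exactly 1000/1001, a slowdown of about 0.1%. The sketch below applies that factor with exact fractions.

```python
from fractions import Fraction

NTSC_FACTOR = Fraction(1000, 1001)  # slowdown applied to the integer rates when color was added

for nominal in (24, 30, 60):
    exact = nominal * NTSC_FACTOR
    print(f"{nominal} fps -> {exact} = {float(exact):.3f} fps")
# 24 fps -> 24000/1001 = 23.976 fps
# 30 fps -> 30000/1001 = 29.970 fps
# 60 fps -> 60000/1001 = 59.940 fps
```

Working in fractions keeps these rates exact. A rounded value such as 29.97 drifts slightly from the true rate over a long program.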

Conventional Definition Television (CDTV)

When digital video standards were created, the ATSC redefined the prior standards that were already in use. The analog standards used throughout the world were placed in a newly defined category called Conventional Definition Television, or CDTV. CDTV refers to all the original analog television standards.

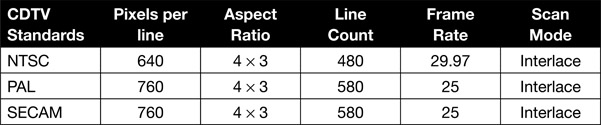

In the NTSC system, the CDTV standard image resolution is 640 pixels by 480 lines of active video. The frame rate is 29.97 frames per second with two interlaced fields, each containing 240 lines of active video. The PAL standard image resolution is 760 pixels by 580 lines. The PAL frame rate is 25 fps with two interlaced fields, each containing 288 lines of active video. SECAM also has 760 pixels per line and 580 lines, and shares the same frame rate and scan mode as PAL (Table 12.1).

In the original analog standards, each individual standard contained its own method for processing color information. With the advent of digital video standards, color encoding was redefined in a new international standard called CCIR 601. The standard is named after the International Radio Consultative Committee (CCIR, an abbreviation of its French name), which first developed it. Further refinement of the color encoding process, and the merger of the CCIR with other organizations, led to a change in name from CCIR 601 to ITU-R 601, after the International Telecommunication Union.

NOTE You may associate Emmy Awards with television actors and programs, but the CCIR received a 1982–83 Technology and Engineering Emmy Award for its development of the Rec. 601 standard.

Digital Television Categories (DTV)

The Digital Television category, or DTV, developed from the growth of the digital video domain and encompasses all digital video standards. It has two subcategories: Standard Definition Television (SDTV) and High Definition Television (HDTV). To further divide the DTV category, there is an in-between standard referred to as EDTV, or Enhanced Definition Television.

High Definition Television (HDTV)

The original HDTV standards were analog because at the time there was no digital television system in use. With the advent of digital, HDTV immediately crossed over into the digital domain. In the process, the quality of the image was vastly improved. There are several different formats within the HDTV standard. In addition, many HDTV formats were developed as various manufacturers and various countries began to develop their own broadcasting systems. The differences between HDTV formats include such elements as frame rate, scan lines, and pixel count. Additional information and specifics of the HDTV standard are discussed further in Chapter 13, High Definition Video.

Standard Definition Television (SDTV)

Standard Definition Television, or SDTV, is the digital equivalent of the original analog standards. When a CDTV signal is transferred to the digital domain, it becomes an SDTV signal within the DTV category. It is, therefore, not a high definition image. It has less picture information and a lower pixel and line count than HDTV, and it typically uses the narrower 4 × 3 aspect ratio.

Enhanced Definition Television (EDTV)

Enhanced Definition Television, or EDTV, is a term applied to video with a picture quality better than SDTV, but not quite as good as HDTV. Both SDTV and EDTV have 480 lines of information, but SDTV displays the image using interlace scanning, while EDTV displays it using progressive scanning. In progressive scanning, all 480 lines of the video image are scanned in a single pass rather than in two passes as with interlace scanning, which gives the progressively scanned image better quality. Progressive scanning is discussed in more detail in Chapter 13.

Digital Television Standards

As mentioned earlier, in 1995, the ATSC created a set of standards, known as A/53, for digital broadcasting of television signals in the United States. These standards use the MPEG-2 compression method for video compression (see Chapter 15 for more information on MPEG compression). The AC-3 Dolby Digital standard (document A/52), although outside the MPEG-2 standard, has been adopted in the United States as the standard for Digital TV audio (see Chapter 16 for more information on digital audio).

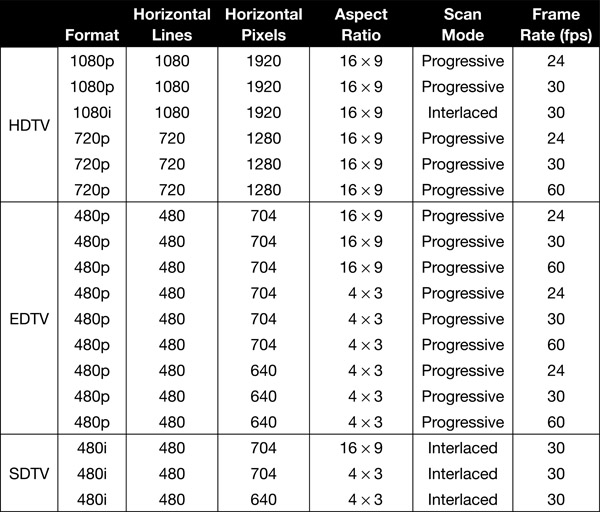

The standards created by the ATSC were adopted by the Federal Communications Commission (FCC) in the United States. However, the FCC rejected the table of standards (Table 12.2) because of pressure from the computer industry. The idea instead was to leave the choice of which standards to use to the marketplace, as long as they worked with the MPEG-2 compression scheme. In practice, manufacturers and broadcasters have all been using the standards included in the ATSC table.

Together, HDTV, SDTV, and EDTV make up 18 different ATSC picture display formats.

The Horizontal Lines column contains the number of lines in the image, while the Horizontal Pixels column contains the number of pixels across each line. In the Format column, the "i" refers to images scanned using the interlace approach, while the "p" refers to progressively scanned images. The 24 fps entries also refer to 23.976 frames per second, and the 30 fps entries also refer to 29.97 frames per second.

Digital Television Transmission

Digital video requires a different form of transmission from analog. In analog, the video was broadcast using AM for the images and FM for the audio. But digital contains all the elements in one data stream. Since digital signals can be compressed to use less spectrum space than analog, several digital data streams can be transmitted in the same space that contained just the one analog signal. To take advantage of this capability, the ATSC developed new standards for transmitting digital television.

NOTE After nearly 70 years, full-power over-the-air NTSC transmissions in the United States ended on June 12, 2009, and several other countries using NTSC followed over the next few years.

While the U.S. government mandated a change to digital television, it did not require that all television broadcasting be High Definition, only that it be digital. Because High Definition (HD) is not mandatory, and because compressed digital signals can require considerably less spectrum space than analog signals, broadcasters can transmit a High Definition signal along with one or more Standard Definition (SD) or Enhanced Definition (ED) signals in the remaining spectrum space. This is called multicasting.
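Multicasting amounts to a bit budget: the combined bitrates of all services must fit within one channel. The sketch below checks that budget. Two things in it are assumptions rather than details from the text. The first is the channel payload of about 19.39 Mbit/s, the commonly cited figure for one 6 MHz 8VSB channel. The second is the per-service bitrates, which are purely hypothetical. Real encoders vary their bitrates with picture content.

```python
from typing import Dict

ATSC_PAYLOAD_MBPS = 19.39  # approximate usable payload of one 6 MHz 8VSB channel

def fits(services: Dict[str, float], capacity: float = ATSC_PAYLOAD_MBPS) -> bool:
    """Return True if the combined bitrates of all services fit in one channel."""
    return sum(services.values()) <= capacity

# Hypothetical lineup: one HD program plus two SD subchannels, in Mbit/s.
lineup = {"HD main": 12.0, "SD subchannel A": 3.0, "SD subchannel B": 3.0}
total = sum(lineup.values())
print(f"{total:.2f} of {ATSC_PAYLOAD_MBPS} Mbit/s used, fits: {fits(lineup)}")
```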

The A/53 standard for transmitting digital television signals is called 8VSB, which stands for 8-level vestigial sideband. A vestige is a small remaining trace of something that was once larger. 8VSB uses the amplitude modulation (AM) method of modulating a carrier frequency, which produces two mirror-image sidebands. Because one sideband is redundant, most of it is filtered off as unnecessary, and only a vestige of it remains. The A/53 standard using 8VSB can carry any of the 18 ATSC video formats and has the capability to expand and change as needed. The 8VSB system of carrier modulation is also very useful for television transmission because it greatly conserves bandwidth, is a relatively simple method of modulation, and saves cost in receiver design. This bandwidth efficiency is also what makes multicasting possible.

The “8” in 8VSB

8VSB modulation treats digital bits somewhat differently from the standard on/off of most digital electronics. Typically, a digital bit is represented by the presence or absence of some known voltage. Five volts is typical, so a voltage close to five volts is a digital 1, and a voltage close to 0 volts is a digital 0.

In order to increase the amount of data that the broadcast signal carries, 8VSB works with groups of three bits. In binary math, three bits can be combined into eight different patterns:

000 001 010 011 100 101 110 111

8VSB then assigns each of those patterns, or symbols as they are called, a different voltage. That voltage is what gets transmitted. At the receiver, the voltage is recovered and the three bits it represents are added to the bit stream. So in the same amount of time it takes to send one bit, three are sent.
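The sketch below groups bits into 3-bit patterns and maps each pattern to one of eight levels, then reverses the process at the receiver. It rests on two simplifying assumptions. First, it uses the relative amplitudes -7, -5, -3, -1, +1, +3, +5, +7 for the eight levels. Second, it uses a plain binary mapping. The real ATSC transmitter also trellis-codes the bits before mapping them, and this sketch ignores that step. Function names are hypothetical.

```python
from typing import List

LEVELS = [-7, -5, -3, -1, 1, 3, 5, 7]  # relative amplitudes of the eight levels

def bits_to_symbols(bits: str) -> List[int]:
    """Group a bit string into 3-bit patterns and map each pattern to one level."""
    if len(bits) % 3:
        raise ValueError("bit count must be a multiple of 3")
    return [LEVELS[int(bits[i:i + 3], 2)] for i in range(0, len(bits), 3)]

def symbols_to_bits(symbols: List[int]) -> str:
    """Recover the 3-bit pattern that each received level stands for."""
    return "".join(format(LEVELS.index(s), "03b") for s in symbols)

data = "000011101111"       # 12 bits
tx = bits_to_symbols(data)  # sent as only 4 symbols
print(tx)  # [-7, -1, 3, 7]
assert symbols_to_bits(tx) == data
```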

However, like everything else, this comes with a price, or tradeoff. Because noise is always present in electronics, it adds to or subtracts from the signal voltage, which can momentarily make it hard to tell exactly which voltage was sent. To overcome these errors, error-correction schemes must be employed. For 8VSB, this error correction consumes about 40% of the transmitted data.
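The sketch below shows why noise causes errors. The receiver must decide which of the eight levels a noisy voltage came from, and it picks the nearest one. When noise pushes the voltage past the halfway point to a neighboring level, the decision is wrong. The noise level in the simulation is a hypothetical value. The second part of the sketch checks the "about 40%" figure. It uses commonly cited ATSC rates that are not given in the text: roughly 10.76 million symbols per second and a usable payload of roughly 19.39 Mbit/s. That overhead is mostly error-correction coding, with a small share used for synchronization.

```python
import random

LEVELS = [-7, -5, -3, -1, 1, 3, 5, 7]

def slice_level(voltage: float) -> int:
    """Decide which of the eight levels a noisy received voltage most likely was."""
    return min(LEVELS, key=lambda level: abs(level - voltage))

random.seed(1)
sent = [random.choice(LEVELS) for _ in range(10_000)]
received = [s + random.gauss(0.0, 0.6) for s in sent]  # hypothetical noise level
errors = sum(slice_level(r) != s for r, s in zip(received, sent))
print(f"symbol errors before correction: {errors} of {len(sent)}")

# Overhead check using commonly cited ATSC rates (assumptions, not from the text).
symbol_rate = 10.76e6       # symbols per second
raw_rate = symbol_rate * 3  # 3 bits per symbol, about 32.3 Mbit/s
payload = 19.39e6           # usable payload, bits per second
print(f"overhead: {1 - payload / raw_rate:.0%}")  # overhead: 40%
```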

For transmission, the MPEG-2 program data, the audio data, the ancillary or additional data such as instructions about what is contained in the data stream, closed captioning, and synchronizing information are combined or multiplexed into the data stream. This data stream is what is sent for transmission.
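Multiplexing means interleaving packets from several streams into one stream. Each packet is tagged so that the receiver can sort the packets back out. The sketch below is a toy illustration under stated assumptions. The stream labels and the simple round-robin ordering are hypothetical. The actual MPEG-2 transport stream uses fixed-size packets tagged with numeric packet identifiers and schedules them by bitrate.

```python
from itertools import zip_longest
from typing import Dict, List, Tuple

Packet = Tuple[str, bytes]  # (stream label, payload); labels are hypothetical

def multiplex(streams: Dict[str, List[bytes]]) -> List[Packet]:
    """Interleave packets from several streams into one tagged stream (round-robin)."""
    muxed: List[Packet] = []
    for group in zip_longest(*streams.values()):  # one packet from each stream per pass
        for label, chunk in zip(streams.keys(), group):
            if chunk is not None:
                muxed.append((label, chunk))
    return muxed

def demultiplex(muxed: List[Packet]) -> Dict[str, List[bytes]]:
    """Sort the tagged packets back into their original streams at the receiver."""
    out: Dict[str, List[bytes]] = {}
    for label, chunk in muxed:
        out.setdefault(label, []).append(chunk)
    return out

streams = {
    "video": [b"V1", b"V2", b"V3"],
    "audio": [b"A1", b"A2"],
    "captions": [b"C1"],
}
muxed = multiplex(streams)
print([label for label, _ in muxed])
# ['video', 'audio', 'captions', 'video', 'audio', 'video']
assert demultiplex(muxed) == streams
```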

Digital cable, while adhering to the adopted ATSC standards, generally does not use 8VSB for signal modulation. Instead cable operators use a more complex form of modulation called quadrature amplitude modulation (QAM) that is capable of 64 or 256 levels rather than the 8 levels of 8VSB.

Satellite broadcasting uses another form of modulation called quadrature phase-shift keying (QPSK). Again, the standards used for satellite broadcasting adhere to the ATSC table but satellite broadcasters use QPSK for modulating the carrier signal.
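The 8VSB principle of more distinct states per symbol carrying more bits per symbol also applies to cable and satellite modulation. The number of bits is the base-2 logarithm of the number of states. This sketch lists the schemes mentioned above. QPSK's four phase states are standard for that scheme, but the text does not state them. A general tradeoff applies: packing in more states places them closer together, so the channel must be cleaner for the receiver to tell them apart.

```python
import math

# Number of distinct states (levels or constellation points) per symbol.
SCHEMES = {"8VSB": 8, "64-QAM": 64, "256-QAM": 256, "QPSK": 4}

for name, states in SCHEMES.items():
    bits = int(math.log2(states))
    print(f"{name}: {states} states -> {bits} bits per symbol")
# 8VSB: 3, 64-QAM: 6, 256-QAM: 8, QPSK: 2
```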

Moving Forward

Video technology will continue to evolve, and “television” viewers will want to watch their programs in a variety of ways on a growing range of media sources and delivery platforms, including, of course, the Internet. A new DTV system that incorporates these elements must be developed to keep pace with the industry’s technological growth and with consumers’ expectations. The ATSC is already making plans for a 3.0 system that will adapt to future innovations. Moving forward, this new system must address some of the key concepts of the emerging technology by being scalable, interoperable, and adaptable.