Chapter 5

Program Design and Analysis

Chapter Points

• Some useful components for embedded software.

• Models of programs, such as data flow and control flow graphs.

• An introduction to compilation methods.

• Analyzing and optimizing programs for performance, size, and power consumption.

5.1 Introduction

In this chapter we study in detail the process of creating programs for embedded processors. The creation of embedded programs is at the heart of embedded system design. If you are reading this book, you almost certainly have an understanding of programming, but designing and implementing embedded programs is different and more challenging than writing typical workstation or PC programs. Embedded code must not only provide rich functionality, it must also often run at a required rate to meet system deadlines, fit into the allowed amount of memory, and meet power consumption requirements. Designing code that simultaneously meets multiple design constraints is a considerable challenge, but luckily there are techniques and tools that we can use to help us through the design process. Making sure that the program works is also a challenge, but once again methods and tools come to our aid.

Throughout the discussion we concentrate on high-level programming languages, specifically C. High-level languages were once shunned as too inefficient for embedded microcontrollers, but better compilers, more compiler-friendly architectures, and faster processors and memory have made high-level language programs common. Some sections of a program may still need to be written in assembly language if the compiler doesn’t give sufficiently good results, but even when coding in assembly language it is often helpful to think about the program’s functionality in high-level form. Many of the analysis and optimization techniques that we study in this chapter are equally applicable to programs written in assembly language.

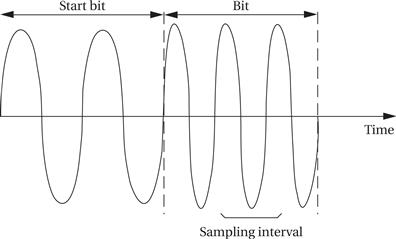

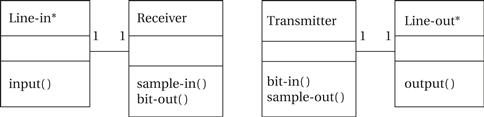

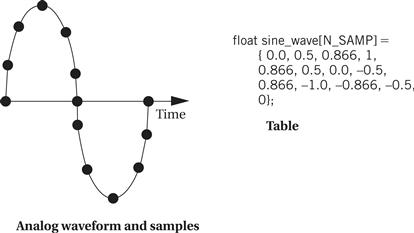

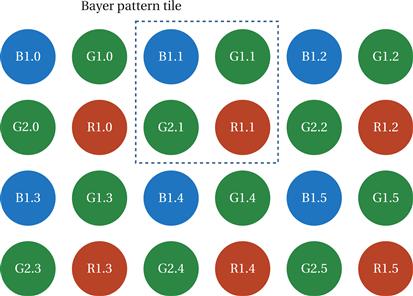

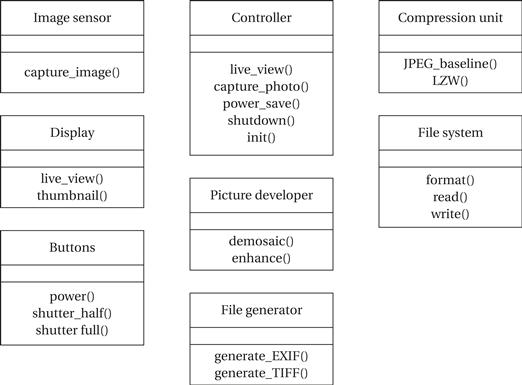

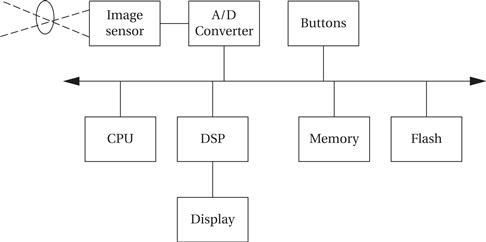

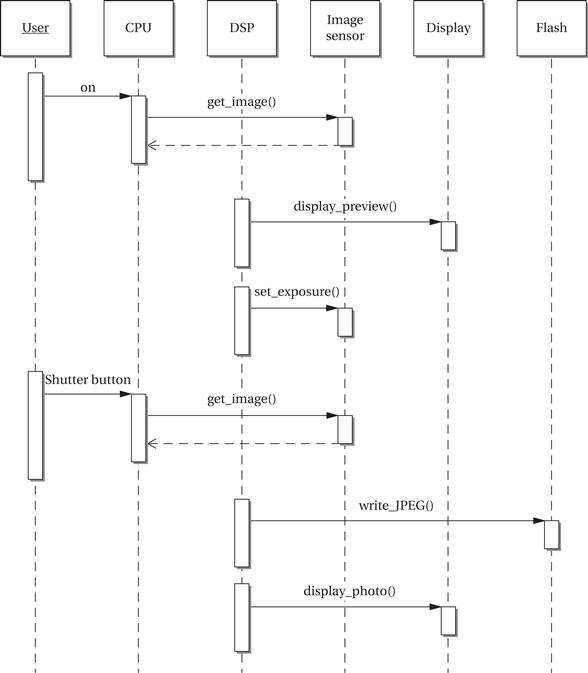

The next section talks about some software components that are commonly used in embedded software. Section 5.3 introduces the control/data flow graph as a model for high-level language programs (which can also be applied to programs written originally in assembly language). Section 5.4 reviews the assembly and linking process while Section 5.5 introduces some compilation techniques. Section 5.6 introduces methods for analyzing the performance of programs. We talk about optimization techniques specific to embedded computing in the next three sections: performance in Section 5.7, energy consumption in Section 5.8, and size in Section 5.9. In Section 5.10, we discuss techniques for ensuring that the programs you write are correct. We close with two design examples: a software modem in Section 5.11 and a digital still camera in Section 5.12.

5.2 Components for Embedded Programs

In this section, we consider code for three structures or components that are commonly used in embedded software: the state machine, the circular buffer, and the queue. State machines are well suited to reactive systems such as user interfaces; circular buffers and queues are useful in digital signal processing.

5.2.1 State Machines

State machine style

When inputs appear intermittently rather than as periodic samples, it is often convenient to think of the system as reacting to those inputs. The reaction of most systems can be characterized in terms of the input received and the current state of the system. This leads naturally to a finite-state machine style of describing the reactive system’s behavior. Moreover, if the behavior is specified in that way, it is natural to write the program implementing that behavior in a state machine style. The state machine style of programming is also an efficient implementation of such computations. Finite-state machines are usually first encountered in the context of hardware design.

Programming Example 5.1 shows how to write a finite-state machine in a high-level programming language.

Programming Example 5.1 A State Machine in C

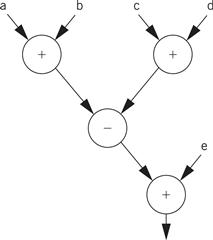

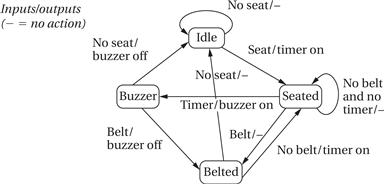

The behavior we want to implement is a simple seat belt controller [Chi94]. The controller’s job is to turn on a buzzer if a person sits in a seat and does not fasten the seat belt within a fixed amount of time. This system has three inputs and one output. The inputs are a sensor for the seat to know when a person has sat down, a seat belt sensor that tells when the belt is fastened, and a timer that goes off when the required time interval has elapsed. The output is the buzzer. The state diagram accompanying this example describes the seat belt controller’s behavior.

The idle state is in force when there is no person in the seat. When the person sits down, the machine goes into the seated state and turns on the timer. If the timer goes off before the seat belt is fastened, the machine goes into the buzzer state. If the seat belt goes on first, it enters the belted state. When the person leaves the seat, the machine goes back to idle.

To write this behavior in C, we will assume that we have loaded the current values of all three inputs (seat, belt, timer) into variables and will similarly hold the outputs in variables temporarily (timer_on, buzzer_on). We will use a variable named state to hold the current state of the machine and a switch statement to determine what action to take in each state. Here is the code:

    #define IDLE 0
    #define SEATED 1
    #define BELTED 2
    #define BUZZER 3

    switch (state) { /* check the current state */
    case IDLE:
        if (seat) { state = SEATED; timer_on = TRUE; }
        /* default case is self-loop */
        break;
    case SEATED:
        if (belt) state = BELTED; /* won't hear the buzzer */
        else if (timer) { state = BUZZER; buzzer_on = TRUE; } /* didn't put on belt in time */
        /* default case is self-loop */
        break;
    case BELTED:
        if (!seat) state = IDLE; /* person left */
        else if (!belt) state = SEATED; /* person still in seat */
        break;
    case BUZZER:
        if (belt) { state = BELTED; buzzer_on = FALSE; } /* belt is on: turn off buzzer */
        else if (!seat) { state = IDLE; buzzer_on = FALSE; } /* no one in seat: turn off buzzer */
        break;
    }

This code takes advantage of the fact that the state will remain the same unless explicitly changed; this makes self-loops back to the same state easy to implement. This state machine may be executed forever in a while(TRUE) loop or periodically called by some other code. In either case, the code must be executed regularly so that it can check on the current value of the inputs and, if necessary, go into a new state.
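To make the switch statement executable on its own, here is a minimal sketch that wraps it in a step function. The names `sb_step`, `enum sb_state`, and `struct sb_outputs` are our own, not from the text, and we assume (as an illustrative choice) that `timer_on` is a one-step request pulse while `buzzer_on` holds its level until the machine leaves the buzzer state. The transitions follow the state diagram as described above.

```c
#include <stdbool.h>

/* States of the seat belt controller. */
enum sb_state { IDLE, SEATED, BELTED, BUZZER };

/* Outputs of the controller (hypothetical grouping for this sketch). */
struct sb_outputs {
    bool timer_on;   /* one-step request to start the seat timer */
    bool buzzer_on;  /* buzzer level, held between steps */
};

/* One evaluation of the state machine: read the current inputs,
   update *state, and update the outputs. Leaving *state unchanged
   implements the self-loops. */
void sb_step(enum sb_state *state, bool seat, bool belt, bool timer,
             struct sb_outputs *out)
{
    out->timer_on = false;  /* the timer request lasts one step */
    switch (*state) {
    case IDLE:
        if (seat) { *state = SEATED; out->timer_on = true; }
        break;
    case SEATED:
        if (belt) *state = BELTED;
        else if (timer) { *state = BUZZER; out->buzzer_on = true; }
        break;
    case BELTED:
        if (!seat) *state = IDLE;
        else if (!belt) *state = SEATED;
        break;
    case BUZZER:
        if (belt) { *state = BELTED; out->buzzer_on = false; }
        else if (!seat) { *state = IDLE; out->buzzer_on = false; }
        break;
    }
}
```

Calling `sb_step()` once per polling period, from a `while(TRUE)` loop or from a periodic timer routine, provides the regular execution the text requires.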

5.2.2 Circular Buffers and Stream-Oriented Programming

Data stream style

The data stream style makes sense for data that comes in regularly and must be processed on the fly. The FIR filter of Application Example 2.1 is a classic example of stream-oriented processing. For each sample, the filter must emit one output that depends on the values of the last n inputs. In a typical workstation application, we would process the samples over a given interval by reading them all in from a file and then computing the results all at once in a batch process. In an embedded system we must not only emit outputs in real time, but we must also do so using a minimum amount of memory.

Circular buffer

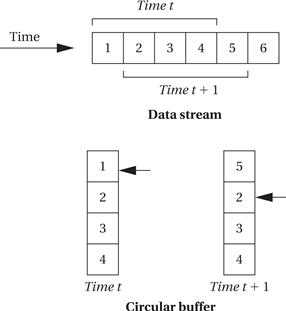

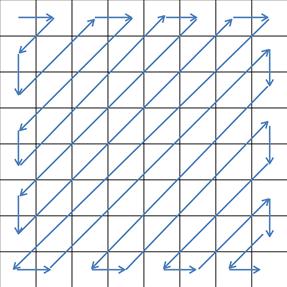

The circular buffer is a data structure that lets us handle streaming data in an efficient way. Figure 5.1 illustrates how a circular buffer stores a subset of the data stream. At each point in time, the algorithm needs a subset of the data stream that forms a window into the stream. The window slides with time as we throw out old values no longer needed and add new values. Because the size of the window does not change, we can use a fixed-size buffer to hold the current data. To avoid constantly copying data within the buffer, we move the head of the buffer as time advances. A pointer into the buffer marks the location at which the next sample will be placed; every time we add a sample, we automatically overwrite the oldest sample, which is the one that needs to be thrown out. When the pointer gets to the end of the buffer, it wraps around to the top.

Figure 5.1 A circular buffer.

Instruction set support

Many DSPs provide addressing modes to support circular buffers. For example, the C55x [Tex04] provides five circular buffer start address registers (their names start with BSA). These registers allow circular buffers to be placed without alignment constraints.

In the absence of specialized instructions, we can write our own C code for a circular buffer. This code also helps us understand the operation of the buffer. Programming Example 5.2 provides an efficient implementation of a circular buffer.

High-level language implementation

Programming Example 5.2 A Circular Buffer in C

Once we build a circular buffer, we can use it in a variety of ways. We will use an array as the buffer:

    #define CMAX 6 /* buffer size: number of filter taps */
    int circ[CMAX]; /* circular buffer */
    int pos; /* position of current sample */

The variable pos holds the position of the current sample. As we add new values to the buffer this variable moves.

Here is the function that adds a new value to the buffer:

    void circ_update(int xnew) {
        /* add the new sample and push off the oldest one */
        /* compute the new head value with wraparound; the pos pointer moves from 0 to CMAX-1 */
        pos = ((pos == CMAX-1) ? 0 : (pos+1));
        /* insert the new value at the new head */
        circ[pos] = xnew;
    }

The assignment to pos takes care of wraparound: when pos hits the end of the array it returns to zero. We then put the new value into the buffer at the new position, overwriting the oldest value, which was stored there. Because the head advances toward higher indices, stepping from pos toward lower indices (wrapping from 0 back to CMAX-1) takes us through successively older values.

We can now write an initialization function. It sets the buffer values to zero. More important, it sets pos to the initial value. For ease of debugging, we want the first data element to go into circ[0]. To do this, we set pos to the end of the array so that it is set to zero before the first element is added:

    void circ_init() {
        int i;
        for (i=0; i<CMAX; i++) /* set values to 0 */
            circ[i] = 0;
        pos=CMAX-1; /* start at tail so first element will be at 0 */
    }

We can also make use of a function to get the ith value of the buffer. This function has to translate the index in temporal order—zero being the newest value—to its position in the array:

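    int circ_get(int i) {
        /* get the ith value from the circular buffer (0 <= i < CMAX) */
        int ii;
        /* compute the buffer position; adding CMAX keeps the left operand of %
           non-negative, because in C a negative left operand gives a negative remainder */
        ii = (pos - i + CMAX) % CMAX;
        /* return the value */
        return circ[ii];
    }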
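In C, the result of `%` has the sign of its left operand, so `(pos - i) % CMAX` is negative whenever `i > pos`, and `circ[ii]` would then index outside the array. The following self-contained sketch of the buffer adds `CMAX` before taking the remainder, which is valid for `0 <= i < CMAX`. The `assert()` guard and the `static` storage are our additions for illustration.

```c
#include <assert.h>

#define CMAX 6              /* buffer size */

static int circ[CMAX];      /* circular buffer */
static int pos;             /* index of the newest sample */

void circ_init(void) {
    for (int i = 0; i < CMAX; i++)
        circ[i] = 0;
    pos = CMAX - 1;         /* first sample will land in circ[0] */
}

void circ_update(int xnew) {
    pos = (pos == CMAX - 1) ? 0 : pos + 1;  /* advance head with wraparound */
    circ[pos] = xnew;                       /* overwrite the oldest sample */
}

/* Return the ith most recent sample (i = 0 is the newest). */
int circ_get(int i) {
    assert(i >= 0 && i < CMAX);
    return circ[(pos - i + CMAX) % CMAX];   /* never a negative index */
}
```

After eight updates with the values 1 through 8, `pos` is 1, so `circ_get(5)` must reach back across the wraparound to `circ[2]`; the unguarded expression `(1 - 5) % 6` would yield -4.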

We are now in a position to write C code for a digital filter. To help us understand the filter algorithm, we can introduce a widely used representation for filter functions.

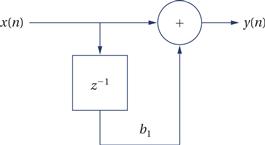

Signal flow graph

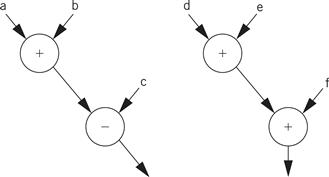

The FIR filter is only one type of digital filter. We can represent many different filtering structures using a signal flow graph as shown in Figure 5.2. The filter operates at a fixed sample rate: one input arrives and one output is generated in each sample period. The input x[n] and the output y[n] are sequences indexed by n, which corresponds to the sequence of samples. Nodes in the graph can represent either arithmetic operators or delay operators. The + node adds its two inputs and produces the output y[n]. The box labeled $z^{-1}$ is a delay operator. The z notation comes from the z transform used in digital signal processing; the −1 superscript means that the operation performs a time delay of one sample period. The edge from the delay operator to the addition operator is labeled with b1, meaning that the output of the delay operator is multiplied by b1 before it is added.

Figure 5.2 A signal flow graph.

Filters and buffering

The code to produce one FIR filter output looks like this:

    for (i=0, y=0.0; i<N; i++)
        y += x[i] * b[i];

However, the filter takes in a new sample on every sample period. The new input becomes x0, the old x0 becomes x1, the old x1 becomes x2, and so on. x0 is stored directly in the circular buffer but must be multiplied by b0 before being added to the output sum. Early digital filters were built in hardware, where we can build a shift register to perform this operation. If we used an analogous operation in software, we would move every value in the filter on every sample period. We can avoid that with a circular buffer, moving the head without moving the data elements.

The next example uses our circular buffer functions to build an FIR filter.

Programming Example 5.3 An FIR Filter in C

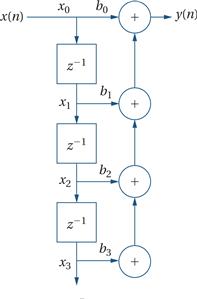

Here is a signal flow graph for an FIR filter:

The delay elements running vertically hold the input samples with the most recent sample at the top and the oldest one at the bottom. Unfortunately, the signal flow graph doesn’t explicitly label all of the values that we use as inputs to operations, so the figure also shows the values (xi) we need to operate on in our FIR loop.

When we compute the filter function, we want to match the bi’s and xi’s. We will use our circular buffer for the x’s, which change over time. We will use a standard array for the b’s, which don’t change. In order for the filter function to be able to use the same i value for both sets of data, we need to put the x data in the proper order. We can put the b data in a standard array with b0 being the first element. When we add a new x value, it becomes x0 and replaces the oldest data value in the buffer. This means that the buffer head moves from higher to lower values, not lower to higher as we might expect.


Here is the modified version of circ_update() that puts a new sample into the buffer into the desired order:

    void circ_update(int xnew) {
        /* add the new sample and push off the oldest one */
        /* compute the new head value with wraparound; the pos pointer moves from CMAX-1 down to 0 */
        pos = ((pos == 0) ? CMAX-1 : (pos-1));
        /* insert the new value at the new head */
        circ[pos] = xnew;
    }

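We also need to change circ_init() to set pos = 0 initially, so that the first sample goes into circ[CMAX-1]. We must also change circ_get(): older samples now sit at higher indices than the head, so the ith most recent value is at position (pos + i) % CMAX, and the index computation becomes ii = (pos + i) % CMAX; because pos + i is never negative, no wraparound correction is needed.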

Given these functions, the filter itself is simple. Here is our code for the FIR filter function:

    int fir(int xnew) {
        /* given a new sample value, update the buffer and compute the filter output;
           b[] holds the CMAX filter coefficients, with b[0] first */
        int i;
        int result; /* holds the filter output */
        circ_update(xnew); /* put the new value in */
        for (i=0, result=0; i<CMAX; i++) /* compute the filter function */
            result += b[i] * circ_get(i);
        return result;
    }
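Putting the FIR pieces together, the sketch below is a self-contained version with a downward-moving head, so `circ_get()` reads `(pos + i) % CMAX` to find the ith most recent sample. The tap count (4) and the coefficients {1, 2, 3, 4} are hypothetical, chosen so that the impulse response is easy to check: feeding in a single 1 followed by zeros should reproduce b[0], b[1], b[2], b[3] and then 0.

```c
#define CMAX 4                               /* number of taps (assumed) */

static const int b[CMAX] = {1, 2, 3, 4};     /* hypothetical coefficients */
static int circ[CMAX];                       /* past inputs */
static int pos;                              /* index of x0, the newest input */

void circ_init(void) {
    for (int i = 0; i < CMAX; i++)
        circ[i] = 0;
    pos = 0;                 /* first sample goes into circ[CMAX-1] */
}

/* The head moves downward: x0 sits at circ[pos], older samples above it. */
void circ_update(int xnew) {
    pos = (pos == 0) ? CMAX - 1 : pos - 1;
    circ[pos] = xnew;
}

/* Return xi, the ith most recent sample. */
int circ_get(int i) {
    return circ[(pos + i) % CMAX];
}

int fir(int xnew) {
    int result = 0;
    circ_update(xnew);                       /* new sample becomes x0 */
    for (int i = 0; i < CMAX; i++)
        result += b[i] * circ_get(i);        /* sum of bi * xi */
    return result;
}
```

Only one array element is written per sample, which is the saving over a software shift register that moves all CMAX values.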

There is only one major structure for FIR filters but several for IIR filters, depending on the application requirements. One of the important reasons for so many different IIR forms is numerical properties: depending on the filter structure and coefficients, one structure may give significantly less numerical noise than another. Numerical noise is beyond the scope of our discussion, however, so the next example concentrates on one form of IIR filter that highlights buffering issues.

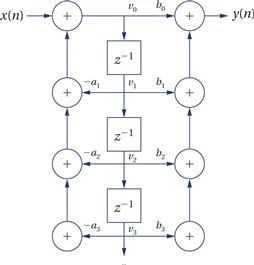

Programming Example 5.4 A Direct Form II IIR Filter Class in C

Here is what is known as the direct form II of an IIR filter:

This structure is designed to minimize the amount of buffer space required. Other forms of the IIR filter have other advantages but require more storage. We will store the vi values as in the FIR filter. In this case, v0 does not represent the input, but rather the left-hand sum. But v0 is stored before multiplication by b0 so that we can move v0 to v1 on the following sample period.

We can use the same circ_update() and circ_get() functions that we used for the FIR filter. We need two coefficient arrays, one for the a’s and one for the b’s; as with the FIR filter, we can use standard C arrays for the coefficients because they don’t change over time. Here is the IIR filter function:

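    int iir2(int xnew) {
        /* given a new sample value, update the buffer and compute the filter output;
           a[] and b[] each hold ZMAX+1 coefficients, and ZMAX must not exceed CMAX */
        int i, aside, bside, v0, result;
        for (i=0, aside=0; i<ZMAX; i++) /* feedback terms: circ_get(i) is v(i+1) */
            aside += -a[i+1] * circ_get(i);
        for (i=0, bside=0; i<ZMAX; i++) /* feedforward terms */
            bside += b[i+1] * circ_get(i);
        v0 = xnew + aside; /* the left-hand sum */
        result = b[0] * v0 + bside;
        circ_update(v0); /* store v0, not the raw input, so it becomes v1 next period */
        return result;
    }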
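A self-contained direct form II sketch follows. It assumes double-precision arithmetic, ZMAX = 2, and hypothetical coefficients for $H(z) = (1 + z^{-1})/(1 - 0.5 z^{-1})$, using the same sign convention as the text (the loop adds $-a_{i+1} v_{i+1}$). It stores the left-hand sum v0 in the delay line, as the text describes. Storing xnew instead would give a different impulse response from the third sample on.

```c
#define ZMAX 2   /* number of delay elements (assumed) */

/* Hypothetical coefficients; a[0] is not used by the loop (taken as 1). */
static const double a[ZMAX + 1] = {1.0, -0.5, 0.0};
static const double b[ZMAX + 1] = {1.0,  1.0, 0.0};

static double circ[ZMAX];   /* delay line holding past v0 values */
static int pos;             /* index of the most recently stored v0 */

void circ_init(void) {
    for (int i = 0; i < ZMAX; i++)
        circ[i] = 0.0;
    pos = 0;
}

/* Downward-moving head, as in the FIR example. */
void circ_update(double vnew) {
    pos = (pos == 0) ? ZMAX - 1 : pos - 1;
    circ[pos] = vnew;
}

/* Before the update, circ_get(0) is v1, circ_get(1) is v2, and so on. */
double circ_get(int i) {
    return circ[(pos + i) % ZMAX];
}

double iir2(double xnew) {
    double aside = 0.0, bside = 0.0, v0;
    for (int i = 0; i < ZMAX; i++) {
        aside += -a[i + 1] * circ_get(i);   /* feedback terms */
        bside += b[i + 1] * circ_get(i);    /* feedforward terms */
    }
    v0 = xnew + aside;                      /* left-hand sum */
    circ_update(v0);                        /* v0 becomes v1 next period */
    return b[0] * v0 + bside;
}
```

For this transfer function the impulse response is 1, 1.5, 0.75, 0.375, ..., all exactly representable in binary floating point.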

5.2.3 Queues and Producer/Consumer Systems

Queues are also used in signal processing and event processing. Queues are used whenever data may arrive and depart at somewhat unpredictable times or when variable amounts of data may arrive. A queue is often referred to as an elastic buffer. We saw how to use elastic buffers for I/O in Chapter 3.

One way to build a queue is with a linked list. This approach allows the queue to grow to an arbitrary size. But in many applications we are unwilling to pay the price of dynamically allocating memory. Another way to design the queue is to use an array to hold all the data. Although some writers use both circular buffer and queue to mean the same thing, we use the term circular buffer to refer to a buffer that always has a fixed number of data elements while a queue may have varying numbers of elements in it.

Programming Example 5.5 gives C code for a queue that is built from an array.

Programming Example 5.5 An Array-Based Queue

The first step in designing the queue is to declare the array that we will use for the buffer:

    #define Q_SIZE 5 /* your queue size may vary */
    #define Q_MAX (Q_SIZE-1) /* this is the maximum index value into the array */
    int q[Q_SIZE]; /* the array for our queue */
    int head, tail; /* indexes for the current queue head and tail */

The variables head and tail keep track of the two ends of the queue.

Here is the initialization code for the queue:

    void queue_init() {
        /* initialize the queue data structure */
        head = 0;
        tail = 0;
    }

We initialize the head and tail to the same position. As we add a value to the tail of the queue, we will increment tail. Similarly, when we remove a value from the head, we will increment head. The value of head is always equal to the location of the first element of the queue (except for when the queue is empty). The value of tail, in contrast, points to the location in which the next queue entry will go. When we reach the end of the array, we must wrap around these values—for example, when we add a value into the last element of q, the new value of tail becomes the 0th entry of the array.

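We need to check for two error conditions: removing from an empty queue and adding to a full queue. In the first case, we know the queue is empty if head == tail. In the second case, we know the queue is full if incrementing tail will cause it to equal head. Testing for fullness, however, is a little harder because we have to worry about wraparound. Note that this convention leaves one array element unused: an array of Q_SIZE elements holds at most Q_SIZE-1 values, because a completely full array would also have head == tail and could not be distinguished from an empty queue.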

Here is the code for adding an element to the tail of the queue, which is known as enqueueing:

    void enqueue(int val) {
        /* check for a full queue; don't overwrite data if error() returns */
        if (((tail+1) % Q_SIZE) == head) {
            error("enqueue onto full queue", tail);
            return;
        }
        /* add val to the tail of the queue */
        q[tail] = val;
        /* update the tail */
        if (tail == Q_MAX)
            tail = 0;
        else
            tail++;
    }

And here is the code for removing an element from the head of the queue, known as dequeueing:

    int dequeue() {
        int returnval; /* use this to remember the value that you will return */
        /* check for an empty queue; don't move head if error() returns */
        if (head == tail) {
            error("dequeue from empty queue", head);
            return 0; /* no valid data; the value 0 is arbitrary */
        }
        /* remove from the head of the queue */
        returnval = q[head];
        /* update head */
        if (head == Q_MAX)
            head = 0;
        else
            head++;
        /* return the value */
        return returnval;
    }
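The `error()` calls above assume a separate error-reporting routine. The following alternative sketch reports failures to the caller through return values instead, and uses `%` for wraparound in place of the `Q_MAX` comparisons. The names `queue_empty`, `queue_full`, and the `bool` signatures are our own, not from the text. It keeps the same head/tail convention, so a 5-element array holds at most 4 values.

```c
#include <stdbool.h>

#define Q_SIZE 5               /* array size; holds at most Q_SIZE-1 values */

static int q[Q_SIZE];
static int head, tail;         /* head: first element; tail: next free slot */

void queue_init(void) { head = tail = 0; }

bool queue_empty(void) { return head == tail; }
bool queue_full(void)  { return (tail + 1) % Q_SIZE == head; }

/* Returns false instead of overwriting data when the queue is full. */
bool enqueue(int val) {
    if (queue_full())
        return false;
    q[tail] = val;
    tail = (tail + 1) % Q_SIZE;
    return true;
}

/* Returns false, leaving *val untouched, when the queue is empty. */
bool dequeue(int *val) {
    if (queue_empty())
        return false;
    *val = q[head];
    head = (head + 1) % Q_SIZE;
    return true;
}
```

Returning a status lets a consumer such as p2 in the producer/consumer discussion simply stop and try again later when the queue is empty.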

Digital filters always take in the same amount of data in each time period. Many systems, even signal processing systems, don’t fit that mold. Rather, they may take in varying amounts of data over time and produce varying amounts. When several of these systems operate in a chain, the variable-rate output of one stage becomes the variable-rate input of another stage.

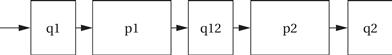

Producer/consumer

Figure 5.3 shows a block diagram of a simple producer/consumer system. p1 and p2 are the two blocks that perform algorithmic processing. The data is fed to them by queues that act as elastic buffers. The queues modify the flow of control in the system as well as store data. If, for example, p2 runs ahead of p1, it will eventually run out of data in its q12 input queue. At that point, the queue will return an empty signal to p2, and p2 should stop working until more data is available. This sort of complex control is easier to implement in a multitasking environment, as we will see in Chapter 6, but it is also possible to make effective use of queues in programs structured as nested procedures.

Figure 5.3 A producer/consumer system.

Data structures in queues

The queues in a producer/consumer system may hold either uniform-sized data elements or variable-sized data elements. In some cases, the consumer needs to know how many of a given type of data element are associated together. The queue can be structured to hold a complex data type. Alternatively, the data structure can be stored as bytes or integers in the queue with, for example, the first integer holding the number of successive data elements.
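As a sketch of the length-prefix idea, assume an `int` queue with the same head/tail convention as Programming Example 5.5. A producer can write each variable-sized record as its element count followed by the elements, and the consumer reads the count first. The function names and the 16-element size are hypothetical.

```c
#include <stdbool.h>

#define Q_SIZE 16              /* array size; at most Q_SIZE-1 ints stored */

static int q[Q_SIZE];
static int head, tail;         /* head: first element; tail: next free slot */

/* Number of ints currently stored, accounting for wraparound. */
static int q_count(void) { return (tail - head + Q_SIZE) % Q_SIZE; }

static void q_put(int v) { q[tail] = v; tail = (tail + 1) % Q_SIZE; }
static int q_take(void) { int v = q[head]; head = (head + 1) % Q_SIZE; return v; }

void rq_init(void) { head = tail = 0; }

/* Producer: store a record as its length n followed by its n values.
   The whole record is rejected if it would not fit, so a record is
   never split between calls. */
bool put_record(const int *data, int n) {
    if (q_count() + n + 1 > Q_SIZE - 1)
        return false;
    q_put(n);
    for (int i = 0; i < n; i++)
        q_put(data[i]);
    return true;
}

/* Consumer: read the length, then that many values into out[], which
   the caller must size for the largest record it expects. Returns the
   record length, or -1 if the queue is empty. */
int get_record(int *out) {
    if (head == tail)
        return -1;
    int n = q_take();
    for (int i = 0; i < n; i++)
        out[i] = q_take();
    return n;
}
```

Because `put_record()` checks for room for the whole record up front, the consumer never sees a count without the values that go with it.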

5.3 Models of Programs

In this section, we develop models for programs that are more general than source code. Why not use the source code directly? First, there are many different types of source code—assembly languages, C code, and so on—but we can use a single model to describe all of them. Once we have such a model, we can perform many useful analyses on the model more easily than we could on the source code.

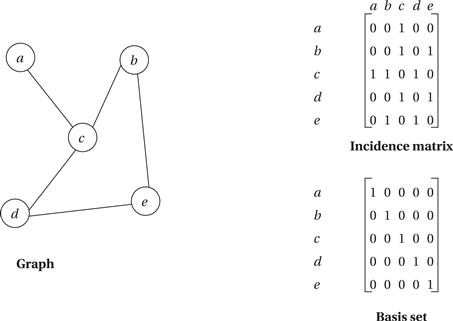

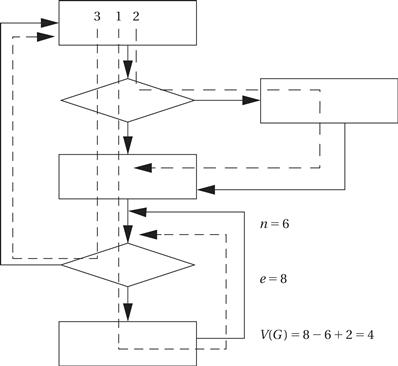

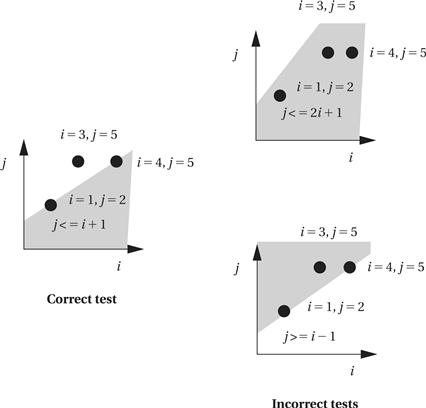

Our fundamental model for programs is the control/data flow graph (CDFG). (We can also model hardware behavior with the CDFG.) As the name implies, the CDFG has constructs that model both data operations (arithmetic and other computations) and control operations (conditionals). Part of the power of the CDFG comes from its combination of control and data constructs. To understand the CDFG, we start with pure data descriptions and then extend the model to control.

5.3.1 Data Flow Graphs

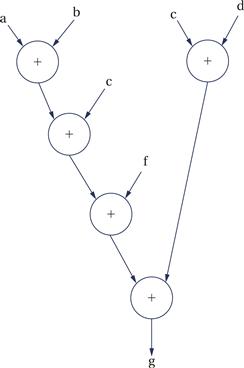

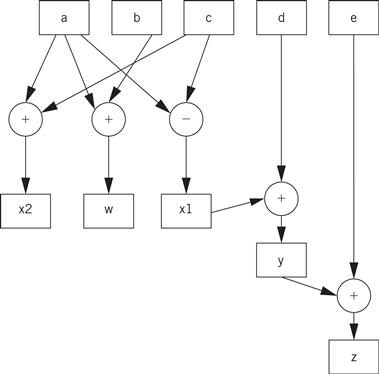

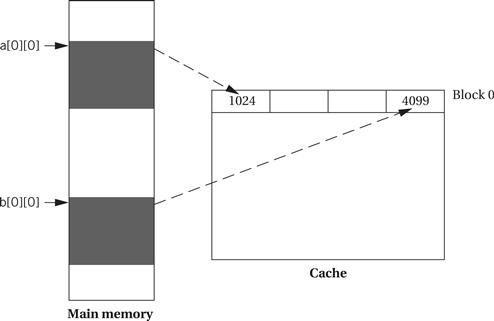

A data flow graph is a model of a program with no conditionals. In a high-level programming language, a code segment with no conditionals—more precisely, with only one entry and exit point—is known as a basic block. Figure 5.4 shows a simple basic block. As the C code is executed, we would enter this basic block at the beginning and execute all the statements.

Figure 5.4 A basic block in C.

Before we are able to draw the data flow graph for this code we need to modify it slightly. There are two assignments to the variable x: it appears twice on the left side of an assignment. We need to rewrite the code in single-assignment form, in which a variable appears only once on the left side. Because our specification is C code, we assume that the statements are executed sequentially, so that any use of a variable refers to its latest assigned value. In this case, x is not reused in this block (presumably it is used elsewhere), so we just have to eliminate the multiple assignment to x. The result is shown in Figure 5.5 where we have used the names x1 and x2 to distinguish the two assignments to x.

Figure 5.5 The basic block in single-assignment form.
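To see the renaming concretely, here is a hypothetical basic block in C (illustrative only; it is not necessarily the code of Figure 5.4) in which x is assigned twice, together with its single-assignment version. Both functions compute the same values; the struct is just a convenient way to expose every result.

```c
/* Values leaving the (hypothetical) basic block. */
struct results { int w, x, y, z; };

/* Original form: x is assigned twice. */
struct results block_original(int a, int b, int c, int d, int e) {
    struct results r;
    r.w = a + b;
    r.x = a - c;
    r.y = r.x + d;   /* reads the first value of x */
    r.x = a + c;     /* second assignment overwrites x */
    r.z = r.y + e;
    return r;
}

/* Single-assignment form: each name appears once on a left side. */
struct results block_single_assignment(int a, int b, int c, int d, int e) {
    int w = a + b;
    int x1 = a - c;
    int y = x1 + d;  /* the first x is now explicitly x1 */
    int x2 = a + c;
    int z = y + e;
    struct results r = { w, x2, y, z };  /* x2 is the x seen after the block */
    return r;
}
```

After renaming, every use names exactly one definition (y reads x1, never x2), which is what lets each value become a single node or edge in the data flow graph.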

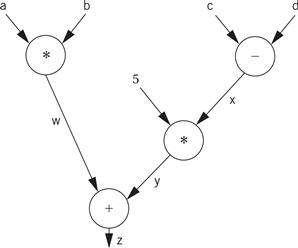

The single-assignment form is important because it allows us to identify a unique location in the code where each named location is computed. As an introduction to the data flow graph, we use two types of nodes in the graph—round nodes denote operators and square nodes represent values. The value nodes may be either inputs to the basic block, such as a and b, or variables assigned to within the block, such as w and x1. The data flow graph for our single-assignment code is shown in Figure 5.6. The single-assignment form means that the data flow graph is acyclic—if we assigned to x multiple times, then the second assignment would form a cycle in the graph including x and the operators used to compute x. Keeping the data flow graph acyclic is important in many types of analyses we want to do on the graph. (Of course, it is important to know whether the source code actually assigns to a variable multiple times, because some of those assignments may be mistakes. We consider the analysis of source code for proper use of assignments in Section 5.5.)

Figure 5.6 An extended data flow graph for our sample basic block.

The data flow graph is generally drawn in the form shown in Figure 5.7. Here, the variables are not explicitly represented by nodes. Instead, the edges are labeled with the variables they represent. As a result, a variable can be represented by more than one edge. However, the edges are directed and all the edges for a variable must come from a single source. We use this form for its simplicity and compactness.

Figure 5.7 Standard data flow graph for our sample basic block.

The data flow graph for the code makes the order in which the operations are performed in the C code much less obvious. This is one of the advantages of the data flow graph. We can use it to determine feasible reorderings of the operations, which may help us to reduce pipeline or cache conflicts. We can also use it when the exact order of operations simply doesn’t matter. The data flow graph defines a partial ordering of the operations in the basic block. We must ensure that a value is computed before it is used, but generally there are several possible orderings of evaluating expressions that satisfy this requirement.
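The partial ordering can be made concrete by treating each operation as a node and each data dependency as an edge, then computing any topological order of that graph. The following minimal sketch uses Kahn's algorithm on an adjacency matrix; `NOPS`, `dfg_schedule()`, and `order_is_valid()` are our own names.

```c
#include <stdbool.h>

#define NOPS 5   /* number of operations in the basic block (assumed) */

/* dep[i][j] is true when operation j reads a value that operation i
   produces, so i must execute before j. */

/* Kahn's algorithm: repeatedly pick an operation whose inputs are all
   available. Writes one valid order into order[] and returns how many
   operations were placed (fewer than NOPS would indicate a cycle). */
int dfg_schedule(bool dep[NOPS][NOPS], int order[NOPS]) {
    int indeg[NOPS] = {0};
    bool done[NOPS] = {false};
    int count = 0;
    for (int i = 0; i < NOPS; i++)
        for (int j = 0; j < NOPS; j++)
            if (dep[i][j]) indeg[j]++;
    while (count < NOPS) {
        int pick = -1;
        for (int j = 0; j < NOPS; j++)
            if (!done[j] && indeg[j] == 0) { pick = j; break; }
        if (pick < 0) break;          /* nothing ready: the graph has a cycle */
        done[pick] = true;
        order[count++] = pick;
        for (int j = 0; j < NOPS; j++)
            if (dep[pick][j]) indeg[j]--;   /* pick's result is now available */
    }
    return count;
}

/* True if order[] runs every producer before each of its consumers. */
bool order_is_valid(bool dep[NOPS][NOPS], const int order[NOPS]) {
    int position[NOPS];
    for (int k = 0; k < NOPS; k++) position[order[k]] = k;
    for (int i = 0; i < NOPS; i++)
        for (int j = 0; j < NOPS; j++)
            if (dep[i][j] && position[i] >= position[j]) return false;
    return true;
}
```

For a five-operation block where operation 2 reads the result of operation 1 and operation 4 reads the result of operation 2, both {0, 1, 2, 3, 4} and {3, 1, 0, 2, 4} are legal orders, while any order that places operation 2 before operation 1 is not.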

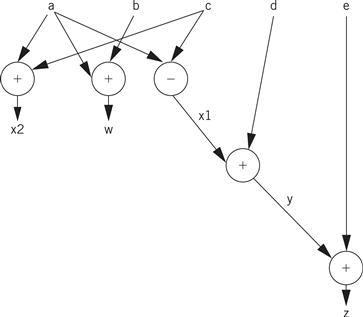

5.3.2 Control/Data Flow Graphs

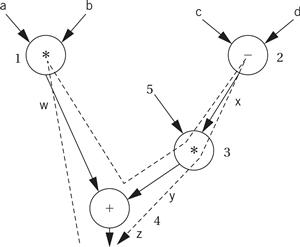

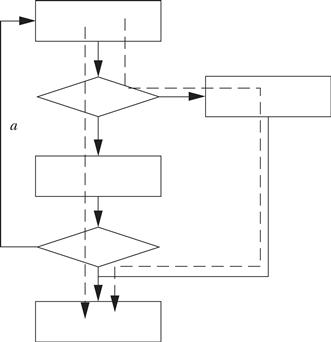

A CDFG uses a data flow graph as an element, adding constructs to describe control. In a basic CDFG, we have two types of nodes: decision nodes and data flow nodes. A data flow node encapsulates a complete data flow graph to represent a basic block. We can use one type of decision node to describe all the types of control in a sequential program. (The jump/branch is, after all, the way we implement all those high-level control constructs.)

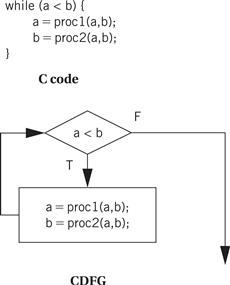

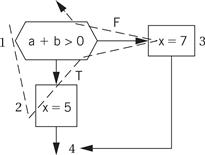

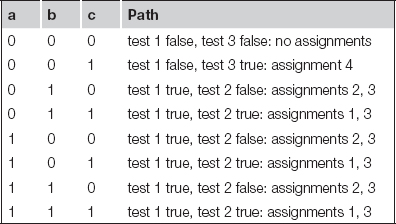

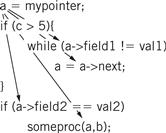

Figure 5.8 shows a bit of C code with control constructs and the CDFG constructed from it. The rectangular nodes in the graph represent the basic blocks. The basic blocks in the C code have been represented by function calls for simplicity. The diamond-shaped nodes represent the conditionals. The node’s condition is given by the label, and the edges are labeled with the possible outcomes of evaluating the condition.

Figure 5.8 C code and its CDFG.

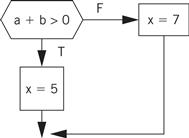

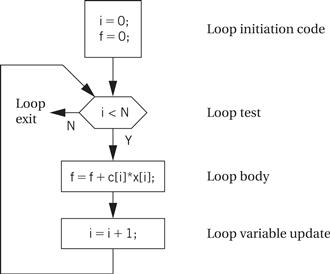

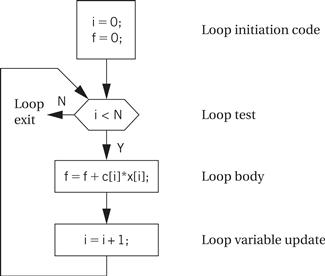

Building a CDFG for a while loop is straightforward, as shown in Figure 5.9. The while loop consists of both a test and a loop body, each of which we know how to represent in a CDFG. We can represent for loops by remembering that, in C, a for loop is defined in terms of a while loop. As long as the loop body contains no continue statement (which, in a for loop, still executes the increment expression), this for loop

    for (i = 0; i < N; i++) {
        loop_body();
    }

is equivalent to

    i = 0;
    while (i < N) {
        loop_body();
        i++;
    }

Figure 5.9 A while loop and its CDFG.

Hierarchical representation

For a complete CDFG model, we can use a data flow graph to model each data flow node. Thus, the CDFG is a hierarchical representation: a data flow node in the CDFG can be expanded to reveal a complete data flow graph.

An execution model for a CDFG is very much like the execution of the program it represents. The CDFG does not require explicit declaration of variables but we assume that the implementation has sufficient memory for all the variables. We can define a state variable that represents a program counter in a CPU. (When studying a drawing of a CDFG, a finger works well for keeping track of the program counter state.) As we execute the program, we either execute the data flow node or compute the decision in the decision node and follow the appropriate edge, depending on the type of node the program counter points to. Even though the data flow nodes may specify only a partial ordering on the data flow computations, the CDFG is a sequential representation of the program. There is only one program counter in our execution model of the CDFG, and operations are not executed in parallel.
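The execution model can be sketched as a small interpreter, assuming a hypothetical node encoding: each node is a data flow node (a function standing in for its basic block), a decision node (a predicate and two successors), or an end marker. A single integer `pc` plays the role of the program counter, so nodes run strictly one at a time. The example graph `loop_graph` encodes the while form of `for (i = 0; i < N; i++) sum += i;` with the increment folded into the body's basic block.

```c
#include <stdbool.h>
#include <stddef.h>

enum node_kind { DATA_NODE, DECISION_NODE, END_NODE };

struct cdfg_node {
    enum node_kind kind;
    void (*block)(int *vars);        /* DATA_NODE: the basic block to run */
    bool (*cond)(const int *vars);   /* DECISION_NODE: the condition */
    int next;                        /* DATA_NODE: successor */
    int if_true, if_false;           /* DECISION_NODE: successors */
};

void cdfg_run(const struct cdfg_node *g, int start, int *vars) {
    int pc = start;                  /* the single program counter */
    while (g[pc].kind != END_NODE) {
        if (g[pc].kind == DATA_NODE) {
            g[pc].block(vars);       /* execute the whole basic block */
            pc = g[pc].next;
        } else {
            pc = g[pc].cond(vars) ? g[pc].if_true : g[pc].if_false;
        }
    }
}

/* vars[0] is i, vars[1] is sum, vars[2] is N. */
static void init_i(int *v)          { v[0] = 0; }
static bool i_less_n(const int *v)  { return v[0] < v[2]; }
static void body(int *v)            { v[1] += v[0]; v[0]++; }

static const struct cdfg_node loop_graph[] = {
    /* 0 */ { DATA_NODE,     init_i, NULL,     1, 0, 0 },
    /* 1 */ { DECISION_NODE, NULL,   i_less_n, 0, 2, 3 },
    /* 2 */ { DATA_NODE,     body,   NULL,     1, 0, 0 },
    /* 3 */ { END_NODE,      NULL,   NULL,     0, 0, 0 },
};
```

The back edge from node 2 to node 1 is the loop; with N = 5 the interpreter visits the decision node six times and leaves sum equal to 0 + 1 + 2 + 3 + 4.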

The CDFG is not necessarily tied to high-level language control structures. We can also build a CDFG for an assembly language program. A jump instruction corresponds to a nonlocal edge in the CDFG. Some architectures, such as ARM and many VLIW processors, support predicated execution of instructions, which may be represented by special constructs in the CDFG.

5.4 Assembly, Linking, and Loading

Assembly and linking are the last steps in the compilation process: they turn a list of instructions into an image of the program’s bits in memory. Loading actually puts the program in memory so that it can be executed. In this section, we survey the basic techniques required for assembly and linking to help us understand the complete compilation and loading process.

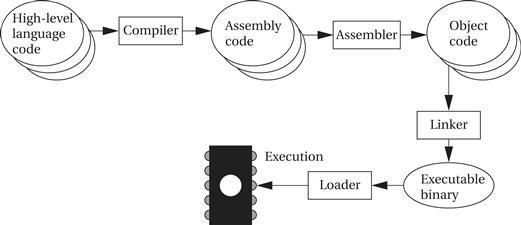

Program generation work flow

Figure 5.10 highlights the role of assemblers and linkers in the compilation process. This process is often hidden from us by compilation commands that do everything required to generate an executable program. As the figure shows, most compilers do not directly generate machine code, but instead create the instruction-level program in the form of human-readable assembly language. Generating assembly language rather than binary instructions frees the compiler writer from details extraneous to the compilation process, which include the instruction format as well as the exact addresses of instructions and data. The assembler’s job is to translate symbolic assembly language statements into bit-level representations of instructions known as object code. The assembler takes care of instruction formats and does part of the job of translating labels into addresses. However, because the program may be built from many files, the final steps in determining the addresses of instructions and data are performed by the linker, which produces an executable binary file. That file may not necessarily be located in the CPU’s memory, however, unless the linker happens to create the executable directly in RAM. The program that brings the program into memory for execution is called a loader.

Figure 5.10 Program generation from compilation through loading.

Absolute and relative addresses

The simplest form of the assembler assumes that the starting address of the assembly language program has been specified by the programmer. The addresses in such a program are known as absolute addresses. However, in many cases, particularly when we are creating an executable out of several component files, we do not want to specify the starting addresses for all the modules before assembly; if we did, we would have to determine before assembly not only the length of each program in memory but also the order in which they would be linked into the program. Most assemblers therefore allow us to use relative addresses by specifying at the start of the file that the origin of the assembly language module is to be computed later. Addresses within the module are then computed relative to the start of the module. The linker is then responsible for translating relative addresses into absolute addresses.
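As a simplified sketch of what a linker does with module-relative addresses, assume that each (hypothetical) object module lists which of its 4-byte words hold addresses relative to the module start. The linker places the modules one after another and adds each module's base address to those words. Real object formats carry richer relocation records than this; the structure and function names here are our own.

```c
#include <stdint.h>

/* Hypothetical object module: code words plus the indices of the words
   that contain module-relative addresses needing relocation. */
struct module {
    uint32_t *words;
    int nwords;
    const int *reloc;   /* indices into words[] holding relative addresses */
    int nreloc;
};

/* Lay out modules consecutively starting at load_addr (byte addresses,
   4-byte words) and convert their relative addresses to absolute ones
   by adding each module's base. Returns the first address past the
   last module. */
uint32_t link_modules(struct module *mods, int nmods, uint32_t load_addr) {
    uint32_t base = load_addr;
    for (int m = 0; m < nmods; m++) {
        for (int r = 0; r < mods[m].nreloc; r++)
            mods[m].words[mods[m].reloc[r]] += base;   /* relative -> absolute */
        base += 4u * (uint32_t)mods[m].nwords;         /* next module starts here */
    }
    return base;
}
```

Note that the order of the modules in `mods[]` decides every base address, which is exactly the information the assembler does not have when it processes one file at a time.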

5.4.1 Assemblers

When translating assembly code into object code, the assembler must translate opcodes and format the bits in each instruction, and translate labels into addresses. In this section, we review the translation of assembly language into binary.

Labels make the assembly process more complex, but they are the most important abstraction provided by the assembler. Labels let the programmer (a human programmer or a compiler generating assembly code) avoid worrying about the locations of instructions and data. Label processing requires making two passes through the assembly source code:

1. The first pass scans the code to determine the address of each label.

2. The second pass assembles the instructions using the label values computed in the first pass.

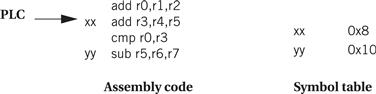

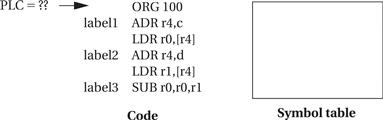

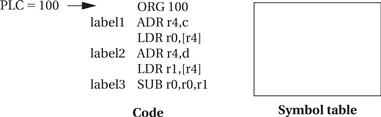

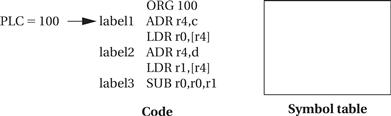

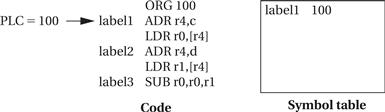

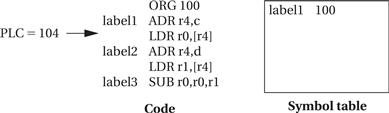

Symbol table

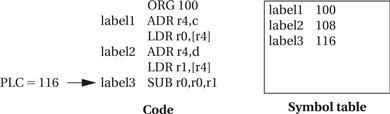

As shown in Figure 5.11, the name of each symbol and its address is stored in a symbol table that is built during the first pass. The symbol table is built by scanning from the first instruction to the last. (For the moment, we assume that we know the address of the first instruction in the program.) During scanning, the current location in memory is kept in a program location counter (PLC). Despite the similarity in name to a program counter, the PLC is not used to execute the program, only to assign memory locations to labels. In particular, the PLC makes exactly one pass through the program, whereas the program counter makes many passes over code in a loop. Thus, at the start of the first pass, the PLC is set to the program’s starting address and the assembler looks at the first line. After examining the line, the assembler updates the PLC to the next location (because ARM instructions are four bytes long, the PLC would be incremented by four) and looks at the next instruction. If the instruction begins with a label, a new entry is made in the symbol table, which includes the label name and its value. The value of the label is equal to the current value of the PLC. At the end of the first pass, the assembler rewinds to the beginning of the assembly language file to make the second pass. During the second pass, when a label name is found, the label is looked up in the symbol table and its value substituted into the appropriate place in the instruction.

Figure 5.11 Symbol table processing during assembly.

But how do we know the starting value of the PLC? The simplest case is absolute addressing. In this case, one of the first statements in the assembly language program is a pseudo-op that specifies the origin of the program, that is, the location of the first address in the program. A common name for this pseudo-op (e.g., the one used for the ARM) is the ORG statement:

ORG 2000

which puts the start of the program at location 2000. This pseudo-op accomplishes this by setting the PLC’s value to its argument’s value, 2000 in this case. Assemblers generally allow a program to have many ORG statements in case instructions or data must be spread around various spots in memory.

Example 5.1 illustrates the use of the PLC in generating the symbol table.

Example 5.1 Generating a Symbol Table

Let’s use the following simple example of ARM assembly code:

ORG 100

label1 ADR r4,c

LDR r0,[r4]

label2 ADR r4,d

LDR r1,[r4]

label3 SUB r0,r0,r1

The initial ORG statement tells us the starting address of the program. To begin, let’s initialize the symbol table to an empty state and put the PLC at the initial ORG statement.

The PLC value shown is at the beginning of this step, before we have processed the ORG statement. The ORG tells us to set the PLC value to 100.

To process the next statement, we move the PLC to point to the next statement. But because the last statement was a pseudo-op that generates no memory values, the PLC value remains at 100.

Because there is a label in this statement, we add it to the symbol table, taking its value from the current PLC value.

To process the next statement, we advance the PLC to point to the next line of the program and increment its value by the length in memory of the last line, namely, 4.

We continue this process as we scan the program until we reach the end, at which point the state of the PLC and symbol table is as shown below.

Assemblers allow labels to be added to the symbol table without occupying space in the program memory. A typical name for this pseudo-op is EQU, for equate. For example, in the code

ADD r0,r1,r2

FOO EQU 5

BAZ SUB r3,r4,#FOO

the EQU pseudo-op adds a label named FOO with the value 5 to the symbol table. The value of the BAZ label is the same as if the EQU pseudo-op were not present, because EQU does not advance the PLC. The new label is used in the subsequent SUB instruction as the name for a constant. EQUs can be used to define symbolic values to help make the assembly code more structured.

ARM ADR pseudo-op

The ARM assembler supports one pseudo-op that is particular to the ARM instruction set. In other architectures, an address would be loaded into a register (e.g., for an indirect access) by reading it from a memory location. ARM does not have an instruction that can load an effective address, so the assembler supplies the ADR pseudo-op to create the address in the register. It does so by using ADD or SUB instructions to generate the address. The address to be loaded can be register relative, program relative, or numeric, but it must assemble to a single instruction. More complicated address calculations must be explicitly programmed.

Object code formats

The assembler produces an object file that describes the instructions and data in binary format. A commonly used object file format, originally developed for Unix but now used in other environments as well, is known as COFF (common object file format). The object file must describe the instructions, data, and any addressing information and also usually carries along the symbol table for later use in debugging.

Generating relative code rather than absolute code introduces some new challenges to the assembly language process. Rather than using an ORG statement to provide the starting address, the assembly code uses a pseudo-op to indicate that the code is in fact relocatable. (Relative code is the default for the ARM assembler.) Similarly, we must mark the output object file as being relative code. We can initialize the PLC to 0 to denote that addresses are relative to the start of the file. However, when we generate code that makes use of those labels, we must be careful, because we do not yet know the actual value that must be put into the bits. We must instead generate relocatable code. We use extra bits in the object file format to mark the relevant fields as relocatable and then insert the label’s relative value into the field. The linker must therefore modify the generated code: when it finds a field marked as relative, it uses the addresses that it has generated to replace the relative value with a correct, absolute value for the address. To understand the details of turning relocatable code into executable code, we must understand the linking process described in the next section.

5.4.2 Linking

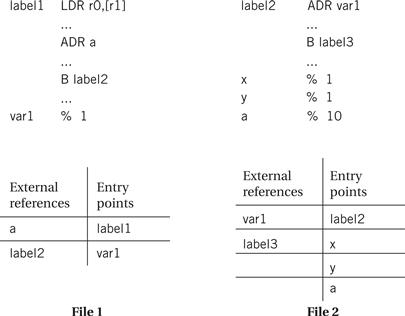

Many assembly language programs are written as several smaller pieces rather than as a single large file. Breaking a large program into smaller files helps delineate program modularity. If the program uses library routines, those will already be preassembled, and assembly language source code for the libraries may not be available for purchase. A linker allows a program to be stitched together out of several smaller pieces. The linker operates on the object files created by the assembler and modifies the assembled code to make the necessary links between files.

Some labels will be both defined and used in the same file. Other labels will be defined in a single file but used elsewhere, as illustrated in Figure 5.12. The place in the file where a label is defined is known as an entry point. The place in the file where the label is used is called an external reference. The main job of the linker is to resolve external references based on available entry points. Because the linker needs to know how definitions and references connect, the assembler passes to the linker not only the object file but also the symbol table. Even if the entire symbol table is not kept for later debugging purposes, it must at least pass the entry points. External references are identified in the object code by their relative symbol identifiers.

Figure 5.12 External references and entry points.

Linking process

The linker proceeds in two phases. First, it determines the address of the start of each object file. The order in which object files are to be loaded is given by the user, either by specifying parameters when the linker is run or by creating a load map file that gives the order in which files are to be placed in memory. Given the order in which files are to be placed in memory and the length of each object file, it is easy to compute the starting address of each file. At the start of the second phase, the linker merges all symbol tables from the object files into a single, large table. It then edits the object files to change relative addresses into absolute addresses. This is typically performed by having the assembler write extra bits into the object file to identify the instructions and fields that refer to labels. If a label cannot be found in the merged symbol table, it is undefined and an error message is sent to the user.

Controlling where code modules are loaded into memory is important in embedded systems. Some data structures and instructions, such as those used to manage interrupts, must be put at precise memory locations for them to work. In other cases, different types of memory may be installed at different address ranges. For example, if we have flash in some locations and DRAM in others, we want to make sure that locations to be written are put in the DRAM locations.

Dynamically linked libraries

Workstations and PCs provide dynamically linked libraries, and certain sophisticated embedded computing environments may provide them as well. Rather than link a separate copy of commonly used routines such as I/O to every executable program on the system, dynamically linked libraries allow them to be linked in at the start of program execution. A brief linking process is run just before execution of the program begins; the dynamic linker uses code libraries to link in the required routines. This not only saves storage space but also allows programs that use those libraries to be easily updated. However, it does introduce a delay before the program starts executing.

5.4.3 Object Code Design

We have to take several issues into account when designing object code. In a timesharing system, many of these details are taken care of for us. When designing an embedded system, we may need to handle some of them ourselves.

Memory map design

As we saw, the linker allows us to control where object code modules are placed in memory. We may need to control the placement of several types of data:

• Interrupt vectors and other information for I/O devices must be placed in specific locations.

• Memory management tables must be set up.

• Global variables used for communication between processes must be put in locations that are accessible to all the users of that data.

We can give these locations symbolic names so that, for example, the same software can work on different processors that put these items at different addresses. But the linker must be given the proper absolute addresses to configure the program’s memory.

Reentrancy

Many programs should be designed to be reentrant. A program is reentrant if it can be interrupted by another call to the function without changing the results of either call. If the program changes the value of global variables, it may give a different answer when it is called recursively. Consider this code:

int foo = 1;

int task1() {

foo = foo + 1;

return foo;

}

In this simple example, the variable foo is modified and so task1() gives a different answer on every invocation. We can avoid this problem by passing foo in as an argument:

int task1(int foo) {

return foo+1;

}

Relocatability

A program is relocatable if it can be executed when loaded into different parts of memory. Relocatability requires some sort of support from hardware that provides address calculation. But even on an architecture that supports relocation, it is possible to write nonrelocatable code. In some cases, it may be necessary to use a nonrelocatable address, such as when addressing an I/O device. However, any addresses that are not fixed by the architecture or system configuration should be accessed using relocatable code.

5.5 Compilation Techniques

Even though we don’t write our own assembly code much of the time, we still care about the characteristics of the code our compiler generates: its speed, its size, and its power consumption. Understanding how a compiler works will help us write code and direct the compiler to get the assembly language implementation we want. We will start with an overview of the compilation process, then some basic compilation methods, and conclude with some more advanced optimizations.

5.5.1 The Compilation Process

It is useful to understand how a high-level language program is translated into instructions: interrupt handling instructions, placement of data and instructions in memory, etc. Understanding how the compiler works can help you know when you cannot rely on the compiler. Next, because many applications are also performance sensitive, understanding how code is generated can help you meet your performance goals, either by writing high-level code that gets compiled into the instructions you want or by recognizing when you must write your own assembly code.

We can summarize the compilation process with a formula:

compilation = translation + optimization

The high-level language program is translated into the lower-level form of instructions; optimizations try to generate better instruction sequences than would be possible if the brute force technique of independently translating source code statements were used. Optimization techniques focus on more of the program to ensure that compilation decisions that appear to be good for one statement are not unnecessarily problematic for other parts of the program.

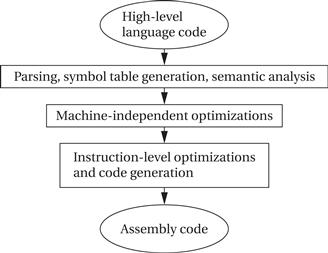

The compilation process is outlined in Figure 5.13. Compilation begins with high-level language code such as C or C++ and generally produces assembly code. (Directly producing object code simply duplicates the functions of an assembler, which is a very desirable stand-alone program to have.) The high-level language program is parsed to break it into statements and expressions. In addition, a symbol table is generated, which includes all the named objects in the program. Some compilers may then perform higher-level optimizations that can be viewed as modifying the high-level language program input without reference to instructions.

Figure 5.13 The compilation process.

Simplifying arithmetic expressions is one example of a machine-independent optimization. Not all compilers do such optimizations, and compilers can vary widely regarding which combinations of machine-independent optimizations they do perform. Instruction-level optimizations are aimed at improving the generated instruction sequences. They may work directly on real instructions or on a pseudo-instruction format that is later mapped onto the instructions of the target CPU. This level of optimization also helps modularize the compiler by allowing code generation to create simpler code that is later optimized. For example, consider this array access code:

x[i] = c*x[i];

A simple code generator would generate the address for x[i] twice, once for each appearance in the statement. The later optimization phases can recognize this as an example of a common subexpression that need not be computed twice. While in this simple case it would be possible to create a code generator that never generated the redundant expression, taking into account every such optimization at code generation time is very difficult. We get better code and more reliable compilers by generating simple code first and then optimizing it.

5.5.2 Basic Compilation Methods

Statement translation

In this section, we consider the basic job of translating the high-level language program with little or no optimization. Let’s first consider how to translate an expression. A large amount of the code in a typical application consists of arithmetic and logical expressions. Understanding how to compile a single expression, as described in the next example, is a good first step in understanding the entire compilation process.

Example 5.2 Compiling an Arithmetic Expression

Consider this arithmetic expression:

x = a*b + 5*(c − d)

The expression is written in terms of program variables. In some machines we may be able to perform memory-to-memory arithmetic directly on the locations corresponding to those variables. However, in many machines, such as the ARM, we must first load the variables into registers. This requires choosing which registers receive not only the named variables but also intermediate results such as (c − d).

The code for the expression can be built by walking the data flow graph. Here is the data flow graph for the expression.

The temporary variables for the intermediate values and final result have been named w, x, y, and z. To generate code, we walk from the tree’s root (where x, the final result, is generated) by traversing the nodes in postorder. During the walk, we generate instructions to cover the operation at every node. Here is the path:

The nodes are numbered in the order in which code is generated. Because every node in the data flow graph corresponds to an operation that is directly supported by the instruction set, we simply generate an instruction at every node. Because we are making an arbitrary register assignment, we can use up the registers in order starting with r1. Here is the resulting ARM code:

; operator 1 (*)

ADR r4,a ; get address for a

LDR r1,[r4] ; load a

ADR r4,b ; get address for b

LDR r2,[r4] ; load b

MUL r3,r1,r2 ; put w into r3

; operator 2 (-)

ADR r4,c ; get address for c

LDR r4,[r4] ; load c

ADR r5,d ; get address for d (r4 still holds c)

LDR r5,[r5] ; load d

SUB r6,r4,r5 ; put z into r6

; operator 3 (*)

MOV r7,#5 ; MUL takes only register operands, so load the constant

MUL r7,r6,r7 ; operator 3, puts y into r7

; operator 4 (+)

ADD r8,r7,r3 ; operator 4, puts x into r8

; assign to x

ADR r1,x ; get address for x

STR r8,[r1] ; assigns to x location

One obvious optimization is to reuse a register whose value is no longer needed. In the case of the intermediate values w, y, and z, we know that they cannot be used after the end of the expression (e.g., in another expression) because they have no name in the C program. However, the final result x may in fact be used in a C assignment and its value reused later in the program. In this case we would need to know when the register is no longer needed to determine its best use.

For comparison, here is the code generated by the ARM gcc compiler with handwritten comments:

ldr r2, [fp, #-16]

ldr r3, [fp, #-20]

mul r1, r3, r2 ; multiply

ldr r2, [fp, #-24]

ldr r3, [fp, #-28]

rsb r2, r3, r2 ; subtract: r2 = r2 - r3

mov r3, r2

mov r3, r3, asl #2 ; shift left by 2: r3 = 4*r2

add r3, r3, r2 ; add: r3 = 5*r2, a multiply by 5 without MUL

add r3, r1, r3 ; add the two products

str r3, [fp, #-32] ; assign

In the previous example, we made an arbitrary allocation of variables to registers for simplicity. When we have large programs with multiple expressions, we must allocate registers more carefully because CPUs have a limited number of registers. We will consider register allocation in more detail below.

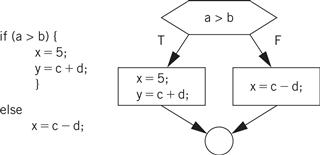

We also need to be able to translate control structures. Because conditionals are controlled by expressions, the code generation techniques of the last example can be used for those expressions, leaving us with the task of generating code for the flow of control itself. Figure 5.14 shows a simple example of changing flow of control in C—an if statement, in which the condition controls whether the true or false branch of the if is taken. Figure 5.14 also shows the control flow diagram for the if statement.

Figure 5.14 Flow of control in C and control flow diagrams.

The next example illustrates how to implement conditionals in assembly language.

Example 5.3 Generating Code for a Conditional

Consider this C statement:

if (a + b > 0)

x = 5;

else

x = 7;

The CDFG for the statement appears below.

We know how to generate the code for the expressions. We can generate the control flow code by walking the CDFG. One ordered walk through the CDFG follows:

To generate code, we must assign a label to the first instruction at the end of a directed edge and create a branch for each edge that does not go to the immediately following instruction. The exact steps to be taken at the branch points depend on the target architecture. On some machines, evaluating expressions generates condition codes that we can test in subsequent branches, and on other machines we must use test-and-branch instructions. ARM allows us to test condition codes, so we get the following ARM code for the 1-2-3 walk:

ADR r5,a ; get address for a

LDR r1,[r5] ; load a

ADR r5,b ; get address for b

LDR r2,[r5] ; load b

ADD r3,r1,r2 ; compute a + b

CMP r3,#0 ; set the condition codes for the test

BLE label3 ; true condition falls through branch

; true case

MOV r3,#5 ; load constant

ADR r5,x ; get address of x

STR r3,[r5] ; store value into x

B stmtend ; done with the true case

; false case

label3 MOV r3,#7 ; load constant

ADR r5,x ; get address of x

STR r3,[r5] ; store value into x

stmtend …

The 1-2 and 3-4 edges do not require a branch and label because they are straight-line code. In contrast, the 1-3 and 2-4 edges do require a branch and a label for the target.

For comparison, here is the code generated by the ARM gcc compiler with some handwritten comments:

ldr r2, [fp, #-16]

ldr r3, [fp, #-20]

add r3, r2, r3

cmp r3, #0 ; test the branch condition

ble .L3 ; branch to false block if <=

mov r3, #5 ; true block

str r3, [fp, #-32]

b .L4 ; go to end of if statement

.L3: ; false block

mov r3, #7

str r3, [fp, #-32]

.L4:

Because expressions are generally created as straight-line code, they typically require careful consideration of the order in which the operations are executed. We have much more freedom when generating conditional code because the branches ensure that the flow of control goes to the right block of code. If we walk the CDFG in a different order and lay out the code blocks in a different order in memory, we still get valid code as long as we properly place branches.

Drawing a control flow graph based on the while form of the loop helps us understand how to translate it into instructions.

C compilers can generate assembler source (with gcc, using the -S flag), and some compilers can intersperse it with the C code. Such code is a very good way to learn about both assembly language programming and compilation.

Procedures

Another major code generation problem is the creation of procedures. Generating code for procedures is relatively straightforward once we know the procedure linkage appropriate for the CPU. At the procedure definition, we generate the code to handle the procedure call and return. At each call of the procedure, we set up the procedure parameters and make the call.

The CPU’s subroutine call mechanism is usually not sufficient to directly support procedures in modern programming languages. We introduced the procedure stack and procedure linkages in Chapter 2. The linkage mechanism provides a way for the program to pass parameters into the procedure and for the procedure to return a value. It also provides help in restoring the values of registers that the procedure has modified. All procedures in a given programming language use the same linkage mechanism (although different languages may use different linkages). The mechanism can also be used to call handwritten assembly language routines from compiled code.

Procedure stacks are typically built to grow down from high addresses. A stack pointer (sp) defines the end of the current frame, while a frame pointer (fp) defines the end of the last frame. (The fp is technically necessary only if the stack frame can be grown by the procedure during execution.) The procedure can refer to an element in the frame by addressing relative to sp. When a new procedure is called, the sp and fp are modified to push another frame onto the stack.

As we saw in Chapter 2, the ARM Procedure Call Standard (APCS) [Slo04] is the recommended procedure linkage for ARM processors. r0–r3 are used to pass the first four parameters into the procedure. r0 is also used to hold the return value.

The next example looks at compiler-generated procedure linkage code.

Example 5.4 Procedure Linkage in C

Here is a procedure definition:

int p1(int a, int b, int c, int d, int e) {

return a + e;

}

This procedure has five parameters, so we would expect that one of them would be passed through the stack while the rest are passed through registers. It also returns an integer value, which should be returned in r0. Here is the code for the procedure generated by the ARM gcc compiler with some handwritten comments:

mov ip, sp ; procedure entry

stmfd sp!, {fp, ip, lr, pc}

sub fp, ip, #4

sub sp, sp, #16

str r0, [fp, #-16] ; put first four args on stack

str r1, [fp, #-20]

str r2, [fp, #-24]

str r3, [fp, #-28]

ldr r2, [fp, #-16] ; load a

ldr r3, [fp, #4] ; load e

add r3, r2, r3 ; compute a + e

mov r0, r3 ; put the result into r0 for return

ldmea fp, {fp, sp, pc} ; return

Here is a call to that procedure:

y = p1(a,b,c,d,x);

Here is the ARM gcc code with handwritten comments:

ldr r3, [fp, #-32] ; get x, the fifth argument (e)

str r3, [sp, #0] ; put into p1()’s stack frame

ldr r0, [fp, #-16] ; put a into r0

ldr r1, [fp, #-20] ; put b into r1

ldr r2, [fp, #-24] ; put c into r2

ldr r3, [fp, #-28] ; put d into r3

bl p1 ; call p1()

mov r3, r0 ; move return value into r3

str r3, [fp, #-36] ; store into y in stack frame

We can see that the compiler sometimes makes additional register moves, but it does follow the APCS standard.

Data structures

The compiler must also translate references to data structures into references to raw memory. In general, this requires address computations. Some of these computations can be done at compile time while others must be done at run time.

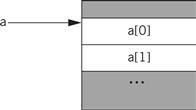

Arrays are interesting because the address of an array element must in general be computed at run time, because the array index may change. Let us first consider a one-dimensional array:

a[i]

The layout of the array in memory is shown in Figure 5.15: the zeroth element is stored as the first element of the array, the first element directly below, and so on. We can create a pointer for the array that points to the array’s head, namely, a[0]. If we call that pointer aptr for convenience, then we can rewrite the reading of a[i] as

*(aptr + i)

Figure 5.15 Layout of a one-dimensional array in memory.

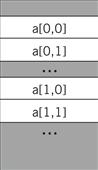

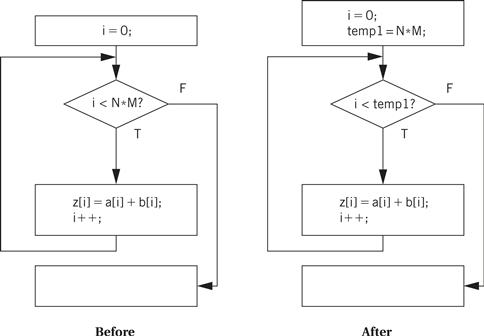

Two-dimensional arrays are more challenging. There are multiple possible ways to lay out a two-dimensional array in memory, as shown in Figure 5.16. In the form that C uses, which is known as row major, the rightmost index of the array (j in a[i][j]) varies most quickly. (Fortran uses a different organization known as column major.) Two-dimensional arrays also require more sophisticated addressing; in particular, we must know the size of the array. Let us consider the row-major form. If the a[] array is of size N × M, then we can turn the two-dimensional array access into a one-dimensional array access. Thus,

a[i][j]

becomes

a[i*M + j]

where the maximum value for j is M − 1.

A C struct is easier to address. As shown in Figure 5.16, a structure is implemented as a contiguous block of memory. Fields in the structure can be accessed using constant offsets to the base address of the structure. In this example, if field1 is four bytes long, then field2 can be accessed as follows, treating aptr as the byte address of the structure:

*(aptr + 4)

Figure 5.16 Memory layout for two-dimensional arrays.

This addition can usually be done at compile time, requiring only the indirection itself to fetch the memory location during execution.

5.5.3 Compiler Optimizations

Basic compilation techniques can generate inefficient code. Compilers use a wide range of algorithms to optimize the code they generate.

Loop transformations

Loops are important program structures—although they are compactly described in the source code, they often use a large fraction of the computation time. Many techniques have been designed to optimize loops.

A simple but useful transformation is known as loop unrolling, illustrated in the next example. Loop unrolling is important because it helps expose parallelism that can be used by later stages of the compiler.

Example 5.5 Loop Unrolling

Here is a simple C loop:

for (i = 0; i < N; i++) {

a[i]=b[i]*c[i];

}

This loop is executed a fixed number of times, namely, N. A straightforward implementation of the loop would create and initialize the loop variable i, update its value on every iteration, and test it to see whether to exit the loop. However, because the loop is executed a fixed number of times, we can generate more direct code.

If we let N = 4, then we can substitute this straight-line code for the loop:

a[0] = b[0]*c[0];

a[1] = b[1]*c[1];

a[2] = b[2]*c[2];

a[3] = b[3]*c[3];

This unrolled code has no loop overhead code at all, that is, no iteration variable and no tests. But the unrolled loop has the same problems as the inlined procedure: it may interfere with the cache, and it expands the amount of code required.

We do not, of course, have to fully unroll loops. Rather than unroll the above loop four times, we could unroll it twice. Unrolling it twice produces this code:

for (i = 0; i < 2; i++) {

a[i*2] = b[i*2]*c[i*2];

a[i*2 + 1] = b[i*2 + 1]*c[i*2 + 1];

}

In this case, because all operations in the two lines of the loop body are independent, later stages of the compiler may be able to generate code that allows them to be executed efficiently on the CPU’s pipeline.

Loop fusion combines two or more loops into a single loop. For this transformation to be legal, two conditions must be satisfied. First, the loops must iterate over the same values. Second, the loop bodies must not have dependencies that would be violated if they are executed together. For example, if the second loop’s ith iteration depends on the results of the (i + 1)th iteration of the first loop, the two loops cannot be combined. Loop distribution is the opposite of loop fusion, that is, decomposing a single loop into multiple loops.

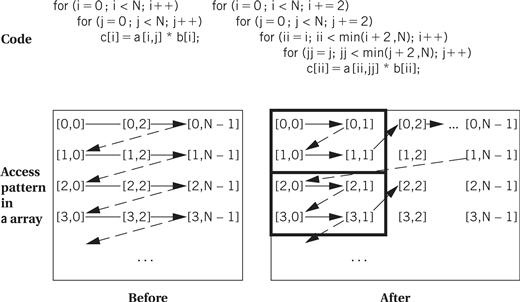

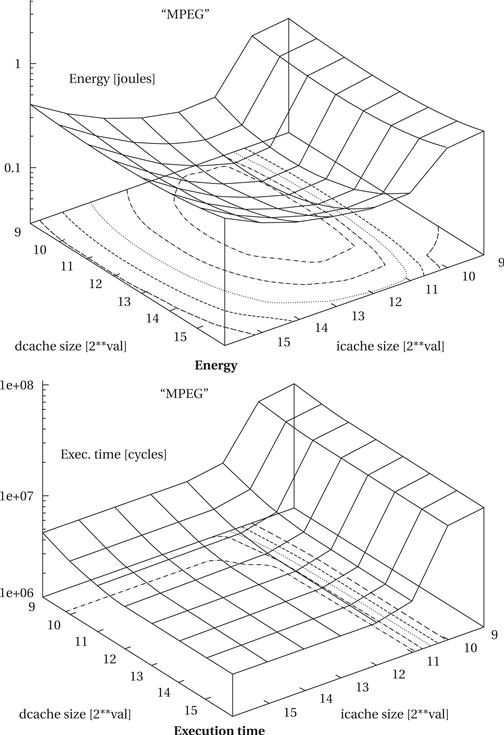

Loop tiling breaks up a loop into a set of nested loops, with each inner loop performing the operations on a subset of the data. An example is shown in Figure 5.17. Here, each loop is broken up into tiles of size two. Each loop is split into two loops—for example, the inner ii loop iterates within the tile and the outer i loop iterates across the tiles. The result is that the pattern of accesses across the a array is drastically different—rather than walking across one row in its entirety, the code walks through rows and columns following the tile structure. Loop tiling changes the order in which array elements are accessed, thereby allowing us to better control the behavior of the cache during loop execution.

Figure 5.17 Loop tiling.

We can also modify the arrays being indexed in loops. Array padding adds dummy data elements to an array in order to change the layout of the array in the cache. Although these array locations will not be used, they do change how the useful array elements fall into cache lines. Judicious padding can in some cases significantly reduce the number of cache conflicts during loop execution.

Dead code elimination

Dead code is code that can never be executed. Dead code can be generated by programmers, either inadvertently or purposefully. Dead code can also be generated by compilers. Dead code can be identified by reachability analysis—finding the other statements or instructions from which it can be reached. If a given piece of code cannot be reached, or it can be reached only by a piece of code that is unreachable from the main program, then it can be eliminated. Dead code elimination analyzes code for reachability and trims away dead code.

Register allocation

Register allocation is a very important compilation phase. Given a block of code, we want to choose assignments of variables (both declared and temporary) to registers to minimize the total number of required registers.

The next example illustrates the importance of proper register allocation.

Example 5.6 Register Allocation

To keep the example small, we assume that we can use only four of the ARM’s registers. In fact, such a restriction is not unthinkable—programming conventions can reserve certain registers for special purposes and significantly reduce the number of general-purpose registers available.

Consider this C code:

w = a + b; /* statement 1 */

x = c + w; /* statement 2 */

y = c + d; /* statement 3 */

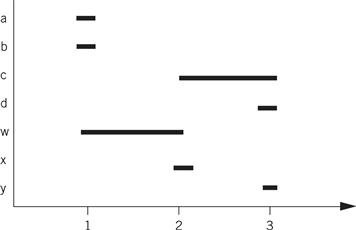

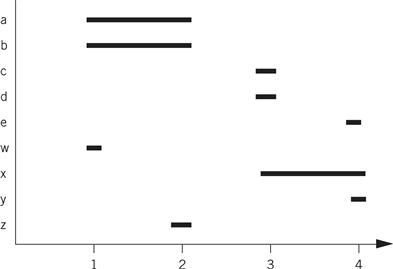

A naive register allocation, assigning each variable to a separate register, would require seven registers for the seven variables in the above code. However, we can do much better by reusing a register once the value stored in the register is no longer needed. To understand how to do this, we can draw a lifetime graph that shows the statements in which each variable is used. Here is a lifetime graph in which the x axis is the statement number in the C code and the y axis shows the variables.

A horizontal line stretches from the first statement where the variable is used to its last use; a variable is said to be live during this interval. At each statement, we can count the variables that are currently live. The maximum number of variables live at any statement determines the number of registers required. In this case, every statement has three live variables; statement 2, for example, requires three registers for c, w, and x. This fits within the four-register limitation. By reusing registers once their current values are no longer needed, we can write code that requires no more than four registers. Here is one register assignment:

| Variable | Register |
|---|---|
| a | r0 |
| b | r1 |
| c | r2 |
| d | r0 |
| w | r3 |
| x | r0 |
| y | r3 |

Here is the ARM assembly code that uses the above register assignment:

LDR r0,[p_a] ; load a into r0 using pointer to a (p_a)

LDR r1,[p_b] ; load b into r1

ADD r3,r0,r1 ; compute a + b

STR r3,[p_w] ; w = a + b

LDR r2,[p_c] ; load c into r2

ADD r0,r2,r3 ; compute c + w, reusing r0 for x

STR r0,[p_x] ; x = c + w

LDR r0,[p_d] ; load d into r0

ADD r3,r2,r0 ; compute c + d, reusing r3 for y

STR r3,[p_y] ; y = c + d
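The lifetime reasoning above can be checked mechanically. In this sketch, the `Var` struct and helper names are hypothetical; each lifetime is read from the lifetime graph at statement granularity, and the register numbers come from the assignment table. An assignment is valid if no two variables that share a register have overlapping lifetimes.

```c
#include <assert.h>
#include <stdbool.h>

/* Lifetime of one variable: first and last statement (1-based) in which it
   appears, plus the register it is assigned to. */
typedef struct { char name; int first, last; int reg; } Var;

/* Largest number of variables live at any single statement. */
int max_live(const Var *v, int nvars, int nstmts) {
    int best = 0;
    for (int s = 1; s <= nstmts; s++) {
        int live = 0;
        for (int i = 0; i < nvars; i++)
            if (v[i].first <= s && s <= v[i].last) live++;
        if (live > best) best = live;
    }
    return best;
}

/* Valid if no two variables sharing a register have overlapping lifetimes. */
bool assignment_ok(const Var *v, int nvars) {
    for (int i = 0; i < nvars; i++)
        for (int j = i + 1; j < nvars; j++)
            if (v[i].reg == v[j].reg &&
                v[i].first <= v[j].last && v[j].first <= v[i].last)
                return false;
    return true;
}
```

For Example 5.6 the lifetimes are a and b: statement 1; w: statements 1 to 2; c: 2 to 3; x: 2; d and y: 3. The peak is three live variables, and the table's assignment passes the check. Moving w into r0 would fail, because w and a are both live at statement 1.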

If a section of code requires more registers than are available, we must spill some of the values out to memory temporarily. After computing some values, we write the values to temporary memory locations, reuse those registers in other computations, and then reread the old values from the temporary locations to resume work. Spilling registers is problematic in several respects: it requires extra CPU time and uses up both instruction and data memory. Putting effort into register allocation to avoid unnecessary register spills is worth your time.

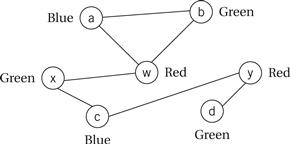

We can solve register allocation problems by building a conflict graph and solving a graph coloring problem. As shown in Figure 5.18, each variable in the high-level language code is represented by a node. An edge is added between two nodes if they are both live at the same time. The graph coloring problem is to color all the nodes using the smallest number of distinct colors such that no two nodes connected by an edge have the same color; each color then corresponds to a register. The figure shows a satisfying coloring that uses three colors. Graph coloring is NP-complete, but there are efficient heuristic algorithms that can give good results on typical register allocation problems.

Figure 5.18 Using graph coloring to solve the problem of Example 5.6.
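Greedy coloring is one simple heuristic of this kind; it is not necessarily what any particular compiler uses. The sketch below builds the conflict graph implicitly from the lifetimes of Example 5.6 (two variables conflict if their lifetimes overlap). It visits the nodes in a fixed order and gives each the lowest color not already used by a colored neighbor. The array names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define NV 7  /* variables a, b, c, d, w, x, y from Example 5.6 */

/* Lifetimes (first, last statement) from the lifetime graph of Example 5.6. */
static const int first_use[NV] = {1, 1, 2, 3, 1, 2, 3};
static const int last_use[NV]  = {1, 1, 3, 3, 2, 2, 3};

/* Two variables conflict (share an edge) if their lifetimes overlap. */
static bool conflict(int i, int j) {
    return i != j && first_use[i] <= last_use[j] && first_use[j] <= last_use[i];
}

/* Greedy heuristic: give each node the lowest color not used by an
   already-colored neighbor. Returns the number of colors used. */
int greedy_color(int color[NV]) {
    int ncolors = 0;
    for (int v = 0; v < NV; v++) {
        bool taken[NV] = { false };
        for (int u = 0; u < v; u++)
            if (conflict(u, v)) taken[color[u]] = true;
        int c = 0;
        while (taken[c]) c++;
        color[v] = c;
        if (c + 1 > ncolors) ncolors = c + 1;
    }
    return ncolors;
}
```

For this graph the greedy order a, b, c, d, w, x, y yields three colors, matching the figure. Greedy coloring can use more colors than the optimum for other graphs or node orders, which is the price of avoiding an exact NP-complete search.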

Lifetime analysis assumes that we have already determined the order in which we will evaluate operations. In many cases, we have freedom in the order in which we do things. Consider this expression:

(a + b) * (c − d)

We have to do the multiplication last, but we can do either the addition or the subtraction first. Different orders of loads, stores, and arithmetic operations may also result in different execution times on pipelined machines. If we can keep values in registers without having to reread them from main memory, we can save execution time and reduce code size as well.

The next example shows how proper operator scheduling can improve register allocation.

Example 5.7 Operator Scheduling for Register Allocation

Here is a sample C code fragment:

w = a + b; /* statement 1 */

x = c + d; /* statement 2 */

y = x + e; /* statement 3 */

z = a - b; /* statement 4 */

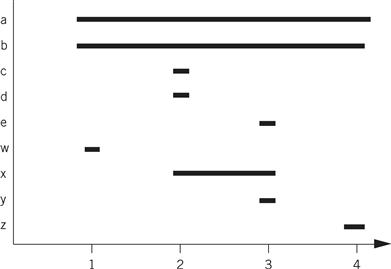

If we compile the statements in the order in which they were written, we get this lifetime graph:

Because a and b are needed until the last statement, we need five registers at statement 3, even though only three registers (for x, e, and y) are needed for that statement itself. If we move statement 4 up to follow statement 1 (renumbering the statements 2', 3', and 4'), we reduce our requirements to three registers. Here is the modified C code:

w = a + b; /* statement 1 */

z = a - b; /* statement 2' */

x = c + d; /* statement 3' */

y = x + e; /* statement 4' */

And here is the lifetime graph for the new code:

Compare the ARM assembly code for the two code fragments. We have written both assuming that we have only four free registers. In the before version, we do not have to write out any values, but we must read a and b twice. The after version allows us to retain all values in registers as long as we need them.

| Before version | After version |
|---|---|
| LDR r0,a | LDR r0,a |
| LDR r1,b | LDR r1,b |
| ADD r2,r0,r1 | ADD r2,r1,r0 |
| STR r2,w ; w = a + b | STR r2,w ; w = a + b |
| LDR r0,c | SUB r2,r0,r1 |
| LDR r1,d | STR r2,z ; z = a − b |
| ADD r2,r0,r1 | LDR r0,c |
| STR r2,x ; x = c + d | LDR r1,d |
| LDR r1,e | ADD r2,r1,r0 |
| ADD r0,r1,r2 | STR r2,x ; x = c + d |
| STR r0,y ; y = x + e | LDR r1,e |
| LDR r0,a ; reload a | ADD r0,r1,r2 |
| LDR r1,b ; reload b | STR r0,y ; y = x + e |
| SUB r2,r0,r1 | |
| STR r2,z ; z = a − b | |
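The register pressure of each statement order can be computed directly. This sketch uses a hypothetical `Stmt` record holding single-letter variable names. It treats a variable as live from its first to its last appearance in the chosen order, as in the lifetime graphs, and reports the peak number of simultaneously live variables.

```c
#include <assert.h>

/* One three-address statement: dst = src1 op src2 (single-letter names). */
typedef struct { char dst, src1, src2; } Stmt;

/* Peak number of variables live at once for statements in the given order. */
int peak_registers(const Stmt *s, int n) {
    int first[26], last[26];
    for (int v = 0; v < 26; v++) { first[v] = -1; last[v] = -1; }
    for (int i = 0; i < n; i++) {
        const char vars[3] = { s[i].dst, s[i].src1, s[i].src2 };
        for (int k = 0; k < 3; k++) {
            int v = vars[k] - 'a';
            if (first[v] < 0) first[v] = i;   /* first appearance */
            last[v] = i;                      /* latest appearance so far */
        }
    }
    int peak = 0;
    for (int i = 0; i < n; i++) {
        int live = 0;
        for (int v = 0; v < 26; v++)
            if (first[v] >= 0 && first[v] <= i && i <= last[v]) live++;
        if (live > peak) peak = live;
    }
    return peak;
}
```

For the original order (w, x, y, z) the peak is five, which exceeds the four available registers and forces the reloads of a and b. For the reordered code (w, z, x, y) the peak is three.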

Scheduling

We have some freedom to choose the order in which operations will be performed. We can use this to our advantage—for example, we may be able to improve the register allocation by changing the order in which operations are performed, thereby changing the lifetimes of the variables.

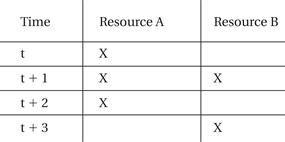

We can solve scheduling problems by keeping track of resource utilization over time. We do not have to know the exact microarchitecture of the CPU—all we have to know is that, for example, instruction types 1 and 2 both use resource A while instruction types 3 and 4 use resource B. CPU manufacturers generally disclose enough information about the microarchitecture to allow us to schedule instructions even when they do not provide a detailed description of the CPU’s internals.

We can keep track of CPU resources during instruction scheduling using a reservation table [Kog81]. As illustrated in Figure 5.19, rows in the table represent instruction execution time slots and columns represent resources that must be scheduled. Before scheduling an instruction to be executed at a particular time, we check the reservation table to determine whether all resources needed by the instruction are available at that time. Upon scheduling the instruction, we update the table to note all resources used by that instruction. Various algorithms can be used for the scheduling itself, depending on the types of resources and instructions involved, but the reservation table provides a good summary of the state of an instruction scheduling problem in progress.

Figure 5.19 A reservation table for instruction scheduling.
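A reservation table can be sketched in C as one bitmask of busy resources per time slot. The resources, usage patterns, and names below are hypothetical. The scheduling policy is a simple first-fit search, just one of the many possible algorithms: each instruction is placed in the earliest slot where every resource it needs, in every cycle it needs it, is still free.

```c
#include <assert.h>
#include <stdint.h>

#define SLOTS   16  /* rows: execution time slots */
#define MAX_LEN 4   /* longest resource-usage pattern, in cycles */

/* Columns: hypothetical resources, one bit each. */
enum { RES_ALU = 1, RES_MUL = 2, RES_MEM = 4 };

/* use[k] is the set of resources an instruction needs k cycles after issue. */
typedef struct {
    int len;
    uint8_t use[MAX_LEN];
} UsagePattern;

/* busy[t] records which resources are already reserved in time slot t. */
typedef struct {
    uint8_t busy[SLOTS];
} ReservationTable;

/* Check whether every resource the pattern needs is free if issued at t. */
static int fits(const ReservationTable *rt, const UsagePattern *p, int t) {
    for (int k = 0; k < p->len; k++)
        if (t + k >= SLOTS || (rt->busy[t + k] & p->use[k]))
            return 0;
    return 1;
}

/* Issue the instruction in the first slot >= earliest where it fits and
   reserve its resources. Returns the slot, or -1 if none is free. */
int schedule(ReservationTable *rt, const UsagePattern *p, int earliest) {
    for (int t = earliest; t < SLOTS; t++) {
        if (fits(rt, p, t)) {
            for (int k = 0; k < p->len; k++)
                rt->busy[t + k] |= p->use[k];
            return t;
        }
    }
    return -1;
}
```

For example, if a two-cycle multiply is issued at slot 0, a second multiply that needs the multiplier must wait until slot 2, while an ALU instruction can still be issued at slot 0.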

We can also schedule instructions to maximize performance. As we know from Section 3.6, when an instruction that takes more cycles than normal to finish is in the pipeline, pipeline bubbles appear that reduce performance. Software pipelining is a technique for reordering instructions across several loop iterations to reduce pipeline bubbles. Some instructions take several cycles to complete; if the value produced by one of these instructions is needed by other instructions in the loop iteration, then they must wait for that value to be produced. Rather than pad the loop with no-ops, we can start instructions from the next iteration. The loop body then contains instructions that manipulate values from several different loop iterations—some of the instructions are working on the early part of iteration n + 1, others are working on iteration n, and still others are finishing iteration n − 1.
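Real software pipelining reorders machine instructions, and an optimizing compiler may rearrange C source anyway. Still, the overall structure can be sketched at the source level. In this hypothetical example, each iteration has three stages (load, multiply, store). The kernel loop stores the result of iteration i − 1, multiplies for iteration i, and loads for iteration i + 1; a prologue starts the first iterations and an epilogue drains the last ones.

```c
#include <assert.h>

/* Straightforward loop: each iteration loads, multiplies, then stores. */
void scale_plain(int *y, const int *x, int n, int k) {
    for (int i = 0; i < n; i++) {
        int v = x[i];       /* stage 1: load */
        int p = v * k;      /* stage 2: multiply */
        y[i] = p;           /* stage 3: store */
    }
}

/* Software-pipelined form: the kernel mixes stages of three iterations. */
void scale_pipelined(int *y, const int *x, int n, int k) {
    if (n < 2) { scale_plain(y, x, n, k); return; }
    /* Prologue: start iterations 0 and 1. */
    int v = x[0];           /* load, iteration 0 */
    int p = v * k;          /* multiply, iteration 0 */
    v = x[1];               /* load, iteration 1 */
    /* Kernel: finish i-1, work on i, start i+1. */
    for (int i = 1; i < n - 1; i++) {
        y[i - 1] = p;       /* store, iteration i-1 */
        p = v * k;          /* multiply, iteration i */
        v = x[i + 1];       /* load, iteration i+1 */
    }
    /* Epilogue: drain the last two iterations. */
    y[n - 2] = p;
    p = v * k;
    y[n - 1] = p;
}
```

In the kernel, the load for the next iteration is independent of the multiply and store for earlier iterations, so a long-latency load has time to complete before its value is needed.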

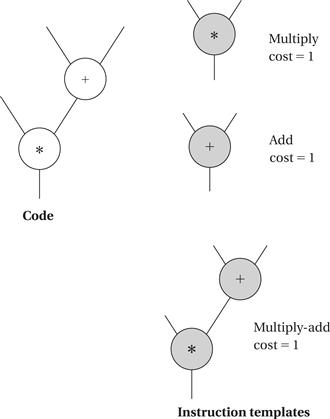

Instruction selection

Selecting the instructions to use to implement each operation is not trivial. There may be several different instructions that can be used to accomplish the same goal, but they may have different execution times. Moreover, using one instruction for one part of the program may affect the instructions that can be used in adjacent code. Although we can’t discuss all the problems and methods for code generation here, a little bit of knowledge helps us envision what the compiler is doing.

One useful technique for generating code is template matching, illustrated in Figure 5.20. We have a DAG that represents the expression for which we want to generate code. In order to be able to match up instructions and operations, we represent instructions using the same DAG representation. We shaded the instruction template nodes to distinguish them from code nodes. Each node has a cost, which may be simply the execution time of the instruction or may include factors for size, power consumption, and so on. In this case, we have assumed that each instruction takes the same amount of time, and thus all have a cost of 1. Our goal is to cover all nodes in the code DAG with instruction DAGs; until we have covered the code DAG, we haven’t generated code for all the operations in the expression. In this case, the lowest-cost covering uses the multiply-add instruction to cover both nodes. If we first tried to cover the bottom node with the multiply instruction, we would find ourselves blocked from using the multiply-add instruction. Dynamic programming can be used to efficiently find the lowest-cost covering of trees, and heuristics can extend the technique to DAGs.

Figure 5.20 Code generation by template matching.
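Dynamic programming over a tree can be sketched as a bottom-up labeling pass. This minimal version assumes three hypothetical templates (add, multiply, and multiply-add) with unit costs, as in the figure. Each node records the cheapest cover of its subtree, and a parent reuses its children's and grandchildren's labels rather than recomputing them. The `Node` layout and names are illustrative, not a real code generator's API.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { OP_LEAF, OP_ADD, OP_MUL } OpKind;

typedef struct Node {
    OpKind kind;
    struct Node *left, *right;   /* NULL for leaves */
    int cost;                    /* minimum cost to cover this subtree */
    int use_madd;                /* 1 if the best cover uses multiply-add here */
} Node;

/* Hypothetical unit costs: every instruction costs 1, as in Figure 5.20. */
enum { COST_ADD = 1, COST_MUL = 1, COST_MADD = 1 };

/* Label each node with the cheapest cover of its subtree, children first. */
int label_costs(Node *n) {
    if (n->kind == OP_LEAF) { n->cost = 0; n->use_madd = 0; return 0; }
    label_costs(n->left);
    label_costs(n->right);
    int op_cost = (n->kind == OP_ADD) ? COST_ADD : COST_MUL;
    n->cost = op_cost + n->left->cost + n->right->cost;  /* one-node template */
    n->use_madd = 0;
    if (n->kind == OP_ADD) {
        /* Multiply-add template: covers this ADD and one MUL child. */
        Node *kids[2] = { n->left, n->right };
        for (int k = 0; k < 2; k++) {
            Node *m = kids[k], *other = kids[1 - k];
            if (m->kind != OP_MUL) continue;
            int c = COST_MADD + m->left->cost + m->right->cost + other->cost;
            if (c < n->cost) { n->cost = c; n->use_madd = 1; }
        }
    }
    return n->cost;
}
```

For the expression (a × b) + c, covering the add and the multiply separately costs 2, while the multiply-add template covers both nodes for a cost of 1, so the labeling selects multiply-add at the root.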

Understanding your compiler

Clearly, the compiler can transform your program extensively as it generates assembly language, and compilers differ considerably in the optimizations they perform. Understanding your compiler can help you get the best code out of it.

Studying the assembly language output of the compiler is a good way to learn about what the compiler does. Some compilers will annotate sections of code to help you make the correspondence between the source and assembler output. Starting with small examples that exercise only a few types of statements will help. You can experiment with different optimization levels (the -O flag on most C compilers). You can also try writing the same algorithm in several ways to see how the compiler’s output changes.
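A small experiment might look like the following C file, which writes the same summation two ways. Compiling it with assembly output enabled (for example, gcc's `-S` option) at several optimization levels and comparing the listings shows how the compiler treats each form. Whether the two versions produce identical assembly depends on the compiler and the optimization level; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Version 1: array indexing. */
int sum_indexed(const int *a, size_t n) {
    int sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += a[i];
    return sum;
}

/* Version 2: pointer walking; same result, possibly different code. */
int sum_pointer(const int *a, size_t n) {
    int sum = 0;
    for (const int *p = a, *end = a + n; p != end; p++)
        sum += *p;
    return sum;
}
```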

If you can’t get your compiler to generate the code you want, you may need to write your own assembly language. You can do this by writing it from scratch or modifying the output of the compiler. If you write your own assembly code, you must ensure that it conforms to all compiler conventions, such as procedure call linkage. If you modify the compiler output, you should be sure that you have the algorithm right before you start writing code so that you don’t have to repeatedly edit the compiler’s assembly language output. You also need to clearly document the fact that the high-level language source is, in fact, not the code used in the system.

5.6 Program-Level Performance Analysis

Because embedded systems must perform functions in real time, we often need to know how fast a program runs. The techniques we use to analyze program execution time are also helpful in analyzing properties such as power consumption. In this section, we study how to analyze programs to estimate their run times. We also examine how to optimize programs to improve their execution times; of course, optimization relies on analysis.

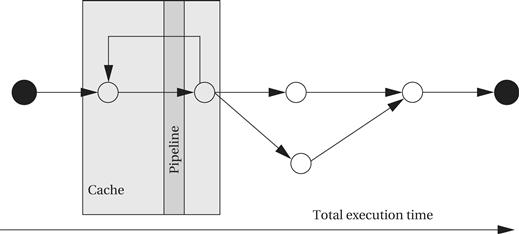

It is important to keep in mind that CPU performance is not judged in the same way as program performance. Certainly, CPU clock rate is a very unreliable metric for program performance. But more importantly, the fact that the CPU executes part of our program quickly doesn’t mean that it will execute the entire program at the rate we desire. As illustrated in Figure 5.21, the CPU pipeline and cache act as windows into our program. In order to understand the total execution time of our program, we must look at execution paths, which in general are far longer than the pipeline and cache windows. The pipeline and cache influence execution time, but execution time is a global property of the program.

Figure 5.21 Execution time is a global property of a program.

While we might hope that the execution time of programs could be precisely determined, this is in fact difficult to do in practice:

• The execution time of a program often varies with the input data values because those values select different execution paths in the program. For example, loops may be executed a varying number of times, and different branches may execute blocks of varying complexity.

• The cache has a major effect on program performance, and once again, the cache’s behavior depends in part on the data values input to the program.

• Execution times may vary even at the instruction level. Floating-point operations are the most sensitive to data values, but the normal integer execution pipeline can also introduce data-dependent variations. In general, the execution time of an instruction in a pipeline depends not only on that instruction but on the instructions around it in the pipeline.
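The first point can be illustrated with a toy example. In this linear search, the number of loop iterations actually executed, used here as a crude stand-in for execution time, depends entirely on the input values. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Linear search that also reports how many loop iterations ran. */
int linear_search(const int *a, size_t n, int key, size_t *iterations) {
    for (size_t i = 0; i < n; i++) {
        if (a[i] == key) {
            *iterations = i + 1;   /* found early: short path */
            return (int)i;
        }
    }
    *iterations = n;               /* not found: longest path */
    return -1;
}
```

Searching {7, 3, 9, 1} for 7 takes one iteration, while searching for 1, or for a missing value, takes four. The same code and the same processor yield different execution times depending on the data.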

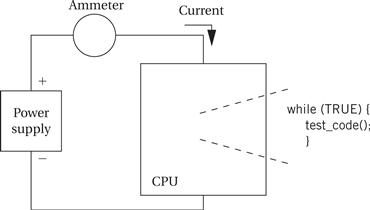

Measuring execution speed

We can measure program performance in several ways: