ETSI NFV ISG

Abstract

In this chapter, we consider the contribution of the ETSI NFV ISG group’s work (with focus on the charter period ending in December 2014—which we will refer to as Phase 1—and an update on its later phase) as it pertains to Network Function Virtualization and the potential future of the ISG. The main focus of this work is decoupling service software from network hardware, creating flexible deployments and dynamic operations—the stated benefits of NFV in their architecture framework.

Keywords

ETSI; NFV; ISG; deployment; industry specification group

Introduction

In October 2012, a group of engineers from 13 network operators published an NFV white paper that outlined the need for, and proposal to form, a work effort regarding the issues of NFV.

A second version of this white paper was published a year later, in 2013, with 25 (mostly telco) operator co-signers.1 A third was published in October 2014 as their work wound down.2

In between the first and last publications, the group found a home for their work in a European Telecommunications Standards Institute (ETSI)3 Industry Specification Group (ISG).

In this chapter, we consider the contribution of the ETSI NFV ISG group’s work (for the charter period ending in December 2014—which we will refer to as Phase 1) as it pertains to NFV and the potential future of the ISG. The main focus of this work is around decoupling software from hardware, creating flexible deployments and dynamic operations—the stated benefits of NFV in their architecture framework.

This contribution is found largely in their published white papers and Proof-of-Concept (PoC) exercises, which by their own admission (and subsequent recharter for a Phase 2) leave much more work to be done.

Let’s now explore these efforts in more detail.

Getting Chartered

European Telecommunications Standards Institute

ETSI is an interesting standards body. It was formed in 1988 with the original charter of providing “harmonized” standards in support of a single telecommunications services market within Europe, originally under the auspices of the European Commission/European Free Trade Association and later within the European Union.

In support of this mission, ETSI has several levels of standard, ranging from the Group Specification (GS) to the European Standard (EN). The latter requires approval by European National Standards Organizations, whereas other standards produced (such as the ETSI Standard) require a majority of the entire organization to vote in favor of the proposal in order to ratify it as a Standard.

ETSI has wide-reaching interests that it calls “clusters,” including Home and Office, Better Living with ICT, Content Delivery, Networks, Wireless Systems, Transportation, Connecting Things, Interoperability, Public Safety, and Security.

The NFV ISG falls under the Networks cluster.

The Third Generation Partnership Project (3GPP) is another contributor to this cluster. Because of this, most readers of this book who follow networking probably know ETSI best for its wireless telecom standards.

NFV at ETSI

So, why would the organizers of the NFV effort locate this work in ETSI?

The answer is primarily in expediency and structure. ETSI has a reasonable governance process, well-established Intellectual Property Rights (IPR) policies, and open membership. Non-ETSI members may pay a small fee to cover the costs of administrative overhead and are thereafter allowed to participate. Membership/participation is not limited to Europe, but is a good deal more involved in terms of paperwork and fees. In short, the organizational framework and structure are very much set up to support the proposed study and the distribution of its outputs.

The ISG structure is designed to be supplemental to the existing standardization process within ETSI, operating more as an industry forum than a Standards organization. It does have its challenges when interaction with other standards forums is considered.

In early presentations the mission of the NFV ISG was described as having two key drivers:

• To recommend consensus-based approaches which address the challenges outlined in the original white paper.

• Not to develop any new standards, but instead to produce white papers that describe and illustrate the problem space or requirements for solutions in that space, reference existing standards where they are applicable and point at them as possible solutions to the aforementioned identified requirements, and make inputs to Standards bodies to address gaps where standards might not yet exist to address the requirements.

As originally scoped, the outputs were not considered “normative” documents.4 Early discussions around forming the ISG limited its mandate to delivering a Gap Analysis (described as a gap analysis and solution approach). The documents published by the ETSI NFV ISG appear as GS-level documents which, if ratified, would empower the ISG to “provide technical requirements or explanatory material or both.” Effectively, the ISG could have produced proper standards solutions, but it never got beyond requirements and problem space definitions.

The original work was scoped to take two years and conclude at the end of 2014. However, with the increased focus on the SDN and networking industries over the last year of their first charter, the group voted to recharter its efforts in order to facilitate another two years of operation. To this end, the group’s portal was showing proposed meetings into Spring/Summer 2017 (at the time this book was written).

Although the stated original intent (during formation) was that a consensus-based approach was preferred5 and that voting was not anticipated for issue resolution, the drafting operators were given extraordinary rights in the first three meetings as part of a founding block of network operators, referred to as the Network Operators Council (NOC).

Should a vote be called, this block of founding members would have enough “weighted” votes to outvote any reasonable opposition (assuming they all voted the same way).

This council was moderated by the ISG Chairman and originally consisted of representatives from AT&T, BT, Deutsche Telekom, Orange, Telecom Italia, Telefonica, CenturyLink, China Mobile, Colt, KDDI, NTT, Telstra, Vodafone, and Verizon.

The ISG Chairman appoints a Technical Manager who chairs the Technical Steering Committee (TSC).

Organization

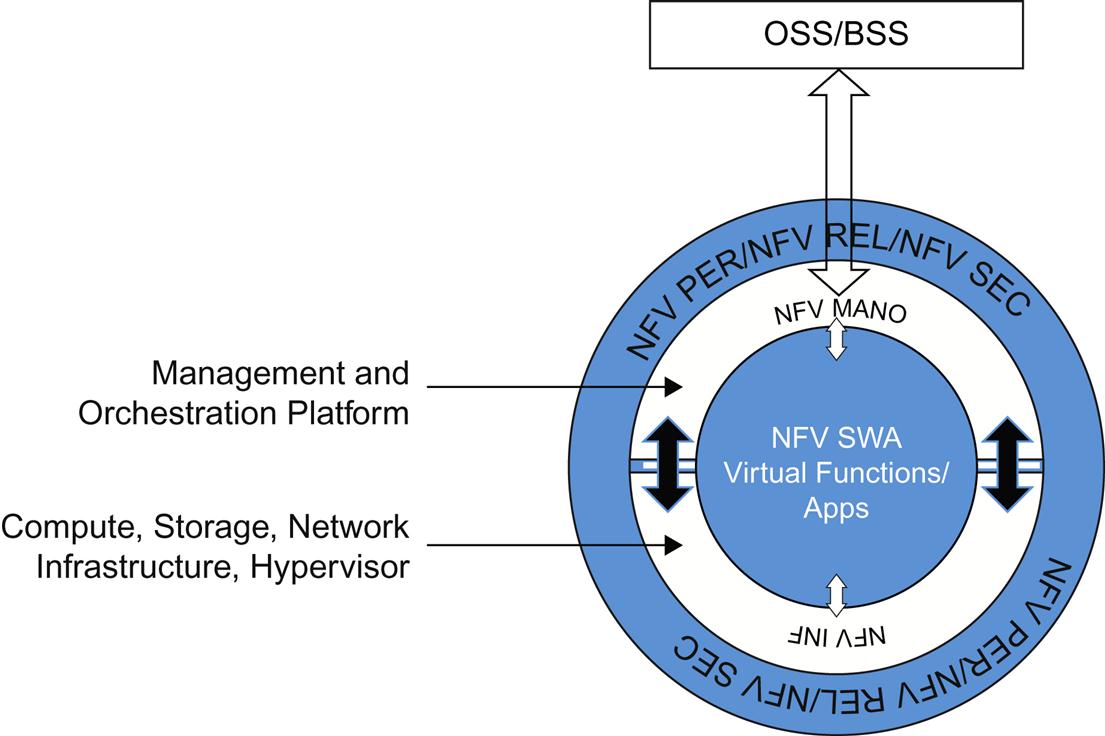

Underneath the umbrella of the TSC are several Working Groups (Fig. 3.1). At a layer below the Working Groups are Expert Task Groups, which can work with more than one Working Group in order to accomplish their goals and tasks.

• NFV INF (Infrastructure): worked on NFV issues and recommendations in the Compute, Hypervisor, and Network Infrastructure domains, commonly referred to as the “network underlay” domains. Late in the process, the group expanded its scope to include Service Quality Metrics.

• NFV MAN (Management and Orchestration): was chartered to work on NFV issues and recommendations around Management and Orchestration.

• NFV SWA (Software Architecture): was enlisted to produce a software architecture for virtual network functions (VNFs).

• NFV PER (Performance): identified NFV issues and recommendations in the area of performance and portability. This group also created the PoC Framework.

• NFV REL (Reliability): worked on reliability and resiliency issues and produced related recommendations.

• NFV SEC (Security): worked on security issues that pertain to NFV, both for the virtual functions and their management systems.

The Performance, Reliability, and Security groups were chartered with overarching responsibilities across multiple areas. To that end, these groups had relationships with all other working groups and helped connect the different areas together. The remaining group interactions can be inferred from the interfaces in the NFV architecture.

While these groups produced their own outputs, they also rolled up and contributed to the high-level outputs of the ISG. There is evidence that views on their topics continued to evolve after publication of the original high-level documents (as expressed in their own subsequent workgroup publications). Because of this evolution, there is a need for normalization through subsequent revision of either the WG or ISG-level documents.

Impact on 3GPP

It is only natural that NFV work items might spill over into the 3GPP, as some operators see the architecture of both the 3GPP core and radio access network (RAN) for mobile networks changing due to NFV. A reimagining of these architectures would target the movement of a lot of the state management between the logical entities of those architectures into VNFs as a starting simplification. At a minimum, such changes would result in the Operations and Management (OAM—SA5) interfaces of 3GPP evolving for virtualized infrastructure. This is not surprising, given that the 3GPP architecture was envisioned prior to NFV and its related concepts and architectural implications. However, changes due to virtualization will probably be minor (at first) within the core protocols. We will not see any real impacts to these architectures until Release 14 (or later) of 3GPP standards.

The bigger impacts of NFV on 3GPP will probably be seen in discussions of the new 5G mobile architecture. As with all SDOs, the pace of innovation in virtual elements may require ETSI and 3GPP to speed up their standards processes to keep pace with the demand for such changes.

Digesting ETSI Output

Output

The NFV ISG has been busy as the group met often and produced a significant number of papers and PoC documents in Phase 1.

• In the two years allotted to the group, there were eight major meetings, and some individual groups held more than eighty online meetings.

• The final document count at the expiration of the (first) charter is almost twenty. Each document can represent numerous, voluminous “contributions.”

• There were more than twenty-five registered PoC projects6 in that same two-year period.

The papers include workgroup-specific publications and four “macro” documents. The latter include the (more mundane) Terminology, the Use Case document,7 and the Virtualization Requirements work, as well as the main NFV Architecture and NFV Gap Analysis documents (in line with the original mandate).

Terminology

Without duplicating the Terminology for Main Concepts in NFV as identified in GS NFV 003, there are still some fundamental terms uniquely coined by the NFV ISG that we will use in this chapter8 and that have to be introduced here. Let’s define them now:

VNF—A VNF is an implementation of a new Network Function or a Network Function that was previously implemented as a tightly integrated software/hardware appliance or network element. This is referred to in other chapters as a service component or service function to imply one of many that might make up a service offering deployable on a Network Function Virtualization Infrastructure (NFVI). For instance, a firewall function that once existed as a fixed-function, physical device is now virtualized to create a VNF called “firewall.”

VNFC—A Virtual Network Function Component is requisite to discussing the concept of “composite” service functions. A composite is composed of more than one discernable/separable component function. The documentary example of a composite is the combining of PGW and SGW network functions in the mobile network core to make a theoretical virtual Evolved Packet Core (vEPC) offering.

NFVI—The NFVI is the hardware and software infrastructure that create the environment for VNF deployments, including the connectivity between VNFs.
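The relationship between these three terms can be sketched as a small data model. This is only an illustration and not something defined by the ISG; the class names, fields, and the `deploy` method are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Vnfc:
    """One separable component of a composite VNF (hypothetical model)."""
    name: str


@dataclass
class Vnf:
    """A virtualized network function, optionally built from several VNFCs."""
    name: str
    components: list = field(default_factory=list)

    @property
    def is_composite(self) -> bool:
        # A composite is made of more than one separable component.
        return len(self.components) > 1


@dataclass
class Nfvi:
    """The infrastructure that hosts VNFs and provides their connectivity."""
    hosted: dict = field(default_factory=dict)

    def deploy(self, vnf: Vnf) -> None:
        self.hosted[vnf.name] = vnf


# The two examples used in the definitions above.
firewall = Vnf("firewall")
vepc = Vnf("vEPC", [Vnfc("PGW"), Vnfc("SGW")])

nfvi = Nfvi()
for vnf in (firewall, vepc):
    nfvi.deploy(vnf)

composites = [v.name for v in nfvi.hosted.values() if v.is_composite]
print(composites)
```

Here the firewall is a simple VNF, while the vEPC is a composite whose PGW and SGW parts are modeled as VNFCs.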

Architecture—General

The NFV Architectural Framework as described in GS NFV 002 tries to describe and align functional blocks and their eight reference points (interfaces) with an operator’s overall needs (in the view of the membership).

Keep in mind that this architecture and the perspective of the operator are framed in the context of the ETSI member operators, who may not represent the totality of worldwide network operators.

The reader should note that this document predates many of the final workgroup documents, thus requiring subsequent searches into the documents published for clarifications and greater detail to make the ISG view whole and cohesive.

We recommend reading the Virtualization Requirements document as a good companion document to the Architectural Framework. We also advise that reading some of the individual workgroup documents can be useful in filling gaps left by those main documents.

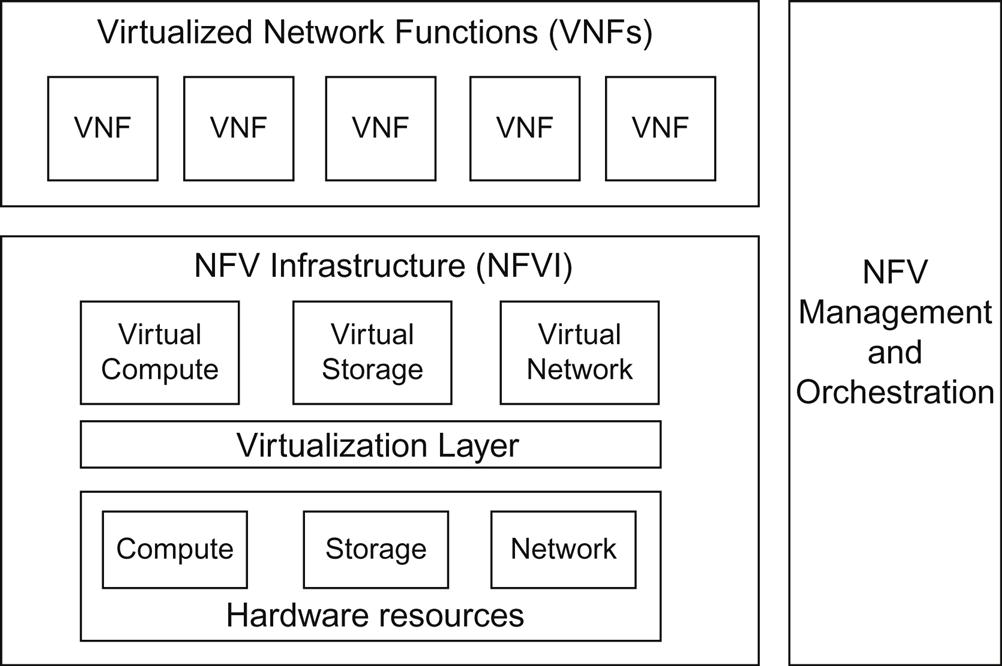

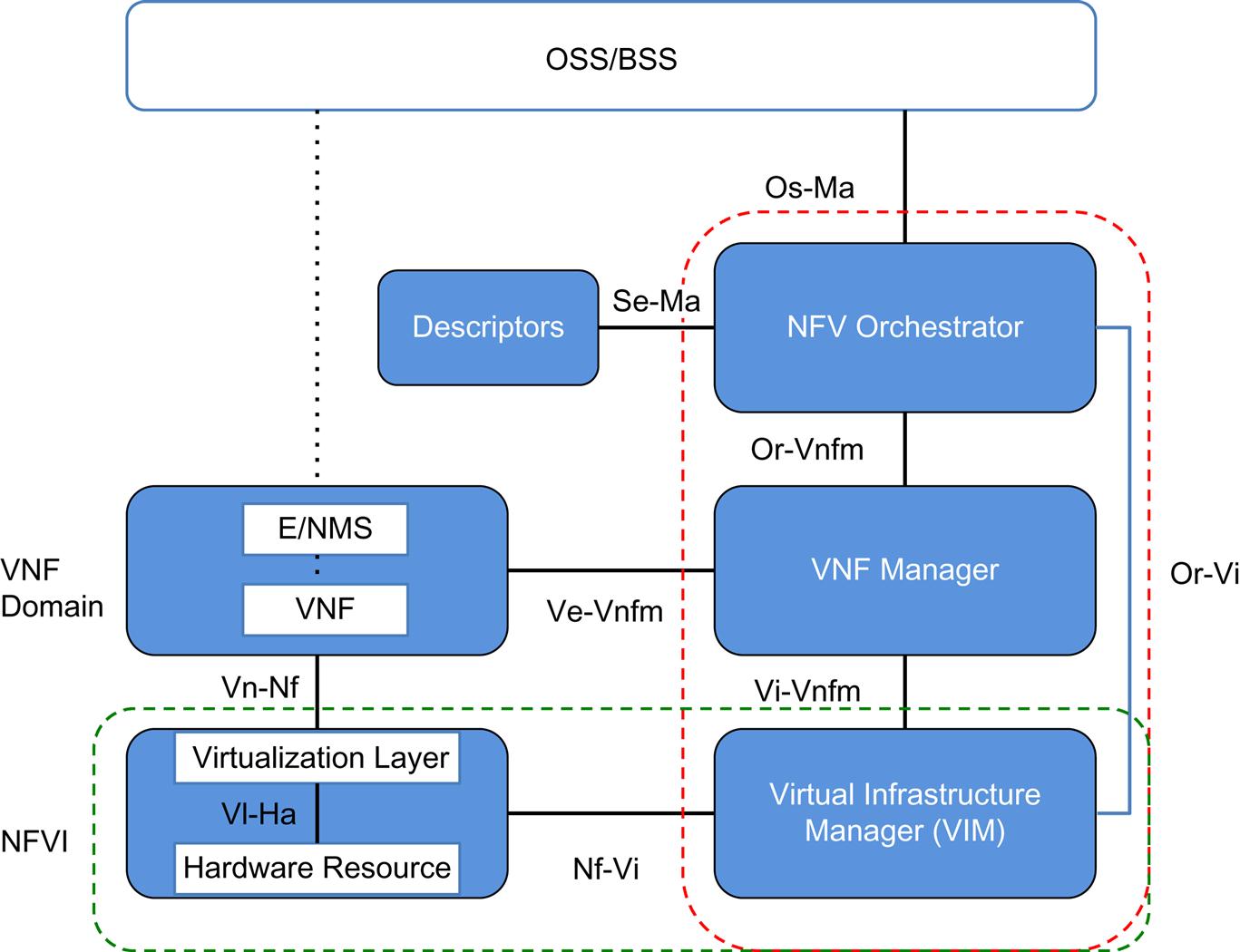

The high level architecture (Fig. 3.2) focuses on VNFs (the VNF Domain), the NFVI and NFV Management and Orchestration. These components and their relationships with one another arguably outline the core of NFV.

The large blocks in Fig. 3.2 include:

• NFVI (defined earlier)—virtualized compute, storage, and network and its corresponding physical compute, storage, and network resources. The execution environment for the VNFs.

• VNF domain—the virtualized network functions and their management interface(s).

• NFV Management and Orchestration—lifecycle management of the physical and virtual resources that comprise the NFV environment.

The Architecture Framework document describes the connectivity between VNFs as a VNF Forwarding Graph, which is closely related to the Service Function Chain of Chapter 2. A group of VNFs whose connectivity is not specified is called a VNF Set. This latter concept is described later in the use case referring to residential networking virtualization.

In the discussion of forwarding graphs, the concept of nesting forwarding graphs transparently to the end-to-end service is described. This level of decomposition does seem practical in that reusable subchains might make the overall orchestration of service chains easier to define, as well as operate.
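A minimal sketch can make the nesting idea concrete. It assumes, purely for illustration, that a forwarding graph is a simple ordered chain written as a Python list whose entries are either VNF names or nested sub-chains; real forwarding graphs may branch, and the `flatten` helper and the "nat" function are hypothetical.

```python
def flatten(graph):
    """Expand nested sub-chains into the end-to-end ordered list of VNFs."""
    chain = []
    for hop in graph:
        if isinstance(hop, list):
            chain.extend(flatten(hop))  # nested graph: expand it in place
        else:
            chain.append(hop)
    return chain


# A reusable sub-chain is defined once...
security_subchain = ["firewall", "intrusion-detection"]

# ...and nested inside two different service graphs.
enterprise_graph = ["wan-optimizer", security_subchain, "pe-router"]
residential_graph = [security_subchain, "nat"]

# A VNF Set, by contrast, names its members without specifying connectivity.
vnf_set = frozenset({"firewall", "nat"})

enterprise_chain = flatten(enterprise_graph)
residential_chain = flatten(residential_graph)
print(enterprise_chain)
print(residential_chain)
```

The end-to-end service only ever sees the flattened chain, which is what makes the nesting transparent, while the operator maintains the shared sub-chain in one place.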

In the description of a VNF the architecture document states that a VNF can be decomposed into multiple virtual machines (VMs), where each VM hosts a component of the VNF.9 This is comprehensible from the starting point of a composite network function (eg, a conjoined PGW/SGW as a vEPC is decomposed to PGW and SGW). Such a procedure may need no standardization given that the decomposition is rather obvious.

Other applications may have backend components that reside in a separate VM, such as an IMS application with a separate Cassandra database. Here the challenge is more in the lifecycle management of the composite than its connectivity. It seems unlikely that a third party (other than the original “composed” function creator) would be able to perform this separation (or joining).

For more depth, the SWA document (GS NFV-SWA) provides further guidance around these “composite” functions:

• It superimposes its own reference points on the architecture (SWA-x).

• It describes VNF design patterns and properties: internal structure, instantiation, state models, and load balancing. To some degree, these can be seen in the Virtualization Requirements document.

• It describes scaling methodologies and reuse rules—including one that states that the only acceptable reusable component is one that is promoted to a full VNF (from VNFC).

In the example case of the composite vEPC, the document describes how the components might connect logically above the hypervisor (SWA-2 reference point) and stipulates that this could be vendor-specific, but seems to imply that they connect over the SWA-defined SWA-5 interface. In turn, SWA-5 is defined as the VNF-NFVI interface, with the functionality of accessing resources that provide a “special function” (eg, video processing card), “storage,” and “network I/O.”

Thus the NFV Architecture (and supporting documents) establishes a general deployment model where individual components always talk through the NFVI. The granularity of a “composite” is not sub-atomic as described. For example, it does not contemplate breaking the SGW functionality into further components, some of which may be reusable (although the potential exists).

The Virtualization Layer description of the NFVI appears to be written prior to the explosion of interest in containers (eg, UML, LXC, Docker) and thus is very hypervisor-centric in its view of virtualization. The document makes note of the fact that it is written in a certain time or place, with a nod to bare metal deployment of software-based VNFs.

While it is understandable that the goal was to be nonspecific to any particular virtualization “layer,” the observation here is that technology in this space has evolved, and is still evolving, rapidly, and these standards need to keep pace. Anyone implementing the technology described therein should be sure to survey the state of the art before making any firm decisions for a deployment.

The SWA document published later in the timeline of ISG publications recognizes an entire spectrum of virtualization, including containers, as does an INF document (The Architecture of the Hypervisor Domain—GS NFV INF 003). The latter states that the hypervisor requirements are similar to those of containers, but more research is needed to define that ecosystem.

In their latest white paper, the ISG provides this summary of the SWA document:

In summary, the NFV Virtual Network Functions Architecture document defines the functions, requirements, and interfaces of VNFs with respect to the more general NFV Architectural Framework. It sets the stage for future work to bring software engineering best practices to the design of VNFs.10

These variations could point to (minor) problems with any document in a traditional standard development organization keeping up with the current pace of innovation.

Architecture—Big Blocks and Reference Points

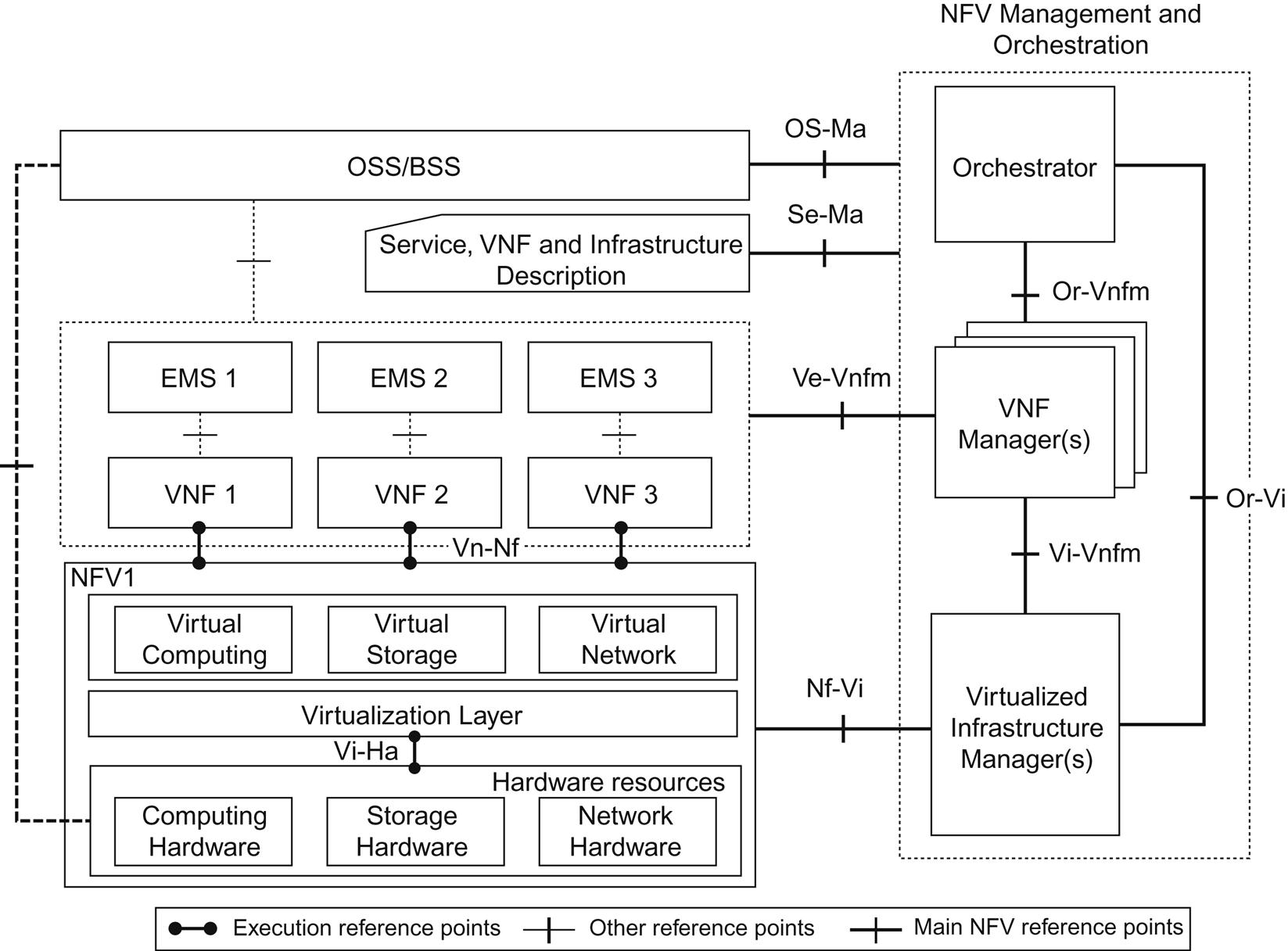

The big blocks in the high-level architecture framework (Fig. 3.3) include a further breakdown of NFV Management and Orchestration (and some external components), which we will visit again in Chapter 5, The NFV Infrastructure Management and Chapter 6, MANO: Management, Orchestration, OSS, and Service Assurance:

• OSS/BSS (Operations Support System/Business Support Systems) are traditionally involved in creating a service (service catalog), maintaining it (FCAPS), and providing business support services (eg, billing). This remains outside of the scope of the architecture except for the Os-Ma interface.

• Service, VNF, and Infrastructure Description: an interface for Orchestration to ingest Forwarding Graph information and information/data models (or templates) for a Network Service catalog, a VNF catalog, the NFV instances, and NFVI resources. This block implies a need for standard information/data models, which is expanded on in the Virtualization Requirements document.

• NFV Orchestrator (NFVO) provides network-wide orchestration and management of both the VNFs and the NFVI. While this can be a proprietary software entity, it is commonly depicted in association with OpenStack.

• The Virtual Network Function Manager (VNFM) provides lifecycle management for the VNF. There are suggestions (eg, in vendor white papers) that the Orchestrator provides a superset of this functionality (advocating a plug-in VNFM architecture to bring in vendor-specific VNFMs or providing the service if the VNF does not have one of its own) as depicted in Fig. 3.4.

• The Virtual Infrastructure Manager (VIM) provides resource allocation notifications for resource management and is responsible for CRUD operations at the VM (host or container) level, including creating and destroying connections between the VMs. It also tracks configuration, image, and usage information. Its interface to the NFVI is where the mapping of physical and virtual resources occurs. In the area of network provisioning, especially where complex topologies (eg, overlays) that go beyond the provisioning of VLAN chains are involved, the VIM is often associated with a network (SDN) controller (also depicted in Fig. 3.4).
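The division of labor among the NFVO, VNFM, and VIM, including the suggested plug-in VNFM arrangement, can be sketched roughly as follows. This is a toy model under stated assumptions and not an API from the ISG documents or from OpenStack; every class, method, and the "ExampleVendor" name are hypothetical.

```python
class GenericVnfm:
    """Fallback lifecycle manager supplied by the orchestrator (assumption)."""

    def instantiate(self, vnf_name):
        return f"generic VNFM instantiated {vnf_name}"


class VendorVnfm:
    """Stand-in for a vendor-specific VNFM plugged into the orchestrator."""

    def __init__(self, vendor):
        self.vendor = vendor

    def instantiate(self, vnf_name):
        return f"{self.vendor} VNFM instantiated {vnf_name}"


class Vim:
    """Tracks how many VMs have been created for each VNF."""

    def __init__(self):
        self.vms = {}

    def create_vms(self, vnf_name, count):
        self.vms[vnf_name] = self.vms.get(vnf_name, 0) + count


class Nfvo:
    """Orchestrator: asks the VIM for resources, then delegates lifecycle."""

    def __init__(self, vim):
        self.vim = vim
        self.plugins = {}
        self.default_vnfm = GenericVnfm()

    def register_vnfm(self, vnf_name, vnfm):
        self.plugins[vnf_name] = vnfm

    def deploy(self, vnf_name, vm_count=1):
        self.vim.create_vms(vnf_name, vm_count)
        # Use the VNF's own manager if one is plugged in, else the fallback.
        vnfm = self.plugins.get(vnf_name, self.default_vnfm)
        return vnfm.instantiate(vnf_name)


vim = Vim()
nfvo = Nfvo(vim)
nfvo.register_vnfm("vEPC", VendorVnfm("ExampleVendor"))

vepc_result = nfvo.deploy("vEPC", vm_count=2)
firewall_result = nfvo.deploy("firewall")
print(vepc_result)
print(firewall_result)
```

The point of the sketch is the fallback in `deploy`: the orchestrator offers lifecycle management itself when a VNF brings no manager of its own.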

The reference points shown in the figure describe and support service creation flows and their portability across infrastructure components.

The architecture illustration shows three types of reference points: execution, main and “other” (with the first two being in-scope and standardization targets).

The model often works as logical flows across the architectural elements via these well-defined interconnections.

The portability chain can be seen in:

• The Vl-Ha interface between the Virtualization Layer and the Hardware Layer is described as providing a hardware-independent execution and operation environment for the VNF.

• The Vn-Nf interface is supposed to provide an independent execution environment, portability, and performance requirements between the VNF Domain and the NFVI in a similarly hardware-independent manner that is also not specific to any control protocol.

This might imply that a standard needs to evolve around how a VM (eg, an application or applications representing a VNF executing in a guest OS) connects to a hypervisor, which may in turn attach that connectivity to an external software or hardware forwarder (within or outside of the hypervisor) through a simple virtualization support interface. The latter may cover container definition, host resource allocation, etc. Or perhaps this should simply be an exercise in documenting the de facto standards in this space, such as VMware, Hyper-V, or KVM.

The flow of information that provides generic resource management, configuration, and state exchange in support of service creation traverses multiple reference points:

• Or-Vnfm interface between Orchestration and VNFM, which configures the VNF and collects service specific data to pass upstream.

• Vi-Vnfm interface between the VIM and the VNFM, which does additional work in virtualized hardware configuration.

• Or-Vi interface between the Orchestration and VIM, which also provides a virtualized hardware configuration information pathway.

• Nf-Vi interface between the NFVI and VIM, which performs physical hardware configuration (mapped to virtual resources).
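The reference points listed above can be treated as labeled edges between functional blocks. The sketch below is only an illustration: the block labels, the dictionary, and the helper names are hypothetical, and the endpoints reflect this sketch's reading of the architecture figure in GS NFV 002, so they should be checked against that document.

```python
# Reference point name -> the two functional blocks it joins (assumed labels).
REFERENCE_POINTS = {
    "Or-Vnfm": ("NFVO", "VNFM"),
    "Vi-Vnfm": ("VIM", "VNFM"),
    "Or-Vi": ("NFVO", "VIM"),
    "Nf-Vi": ("NFVI", "VIM"),
}


def reference_point_between(block_a, block_b):
    """Return the reference point joining two blocks, or None if none does."""
    for name, ends in REFERENCE_POINTS.items():
        if set(ends) == {block_a, block_b}:
            return name
    return None


def flow(blocks):
    """List the reference points crossed by a flow through adjacent blocks."""
    crossed = []
    for a, b in zip(blocks, blocks[1:]):
        name = reference_point_between(a, b)
        if name is None:
            raise ValueError(f"no reference point between {a} and {b}")
        crossed.append(name)
    return crossed


# A resource request that travels from orchestration down to the hardware.
provisioning_flow = flow(["NFVO", "VIM", "NFVI"])
print(provisioning_flow)

# The orchestrator has no direct reference point to the infrastructure.
direct = reference_point_between("NFVO", "NFVI")
print(direct)
```

Modeling the reference points this way makes one property of the architecture easy to see: orchestration reaches the NFVI only through the VIM.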

The architecture suggests two levels of resource allocation, service-specific and VNF-specific.

There are two potential camps emerging around the implementation of this aspect of the architecture. As mentioned previously, there is a view that suggests that the NFVO takes on total responsibility for simplicity, consistency, and pragmatism, as this is how systems such as OpenStack currently function. Others may see the NFVO dividing up its responsibility with the VNFM where the NFVO is responsible only for service-level resources and the VNFM responsible solely for VNF resources.

There are pros and cons to either approach, which devolve into a classic complexity-versus-control argument in which the advocates of the latter view would hope to achieve some higher degree of control in return for a greater cost in complexity.

From a service management perspective, the architecture describes just a single new interface for the OSS/BSS (Os-Ma), and that is to the NFV Orchestrator. This interface connects back-end “business process” systems to the orchestrator. In the reality of today’s systems, this represents an interface between a business process system and OpenStack, VMware, or Hyper-V’s11 north-bound APIs.

The rest of the OSS connectivity appears unchanged in this document. This is represented by the NMS to EMS connection and EMS to VNF connections. There is no mention of any change in the protocols involved in these interactions. The SWA document describes the EMS to VNF connectivity “as defined in ITU-T TMN recommendations.”

Because there is only one new interface here, the bulk of the responsibility for this innovation seems shifted to NFV Orchestration.

The Ve-Vnfm reference point between the EMS/VNF and the VNFM overlaps with the Os-Ma interface with respect to VNF lifecycle management and network service lifecycle management, with the only discernible difference being in configuration.12

The Se-Ma reference point is more of an “ingestion” interface used by the Management and Orchestration system to consume information models related to the Forwarding Graph, services, and NFVI resources. This information can be manifested/understood as service and VM “catalogs.”

The conclusion of the Architectural Framework points to a number of study items that will be fleshed out in the working groups and be returned in the form of their recommendations and gap analysis.

Use Cases

The use case document (GS NFV 001) describes nine use cases and functions well as a standalone document. While it predates some workgroup document publications, it still provides a decent and applicable set of use cases. The reader should keep in mind that the use cases include problem statements and requirements that were to be refined/addressed in the individual WGs. Given this, the use cases are due for an update. Whether this is done in ETSI or the new OPNFV group is still to be determined.

The work starts with a statement of potential benefits that includes three notable items: improved operational efficiencies from common automation, improved power and cooling efficiency through the shedding, migration and consolidation of workloads and the resulting powering down of idle equipment, as well as improved capital efficiencies compared to dedicated equipment (as was the traditional operational model for workloads—one function per physical device/box).
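The consolidation benefit can be illustrated with a small packing exercise. The sketch below uses a first-fit decreasing heuristic, which is our choice for illustration and not something the use case document prescribes; the load figures and the `consolidate` function are made up.

```python
def consolidate(loads, capacity):
    """Pack workloads onto servers using first-fit decreasing."""
    servers = []  # each entry is the list of loads placed on one server
    for load in sorted(loads, reverse=True):
        if load > capacity:
            raise ValueError(f"load {load} exceeds server capacity {capacity}")
        for placed in servers:
            if sum(placed) + load <= capacity:
                placed.append(load)  # fits on an already powered server
                break
        else:
            servers.append([load])  # no room anywhere: power on another
    return servers


# Illustrative CPU loads (percent of one server) for eight functions that
# each used to run on a dedicated box.
loads = [50, 20, 10, 40, 30, 10, 25, 15]

packed = consolidate(loads, capacity=100)
powered_down = len(loads) - len(packed)

print(packed)
print(powered_down)
```

With these made-up numbers the eight functions fit on two servers, so six boxes could be powered down. Real placements would also have to respect memory, I/O, and affinity constraints that this sketch ignores.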

The list below names each use case:

1. NFV Infrastructure as a Service (NFVIaaS)

2. Virtual Network Functions as a Service (VNFaaS)

3. VNF Platform as a Service (VNFPaaS)

4. VNF Forwarding Graph

5. Virtualization of the Mobile Core Network and IMS (vEPC)

6. Virtualization of the Mobile Base Station

7. Virtualization of the residential CPE (vCPE)

8. Virtual Cache (vCDN)

9. Fixed Access Network Virtualization

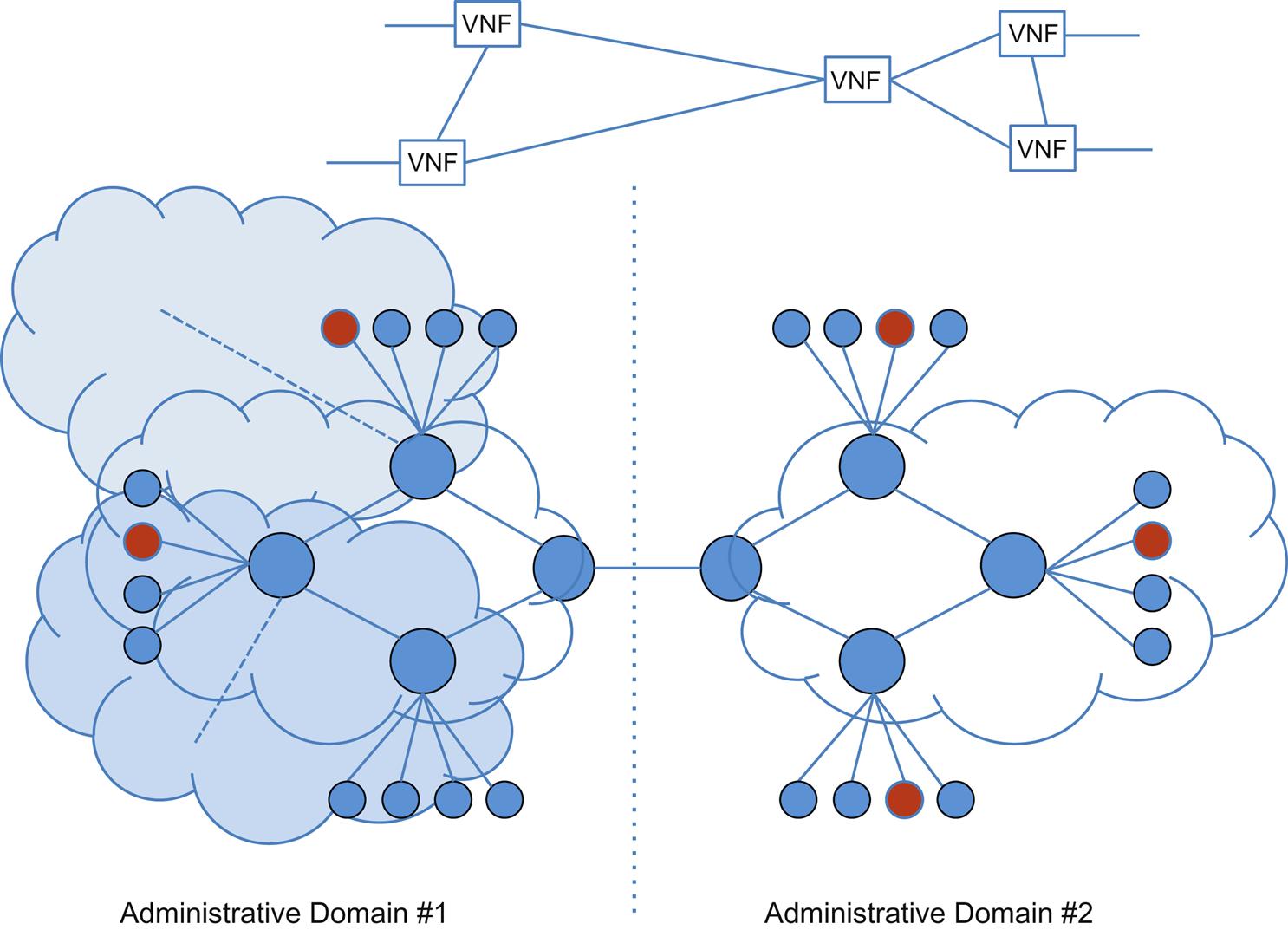

In Fig. 3.5, NFVIaaS (Case 1—NFV Infrastructure as a Service) envisions an environment where one service provider (Administrative Domain #2) runs NFV services on the (cloud) NFVI of another (Administrative Domain #1), creating an offering across NFVIs that have separate administration. The incentives to do so could include (but are not limited to) the superior connectivity (and thus proximity to service clients) or resources (physical or operational) in Domain #1.

This mechanism could potentially address the scenario in Chapter 2, Service Creation and Service Function Chaining, where a Service Provider might want to offer a new service (expand) into another’s territory without the capital outlay of building a large Point of Presence. However, the greater applicability is probably in the extension of an Enterprise domain service into the Service Provider network.

There is an assumption that appropriate authorization, authentication, resource delegation, visibility, and isolation are provided to the client provider by the offering provider’s orchestration and management systems.13

The VNFaaS use case (Case 2, Virtual Network Functions as a Service) proposes a service provider offering an enterprise the ability to run services on the provider’s NFVI, with the manifest example being the vCPE for Enterprise customers. The target virtualized service list includes a Firewall, NG-Firewall (although no distinction between the two is given), PE, WAN optimization, Intrusion Detection, performance monitoring, and Deep Packet Inspection. The distinction from the former (NFVIaaS) is that this is more of a managed service as well as infrastructure: the operator provides not only the infrastructure but the service function itself. There can also be subtle differences in the location of the hosted virtual services (some providers see this as “in my cloud,” while others want to offer “managed X86” on customer premises).

The VNFPaaS case (Case 3, VNF Platform as a Service) is a variant in which the primary operator offers the infrastructure and ancillary services required for the main service function, and the subscriber provides the VNF. Examples of the ancillary functions include external/complementary service functions like load balancing (providing elasticity) or more closely coupled functions like service monitoring/debugging environments and tools.

Similarly, there is a VNF Forwarding Graph use case (Case 4) description that appears to be a macro/generic description of NFV itself.

The use case surrounding virtualization of the Mobile Core Network and IMS (vEPC, Case 5) is interesting in that it echoes the recommendation of prudence (in Chapter 2: Service Creation and Service Function Chaining) around the relocation of services. It describes a potential for full or partial virtualization of the services (there is a long list of components with potential to be virtualized), given that functional characteristics vary across the set and, at least in some cases, seem to argue against full virtualization. The challenges described for this service show a more realistic view of the complications of NFV rather than it being a simple exercise of repackaging an existing service into a VM (or container). In this vein, the use case does attempt to exhaustively list components with potential to be virtualized in this scenario, although it is unclear if this indicates the group’s thinking on future components. Most importantly, the authors stress transparency within the context of network control and management, service awareness, and operational concerns like debugging, monitoring, and recovery of virtualized components.

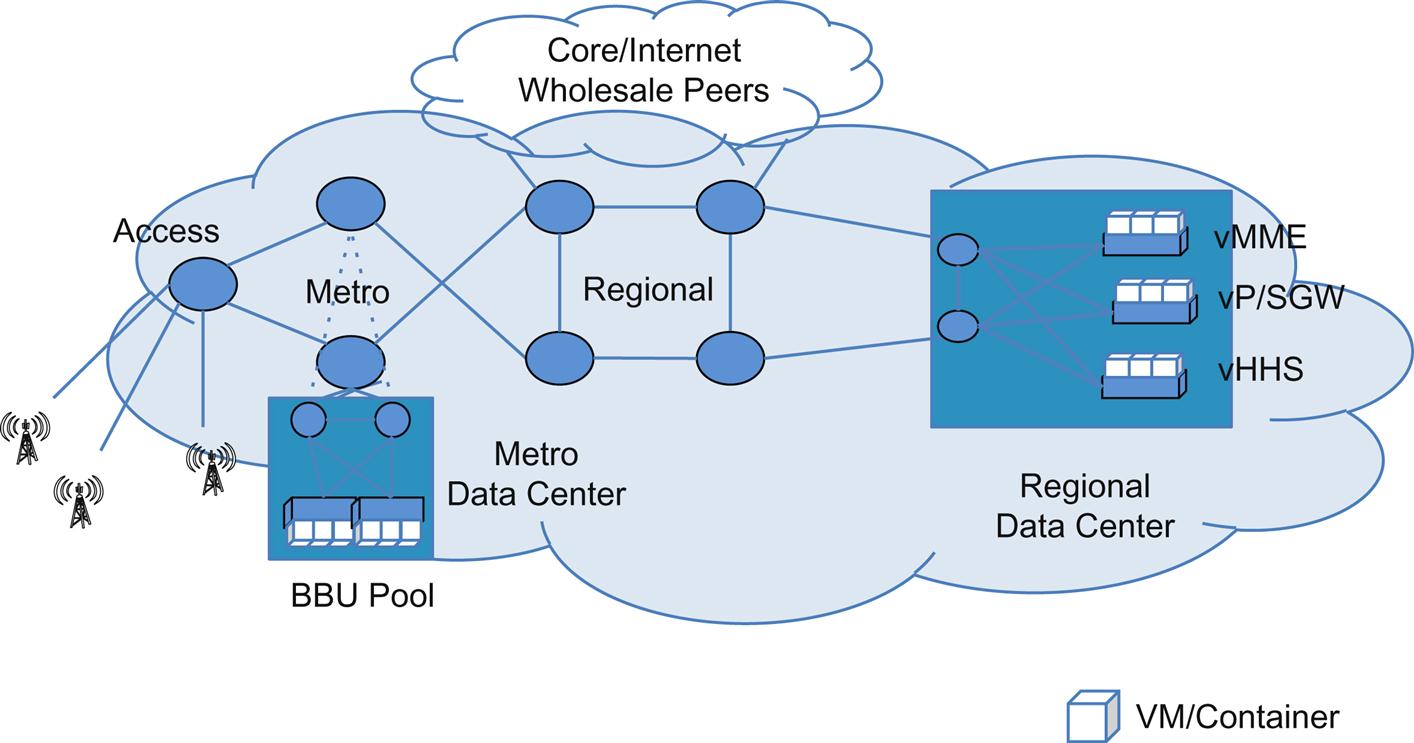

The suggested virtualization of the Mobile Base Station (Case 6) has some of the same justifications as the vCPE example in the VNFaaS case in that the widely dispersed, generally bespoke implementation of the fixed eNodeB is a likely bottleneck to innovation. The virtualization targets described for both the “traditional” RAN and C-RAN include the baseband radio-processing unit (BBU)—which introduces (for the first time within the context of the use cases) a potential need for real-time signal processing!14

Both use case 5 and use case 6 (Fig. 3.6) illustrate the potential intersection and impact of NFV on 3GPP.

Use case 7 describes how a service provider might virtualize a residential service CPE device commonly deployed as either the cable TV or Fiber to the Home (FTTH) set top box (STB) or RGW (Residential Gateway) and relocate some of its more sophisticated functionality into the service provider’s cloud infrastructure (eg, NFVI). Like the vEPC, this service and its virtualization targets are well described, most likely as a result of these cases being some of the most obvious (and thus attractive) to service providers today.

Disappointingly, the virtualized residential service CPE (vCPE) use case does not segue into the potential to virtualize (or offload) the BNG, nor does the later use case for access technologies (use case 9).

The virtual Cache use case (Case 8) brings out one of the fundamental issues with virtualization: the trade-off between operational efficiency gained via virtualization and performance lost due to virtualization (as opposed to bare metal performance15). There is a potential business problem this use case may address in abating the need to host a CDN provider’s proprietary cache inside of a service provider, which is a very specific example of the NFVIaaS use case.

The final use case, Fixed Access Network Virtualization (Case 9), targets the components of hybrid fiber/DSL environments such as fiber-to-the-cabinet (FTTC), which is used to facilitate most high-speed DSL these days, or FTTH. The proposal centers on the separation of the compute intensive parts of the element control plane, but not to the level of centralizing Layer 1 functions such as signal processing, FEC, or pair-bonding. The most interesting issue that NFV raised in this use case is the management of QoS when virtualization is employed.16 In particular, bandwidth management (which is almost always instrumented in the form of packet buffer queues) is particularly tricky to manage in a virtualized environment so that it actually works as specified and as well as it did in a nonvirtualized configuration.
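To make the queueing concern concrete, here is a toy model of a bounded packet buffer that is drained at a fixed byte budget per scheduling tick. It is a sketch under our own assumptions (tail drop, a single FIFO, hypothetical class and parameter names) and not a mechanism taken from the use case document.

```python
from collections import deque


class RateLimitedQueue:
    """Bounded FIFO packet buffer drained at a fixed byte budget per tick."""

    def __init__(self, max_packets, bytes_per_tick):
        self.buffer = deque()
        self.max_packets = max_packets
        self.bytes_per_tick = bytes_per_tick
        self.dropped = 0

    def enqueue(self, size):
        """Queue a packet of `size` bytes; tail-drop when the buffer is full."""
        if size > self.bytes_per_tick:
            raise ValueError("packet larger than one tick's budget")
        if len(self.buffer) >= self.max_packets:
            self.dropped += 1
            return False
        self.buffer.append(size)
        return True

    def tick(self):
        """Send queued packets in order until this tick's budget is spent."""
        budget = self.bytes_per_tick
        sent = []
        while self.buffer and self.buffer[0] <= budget:
            size = self.buffer.popleft()
            budget -= size
            sent.append(size)
        return sent


q = RateLimitedQueue(max_packets=3, bytes_per_tick=3000)
for size in [1500, 1500, 1500, 1500]:
    q.enqueue(size)  # the fourth packet finds the buffer full

first_tick = q.tick()
second_tick = q.tick()
print(q.dropped, first_tick, second_tick)
```

The shaped rate is only as accurate as the timing of `tick`. One plausible reason the problem is harder in a virtualized deployment is that the guest cannot fully control when it is scheduled, which this sketch does not model.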

This use case also mentions the interesting challenge of managing traffic backhaul as it relates to the placement of the virtual resource used to implement the service, specifically when one considers the classic centralized versus distributed cloud model that was referenced in Chapter 2, Service Creation and Service Function Chaining. It is interesting and instructive to consider the particular relationship of these design choices as compared to the overall projected power utilization savings and economic benefits in NFV configuration (as they are not always what they might appear at first glance).

A notable omission from all of this work is some sort of concrete model that documents and validates the assumed cost savings in both power reduction and total cost of ownership that takes into account both the Opex and Capex expense swing expected in the use case.

In fairness, it may be some time before the platform requirements for I/O performance in virtualization for a use case like vCDN can achieve the same performance targets as dedicated hardware. Similarly, the potential cost of designs requiring real-time signal processing can be balanced against the concept of greater economics from nonspecialized hardware achieving some level of performance approaching that of specialized hardware, but there will always be a trade-off to consider. The good news is that technological approaches such as Intel’s DPDK or SR-IOV are making these choices easier every day. In a number of important use cases, such as raw packet forwarding, they raise the network performance of virtualized systems running on commodity hardware to a level very close to that of specialized hardware.

As a final thought around the Use Case document, we encourage the reader to go through it in detail in order to discover what we have covered above. The document reads well on its own, but please keep in mind that it points to the WG documents for redress of some of its unresolved requirements, which will require further investigation, depending on what level of detailed resolution you wish to achieve.

Virtualization Requirements

The Virtualization Requirements document (GS NFV 004) is a broadly scoped document with 11 high-level sections, each of which covers numerous aspects of virtualization relevant to every work group.

We think the reader will find that the document consistently reintroduces the requirement for information models pertaining to almost every aspect of virtualization. This is a particularly welcome addition, as we feel it is critical to the further advancement of this work. The document is divided into a number of sections that focus on key aspects of NFV including performance, elasticity, resiliency, stability, and management and orchestration.

The first section focuses on performance and considers the allocation and management of resources for a VNF as they might fit into the performance requirements set in the VNF information model. This is expressed throughout the remaining sections in the ability to both share and isolate resources. The performance monitoring requirements might actually be addressable through open source software offerings (eg, Ganglia17 or customized derivatives).

The portability requirements (particularly the challenges around binary compatibility) might actually be addressed through the use of container environments (eg, Docker18).

From an ISG perspective, these requirements bubble up from the work of two work groups (MANO and PER). Commenting on performance in their most recent publication, they state:

… corresponding shortcomings of processors, hypervisors, and VIM environments were identified early in the work and have triggered recent developments in Linux kernel, KVM, libvirt, and OpenStack to accommodate NFV scenarios in IT-centric environments.

The NFV Performance & Portability Best Practises document explains how to put these principles into practice with a generic VNF deployment and should be studied in conjunction with the NFV Management and Orchestration document.19

The elasticity section defines this area as a common advantage of and requirement for most NFV deployments. Here again, an information model is suggested to describe the degree of parallelism of the components, the number of instances supported, as well as resource requirements for each component.
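To make the idea of such an information model more concrete, the following is a minimal sketch in Python. It is not taken from the requirements document: the `ComponentModel` class, its field names, and the `sessions_per_instance` capacity figure are all hypothetical, chosen only to show how declared parallelism bounds and per-instance resource needs could drive a scaling decision.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class ComponentModel:
    """Hypothetical descriptor for one VNF component (not an ETSI schema)."""
    name: str
    min_instances: int          # fewest instances that may run
    max_instances: int          # degree of parallelism the component supports
    vcpus_per_instance: int     # resource requirement of a single instance
    sessions_per_instance: int  # assumed capacity of a single instance


def instances_needed(component: ComponentModel, offered_sessions: int) -> int:
    """Return the instance count for a load, clamped to the declared bounds."""
    wanted = ceil(offered_sessions / component.sessions_per_instance)
    return max(component.min_instances, min(component.max_instances, wanted))


def vcpus_needed(component: ComponentModel, offered_sessions: int) -> int:
    """Total vCPUs the component would request at the given load."""
    count = instances_needed(component, offered_sessions)
    return count * component.vcpus_per_instance


# Illustrative values only.
worker = ComponentModel(
    name="session-worker",
    min_instances=2,
    max_instances=8,
    vcpus_per_instance=4,
    sessions_per_instance=10_000,
)
print(instances_needed(worker, 35_000))
print(vcpus_needed(worker, 35_000))
```

The point of the sketch is that an orchestrator can only scale a component sensibly if the bounds and per-instance requirements are declared somewhere it can read them, which is what the requirement for an information model amounts to.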

Elasticity itself is described as either driven by the VNF itself on demand, as the result of a user command, or even programmatically via external input. The first of these can potentially support the case where the VNF is a composite and is deployed with an integrated load balancing function. This was described in the SWA document in the area of design.

There is a requirement for VM mobility in the elasticity section that details the need for the ability to move some or all of the service/VNF components from one compute resource to another. This is reiterated in the OAM requirements later in the document in much the same light.

It does remain to be seen if this is a requirement for a wide array of operational environments or just a corner case. While it is true that many types of VNFs should theoretically be movable, there are many operational and practical caveats to surmount, such as the required bandwidth to move a VNF, locality to other service chain functions on reassignment and/or the feasibility of splitting components in a composite. However, this requirement may drive the realization of some of the claimed power efficiencies of NFV.

The resiliency requirements have obvious aspects for maintaining connectivity between the functions and their management/orchestration systems. There are management facets, like the ability to express resiliency requirements and grouping (of functions) within those requirements. The resiliency section also suggests the use of standards-based, high-performance state replication and the preservation of data/state integrity.

The ISG REL WG published a subsequent, separate paper on resiliency that the ISG summarizes:

the NFV Resiliency Requirements document describes the resiliency problem, use case analysis, resiliency principles, requirements, and deployment and engineering guidelines relating to NFV. It sets the stage for future work to bring software engineering best practices to the design of resilient NFV-based systems.20

There is a recommendation to establish and measure some metric that defines “stability” (and its variance) in the context of maintaining an SLA for a particular VNF. It remains to be seen if this is at all practical to implement, or if vendors can implement it in any consistent way across different VNFs. A number of what we consider difficult measurements are also suggested to measure adherence to an SLA, including: unintentional packet loss due to oversubscription, maximum flow delay variation and latency, and the maximum failure rate of valid transactions (other than invalidation by another transaction).
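The arithmetic behind these measurements is simple. What is hard is collecting the inputs consistently. The sketch below assumes the counters are already available for one measurement interval. The class and field names are hypothetical, and delay variation is taken here as the spread between the largest and smallest latency sample, which is only one of several possible definitions.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class SlaSample:
    """Hypothetical per-interval measurements for one VNF."""
    packets_offered: int
    packets_dropped: int            # unintentional loss, eg, from oversubscription
    latencies_ms: Sequence[float]   # per-flow latency samples
    transactions: int               # valid transactions attempted
    transactions_failed: int


@dataclass(frozen=True)
class SlaLimits:
    """Hypothetical SLA thresholds for the same interval."""
    max_loss_ratio: float
    max_latency_ms: float
    max_delay_variation_ms: float
    max_failure_rate: float


def violations(sample: SlaSample, limits: SlaLimits) -> List[str]:
    """Return the names of the metrics that exceed their limits."""
    if sample.packets_offered <= 0 or sample.transactions <= 0:
        raise ValueError("need at least one packet and one transaction")
    if not sample.latencies_ms:
        raise ValueError("need at least one latency sample")
    measured = {
        "loss_ratio": sample.packets_dropped / sample.packets_offered,
        "latency_ms": max(sample.latencies_ms),
        # Assumption: variation is the peak-to-peak spread of the samples.
        "delay_variation_ms": max(sample.latencies_ms) - min(sample.latencies_ms),
        "failure_rate": sample.transactions_failed / sample.transactions,
    }
    allowed = {
        "loss_ratio": limits.max_loss_ratio,
        "latency_ms": limits.max_latency_ms,
        "delay_variation_ms": limits.max_delay_variation_ms,
        "failure_rate": limits.max_failure_rate,
    }
    return [name for name, value in measured.items() if value > allowed[name]]


# Illustrative values only.
sample = SlaSample(1000, 10, (2.0, 3.5, 2.5), 200, 1)
limits = SlaLimits(0.001, 5.0, 1.0, 0.01)
print(violations(sample, limits))
```

Even in this toy form, the difficulty noted above is visible: distinguishing unintentional drops from policy drops, and valid transactions from invalid ones, has to happen before any of these ratios mean anything.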

Beyond those issues covered in the resiliency section, management aspects are further underscored in the requirements for service continuity and service assurance. These might serve to flesh out the weaker OSS/BSS presence in the general architecture document and further shine a light on the need for often-overlooked capabilities such as consistent service quality and performance measurements.

In this regard, the document interestingly includes the requirement to be able to send time-stamped copies of packets, at some to-be-determined precision, to a configurable destination for monitoring. The mechanism relies on the time stamping capabilities of hardware for precision and performance. Because these are not universal capabilities, this further requires a capability discovery for these functions.

The orchestration and management section lays out fourteen requirements.21 The most interesting of these are potentially in the ability to manage and enforce policies and constraints regarding placement and resource conflicts (which ties into some of the more practical constraints cited in the prior discussion of NSC/SFC).
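As an illustration of what enforcing placement policies and resource constraints could look like, here is a minimal sketch. It is our own simplification rather than anything specified by the ISG: the `Host` and `PlacementRequest` classes, the vCPU-only resource model, and the first-fit search are all assumptions made for brevity.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional, Set


@dataclass
class Host:
    """Hypothetical compute host with a simple vCPU budget."""
    name: str
    free_vcpus: int
    vnfs: Set[str] = field(default_factory=set)


@dataclass(frozen=True)
class PlacementRequest:
    """Hypothetical request: a VNF, its size, and an anti-affinity policy."""
    vnf: str
    vcpus: int
    avoid: FrozenSet[str] = frozenset()  # VNFs that must not share the host


def place(request: PlacementRequest, hosts: List[Host]) -> Optional[Host]:
    """Place on the first host meeting capacity and policy, else return None."""
    for host in hosts:
        if host.free_vcpus < request.vcpus:
            continue  # resource conflict: not enough capacity
        if host.vnfs & request.avoid:
            continue  # policy conflict: anti-affinity violated
        host.free_vcpus -= request.vcpus
        host.vnfs.add(request.vnf)
        return host
    return None


# Illustrative values only.
hosts = [Host("h1", 8), Host("h2", 8)]
first = place(PlacementRequest("fw-a", 4), hosts)
second = place(PlacementRequest("fw-b", 4, frozenset({"fw-a"})), hosts)
print(first.name, second.name)
```

A real orchestrator would weigh many more dimensions (memory, I/O, locality to other functions in the chain), but the shape of the problem is the same: a request is admitted only if every policy and every resource check passes on some host.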

These requirements all serve to underline both the potential fragility and difficulty of managing the performance of an ensemble of virtual functions that might come from one or more vendors.

The other broad area of requirements is in the security and maintenance sections.22 Here, nine requirements are presented under the area of security (many to address risks introduced by virtualization and the numerous interfaces to be verified within the framework) and 16 under maintenance.

The depth and breadth of the requirements underscore how the virtual environment might sacrifice the things commonly found in a telco environment (eg, predictable security and maintenance procedures) in exchange for its massive scale and flexibility.

It is puzzling at best to imagine how the requirements can be mapped onto the rigid expectations of a telco environment. In short, it is like trying to map a “three nines” reality (for the equipment used in NFV) onto a “five nines” expectation (for service availability).
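The size of that gap can be put in rough numbers. The sketch below is back-of-the-envelope arithmetic under a strong assumption, namely that elements fail independently, which real deployments seldom satisfy. The function names are ours and the figures are illustrative.

```python
from typing import Iterable

MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_minutes_per_year(availability: float) -> float:
    """Expected unavailable minutes per year at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def serial_availability(elements: Iterable[float]) -> float:
    """Availability of a chain in which every element must be up."""
    result = 1.0
    for availability in elements:
        result *= availability
    return result


def parallel_availability(element: float, replicas: int) -> float:
    """Availability of independent replicas where any single one suffices."""
    return 1.0 - (1.0 - element) ** replicas


def replicas_for_target(element: float, target: float) -> int:
    """Smallest replica count whose parallel availability meets the target."""
    if not (0.0 < element < 1.0 and 0.0 < target < 1.0):
        raise ValueError("availabilities must lie strictly between 0 and 1")
    replicas = 1
    while parallel_availability(element, replicas) < target:
        replicas += 1
    return replicas


three_nines, five_nines = 0.999, 0.99999
print(downtime_minutes_per_year(three_nines))   # roughly 526 minutes
print(downtime_minutes_per_year(five_nines))    # roughly 5 minutes
print(serial_availability([three_nines] * 3))   # a three-element chain
print(replicas_for_target(three_nines, five_nines))
```

Under these assumptions, a single three nines element is unavailable for about a hundred times longer per year than a five nines service allows, chaining elements in series makes matters worse, and redundancy is the only lever that closes the gap. This is why the resiliency and state replication requirements discussed earlier carry so much weight.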

The sections on energy efficiency and coexistence/transition address issues more directly at the heart of NFV deployment decision-making.

The energy requirements start with a statement that NFV could reduce energy consumption up to 50% when compared to traditional appliances. These claims are according to studies that are not cited in the document. It would be interesting to have the document augmented with these references in the future.

Despite the lack of references, these claims generally rely on the ability of an operator in a virtualized environment to consolidate workloads in response to or anticipation of idle periods. They also assume that the compute infrastructure supports energy saving modes of operation such as stepped power consumption modes, idle or sleep modes, or even lower-power components.23 The claim does not appear to be one of power efficiency over other methodologies during normal/peak operation. However, an assumption of certain peak to mean workload distribution or diurnal behavior may not translate to all services or all network operations.

It seems reasonable that if all these dependencies can be satisfied, there is a potential for savings (with a degree of variance between network designs and operations).
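The consolidation argument can be sketched as a simple bin-packing exercise. The example below uses a first-fit decreasing heuristic, which is our choice for illustration and not something the requirements document prescribes. Loads are expressed as whole percentages of one host’s capacity, and the peak and off-peak figures are invented.

```python
from typing import List, Sequence


def hosts_needed(loads: Sequence[int], host_capacity: int = 100) -> int:
    """First-fit decreasing: count hosts needed to carry the given loads."""
    free: List[int] = []  # remaining capacity of each powered-on host
    for load in sorted(loads, reverse=True):
        if load > host_capacity:
            raise ValueError("a single load exceeds host capacity")
        for index, room in enumerate(free):
            if load <= room:
                free[index] = room - load
                break
        else:
            free.append(host_capacity - load)  # power on another host
    return len(free)


# Invented per-VNF loads, as a percentage of one host.
peak = [60, 50, 40, 70, 30, 50]
off_peak = [15, 10, 10, 20, 5, 10]

busy = hosts_needed(peak)
quiet = hosts_needed(off_peak)
print(busy, quiet, busy - quiet)  # hosts at peak, off-peak, and idle candidates
```

With these invented figures, the hosts left empty off-peak are the ones that could enter a sleep mode. The saving therefore depends entirely on the peak to mean ratio of the workload and on the cost of moving the functions, which are the dependencies noted above.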

Outlining the need for coexistence with existing networks and a transition plan distinguishes the ETSI NFV ISG from organizations like the ONF, which started with the premise that the network is a greenfield and has only later in its lifecycle begun to address “migration.” In this section, the ISG states that the framework shall support a truly heterogeneous environment, both multivendor and mixed physical/virtual appliances. In practice, this means that your mileage will vary with regard to actual power savings, which should only get better as an environment moves to a larger and larger percentage of virtualized assets over time. This claim will be highly dependent on the development of a number of standard interfaces and information models across the spectrum of appliance implementations to be realized.

Finally, the document returns to the theme of models, by discussing deployment, service, and maintenance models. The service models have a focus on the requirements to enable inter-provider overlay applications (eg, NFVIaaS), which seems to indicate that this is a desired use case (at least for some providers).

Gap Analysis

Gap Analysis (NFV-013) consolidates gaps identified by the individual WGs and gives a hint as to where future work in NFV might be located, given the number of gaps and the number of impacted/targeted SDOs.

Drafted later in the Phase 1 document creation cycle, the document functions well as a standalone synopsis of the workgroup(s) findings.

More than one work group can identify gaps for an interface.

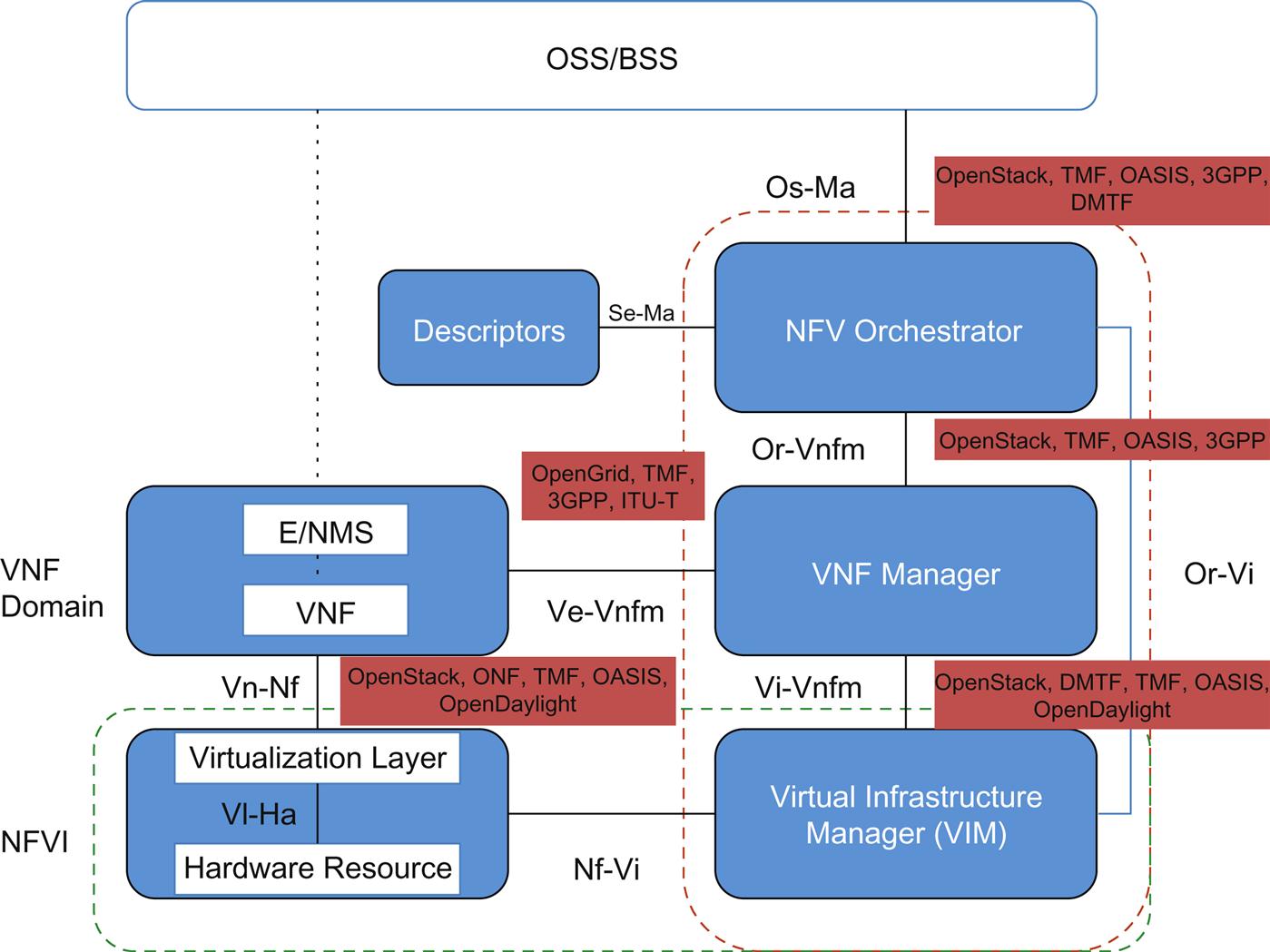

Referring to Fig. 3.7:

The INF WG pointed out several notable gaps. First, they identify the fact that there is no minimum performance requirement or target identified for Nf-Vi or Vi-HA interfaces and there is no common API for hardware accelerators. Another notable gap was the lack of a common interface for VIMs (eg, CloudStack, OpenStack, vSphere, or Hyper-V). This may be addressed via a plug-in facade around VIM selection in the future, but at present none exists. Despite these gaps, the primary focus for this work appears to be in OpenStack.

The MANO WG focused on gaps in resource management (for Vi-Vnfm and Nfvo-Vi interfaces). For starters, they identified the need for resource reservation via a common interface and the need for a common, aggregated resource catalog interface for cases where resource catalogs might be managed by multiple entities. The primary focus for this work was the TM Forum24 and their Framework API and information models. However, the WG also cites necessary work in both the DMTF25 (Distributed Management Task Force) OVF (Open Virtualization Format) specification and OASIS26 TOSCA (Topology and Orchestration Specification for Cloud Applications) modeling technology. Both OpenStack and OpenDaylight Project may play a role here as well.

The PER WG pointed to a number of technologies aimed at boosting performance in processors. These relate to the Vi-HA and Nf-Vi interfaces in the NFV architecture. Specific performance improvements noted include hypervisor-bypass in CPU access to virtual memory, large page use in VMs, hypervisor-bypass for I/O access to virtual memory, large page use for I/O access, I/O interrupt remapping to VM, and the direct I/O access from NIC to CPU cache. Most of this work points to gaps in Libvirt27 as well as vendor uptake of these technologies. Additional work in these areas for the Vi-Vnfm and Service VNF and Infrastructure Descriptor (Se-Ma) is proposed as a series of OpenStack Blueprints that can potentially trigger future work if accepted by the corresponding OpenStack group.

The REL WG took a more generalized approach and pointed to both the IETF (RSerPool) and OpenStack as organizations to push for “carrier grade” solutions for NFV. In the former, they pushed (albeit unsuccessfully) for the creation of a new WG called VNFPool, and in the latter they cite a list of recommendations.

Fortunately, the SEC WG has a very comprehensive gap analysis (they have an overarching role in the architecture) that is in line with their white paper (GS NFV-SEC 001).28 Gaps were identified in the following areas: Topology Validation and Enforcement, Availability of Management Support Infra, Secured Boot, Secured Crash, Performance Isolation, User/Tenant AAA, Authenticated Time Service, Private Keys with Cloned Images, Backdoors via Virtualized Test and Monitoring and Multi-Administrator Isolation. At least two WGs in the IETF have been identified for inputs (ConEx and SFC), as well as the ITU-T (MPLS topology validation), TMF (TM Forum29), ONF, IEEE (time authentication), DMTF, and TCG.

The ISG white paper 3 notes these gaps and sums their work to date:

The NFV Security and Trust Guidance document [4] identifies areas where evolution to NFV will require different technologies, practices, and processes for security and trust. It also gives security guidance on the environment that supports and interfaces with NFV systems and operations.30

The SWA WG provided a gap analysis for VNF, EMS, and VNFC (largely around the Vn-Nf and Ve-Vnfm interfaces).

This work was disappointingly left incomplete in Phase 1. In the period between the publication of the draft of NFV-013 and the recharter at the end of 2014, many documents were finalized.

Unfortunately, the consolidated Gap Analysis (one of the prime promised outcomes of Phase 1) was not, and its draft form was deleted from the ETSI NFV Phase 1 WG documents.

PoC Observations

From a process perspective, the PER Expert Group is tasked with reviewing proposals based on their PoC Framework (GS NFV-PER 002).

It is important to note that the PoC sponsor is responsible for the technical correctness of the PoC.

The PoC Framework and Architecture Framework were published at the same time and the intent was for the PoC to adhere to and explore the different components and reference points of the architecture.

Given the large number of PoCs,31 it is hard to cover each individual PoC here in detail, but there are interesting high-level trends amongst them.

Most PoCs are multivendor and demonstrate interoperable orchestration and management of services either as a vendor ecosystem consisting of both orchestration vendor and partners, or via open source (eg, using OpenStack to provide multivendor orchestration interoperability).

PoC #1 works around the lack of strict definitions, protocols, or examples of the reference points in the architecture framework by using a service integration framework (eg, the CloudNFV Open NFV Framework) that did not require the exposure of APIs.

The vendors involved in that first PoC also put forward their own viewpoints on how service “acceleration” or service optimization should be architected. There were some commonalities, but these were far from a majority, nor were they implemented consistently enough to imply a uniform, standard approach.

This revealed an early and later repeated/enduring focus on the potential weakness of network I/O centric applications in a virtualized environment.

As we stated earlier, in theory performance of virtualized network functions should be easily mapped onto virtualized hardware; in reality, it is not that straightforward. While PoCs based around Intel architectures and their associated specialized execution environments (eg, WindRiver or 6Wind) tend to dominate, there are other PoCs from competitors (eg, AMD or ARM) that proved worth investigating. Notably, PoC #21 focused on a mixture of CPU acceleration and the use of specialized coprocessing on programmable NICs to implement what the PoC labeled “Protocol Oblivious Forwarding.” This represented what effectively was the Next Generation iteration of the OpenFlow architecture. Specifically, the output of the PoC suggested work was required in the Hardware Abstraction Layer.

Many of the PoCs champion a particular SDN technology for creating the Forwarding Graph (otherwise known as the Service Function Chain from Chapter 2), with the greatest emphasis on OpenFlow. Because OpenFlow is readily adaptable to simple PoCs, it was often used. PoC #14 tests the applicability of the IETF FoRCES architecture framework as a means of realizing NFV. Unfortunately, there has been little market momentum to date for FoRCES and so traction resulting from this PoC was limited.

As discussed earlier in Chapter 2, Service Creation and Service Function Chaining, stateful VNF HA is achievable through a variety of mechanisms, and a few PoCs concentrated on demonstrating them.

PoC #12 investigated and demonstrated how a stateful VNF redundancy (potentially georedundancy for a site) is achieved using custom middleware by creating a SIP proxy from a load balancer, a group of SIP servers and “purpose-built” middleware for synchronizing state between these elements across each geographic site. PoC #3 shows a slight twist on the theme of stateful VNF HA, where state is migrated in a vEPC function from one vEPC to another. While not synchronized across sites to form a “live/live” configuration, it showed how live state migrations could be an important operational tool for stateful NFV. Finally, PoC #22 explores VNF elasticity management and resiliency through a number of scenarios using an external monitor as implemented using a WindRiver product.

PoC #3 focused on Virtual Function State Migration and Interoperability and brought some focus to the tooling behind portability. It also demonstrated tool-chain and operating system independence across ISAs while introducing the concept of a Low Level VM compiler. In some respects, this aspect of NFV is now also being addressed by the Linux containerization effort led by companies like Docker (as mentioned in the Virtualization Requirements comments).

To close on the PoCs, we note that the dominant PoC use cases are in the area of mobility and included detailed subcases such as vIMS, vEPC, LTE, RAN optimization, and SGi/Gi-LAN virtualization. Many of the VNFaaS scenarios hint at a carrier view that Enterprise services offload to their cloud may also be an early target.

Like the Use Case document, the PoCs do not expose much of the business/cost models around the solutions, which we feel is a very important driver to any adoption around the NFV technologies. After all, if the business drivers for this technology are not explored in detail, the actual motivation here will only be technological lust.

Finally, while a PoC can test interoperability aspects of the NFV technologies, it must also test their interoperability with the fixed network functions that NFV is replacing, which have existed for decades. While the functionality of those fixed functions has been extensively tested, NFV introduces new ways in which to deploy them. In some cases, older code bases are completely rewritten when offered as a VNF in order to optimize their performance on Intel (or other) hardware. There is no mention of the burden, the cost of integration and testing, or even a testing methodology for these use cases.

A Look Back—White Paper 3

Between the beginning of Open Platform for NFV (OPNFV) and before the final voting was completed on Phase 2, the ETSI NFV ISG published a third white paper (in October 2014). The paper introduction includes a brief justification of continued work (in Phase 2) to “address barriers to interoperability.”

To the credit of the participants, the paper shows that they have realized some of the fundamental points covered in the chapter, particularly concerning the large role OSS will play in an NFV future, the importance of new thinking regarding OSS/BSS, and the need for more focus on operator-desired service models like VNFaaS, NFVIaaS, and VNPaaS (the focus of early Use Cases).

The document also clarifies, in their view, what the ISG thinks the important contributions of the work groups have been (these have been quoted in italics in the chapter) and provides some clarifications on the original premise(s) of ETSI NFV architecture.

To balance this self-retrospective look, it’s interesting to look at the ETSI log of contributions for its first two years.

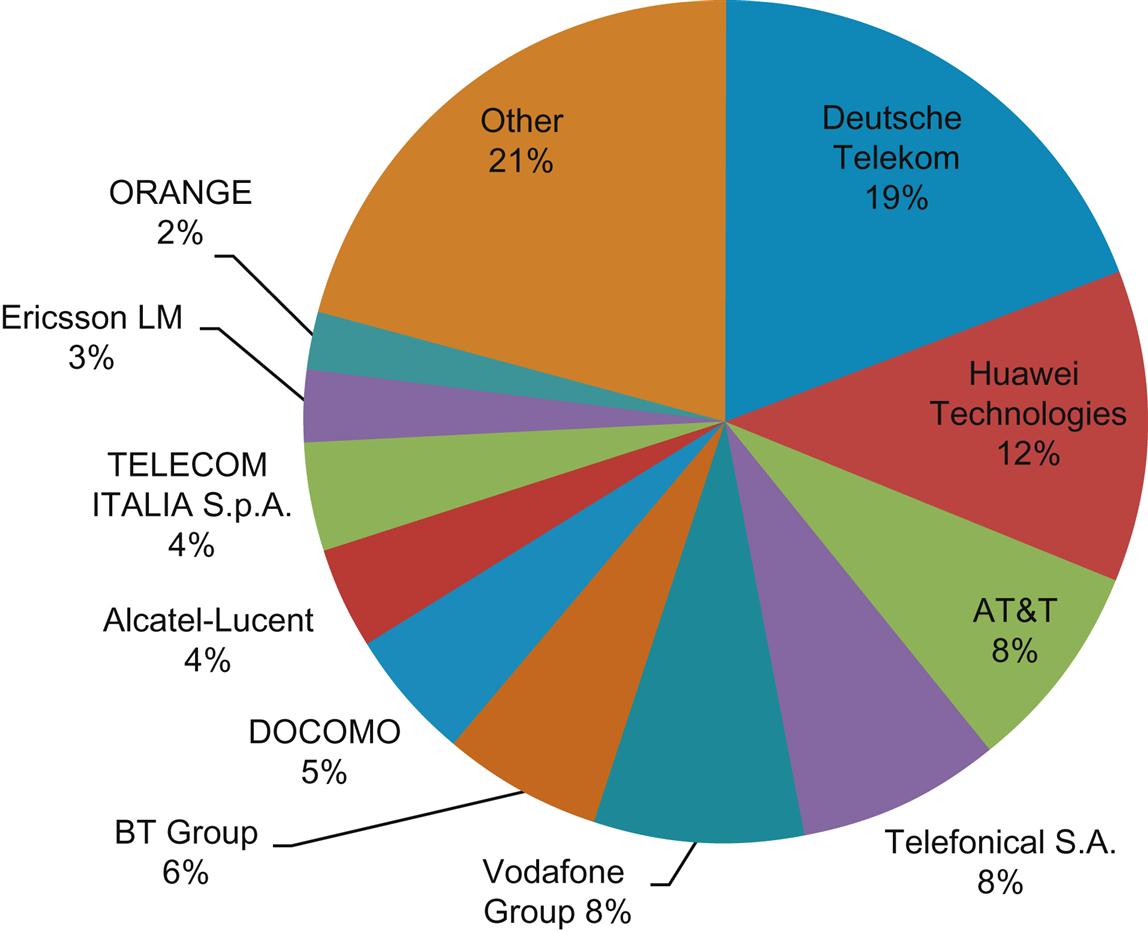

The log shows over one thousand four hundred (1400!) contributions. After eliminating administrative sources (workgroup, chair, etc.), the number is still over five hundred company-specific source contributions. Further, accounting for multiple source entries yields the graph in Fig. 3.8. The graph moves contributors of less than 1% of the total into a summary pool (Other) to maintain readability.
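The reduction described here (drop administrative sources, credit each named company, and fold small contributors into “Other”) can be sketched as follows. This is our own reconstruction of the procedure, not the tooling actually used: the administrative labels, the rule that a multi-source contribution credits each named source once, and the sample entries are all assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence

# Hypothetical labels for administrative sources to be excluded.
ADMIN_SOURCES = {"chair", "workgroup"}


def contribution_shares(
    entries: Iterable[Sequence[str]], threshold: float = 0.01
) -> Dict[str, float]:
    """Return each source's share, pooling those below threshold as 'Other'."""
    counts: Counter = Counter()
    for sources in entries:  # one entry per contribution, listing its sources
        for source in sources:
            if source.lower() not in ADMIN_SOURCES:
                counts[source] += 1  # assumption: one credit per named source
    total = sum(counts.values())
    if total == 0:
        return {}
    shares: Dict[str, float] = {}
    pooled = 0
    for source, count in counts.items():
        if count / total < threshold:
            pooled += count
        else:
            shares[source] = count / total
    if pooled:
        shares["Other"] = pooled / total
    return shares


# Invented entries; the names are placeholders, not real contributors.
log = (
    [["vendor_a"]] * 59
    + [["vendor_a", "operator_b"]]
    + [["operator_b"]] * 38
    + [["vendor_c"]]
    + [["Chair"]] * 5
)
print(contribution_shares(log, threshold=0.05))
```

How multi-source entries are credited changes the resulting shares, so any comparison of vendor and operator influence drawn from such a log should state which rule was used.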

While it says nothing about quality of the contributions, the raw numbers shed interesting light on what voices controlled the dialog and the role of vendors. These can be compared against the original goal of provider control of the forum and the expressed limits on vendor participation. There was an expectation that vendors would contribute, but perhaps not to the extent that any of them would contribute more than the operator principals.

Future Directions

Open Platform for NFV

At NFV#5 (February 2013), a document was circulated proposing the formation of an open source community as the basis for vendors to implement an open source NFV platform/forum. Given that the NFV output documents available at the time of the proposal were relatively high level, the ETSI NFV impact on other SDOs was (to that point) somewhat limited, and the Gap Analysis was not yet complete, there was some expectation that this work (OPNFV) would influence the architecture, protocols, and APIs that needed to evolve for interoperability via a reference implementation.

The forum finally named OPNFV32 was launched in September 2014 under the Linux Foundation, from which the new organization will derive its governance and administrative structure. It has been proposed that any intellectual property generated would be covered by an Apache 2.0 license33 or an Eclipse Public license.34

Similar to the OpenDaylight Project that preceded it, OPNFV has a formal board and TSC hierarchy. However, like any organization under the wing of the Linux Foundation, it is focused on open source code contributions, and is thus truly governed by code committers who form a subset of coders that produce the majority of source code for the project.

Since the organization starts out with no initial codebase, participants provide or create the pieces of the reference platform for VNFs, VNFM, and orchestrator as defined by ETSI NFV ISG. Products could be brought to market on that reference platform. As part of that work, the forum would further define/validate, implement, and test some of the common interfaces that were alluded to in the ETSI NFV architecture.

The platform is expected to be hardware agnostic (eg, Intel vs ARM), and amenable to VMs or bare metal implementations. Since containerization is likely to become a dominant strategy in the near future, container-based implementations are expected to be included.

The reality of the organization is that it is comprised of members from the service provider and vendor communities. As such, service providers generally do not bring traditional software developers to the party, and instead bring testing and interoperability facilities, test plans and documentation as well as a steady stream of guiding requirements and architectural points. In general, vendors will contribute traditional code.

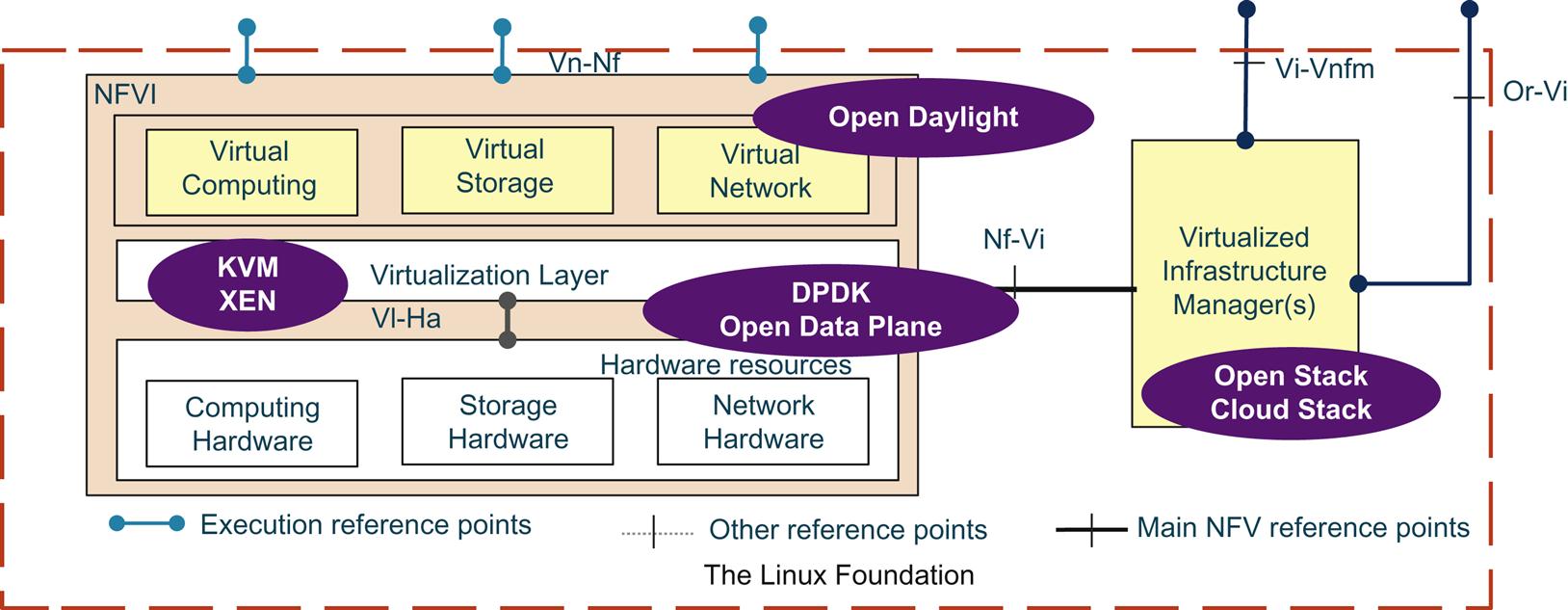

The OPNFV platform’s initial scope for the first release encompasses a subset of the ETSI defined VIM and NFVI as well as their associated reference points (Vi-Vnfm, Vi-HA, Nf-Vi, Vn-Nf, and Or-Vi) as illustrated in Fig. 3.9.

First outputs were seen in the Arno release (June 2015) and the subsequent Brahmaputra release (February 2016).

At this time, the organization has formed requirements, documentation, testing integration, and software platform integration projects.

Much of the community comprising the organization has also taken what appears to be a more pragmatic approach to its first deliverable, and has begun work on an integration project that has some very simple goals: to integrate KVM, OpenStack, and OpenDaylight.

The premise behind this approach is to first build up the infrastructure around the integration project that will support future work. In doing so, it brings the community together to work on a worthwhile project.

It also will run the integrated project through the software integration testing labs provided by the service providers, and finally produce documentation pertaining to the combined project.

The project seems to not need any custom (ie, branched) code changes initially, which is again a pragmatic approach as maintaining separate code bases is not something for the faint of heart or those without a lot of experience. Instead, the project will strive to use “off the shelf” builds of OpenDaylight and OpenStack and simply integrate them together. When changes are needed, attempts will be made to commit patches upstream.

Repatriation/ISG2.0 (and 3.0)

It should be noted that there is no formal linkage between OPNFV and the attempt to reboot the ETSI NFV ISG, although they could be complementary.35

At the same time that the concept of an OPNFV project was being broached, the participants in ETSI NFV were circulating a strawman proposal seeking to extend the charter of the ISG past its early 2015 remit. This proposed repatriation with ETSI NFV as an ISG v2.0, thus extending the life of the group. This proposal ultimately (and perhaps not surprisingly) passed, and Phase 2 of the ETSI NFV workgroup began in January 2015 (and continues to meet quarterly through 2016).

The proposal eliminated some of the permanent Work Groups of the original instantiation in favor of more ad-hoc groups under the oversight of a Steering Board. These would probably be composed similarly to the TSC, with a continuing NOC role. REL and SEC Work Groups remain and new groups TST, EVE, and IFA were added.

The Evolution and Ecosystem Working Group (EVE) has the following responsibilities according to its Terms of Reference (ToR)36:

• Develop feasibility studies and requirements in relation to: (i) new NFV use cases and associated technical features, (ii) new technologies for NFV, and (iii) relationship of NFV with other technologies.

• Develop gap analysis on topics between ISG NFV GSs under its responsibilities and industry standards, including de facto standards.

• Facilitate engagement with research institute and academia to encourage research and teaching curricula on the topic of NFV.

The Testing, Experimentation, and Open Source Working Group (TST) has the following responsibility according to its ToR37:

• Maintaining and evolving the PoC Framework and extending the testing activities to cover interoperability based on ISG NFV specifications.

• Developing specification on testing and test methodologies.

• Coordinating experimentation and showcasing of NFV solutions (eg, PoC Zone).

• Producing PoC case studies (eg, VNF lifecycle), and documenting/reporting the results in various forms (eg, white papers, wikis).

• Feeding feature requests to open source projects and implementation experience results to open source communities, and developing reports (eg, guidelines, best practices) from implementation experience.

• Transferring results to other ISG NFV working groups to ensure consistent delivery of specification through real implementation and testing.

Interfaces and Architecture (IFA) ToR38 gives them the following responsibilities:

• Delivering a consistent consolidated set of information models and information flows (and data models and protocols when necessary) to support interoperability at reference points.

• Refinement of the architecture (including architectural requirements) and interfaces leading to the production of the set of detailed specifications.

• Maintenance of specifications related to architecture and interfaces within the scope of this WG.

• Cooperate with other external bodies to lead to the production of the set of detailed specifications and/or open source code development of the reference points or interfaces to meet the requirements.

• Collaborate with other external bodies as necessary related to the WG activities.

Between them, these groups share almost 30 work items.

The stated main goal of the ETSI NFV ISG reboot is simply to steer the industry toward implementations of NFV as described by the NFV documents, and to make future work “normative” and informative, with the possibility of refining/redefining existing documents into the “normative” category or deriving “normative” specifications from them. As previously mentioned, the IPR prospects would have to be re-evaluated by potential participants.

This may also lead to questions about the relationship of ETSI to other SDOs that are also working on standards or “normative” work in these areas.

The reboot effort may have some undesirable connotations:

Some may see a recharter for another two years with normative documents as a slight devaluation of the outputs of its preceding work.39 This is natural in a move from “study” mode to “implementation” mode.

There may also be a temptation to further refine the work of Phase 1. For example, early proposals from a new Working Group (IFA) consider further decomposition of the NFVO, the VIM, and the VNFM.

Additionally, some observers may also see the rechartered ISG goal of developing an NFV ecosystem and a potential reference implementation as being a significant overlap with the newly-formed OPNFV organization, which fully intends to function in an agile and accelerated way. The slow-moving and sometimes political nature of the original NFV ISG has been cited by some as a reason for preferring to operate within the OPNFV organization.

By the end of 2015, the groups had considered more than two hundred (200) new documents,40 almost half of which were originated by the IFA WG. Participation followed a similar pattern to the prior ISG, with even stronger vendor participation relative to service providers.

Much of the work was centered on modeling (to further define the component interfaces), including a special workshop on Information Modeling, which led to an announcement of further collaboration with other SDOs to get necessary work done (vs imposing a centralized information model)41 with more potential workshops.

Going into 2016, the future of the ISG is again unclear, as the ISG may now be aware of how embarrassing it would be to recharter a third time at the end of 2016. Once again, the longevity and necessity of the specifications may be called into question, unless a smooth handover to some other entity occurs.

Conclusion

At the highest level, the ETSI NFV experiment is a good illustration of the pros and cons of “forum-seeking” (a relatively new behavior) by a user group. Lacking an SDO willing to standardize anything related to NFV, the result of Phase 1 was a “study.”

The proposed marriage between traditional telco-like standards development, the desires of traditional network operators, and the realities of agile, open source implementation (as a means to achieve timely and up-to-date Standards creation) is interesting and challenging. More importantly, the combination could potentially have overcome some of the traditional standards creation hurdles by rapidly creating a single, reference open source framework that could become a de facto standard. At least in Phase 1 of the ETSI NFV ISG, this did not happen.

Outside of their generated white papers, the contribution of the ETSI NFV ISG to ongoing work in NFV and related standards is still unclear.

The resulting ETSI NFV ISG work is, to date, instructive. They have created a lexicon for discussing NFV.

The PoC work has been an early peek at what may be possible with NFV and (importantly) where the interests of operators and vendors may lie (mobility and enterprise managed services).

However, a number of relevant, nontechnical items are left unfinished by the ISG for the operators and the reader to figure out, including the business cases and cost models of the prime use cases. Even the proposed energy savings rely on specific models of operation that may not fit all operator networks. As we have said earlier, the devil is in the details. Unfortunately, details such as economic impact have been overlooked, and these could prove important.

The failure to publish the Gap Analysis (including the erasure of the draft) was also disappointing.

Certainly, the ETSI NFV ISG (v1) outputs will shape some vendor/operator transactions, potentially manifesting as requirements or references in the operator/vendor RFP/RFQ process (particularly for the founding operators).

But, after two years of study, the result appears to have been the recommendation of a great deal of additional work. Specifying the many interfaces still outstanding in their ITU-T-like approach to standardization, as described in their documents, will take much longer and will require interaction with many other independent forums and open source projects. ETSI 2.0 seems to be approaching these as a modeling exercise.

As this work moves to other forums, participants in the newly-minted OPNFV forum may see an overlap with the proposed reinstantiation of the ETSI ISG. The success of either is not yet assured, but if OPNFV can move at the same rapid pace as the OpenDaylight organization has, it may render the new ISG effort moot.

There are already working NFV implementations on the market (and in early trials) that obscure the many yet-to-be-specified interfaces described in the ETSI ISG architecture (ie, they blur the lines between the functional blocks by providing multiple parts of the recommended architecture without formally adopting these reference points).

The advent of open source implementations and their associated rapid iteration may also prove the ETSI architecture moot, at least in part.

This is not the end of the world, as many view the ETSI architecture as a guide, and not a specification for implementation. The architecture has become, as a colleague described it, “a hole into which your jigsaw piece has to fit, but my concern is that it’s a hole into which nothing that works will ever truly fit.”

Consumers may ultimately decide that the level of granularity described in the architecture is not necessary before the targeted contributions required of (and in) other forums are accepted and codified.

Early offerings of service virtualization are not all choosing the chain-of-VMs model behind the original architecture, indicating that there may be room for more than one architectural view (depending on the service offered and the operator environment).

If they prove compelling, these offerings, together with ongoing (and new) open source efforts and other standards bodies, may ultimately decide the level of standardization required for interoperable NFV and how much of the ETSI NFV ISG architecture is relevant.