Experimental methods in cognitive psychology allow us to draw causal conclusions about learning. These conclusions need to be communicated carefully to educational stakeholders, so that the findings are not distorted.

What does it mean to be “evidence based” in one’s approach to education? After all, there are many different types of evidence that one can use to make decisions, or support the decisions one has already made (see Chapter 3, where we discuss confirmation bias – our tendency to seek out information that supports rather than disproves our beliefs). But, what counts as good evidence? The answer to these questions will depend on your values, your background, and your goals. We are writing this book to tell you what we know about learning from a cognitive perspective.

This knowledge reflects our values (i.e., that learning is important); our backgrounds (as cognitive psychologists who apply their work to education); and our goals (communicating with teachers and students about the science of learning). After reading this information presented from our perspective, you, the reader, can then add this new perspective to your arsenal of understanding about learning, and integrate the information you find useful into the way you learn or teach.

Empirical (that is, data-driven) evidence about how we learn runs the gamut from neuroscientific studies in which individual cells in a rat’s brain are stimulated (e.g., Hölscher, Jacob, & Mallot, 2003), to interviews in which descriptions, attitudes, and feelings are gathered from individual teachers or students (Ramey-Gassert, Shroyer, & Staver, 1996). While the former is firmly quantitative (data in the form of numbers are collected), the latter is mostly qualitative (data in the form of words are collected, though sometimes these data can also be quantified for analyses, creating what’s known as a mixed-methods design). In this book, we talk mostly about quantitative data, because those are the data that we tend to collect and analyze. Although we opened this paragraph with the example of single-cell recording in rats, those are not the type of data we will be talking about in this book. Instead, the quantitative data we rely upon typically include students’ performance on various quizzes and assessments, but also students’ self-reports about their learning (e.g., we often ask students to predict how well they have learned the material after studying a particular way [Smith, Blunt, Whiffen, & Karpicke, 2016], or how well they think they did on a test [Weinstein & Roediger, 2010]).

That’s not to say that we think quantitative data are more important than qualitative data – both are critical to understanding how we can positively impact education. For example, we need interviews and focus groups to understand what kinds of strategies are going to be most feasible in the classroom. But only the more highly controlled experimental research allows us to infer what causes learning.

While each of the vast array of different methods has its own place in the process of understanding learning, experimental manipulations (or randomized controlled trials) are needed in order to get at causal relationships.

Cognitive psychologists sometimes use a randomized controlled trial to determine whether something is causing an increase (or decrease) in learning. There are a few things researchers must do when running a randomized controlled trial. First, we need to randomly assign students to different groups. This random assignment helps create groups that are equivalent from the beginning. Second, we need to change something (for example, the type of learning strategy) across the groups, holding everything else as constant as possible. The key here is to isolate the thing we are changing, so that our manipulation is the only systematic difference between the groups. At least one of the groups must also serve as a control group, that is, a group that provides a comparison. (Note, sometimes we can systematically manipulate multiple things at once, but these are more complicated designs.) Finally, we measure learning across the different groups. If we find that our manipulation led to greater learning compared to the control group, and we made sure to conduct the experiment properly with random assignment and appropriate controls, then we can say that our manipulation caused the increase in learning. For example, if we randomly assign students to either sleep all night or stay up all night, and those who stay up all night remember less of what they learned the previous day, we can draw the conclusion that lack of sleep hurts learning (see Walker & Stickgold [2004] for a review of this literature). Of course, we won’t just stop after one experiment. Evidence from one experiment can support conclusions, but it is when evidence converges from many different studies done in many different contexts that we are comfortable making educational recommendations.
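The three steps described above (random assignment, a single manipulation, and measurement) can be illustrated with a small simulation. This is only a sketch under invented assumptions: the function name `run_simulated_trial`, the ability and noise distributions, and the size of the effect are all hypothetical and do not come from any real study.

```python
import random
import statistics


def run_simulated_trial(n_students=200, true_effect=5.0, seed=0):
    """Simulate a two-group randomized controlled trial (all numbers invented)."""
    rng = random.Random(seed)

    # Each hypothetical student has a prior ability the researcher cannot see.
    prior_ability = [rng.gauss(70, 10) for _ in range(n_students)]

    # Step 1: random assignment. Shuffle the students, then split them in half.
    indices = list(range(n_students))
    rng.shuffle(indices)
    half = n_students // 2
    control, treatment = indices[:half], indices[half:]

    # Step 2: the manipulation is the only systematic difference between groups.
    # Step 3: measure learning (score = prior ability + effect + random noise).
    def score(i, manipulated):
        effect = true_effect if manipulated else 0.0
        return prior_ability[i] + effect + rng.gauss(0, 5)

    control_scores = [score(i, False) for i in control]
    treatment_scores = [score(i, True) for i in treatment]

    return {
        # How different were the groups before the manipulation?
        "prior_gap": statistics.mean(prior_ability[i] for i in treatment)
        - statistics.mean(prior_ability[i] for i in control),
        # How different are the groups on the final test?
        "score_gap": statistics.mean(treatment_scores)
        - statistics.mean(control_scores),
    }


if __name__ == "__main__":
    print(run_simulated_trial(n_students=2000))
```

With a large enough sample, the gap in prior ability should be close to zero while the gap in test scores should be close to the simulated effect, which is why the difference can be attributed to the manipulation rather than to pre-existing differences between the groups.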

Experiments can also be conducted in a “within-subjects” design. This means that each individual participating in the experiment is serving as their own control. In these experiments, each person participates in all of the conditions. To make sure that the order of conditions or materials is not affecting the results, the researcher varies the order of conditions and materials across participants in a process called counterbalancing. The researcher then randomly assigns different participants to different versions of the experiment, with the conditions coming up in different orders. There are a number of ways to implement counterbalancing to maintain control in an experiment so that researchers can identify cause-and-effect relationships. The specifics of how to do this are not important for our purposes here. The important thing to note is that, even when participants are in within-subjects experiments and are participating in multiple learning conditions, in order to determine cause and effect we still need to maintain control and rule out alternate explanations for any findings (e.g., order or material effects).
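For readers who are curious about the specifics, one simple scheme is full counterbalancing, in which every possible order of conditions is used equally often. The sketch below is one of several possible approaches, not a description of any particular study; the function name `counterbalance` and the participant and condition labels are hypothetical.

```python
import itertools
import random


def counterbalance(participants, conditions, seed=0):
    """Randomly assign participants to every possible order of conditions."""
    rng = random.Random(seed)

    # All possible orders of the conditions (3 conditions give 6 orders).
    orders = list(itertools.permutations(conditions))

    # Shuffle participants so that who receives which order is left to chance.
    shuffled = list(participants)
    rng.shuffle(shuffled)

    # Cycle through the orders so each one is used (nearly) equally often.
    return {person: orders[k % len(orders)] for k, person in enumerate(shuffled)}


if __name__ == "__main__":
    people = [f"P{i}" for i in range(12)]
    # Condition labels are placeholders for three learning conditions.
    for person, order in sorted(counterbalance(people, ["A", "B", "C"]).items()):
        print(person, order)
```

Full counterbalancing becomes impractical as the number of conditions grows (five conditions already give 120 orders), which is one reason researchers sometimes use only a subset of orders instead.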

Experimental studies can be contrasted with correlational studies, from which we can only conclude that learning co-varies with some other factor. Correlational studies involve measuring two or more variables. The researchers can then look at how related two variables are to one another. If two variables are related, or correlated, then we can use one variable to predict the value of the other. The stronger the correlation, the more accurate our prediction will be. However, correlations do not allow us to determine causality. When we have a correlation, we cannot determine the direction of a causal relationship, and there could also be another variable that is causing both of the study variables to be related.

For example, in a study about sleep and academic achievement in medical students, sleep quality during the semester was correlated with medical board exam grades (Ahrberg, Dresler, Niedermaier, Steiger, & Genzel, 2012). One might conclude that the poor sleep quality is causing lower grades, but there are other possible interpretations. For example, the direction of the causal relationship could be different from what we think. Perhaps having better grades might cause students to relax and sleep better, while poor grades might cause students to be anxious and unable to sleep. There could also be a third variable such as genetics causing both sleep disturbances and poor academic performance. The possibilities are endless, and the correlation does not tell us about the causal nature of the relationship between sleep and academic performance.
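The third-variable explanation can be made concrete with a small simulation. In the sketch below, a hidden factor influences both simulated sleep quality and simulated grades, and the two measures never influence each other. All values are invented for illustration and are not data from Ahrberg et al. (2012); the function names are hypothetical.

```python
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def simulate_third_variable(n_students=5000, seed=1):
    """Correlation between two measures that share only a hidden cause."""
    rng = random.Random(seed)

    # Hypothetical hidden factor (the "third variable"); values are invented.
    hidden = [rng.gauss(0, 1) for _ in range(n_students)]

    # Sleep quality and grades each depend on the hidden factor plus noise.
    # Neither one appears in the formula for the other.
    sleep_quality = [h + rng.gauss(0, 1) for h in hidden]
    grades = [h + rng.gauss(0, 1) for h in hidden]

    return pearson_r(sleep_quality, grades)


if __name__ == "__main__":
    print(round(simulate_third_variable(), 2))
```

Under these assumptions the two measures come out clearly correlated even though neither causes the other, which is exactly the pattern a correlational study cannot distinguish from a true causal link.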

Another problem with correlational studies is that sometimes completely unrelated variables can be correlated just by chance. There is a fun website called Spurious Correlations (www.tylervigen.com/; now also a book, Vigen, 2015) where the creator graphs all sorts of random pairs of variables that happen to produce a correlation. For example, one graph shows ten-year trends for per capita consumption of cheese and the number of lawyers in Hawaii. Perhaps you can think of some reasons why those might be related, but most likely the correlation is due to chance!
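To see how easily chance correlations like these arise, one can generate many unrelated ten-year trends and then search for the most strongly correlated pair. The sketch below assumes each trend is a simple random walk, which is an illustrative choice rather than a model of the cheese or lawyer data; the function names are hypothetical.

```python
import itertools
import math
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def strongest_chance_correlation(n_series=40, n_years=10, seed=2):
    """Largest absolute correlation among unrelated simulated trends."""
    rng = random.Random(seed)

    # Build unrelated trends: each year's value drifts randomly from the last.
    series = []
    for _ in range(n_series):
        level, trend = 0.0, []
        for _ in range(n_years):
            level += rng.gauss(0, 1)
            trend.append(level)
        series.append(trend)

    # Compare every pair of trends and keep the strongest relationship found.
    return max(abs(pearson_r(a, b)) for a, b in itertools.combinations(series, 2))


if __name__ == "__main__":
    print(round(strongest_chance_correlation(), 2))
```

Forty trends yield 780 pairs, so even though every trend is generated independently, the strongest pair will typically look impressively related. Searching through many variables and reporting only the best match works the same way.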

Because experimental studies avoid these issues and more directly point to cause and effect, those are the studies we prefer to use to draw conclusions about learning. However, while many disciplines have used the experimental method, we focus specifically on one of them: cognitive psychology.

Per Capita Consumption of Cheese (US) Correlates with Lawyers in Hawaii

The spurious correlation between per capita consumption of cheese and the number of lawyers in Hawaii. Data from Vigen (2015).

In this book, we focus mainly on findings from cognitive psychology. However, research from this field is sometimes confused with neuroscience, at least in the mainstream media where findings from cognitive psychology are reported with the word “neuroscience” in the title (e.g., see the blog post from Staff Writers at OnlineUniversities.com [2012], which includes many references to “neuroscience” when they are actually referring to findings from cognitive psychology).

The distinction between cognitive psychology and neuroscience is important, but often lost.

A very simple explanation of the differences between cognitive psychology and neuroscience is that cognitive psychology focuses on explanations related to the mind, whereas neuroscience is concerned with figuring out what happens in the brain. For example, cognitive psychologists discuss abstract processes such as encoding, storage, and retrieval when talking about memory and trying to explain why we forget or remember. Neuroscientists, on the other hand, are concerned with pin-pointing those processes in terms of physical activity in the brain, often on quite a detailed level. We feel that cognitive psychology is currently a better knowledge base for teachers and learners from which to extrapolate findings that are applicable to the classroom, because this approach has a longer history from which to draw conclusions, and also because it provides more of an overview of how we learn rather than a very detailed understanding of what that looks like in the brain.

About 20 years ago, an article called “Education and the brain: A bridge too far” (Bruer, 1997) was published. The author argued that findings about the brain were being misapplied to education, with simplifications and misunderstandings of the actual science. We talk more about these misunderstandings in Chapter 4. Bruer suggested that for now we should focus on bridging the gap between cognition and education (the goal of this book!), as well as the gap between cognition and neuroscience. That is, while we know a lot about cognitive processes and a lot about how the brain functions, the two fields are still relatively separate, with insufficient understanding of how cognitive processes map onto the brain.

Neuroscience has discovered a great deal about neurons and synapses, but not nearly enough to guide educational practice. (1997, p. 15)

John Bruer

This was true 20 years ago, and was true eight years ago when we wrote a chapter in a book called Neuroscience in Education: The Good, the Bad, and the Ugly (Roediger, Finn, & Weinstein, 2012), and it is still true today (e.g., see Smeyers [2016] for a similar argument). Having said that, valiant attempts are being made to connect the two fields (Hardt, Einarsson, & Nader, 2010), and there are some basics of memory at the neural level that are useful to understand, which you will find in Chapter 7. You can also read about a more optimistic outlook on the future role of neuroscience in education in a piece by Daniel Ansari and colleagues (Ansari, Coch, & De Smedt, 2011).

I’m optimistic about us making real progress in understanding how children learn and how we can use that information in order to improve education. (2014)

Daniel Ansari

Since we haven’t yet discovered a way to measure mental processes directly, what we do instead is try to observe and measure behavior, and then infer the mental processes from the behavior that we’ve been able to observe and measure. In fact, the field of cognitive psychology evolved directly from behaviorism, in which behavior is observed and measured without cognitive explanations.

Since both cognitive psychology and behaviorism measure behavior (e.g., performance on tests), behavioral studies often converge with cognitive studies in terms of recommendations for teaching and learning (see Markovits & Weinstein [2018] for a review). Behavioral psychology, cognitive psychology, and neuroscience all come from the experimental discipline. We, however, prefer the cognitive approach because it not only provides us with information about what works, but also helps us figure out how and why certain learning strategies work better than others.

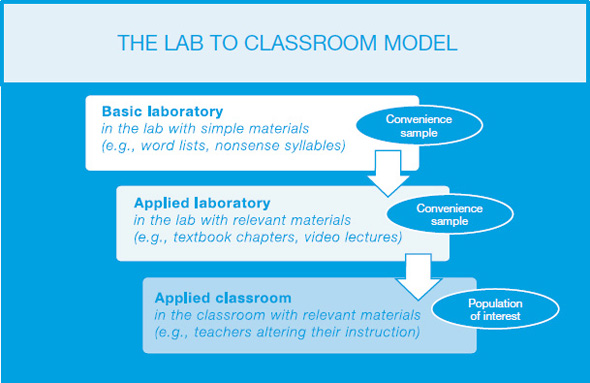

A very important aspect of this process is what we like to call the lab-to-classroom model. A misunderstanding of our discipline could lead one to believe that all cognitive researchers carry out their work in the lab (Black, n.d.). While this is not true, it would be accurate to state that we begin our research in the lab. In what we call the “basic lab level,” participants take part in very simplified tasks, studying very simplified material.

That is, participants might be learning lists of unrelated words, or even nonsense syllables. These materials are highly controlled, and often not much like something you or I might actually want to learn in real life. The context in which the research takes place may also be quite contrived and unrealistic. But the benefit of starting at this level is that we have much more control over the participants’ learning environment. This control allows us to home in on what factors are actually causing (or preventing) learning.

In the lab-to-classroom model, we start at the basic lab level and build up to the classroom.

Once we come across a technique that can be replicated in the lab, the research progresses to something we call the “applied lab level.” At this point, the experiment still takes place in the lab, but now participants will be studying educationally relevant materials instead of the artificially simplified materials. So, they might be reading a chapter from a textbook, watching a lecture, etc. And, once we find that the strategy we examined at the basic lab level works here as well, it is now time to continue onto the classroom level. At this stage, we will actually go into schools, with realistic material, and examine the effectiveness of learning techniques in this realistic context, usually with the help of the teachers.

The reason why we take so long to get to the applied classroom level is that this stage is costly, both in terms of money and, most importantly, in terms of time. We certainly do not want to be wasting teacher and student time with activities and techniques that haven’t been shown to work effectively in the basic and applied laboratory settings! It is also important to note that the process is not linear – we frequently go back to previous levels, especially when a strategy does not work in the classroom as we expected it to. Throughout this book, we will be using examples of research from each level (basic laboratory, applied laboratory, and classroom).

Science communication is becoming increasingly important in the current age of “fake news,” but not all scientists are involved in this practice. For example, we recently examined the science communication behaviors of 327 psychological scientists, and found that only about 5 percent of them were actively engaged in communicating about their science on popular platforms such as Twitter and blogs, despite the negligible financial burden of these activities (Weinstein & Sumeracki, 2017).

This is a problem, because if we – scientists – are not doing the communication ourselves, the results of our research risk getting distorted as they make their way through to teachers and learners.

When research results are communicated, the findings may get distorted.

Moreover, no one study can give us definitive information about how we learn; evidence from different studies can sometimes be contradictory, making it hard to draw conclusions. For example, while there is a base of research showing that students benefit from immediate feedback (Epstein, Epstein, & Brosvic, 2001), more recent research suggests that delaying feedback could be more helpful in some situations (Butler, Karpicke, & Roediger, 2007).

The process goes as follows: data are collected and written up by scientists. Eventually, the write-up of the data is accepted for publication in a peer-reviewed academic journal. After publication, the paper may be picked up by mainstream media, and then people will pass their impression of the media coverage along to other people. Unfortunately, however, the media coverage may have introduced errors or misunderstandings into its interpretation of the science (see Chapter 4), and these errors can be further exacerbated through word of mouth.

As such, and as we already mentioned in Chapter 1, we believe that it is very important for us as scientists to keep communicating about our research findings. Of course, books, blogs, and even the media can be helpful, but all need to be consumed critically.

In this book, we cite a variety of sources, ranging from academic journal articles to blog posts. However, where we cite blog posts, we have checked to make sure that they are describing the research accurately. When you are reading other pieces, you might want to ask yourself: are the authors of these works reading and interpreting or maybe even conducting the research itself – for example, as is the case in the book “Make It Stick” by Brown, Roediger, and McDaniel (2014) – or are they relying on secondary sources (such as other books and blogs!) to draw conclusions? And, always stay alert for overextensions and misunderstandings, such as those we discuss in Chapter 4.

A large number of disciplines contribute to our understanding of how we learn. We focus specifically on cognitive psychology, which is an experimental discipline and thus provides the strongest evidence for causal conclusions (that is, predicting rather than merely describing). In our discipline, we start by running experiments in a lab (known as basic research), and then increase the relevance of the materials and settings involved in the study (applied research), eventually taking our research to the classroom. But, our job does not stop there – we need to also communicate the research findings beyond academia, in order to help prevent and resolve misunderstandings and misinterpretations.

Ahrberg, K., Dresler, M., Niedermaier, S., Steiger, A., & Genzel, L. (2012). The interaction between sleep quality and academic performance. Journal of Psychiatric Research, 46, 1618–1622.

Ansari, D. (2014). Daniel Ansari – The Science Network Interview [YouTube video]. Retrieved from https://youtu.be/vvLsQ29RQtg

Ansari, D., Coch, D., & De Smedt, B. (2011). Connecting education and cognitive neuroscience: Where will the journey take us? Educational Philosophy and Theory, 43, 37–42.

Black, C. (n.d.). Science/fiction: How learning styles became a myth. Retrieved from http://carolblack.org/science-fiction/

Brown, P. C., Roediger, H. L., & McDaniel, M. A. (2014). Make it stick: The science of successful learning. Cambridge, MA: Harvard University Press.

Bruer, J. T. (1997). Education and the brain: A bridge too far. Educational Researcher, 26, 4–16.

Butler, A. C., Karpicke, J. D., & Roediger III, H. L. (2007). The effect of type and timing of feedback on learning from multiple-choice tests. Journal of Experimental Psychology: Applied, 13, 273–281.

Epstein, M. L., Epstein, B. B., & Brosvic, G. M. (2001). Immediate feedback during academic testing. Psychological Reports, 88, 889–894.

Hardt, O., Einarsson, E. Ö., & Nader, K. (2010). A bridge over troubled water: Reconsolidation as a link between cognitive and neuroscientific memory research traditions. Annual Review of Psychology, 61, 141–167.

Hölscher, C., Jacob, W., & Mallot, H. A. (2003). Reward modulates neuronal activity in the hippocampus of the rat. Behavioural Brain Research, 142, 181–191.

Markovits, R., & Weinstein, Y. (2018). Can cognitive processes help explain the success of instructional techniques recommended by behavior analysts? npj Science of Learning.

Ramey-Gassert, L., Shroyer, M. G., & Staver, J. R. (1996). A qualitative study of factors influencing science teaching self-efficacy of elementary level teachers. Science Education, 80, 283–315.

Roediger, H. L., Finn, B., & Weinstein, Y. (2012). Improving metacognition to enhance educational practice. In S. Della Sala & M. Anderson (Eds.), Neuroscience in education: The good, the bad, and the ugly (pp. 128–151). Oxford: Oxford University Press.

Smeyers, P. (2016). Neurophilia: Guiding educational research and the educational field? Journal of Philosophy of Education, 50, 62–75.

Smith, M. A., Blunt, J. R., Whiffen, J. W., & Karpicke, J. D. (2016). Does providing prompts during retrieval practice improve learning? Applied Cognitive Psychology, 30, 544–553.

Staff Writers (2012). 9 signs that neuroscience has entered the classroom. OnlineUniversities.com. Retrieved from www.onlineuniversities.com/blog/2012/06/9-signs-that-neuroscience-has-entered-classroom/

Vigen, T. (2015). Spurious correlations. Hachette Books.

Walker, M. P., & Stickgold, R. (2004). Sleep-dependent learning and memory consolidation. Neuron, 44, 121–133.

Weinstein, Y., & Roediger, H. L. (2010). Retrospective bias in test performance: Providing easy items at the beginning of a test makes students believe they did better on it. Memory & Cognition, 38, 366–376.

Weinstein, Y., & Sumeracki, M. A. (2017). Are Twitter and blogs important tools for the modern psychological scientist? Perspectives on Psychological Science, 12, 1171–1175.