what is a survey?

what is a survey?Surveys, longitudinal, cross-sectional and trend studies |

CHAPTER 13 |

There are many different kinds of survey; each has its own characteristics and key issues. We set these out in this chapter, addressing such matters as:

what is a survey?

what is a survey?

some preliminary considerations

some preliminary considerations

planning a survey

planning a survey

low response and non- response, and how to reduce them

low response and non- response, and how to reduce them

survey sampling

survey sampling

longitudinal, cross- sectional and trend studies

longitudinal, cross- sectional and trend studies

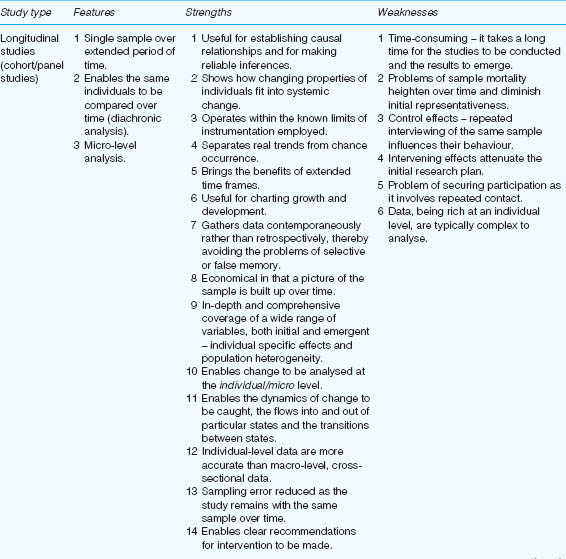

strengths and weaknesses of longitudinal, cohort and cross- sectional studies

strengths and weaknesses of longitudinal, cohort and cross- sectional studies

postal, interview and telephone surveys

postal, interview and telephone surveys

internet- based surveys

internet- based surveys

comparing methods of data collection in surveys

comparing methods of data collection in surveys

We advise readers to take this chapter in conjunction with the chapters on sampling, questionnaires, interviews and data analysis techniques. Many researchers reading this book will probably be studying for higher degrees within a fixed and maybe short time frame; that may render longitudinal study out of the question for them. Nevertheless longitudinal study is an important type of research, and we introduce it here. More likely, researchers for higher degrees will find cross- sectional survey research appropriate, and it is widely used in higher degree research.

Many educational research methods are descriptive; that is they set out to describe and to interpret what is. Such studies look at individuals, groups, institutions, methods and materials in order to describe, compare, contrast, classify, analyse and interpret the entities and the events that constitute their various fields of enquiry. We deal here with several types of descriptive survey research, including longitudinal, cross- sectional and trend or prediction studies.

Typically, surveys gather data at a particular point in time with the intention of describing the nature of existing conditions, or identifying standards against which existing conditions can be compared, or determining the relationships that exist between specific events. Thus, surveys may vary in their levels of complexity from those which provide simple frequency counts to those which present relational analysis.

Surveys may be further differentiated in terms of their scope. A study of contemporary developments in post-secondary education, for example, might encompass the whole of Western Europe; a study of subject choice, on the other hand, might be confined to one secondary school. The complexity and scope of surveys in education can be illustrated by reference to familiar examples.

A survey has several characteristics and several claimed attractions; typically it is used to scan a wide field of issues, populations, programmes, etc. in order to measure or describe any generalized features. It is useful (Morrison, 1993: 38–40) in that it usually:

gathers data on a one- shot basis and hence is economical and efficient;

gathers data on a one- shot basis and hence is economical and efficient;

represents a wide target population (hence there is a need for careful sampling, see Chapter 8);

represents a wide target population (hence there is a need for careful sampling, see Chapter 8);

generates numerical data;

generates numerical data;

provides descriptive, inferential and explanatory information;

provides descriptive, inferential and explanatory information;

manipulates key factors and variables to derive frequencies (e.g. the numbers registering a particular opinion or test score);

manipulates key factors and variables to derive frequencies (e.g. the numbers registering a particular opinion or test score);

gathers standardized information (i.e. using the same instruments and questions for all participants);

gathers standardized information (i.e. using the same instruments and questions for all participants);

ascertains correlations (e.g. to find out if there is any relationship between gender and scores);

ascertains correlations (e.g. to find out if there is any relationship between gender and scores);

presents material which is uncluttered by specific contextual factors;

presents material which is uncluttered by specific contextual factors;

captures data from multiple choice, closed questions, test scores or observation schedules;

captures data from multiple choice, closed questions, test scores or observation schedules;

supports or refutes hypotheses about the target population;

supports or refutes hypotheses about the target population;

generates accurate instruments through their piloting and revision;

generates accurate instruments through their piloting and revision;

makes generalizations about, and observes patterns of response in, the targets of focus;

makes generalizations about, and observes patterns of response in, the targets of focus;

gathers data which can be processed statistically;

gathers data which can be processed statistically;

usually relies on large- scale data gathering from a wide population in order to enable generalizations to be made about given factors or variables.

usually relies on large- scale data gathering from a wide population in order to enable generalizations to be made about given factors or variables.

Examples of surveys1 are:

opinion polls (which refute the notion that only opinion polls can catch opinions);

opinion polls (which refute the notion that only opinion polls can catch opinions);

test scores (e.g. the results of testing students nationally or locally);

test scores (e.g. the results of testing students nationally or locally);

students’ preferences for particular courses (e.g. humanities, sciences);

students’ preferences for particular courses (e.g. humanities, sciences);

reading surveys (e.g. Southgate et al.’s example of teaching practices in 1981 in the United Kingdom).

reading surveys (e.g. Southgate et al.’s example of teaching practices in 1981 in the United Kingdom).

The websites for the National Child Development Study (NCDS) can be found at www.cls.ioe.ac.uk/text. asp?section=000100020003 and www.cls.ioe.ac.uk/.

Websites for the Centre for Longitudinal Studies (CLS) can be found at www.cls.ioe.ac.uk/, which includes the British Cohort Study and the Millennium Cohort Study.

Surveys in education often use test results, self-completion questionnaires and attitude scales. A researcher using this model typically will be seeking to gather large- scale data from as representative a sample population as possible in order to say with a measure of statistical confidence that certain observed characteristics occur with a degree of regularity, or that certain factors cluster together (see Chapter 37) or that they correlate with each other (correlation and covariance), or that they change over time and location (e.g. results of test scores used to ascertain the ‘value- added’ dimension of education, maybe using regression analysis and analysis of residuals to determine the difference between a predicted and an observed score), or regression analysis to use data from one variable to predict an outcome on another variable.

Surveys can be exploratory, in which no assumptions or models are postulated, and in which relationships and patterns are explored (e.g. through correlation, regression, stepwise regression and factor analysis). They can also be confirmatory, in which a model, causal relationship or hypothesis is tested (see the discussion of exploratory and confirmatory analysis in Chapter 37). Surveys can be descriptive or analytic (e.g. to examine relationships). Descriptive surveys simply describe data on variables of interest, whilst analytic surveys operate with hypothesized predictor or explanatory variables that are tested for their influence on dependent variables.

Most surveys will combine nominal data on participants’ backgrounds and relevant personal details with other scales (e.g. attitude scales, data from ordinal, interval and ratio measures). Surveys are useful for gathering factual information, data on attitudes and preferences, beliefs and predictions, opinions, behaviour and experiences – both past and present (Weisberg et al., 1996; Aldridge and Levine, 2001).

The attractions of a survey lie in its appeal to generalizability or universality within given parameters, its ability to make statements which are supported by large data banks and its ability to establish the degree of confidence which can be placed in a set of findings.

On the other hand, if a researcher is concerned to catch local, institutional or small- scale factors and variables – to portray the specificity of a situation, its uniqueness and particular complexity, its interpersonal dynamics, and to provide explanations of why a situation occurred or why a person or group of people returned a particular set of results or behaved in a particular way in a situation, or how a programme changes and develops over time, then a survey approach is probably unsuitable. Its degree of explanatory potential or fine detail is limited; it is lost to broad brush generalizations which are free of temporal, spatial or local contexts, i.e. its appeal largely rests on the basis of positivism. The individual instance is sacrificed to the aggregated response (which has the attraction of anonymity, non- traceability and confidentiality for respondents).

Surveys typically, though by no means exclusively, rely on large- scale data, e.g. from questionnaires, test scores, attendance rates, results of public examinations, etc., all of which enable comparisons to be made over time or between groups. This is not to say that surveys cannot be undertaken on a small- scale basis, as indeed they can; rather it is to say that the generalizability of such small- scale data will be slight. In surveys the researcher is usually very clearly an outsider, indeed questions of reliability must attach themselves to researchers conducting survey research on their own subjects, e.g. participants in a course that they have been running (e.g. Bimrose and Bayne (1995) and Morrison (1997)). Further, it is critical that attention is paid to rigorous sampling, otherwise the basis of the survey’s applicability to wider contexts is seriously undermined. Non- probability samples tend to be avoided in surveys if generalizability is sought; probability sampling will tend to lead to generalizability of the data collected.

Three prerequisites to the design of any survey are: the specification of the exact purpose of the enquiry; the population on which it is to focus; and the resources that are available. Hoinville and Jowell’s (1978) consideration of each of these key factors in survey planning can be illustrated in relation to the design of an educational enquiry.

First, a survey’s general purpose must be translated into a specific central aim. Thus, ‘to explore teachers’ views about in- service work’ is somewhat nebulous, whereas ‘to obtain a detailed description of primary and secondary teachers’ priorities in the provision of in- service education courses’ is reasonably specific.

Having decided upon and specified the primary objective of the survey, the second phase of the planning involves the identification and itemizing of subsidiary topics that relate to its central purpose. In our example, subsidiary issues might well include: the types of courses required; the content of courses; the location of courses; the timing of courses; the design of courses; and the financing of courses.

The third phase follows the identification and itemization of subsidiary topics and involves formulating specific information requirements relating to each of these issues. For example, with respect to the type of courses required, detailed information would be needed about the duration of courses (one meeting, several meetings, a week, a month, a term or a year), the status of courses (non- award bearing, award bearing, with certificate, diploma, degree granted by college or university), the orientation of courses (theoretically oriented involving lectures, readings, etc., or practically oriented involving workshops and the production of curriculum materials).

As these details unfold, note Hoinville and Jowell (1978), consideration would have to be given to the most appropriate ways of collecting items of information (interviews with selected teachers, postal questionnaires to selected schools, etc.).

The second prerequisite to survey design, the specification of the population to which the enquiry is addressed, affects decisions that researchers must make both about sampling and resources. In our hypothetical survey of in- service requirements, for example, we might specify the population as ‘those primary and secondary teachers employed in schools within a 30- mile radius of Loughborough University’. In this case, the population is readily identifiable and, given sufficient resources to contact every member of the designated group, sampling decisions do not arise. Things are rarely so straightforward, however. Often the criteria by which populations are specified (‘severely challenged’, ‘under- achievers’, ‘intending teachers’ or ‘highly anxious’) are difficult to operationalize. Populations, moreover, vary considerably in their accessibility; pupils and student teachers are relatively easy to survey, gypsy children and headteachers are more elusive. More importantly, in a large survey researchers usually draw a sample from the population to be studied; rarely do they attempt to contact every member. We deal with the question of sampling shortly.

The third important factor in designing and planning a survey is the financial cost. Sample surveys are labour-intensive (see Davidson, 1970), the largest single expenditure being the fieldwork where costs arise out of the interviewing time, travel time and transport claims of the interviewers themselves. There are additional demands on the survey budget. Training and supervising the panel of interviewers can often be as expensive as the costs incurred during the time that they actually spend in the field. Questionnaire construction, piloting, printing, posting, coding, together with computer programming – all eat into financial resources.

Proposals from intending education researchers seeking governmental or private funding are often weakest in the amount of time and thought devoted to a detailed planning of the financial implications of the projected enquiries. (In this chapter we confine ourselves from this point to a discussion of surveys based on self- completion questionnaires. A full account of the interview as a research technique is given in Chapter 21.)

There are two main issues to be addressed here:

1 Will the researcher be completing the survey by entering data, or will the participants be self-administering the survey?

2 How will the survey be administered, e.g. a postal survey, a telephone survey, an internet survey, by face- to-face interviews, by email?

There can be a significant difference in the responses gained from self- reporting and those obtained from face- to-face survey interviews or telephone interviews (Dale 2006: 145). Many surveys ask respondents not only to administer the questionnaires themselves but also to report on themselves. This may introduce bias, as respondents may under- report (e .g. to avoid socially undesirable responses) or over- report (to give socially desirable answers). Self- reporting also requires the researcher to ensure that respondents all understand the question, understand it in the same way and understand it in the way intended by the researcher (Kenett, 2006: 406). The difficulty here is that words are inherently ambiguous (see Chapter 20 on questionnaire design), so the researcher should be as specific as possible. The researcher should also indicate how much contextual information the respondent should provide, what kind of answer is being sought (so that the respondent knows how to respond appropriately), how much factual detail is required, and what constitutes relevant and irrelevant data (e.g. the level of detail or focus on priority issues required) (Kenett, 2006: 407–8). Further, surveys that rely on respondents’ memory may be prone to the bias of forgetting or selective recall.

Whether the survey is large scale and undertaken by some governmental bureau or small scale and carried out by the lone researcher, the collection of information typically involves one or more of the following data- gathering techniques: structured or semi-structured interviews, self- completion or postal questionnaires, telephone interviews, internet surveys, standardized tests of attainment or performance, and attitude scales. Typically, too, surveys proceed through well- defined stages, though not every stage outlined in Figure 13.1 is required for the successful completion of a survey.

The process moves from the general to the specific. A general research topic is broken down into complementary issues and questions, and, for each component, questions are set. As will be discussed in questionnaires (Chapter 20), it is important, in the interests of reliability and validity, to have several items or questions for each component issue, as this does justice to the all- round nature of the topic. Sapsford (1999: 34–40) suggests that there are four main considerations in planning a survey:

problem definition (e.g. deciding what kinds and contents of answers are required; what hypotheses there are to be tested; what variables there are to explore);

problem definition (e.g. deciding what kinds and contents of answers are required; what hypotheses there are to be tested; what variables there are to explore);

sample selection (e.g. what is the target population; how can access and representativeness be assured; what other samples will need to be drawn for the purpose of comparison);

sample selection (e.g. what is the target population; how can access and representativeness be assured; what other samples will need to be drawn for the purpose of comparison);

design of measurements (e.g. what will be measured, and how (i.e. what metrics will be used – see Chapter 20 on questionnaires); what variables will be required; how reliability and validity will be assured);

design of measurements (e.g. what will be measured, and how (i.e. what metrics will be used – see Chapter 20 on questionnaires); what variables will be required; how reliability and validity will be assured);

concern for participants (e.g. protection of confidentiality and anonymity; avoidance of pain to the respondents; avoiding harm to those who might be affected by the results; avoiding over- intrusive questions; avoiding coercion; informed consent – see Chapter 5 on ethics).

concern for participants (e.g. protection of confidentiality and anonymity; avoidance of pain to the respondents; avoiding harm to those who might be affected by the results; avoiding over- intrusive questions; avoiding coercion; informed consent – see Chapter 5 on ethics).

A 14- stage process of planning a survey can be considered:

Stage 1: define the objectives;

Stage 2: decide the kind of survey required (e.g. longitudinal, cross- section, trend study; cohort study);

Stage 3: formulate research questions or hypotheses (if appropriate): the null hypothesis and alternative hypothesis;

Stage 4: decide the issues on which to focus;

Stage 5: decide the information that is needed to address the issues;

Stage 6: decide the sampling required;

Stage 7: decide the instrumentation and the metrics required;

Stage 8: generate the data collection instruments;

Stage 9: decide how the data will be collected (e.g. postal survey, interviews);

Stage 10: pilot the instruments and refine them;

Stage 11: train the interviewers (if appropriate);

Stage 12: collect the data;

Stage 13: analyse the data;

Stage 14: report the results.

Rosier (1997: 154–62) suggests that the planning of a survey will need to include clarification of:

the research questions to which answers need to be provided;

the research questions to which answers need to be provided;

the conceptual framework of the survey, specifying in precise terms the concepts that will be used and explored;

the conceptual framework of the survey, specifying in precise terms the concepts that will be used and explored;

operationalizing the research questions (e.g. into hypotheses);

operationalizing the research questions (e.g. into hypotheses);

the instruments to be used for data collection, e.g. to chart or measure background characteristics of the sample (often nominal data), academic achievements (e.g. examination results, degrees awarded), attitudes and opinions (often using ordinal data from rating scales) and behaviour (using observational techniques);

the instruments to be used for data collection, e.g. to chart or measure background characteristics of the sample (often nominal data), academic achievements (e.g. examination results, degrees awarded), attitudes and opinions (often using ordinal data from rating scales) and behaviour (using observational techniques);

sampling strategies and subgroups within the sample (unless the whole population is being surveyed, e.g. through census returns or nationally aggregated test scores etc.);

sampling strategies and subgroups within the sample (unless the whole population is being surveyed, e.g. through census returns or nationally aggregated test scores etc.);

pre-piloting the survey;

pre-piloting the survey;

piloting the survey;

piloting the survey;

data collection practicalities and conduct (e.g. permissions, funding, ethical considerations, response rates);

data collection practicalities and conduct (e.g. permissions, funding, ethical considerations, response rates);

data preparation (e.g. coding, data entry for computer analysis, checking and verification);

data preparation (e.g. coding, data entry for computer analysis, checking and verification);

data analysis (e.g. statistical processes, construction of variables and factor analysis, inferential statistics);

data analysis (e.g. statistical processes, construction of variables and factor analysis, inferential statistics);

reporting the findings (answering the research questions).

reporting the findings (answering the research questions).

It is important to pilot and pre- pilot a survey. The difference between the pre- pilot and the pilot is significant. Whereas the pre- pilot is usually a series of open- ended questions that are used to generate categories for closed, typically multiple-choice questions, the pilot is used to test the actual survey instrument itself (see Chapter 20).

A rigorous survey, then, formulates clear, specific objectives and research questions, ensures that the instrumentation, sampling and data types are appropriate to yield answers to the research questions, ensures that as high a level of sophistication of data analysis is undertaken as the data will sustain (but no more!).

A survey is no stronger than its weakest point, and we consider a range of issues here in an endeavour to strengthen each aspect of a survey. Surveys have to minimize errors caused by:

poor sampling (e.g. failure to represent or include sufficiently the target population);

poor sampling (e.g. failure to represent or include sufficiently the target population);

poor question design and wording (e.g. failure to catch accurately the views of, or meanings from, the respondents or to measure the factors of interest);

poor question design and wording (e.g. failure to catch accurately the views of, or meanings from, the respondents or to measure the factors of interest);

incorrect or biased responses;

incorrect or biased responses;

low response or non- response.

low response or non- response.

The first of these – a sampling matter – may be caused by a failure correctly to identify the population and its characteristics, or a failure to use the correct sampling strategy, or systematically to bias the sample (e.g. using a telephone survey based on telephone directory entries, when key people in the population may not have a telephone (e.g. the poor), or may have a cellphone rather than a fixed line (e.g. the young, the middle aged but not the elderly), or using an internet or email based survey, when many respondents do not have access). We address the issue of sampling in Chapter 8 and below.

The second of these is a failure to operationalize the variables fairly (i.e. a validity issue) or a failure in the wording or meanings used or inferred, such that incorrect responses are collected (a reliability issue) (e.g. people may not understand a question, or may misinterpret it, or interpret it differently). We address this in Chapter 10 and below.

The third problem is that some participants may deliberately over- report or under- report the real situation in – often sensitive – matters (e.g. teenage alcohol, smoking or drug use, underage sexual relations, bullying, domestic violence, petty criminality may be systematically under- reported (i.e. biased), whereas popularity of a teacher or students might be over- reported (biased)). Bias obtains where there is a systematic skewing or distortion in the responses. Further, some questions may rely on memory, and memory can be selective and deceptive (e.g. people may not remember accurately). Also, some responses will depend on a person’s state of mind at the time of completing the survey – asking a teacher about teacher stress and tiredness, late on a Friday afternoon in school with a difficult class, could well elicit a completely different response from asking her directly after a week’s holiday. Some questions may be so general as to be unhelpful (e.g. ‘how stressed do you feel?’), whereas others might be so specific as to prevent accurate recall (e.g. ‘how many times have you shouted at a class of children in the past week?’) (one solution to the latter might be to ask participants to keep a diary of instances).

Fowler (2009: 15) suggests that the answer that a respondent gives is a combination of the true response plus an error in the answer given, and he indicates that errors may come from many sources.

The fourth of these – low response or non- response – is a problem that besets researchers, and is so signi-ficant that we devote a separate section to it below.

Non- response to a whole questionnaire (‘unit non-response’ (Durrant, 2009: 293)) or to a specific item (‘item non- response’ (Durrant, 2009: 293)) is a serious problem for much survey research, though Denscombe (2009: 282) notes that online surveys tend to have lower item non- response than paper- based surveys, though there may be more dropouts before reaching the end of an online survey than in a paper- based survey.

Dale (2006: 148) suggests that ‘non- respondents almost invariably differ from respondents’, and that this affects the validity and reliability of the responses obtained, and their analysis. If non- response is received from a very homogenous sample then this might be less of a problem than if the sample is very varied. Further, if non- response is received randomly across a sample then this might be less of a problem than if the non-response was from a particular sub- sector of the respondents (e.g. a very low or a very high socio-economic group), as this would bias the results (cf. Dale, 2006: 148). A subset of non- response to a whole questionnaire is item non- response, and here missing data should not be ignored (Dale, 2006: 15).

Rubin (1987), Little and Rubin (1989), Allison (2001), Dale (2006: 149–50) and Durrant (2006, 2009) review a range of different ‘imputation methods’ for handling and weighting non- response (i.e. methods for filling in data with ‘plausible values’ in order to render a set of data complete and yet to reduce bias in the non-responses, i.e. that bias which might be caused by the non- responses having different values from the non-missing responses (Durrant, 2009: 295)). These depend on whether the non- response is largely confined to a single variable or many variables. The researcher has to determine whether there are patterns of non- response, as these affect the method for handling non- response. For example, if the non- response is randomly distributed across several variables, with no clear patterns of non- response, then this may be less problematic than if there is a systematic non- response to one or more variables in a survey (Durrant, 2009: 295). Durrant sets out several ways of calculating missing values, including, for example:

a calculating missing values from regression techniques using auxiliary variables (p. 296);

b ‘hot deck’ methods, in which subgroups of participants (based on their scores on auxiliary variables) are constructed and the researcher compares their results to the non- missing results of the respondent who had omitted a particular response (p. 297);

c ‘nearest neighbour’ techniques, in which the results from a person whose data diverge as little as possible from those of the missing person are used to replace the missing values.

Durrant (2006, 2009) identifies further, statistical methods of calculating missing scores, such as multiple and fractional imputation, and propensity score weighting. She makes the point that how one calculates the values of missing data depends on a range of factors such as the purpose of the analysis, the variable(s) in question, the kinds of data, any patterns of missing data, and the characteristics and fittingness of the assumptions on which the particular intended imputation method is based. For further guidance on this matter we refer the reader to the sources indicated above.

In some cases (e.g. when all the students in a class complete a questionnaire during a lesson) the response rate may be very high, but in other circumstances the response rate may be very low or zero, either for the whole survey or for individual items within it, for several reasons, e.g.

the survey never reaches the intended people;

the survey never reaches the intended people;

people refuse to answer;

people refuse to answer;

people may not be available (e.g. for a survey administered by interview), for example they may be out at work when a telephone survey administrator calls;

people may not be available (e.g. for a survey administered by interview), for example they may be out at work when a telephone survey administrator calls;

people may not be able to answer the questions (e.g. language or writing difficulties);

people may not be able to answer the questions (e.g. language or writing difficulties);

people may not actually have the information requested;

people may not actually have the information requested;

people may overlook some items in error;

people may overlook some items in error;

the survey was completed and posted but failed to return;

the survey was completed and posted but failed to return;

the pressure of competing activities on the time of the respondent;

the pressure of competing activities on the time of the respondent;

potential embarrassment at their own ignorance if respondents feel unable to answer a question;

potential embarrassment at their own ignorance if respondents feel unable to answer a question;

ignorance of the topic/no background in the topic;

ignorance of the topic/no background in the topic;

dislike of the contents or subject matter of the interview;

dislike of the contents or subject matter of the interview;

fear of possible consequences of the survey to himself/herself or others;

fear of possible consequences of the survey to himself/herself or others;

lack of clarity in the instructions;

lack of clarity in the instructions;

fear or dislike of being interviewed;

fear or dislike of being interviewed;

sensitivity of the topic, or potentially insulting or threatening topic;

sensitivity of the topic, or potentially insulting or threatening topic;

betrayal of confidences;

betrayal of confidences;

losing the return envelope or return address;

losing the return envelope or return address;

the wrong person may open the mail, and fail to pass it on to the most appropriate person.

the wrong person may open the mail, and fail to pass it on to the most appropriate person.

Later in this chapter we discuss ways of improving response rates. However, here we wish to insert a note of caution: some researchers suggest that, for non-responders to an item, an average score for that item can be inserted. This might be acceptable if it can be shown that the sample or the population is fairly homogeneous, but, for heterogeneous populations or samples, or those where the variation in the sample or population is not known, it may be dangerous to assume homoge neity and hence to infer what the missing data may look like, as this could distort the results.

Let us suppose that out of a sample of 200 participants 90 per cent reply (180 participants) to a ‘yes/no’ type of question, e.g. for the question ‘Do you agree with public examinations at age 11?’, and let us say that 50 per cent (90 people) indicate ‘yes’ and 50 per cent indicate ‘no’. If the 10 per cent who did not reply (20 people) would have said ‘yes’ then this would clearly swing the results as 110 people say ‘yes’ (55 per cent) and 90 people say ‘no’ (45 per cent). However, if the response rates vary, then the maximum variation could be very different, as in Table 13.1 (cf. Fowler, 2009: 55). Table 13.1 assumes that if a 100 per sample had replied, 50 per said ‘yes’ and 50 per cent said ‘no; the rest of the table indicates the possible variation depending on response rate.

Table 13.1 indicates the possible variation in a simple ‘yes/no’ type of question. If a rating scale is chosen, for example a 5-point rating scale, the number of options increases from two to five, and, correspondingly, the possibility for variation increases even further.

A major difficulty in survey research is securing a suf-ficiently high response rate to give credibility and reliability to the data. In some postal research, response rates can be as low as 20–30 per cent, and this compromises the reliability of the data very considerably. There is a difference between the intended and the achieved sample (Fogelman, 2002: 105). Punch (2003: 43) suggests that it is important to plan for poor response rates (e.g. by increasing the sample size) rather than trying to adjust sampling post hoc. He also suggests that access to the sample needs to be researched before the survey commences, maybe pre-notifying potential participants if that is deemed desirable. He argues that a poor response level may also be due to the careless omission of details of how and when the questionnaire will be returned or collected. This is a matter that needs to be made clear in the questionnaire itself. In the case of a postal survey a stamped addressed envelope should always be included.

Kenett (2006) and Fowler (2009: 52) report that responses rates increase when people are interested in the subject matter of the survey, or if the subject is very relevant to them, or if the completion of the survey brings a sense of satisfaction to the respondent. Denscombe (2009: 288) reports that response rates increase if the ‘respondent burden’ is low (i.e. the effort required by the respondent to answer a question).

Further, the design, layout and presentation of the survey may also exert an influence on response rate. It is important to include a brief covering letter that explains the research clearly and introduces the researcher. The timing of the survey is important, for example schools will not welcome researchers or surveys in examination periods or at special periods, e.g. Christmas or inspection times (Fogelman, 2002: 106). Finally, it is important to plan the follow-up to surveys, to ensure that non- respondents are called again and reminded of the request to complete the survey. Fowler (2009: 57) indicates that between a quarter and a third of people may agree to complete a survey if a follow- up is undertaken.

There are several possible ways of increasing response rates to mailed surveys, including, for example (e.g. Aldridge and Levine, 2001; Fowler, 2009: 56):

follow- ups and polite reminders (e.g. by mail, email, telephone call) in which the reminder is short, polite, indicating the value of the respondent’s participation and, if the reminder is postal, another clean copy of the questionnaire;

follow- ups and polite reminders (e.g. by mail, email, telephone call) in which the reminder is short, polite, indicating the value of the respondent’s participation and, if the reminder is postal, another clean copy of the questionnaire;

advance notification of the survey (e.g. by telephone, post or email);

advance notification of the survey (e.g. by telephone, post or email);

pre- paid return stamped addressed envelopes;

pre- paid return stamped addressed envelopes;

institutional affiliation, survey sponsorship or support from a high status agent;

institutional affiliation, survey sponsorship or support from a high status agent;

financial incentives (though increasing the financial incentive to a high figure does not bring commensurate returns in response rates);

financial incentives (though increasing the financial incentive to a high figure does not bring commensurate returns in response rates);

rewards for return;

rewards for return;

making surveys easy to read and to complete;

making surveys easy to read and to complete;

making instructions about responses and return very clear;

making instructions about responses and return very clear;

avoid open- ended questions unless these are really important (as the quality of responses is usually poor to open- ended questions: people tend not to write anything or to write very little);

avoid open- ended questions unless these are really important (as the quality of responses is usually poor to open- ended questions: people tend not to write anything or to write very little);

avoid placing open- ended questions at the start of a questionnaire;

avoid placing open- ended questions at the start of a questionnaire;

make the surveys attractive, with easy- to-follow instructions and spacing of the text;

make the surveys attractive, with easy- to-follow instructions and spacing of the text;

flatter the participants without being seen to flatter them;

flatter the participants without being seen to flatter them;

providing information about the research through a covering letter and/or advance notification;

providing information about the research through a covering letter and/or advance notification;

making the survey look very unlike junk mail;

making the survey look very unlike junk mail;

consider asking the respondents for an interview to complete the survey questionnaire;

consider asking the respondents for an interview to complete the survey questionnaire;

deliver the questionnaire personally rather than through mail;

deliver the questionnaire personally rather than through mail;

ensure that the questions or items are non-judgemental (e.g.in sensitive matters);

ensure that the questions or items are non-judgemental (e.g.in sensitive matters);

assure confidentiality and anonymity.

assure confidentiality and anonymity.

Cooper and Schindler (2001: 314–15) and Fowler (2009: 58) report that the following factors make little or no appreciable difference to response rates:

personalizing the introductory letter;

personalizing the introductory letter;

writing an introductory letter;

writing an introductory letter;

promises of anonymity;

promises of anonymity;

questionnaire length (it is not always the case that a short questionnaire produces more returns than a long questionnaire, but researchers will need to consider the effect of a long survey questionnaire on the respondents – they may feel positive or negative about it, or set it aside temporarily and forget to return it later);

questionnaire length (it is not always the case that a short questionnaire produces more returns than a long questionnaire, but researchers will need to consider the effect of a long survey questionnaire on the respondents – they may feel positive or negative about it, or set it aside temporarily and forget to return it later);

size, reproduction and colour of the questionnaire;

size, reproduction and colour of the questionnaire;

deadline dates for return (it was found that these did not increase response rate but did accelerate the return of questionnaires).

deadline dates for return (it was found that these did not increase response rate but did accelerate the return of questionnaires).

Potential respondents may be persuaded to participate depending on, for example:

the status and prestige of the institution or researcher carrying out the research;

the status and prestige of the institution or researcher carrying out the research;

the perceived benefit of the research;

the perceived benefit of the research;

the perceived importance of the topic;

the perceived importance of the topic;

personal interest in the research;

personal interest in the research;

interest in being interviewed, i.e. the interview experience;

interest in being interviewed, i.e. the interview experience;

personal liking for, or empathy with, the researcher;

personal liking for, or empathy with, the researcher;

feelings of duty to the public and sense of civic responsibility;

feelings of duty to the public and sense of civic responsibility;

loneliness or boredom (nothing else to do);

loneliness or boredom (nothing else to do);

sense of self- importance.

sense of self- importance.

Dillman (2007) suggests that response rates can be increased if, in sequence: (a) non- respondents are sent a friendly reminder after ten days, stressing the importance of the research; (b) non- respondents are sent a further friendly reminder ten days after the initial reminder, stressing the importance of the research; (c) a telephone call is made to the respondents shortly after the second reminder, indicating the importance of the research.

Fowler (2009: 60) suggests that the initial questionnaire might also include a statement to say that completion and return of the questionnaire will ensure that no follow-up reminders will be sent (though this may be regarded by some respondents as presumptuous).

Sampling is a key feature of a survey approach, and we advise readers to look closely at Chapter 8 on sampling. Because questions about sampling arise directly from the second of our preliminary considerations, that is defining the population upon which the survey is to focus, researchers must take sampling decisions early in the overall planning of a survey (see Figure 13.1). We have already seen that due to factors of expense, time and accessibility, it is not always possible or practical to obtain measures from a population. Indeed Wilson et al. (2006: 352) draw attention to the tension between the need for large samples in order to conduct ‘robust statistical analysis’, and issues of resources such as cost and practicability (p. 353).

Researchers endeavour therefore to collect information from a smaller group or subset of the population in such a way that the knowledge gained is representative of the total population under study. This smaller group or subset is a ‘sample’. Unless researchers identify the total population in advance, it is virtually impossible for them to assess how representative the sample is that they have drawn. Chapter 8 addresses probability and non- probability samples, and we refer readers to the detailed discussion of these in that chapter. The researcher will need to decide the sampling strategy to be used on the basis of fitness for purpose, e.g.

a probability and non- probability sample;

a probability and non- probability sample;

the desire to generalize, and to whom;

the desire to generalize, and to whom;

the sampling frame (those who are eligible to be included);

the sampling frame (those who are eligible to be included);

the sample size;

the sample size;

the representativeness of the sample;

the representativeness of the sample;

access to the sample;

access to the sample;

the response rate.

the response rate.

Even if the researcher has taken extraordinary care with the sampling strategy, there may still be problems (e.g. response rate, respondent characteristics or availability) that may interfere with the best- laid strategies.

In addition to the sampling strategy to be used, there are the issues of sample size and selection. We discussed this in Chapter 8, but here we wish to address the issue of practicability. For example, let us say that, in the interests of precision, the researcher wishes to have a sample in which there are four strata (e.g. age groups in a primary school), and that each stratum comprised 50 students, i.e. 200 students. If that researcher wished to increase the sample size of one stratum by, say 20 students, this would necessitate an overall increase of 80 students (20 × 4) in the sample. Do the benefits outweigh the costs here?

An alternative to increasing the total size of the sample would be to increase the size of one stratum only, under certain conditions. For example, let us say that the researcher is studying attitudes of males and females to learning science, in a secondary school which had only recently moved from being a single- sex boys’ school to a mixed sex school, so the ratio of male to female students is 4:1. The researcher wishes to include a minimum of 200 female students. This could require a total of 1,000 students in the sample (200 females + {200 × 4} male students in the sample); this could be unmanageable. Rather, the researcher could identify two female students for each male student (i.e. 400 females) and then, when analysing the data, could give one-quarter of the weight to the response of the female students, in order to gain a truer representation of the target population of the school. This would bring the total sample to 600 students, rather than 1,000, involved in the survey. Over-sampling a smaller group (in this case the females), and then weighting the analysis is frequently undertaken in surveys (cf. Fowler, 2009: 27).

In sampling, the probability might also exist of excluding some legitimate members of population in the target sample; however, the researcher will need to weigh the cost of excluding these members (e.g. the very- hard-to- reach) against the cost of ensuring that they are included – the benefit gained from including them may not justify the time, cost and effort (cf. Fowler, 2009: 179). Similarly, the precision gained from stratified sampling (see Chapter 8) may not be worth the price to be paid in necessarily increasing the sample size in order to represent each stratum.

In many cases a sampling strategy may be in more than one stage. For example, let us consider the instance of a survey of 1,000 biology students from a population of 10,000 biology students in a city. In the first stage a group of, say, ten, schools is identified (A), then, within that, an age group of students (B), and then, within that the individuals in that group who are studying biology (C), and, finally, the sample (D) is taken from that group. The intention is to arrive at (D), but, in order to reach this point, a series of other steps has to be taken.

This raises the matter of deciding what are those steps to be taken. For example, the researcher could decide the sampling for the survey of the biology students by taking the random sample of 1,000 students from ten schools. The researcher lists all the 1,000 relevant students from the list of 10,000 students, and decides to select 100 students from each of the ten schools (a biology student, therefore, in one of these ten schools has a 1 in 10 chance of being selected). Alternatively the researcher could decide to sample from five schools only, with 200 students from each of the five schools, so students in each of these five schools have a 1 in 5 chance of being selected. Alternatively, the researcher could decide to sample from two schools, with 500 students, so students in each of these two schools have a 1 in 2 chance of being selected. There are other permutations. The point here is that, as the number of schools decreases, so does the possible cost of conducting the survey, but so does the overall reliability, as so few schools are included. It is a trade- off.

In order to reduce sampling error (the variation of the mean scores of the sample from the mean score of the population), a general rule is to increase the sample, and this is good advice. However, it has to be tempered by the fact that the effect of increasing the sample size in a small sample reduces sampling error more than in a large sample, e.g. increasing the sample size from 50 to 80 (30 persons) will have greater impact on reducing sampling error than increasing the sample size from 500 to 530 (30 persons). Hence it may be unprofitable simply to increase sample sizes in already large samples.

Further, the researcher has to exercise his or her judgement in attending to sampling. For example, if it is already known that a population is homogenous, then the researcher may feel it a needless exercise in having too large and unmanageable a sample if the results are not likely to be much different from those of a small sample of the same homogeneous group (though theoretical sampling (see Chapter 33) may suggest where a researcher needs to include participants from other small samples). As Fowler (2009: 44) remarks, the results of a sample of 150 people will describe a population of 15,000 or 25 million with more or less the same degree of accuracy. He remarks that samples of more than 150 or 200 may not yield more than modest gains to the precision of the data (p. 45), though this, of course, has to be addressed in relation to the population characteristics, the number, size and kind of strata to be included, and the type of sample being used. Sampling errors, he notes (p. 45) are more a function of sample size than of the proportions of the sample to the population. Further, he advocates probability rather than non- probability samples, unless there are persuasive reasons for non- probability samples to be used.

Whilst sample sizes can be calculated on the basis of statistics alone (e.g. confidence levels, confidence intervals, population size and so on, see Chapter 8), this is often not the sole criterion, as it accords a degree of precision to the sample which takes insufficient account of other sampling issues, for example access, variation or homogeneity in the population, levels of literacy in the population (e.g. in the case of a self- administered questionnaire survey) and costs.

Sampling is one of several sources of error in surveys, as indicated earlier in this chapter.

The term ‘longitudinal’ is used to describe a variety of studies that are conducted over a period of time. Often, as we have seen, the word ‘developmental’ is employed in connection with longitudinal studies that deal specifically with aspects of human growth.

A clear distinction is drawn between longitudinal and cross-sectional studies.2 The longitudinal study gathers data over an extended period of time; a short-term investigation may take several weeks or months; a long- term study can extend over many years. Where successive measures are taken at different points in time from the same respondents, the term ‘follow- up study’ or ‘cohort study’ is used in the British literature, the equivalent term in the United States being the ‘panel study’. The term ‘cohort’ is a group of people with some common characteristic. A cohort study is sometimes differentiated from a panel study. In a cohort study a specific population is tracked over a specific period of time but selective sampling within that sample occurs (Borg and Gall, 1979: 291). This means that some members of a cohort may not be included each time. By contrast, in a panel study each same individual is tracked over time.

Where different respondents are studied at different points in time, the study is called ‘cross- sectional’. Where a few selected factors are studied continuously over time, the term ‘trend study’ is employed. One example of regular or repeated cross- sectional social surveys is the General Household Survey, in which the same questions are asked every year though they are put to a different sample of the population each time. The British Social Attitudes Survey is an example of a repeated cross- sectional survey, using some 3,600 respondents.

A famous example of a longitudinal (cohort) study is the National Child Development Study, which started in 1958. The British General Household Panel Survey interviewed individuals from a representative sample each year in the 1990s. Another example is the British Family Expenditure Survey. These latter two are cross-sectional in that they tell us about the population at a given point in time, and hence provide aggregated data.

By contrast, longitudinal studies can also provide individual level data, by focusing on the same individuals over time (e.g. the Household Panel Studies which follow individuals and families over time (Ruspini, 2002: 4). Lazarsfeld introduced the concept of a panel in the 1940s, attempting to identify causal patterns and the difficulties in tracing causal patterns (Ruspini, 2002: 13)).

Longitudinal studies can be of the survey type or of other types (e.g. case study). Here we confine ourselves to the survey type. Such longitudinal studies can use repeated cross- sectional studies, which are ‘carried out regularly, each time using a largely different sample or a completely new sample’ (Ruspini, 2002: 3), or use the same sample over time. They enable researchers to: ‘analyse the duration of social phenomena’ (Ruspini, 2002: 24); highlight similarities, differences and changes over time in respect of one or more variables or participants (within and between participants); identify long- term (‘sleeper’) effects; and explain changes in terms of stable characteristics, e.g. sex, or variable characteristics, such as income. The appeal of longitudinal research is its ability to establish causality and to make inferences. Ruspini (2002) adds to these the ability of longitudinal research to ‘construct more complicated behavioural models than purely cross- sectional or time- series data’ (p. 26); they catch the complexity of human behaviour. Further, longitudinal studies can combine numerical and qualitative data.

Cohort studies and trend studies are prospective longitudinal methods, in that they are ongoing in their collection of information about individuals or their monitoring of specific events. Retrospective longitudinal studies, on the other hand, focus upon individuals who have reached some defined end- point or state. For example, a group of young people may be the researcher’s particular interest (intending social workers, convicted drug offenders or university dropouts, for example), and the questions to which she will address herself are likely to include ones such as: ‘Is there anything about the previous experience of these individuals that can account for their present situation?’ Retrospective longitudinal studies will specify the period over which to be retrospective, e.g. one year, five years.

Retrospective analysis is not confined to longitudinal studies alone. For example Rose and Sullivan (1993: 185) and Ruane (2005: 87) suggest that cross-sectional studies can use retrospective factual questions, e.g. previous occupations, dates of birth within the family, dates of marriage, divorce, though Rose and Sullivan (1993: 185) advise against collecting other types of retrospective data in cross- sectional studies, as the quality of the data diminishes the further back one asks respondents to recall previous states or even facts.

It is important, in longitudinal studies, to decide when, and how frequently to collect data over time, and this is informed by issues of fitness for purpose as well as practicability. Further, in order to allow for attrition (dropout) of the sample, it is wise to have as large a sample as practicable and possible at the start of the study (Wilson et al., 2006: 354).

A cross-sectional study is one that produces a ‘snapshot’ of a population at a particular point in time. The epitome of the cross- sectional study is a national census in which a representative sample of the population consisting of individuals of different ages, different occupations, different educational and income levels, and residing in different parts of the country is interviewed on the same day. More typically in education, cross-sectional studies involve indirect measures of the nature and rate of changes in the physical and intellectual development of samples of children drawn from representative age levels. The single ‘snapshot’ of the cross-sectional study provides researchers with data for either a retrospective or a prospective enquiry.

A cross-sectional study can also bear several hallmarks of a longitudinal study of parallel groups (e.g. age groups) which are drawn simultaneously from the population. For example, drawing students aged 5, 7, 9 and 11 at a single point in time would bear some characteristics of a longitudinal study in that developments over age groups could be seen, though, of course, it would not have the same weight as a longitudinal study conducted on the same age group over time. This is the case for international studies of educational achievement, requiring samples to be drawn from the same population (Lietz and Keeves, 1997: 122) and for factors that might influence changes in the dependent variables to remain constant across the age groups. Cross- sectional studies, catching a frozen moment in time, may be ineffective for studying change. If changes are to be addressed through cross- sectional surveys, then this suggests the need for repeated applications of the survey, or by the use of trend analysis.

Trend studies focus on factors rather than people, and these factors are studied over time. New samples are drawn at each stage of the data collection, but focusing on the same factors. By taking different samples the problem of reactivity is avoided (see below: ‘pre- test sensitisation’), i.e. earlier surveys affecting the behaviour of participants in the later surveys. This is particularly useful if the research is being conducted on sensitive issues, as raising a sensitive issue early on in research may change an individual’s behaviour, which could affect the responses in a later round of data collection. By drawing a different sample each time this problem is overcome.

Trend or prediction studies have an obvious importance to educational administrators or planners. Like cohort studies, they may be of relatively short or long duration. Essentially, the trend study examines recorded data to establish patterns of change that have already occurred in order to predict what will be likely to occur in the future. In trend studies two or more cross-sectional studies are undertaken with identical age groups at more than one point in time in order to make comparisons over time (e.g. the Scholastic Aptitude and Achievement tests in the United States) (Keeves, 1997a: 141) and the National Assessment of Educational Progress results (Lietz and Keeves, 1997: 122). A major difficulty that researchers face in conducting trend analyses is the intrusion of unpredictable factors that invalidate forecasts formulated on past data. For this reason, short- term trend studies tend to be more accurate than long- term analyses. Trend studies do not include the same respondents over time, so the possibility exists for variation in data due to the different respondents rather than the change in trends. Gorard (2001b: 87) suggests that this problem can be attenuated by a ‘rolling sample’ in which a proportion of the original sample is retained in the second wave of data collection, and a proportion of this sample is retained in the third wave, and so on.

The distinctions we have drawn between the various terms used in developmental research are illustrated in Figure 13.2.

Longitudinal studies of the cohort analysis type have an important place in the research armoury of educational investigators. Longitudinal studies have considerable potential for yielding rich data that can trace changes over time, and with great accuracy (Gorard, 2001b: 86). On the other hand they suffer from problems of attrition (participants leaving the research over time, a particular problem in panel studies which research the same individuals over time), and they can be expensive to conduct in terms of time and money (Ruspini, 2002: 71). Gorard (2001b) reports a study of careers and identities that had an initial response rate of between 60 and 70 per cent in the first round, and then risked dropping to 25 per cent by the third round, becoming increasingly more middle class in each wave of the study. He also discusses a Youth Cohort Study (2001b) in which only 45 per cent of the respondents took part in all three waves of the data collection. Ruspini (2002: 72) identi-fies an attrition rate of 78 per cent in the three waves of the European Community Household Panel survey of the UK in 1997.

Ruspini (2002) also indicates how a small measurement error in a longitudinal study may be compounded over time. She gives the example of an error in income occurring at a point in time (p. 72) that could lead to ‘false transitions’ appearing over time in regard to poverty and unemployment.

Further, long- term studies, Gorard (2001b: 86) avers, face ‘a threat to internal validity’ that stems from the need ‘to test and re- test the same individuals’. Dooley (2001: 120) terms this ‘pre- test sensitisation’; it is also termed ‘panel conditioning’ or ‘time- in sample bias’ (Ruspini, 2002: 73). Here the first interview in an interview survey can cause changes in the second interview, i.e. the first interview may set up a self- fulfilling prophecy that is recorded in the second interview. He gives the example of a health survey in the first round of data collection, which may raise participants’ awareness of the dangers of smoking, such that they reduce or give up smoking by the time the second round takes place. Trend studies overcome this problem by drawing different populations at each stage of the data collection in the research.

Dooley (2001) also raises the issue of the difficulties caused by changes in the research staff over time in longitudinal surveys. Changes in interviewee response, he suggests, may be due to having different researchers rather than to the respondents themselves. Even using the same instruments, different researchers may use them differently (e.g. in interviewing behaviour).

To add to these matters, Ruspini (2002: 73) suggests that longitudinal data are affected by:

a history (events occurring may change the observations of a group under study);

b maturation (participants mature at different speeds and in different ways);

c testing (test sensitization may occur – participants learn from exposure to repeated testing/interviews);

d the timing of cause and effect (some causes may produce virtually instantaneous effects and others may take a long time for the effects to show);

e the direction of causality not always being clear or singular.

A major concern in longitudinal studies concerns the comparability of data over time. For example, though public examinations may remain constant over time (e.g. GCSE, A levels), the contents and format of those examinations do not. (This rehearses the argument that public examinations like A levels are becoming easier over time.) This issue concerns the need to ensure consistency in the data collection instruments over time. Further, if comparability of data in a longitudinal study is to be addressed then this means that the initial rounds of data collection, in the earliest stage of the research, will need to anticipate and include all the variables that will be addressed over time.

Longitudinal studies are more prone to attrition than cross- sectional studies, and are more expensive to conduct in terms of time and cost. On the other hand, whereas trend studies change their populations, thereby disabling micro- level – individual- level – analysis from being conducted, longitudinal analysis enables such individual- level analysis to be performed. Indeed, whereas cross-sectional designs (even if they are repeated cross- sectional designs) may be unsuitable for studying developmental patterns and causality within cohorts, in longitudinal analysis this is a strength. Longitudinal data can supply ‘satisfactory answers to questions concerning the dynamics and the determinants of individual behaviour’ (Ruspini, 2002: 71), issues which are not easily addressed in cross- sectional designs.

Retrospective longitudinal studies rely on the memories of the participants. These may be faulty, and the further back one’s memory reaches, the greater is the danger of distortion or inability to recall. Memory is affected by, for example (Ruspini, 2002: 97):

the time that has elapsed since the event took place;

the time that has elapsed since the event took place;

the significance of the event for the participant;

the significance of the event for the participant;

the amount of information required for the study – the greater the amount, the harder it is to provide;

the amount of information required for the study – the greater the amount, the harder it is to provide;

the contamination/interference effect of other memories of a similar event (i.e. the inability to separate similar events);

the contamination/interference effect of other memories of a similar event (i.e. the inability to separate similar events);

the emotional content or the social desirability of the content;

the emotional content or the social desirability of the content;

the psychological condition of the participant at interview.

the psychological condition of the participant at interview.

Further, participants will look at past events through the lens of hindsight and subsequent events rather than what those events meant at the time. Further, it is not always easy for these participants to recall their emotional state at the time in question. Factually speaking, it may not be possible to gather data from some time past, as they simply do not exist, e.g. medical records, data on income, or they cannot be found or recovered.

Cohort studies of human growth and development conducted on representative samples of populations are uniquely able to identify typical patterns of development and to reveal factors operating on those samples which elude other research designs. They permit researchers to examine individual variations in characteristics or traits, and to produce individual growth curves. Cohort studies, too, are particularly appropriate when investigators attempt to establish causal relationships, for this task involves identifying changes in certain characteristics that result in changes in others.

Cross-sectional designs are inappropriate in causal research as they cannot sustain causal analysis unless they are repeated over time. Cohort analysis is especially useful in sociological research because it can show how changing properties of individuals fit together into changing properties of social systems as a whole. For example, the study of staff morale and its association with the emerging organizational climate of a newly opened school would lend itself to this type of developmental research. A further strength of cohort studies in schools is that they provide longitudinal records whose value derives in part from the known fallibility of any single test or assessment (see Davie, 1972). Finally, time, always a limiting factor in experimental and interview settings, is generally more readily available in cohort studies, allowing the researcher greater opportunity to observe trends and to distinguish ‘real’ changes from chance occurrences (see Bailey, 1994).

In longitudinal, cohort and trend studies there is the risk that characteristics of the respondents may affect the results (Robson, 1993: 128). For example, their memory, knowledge, motivation and personality may affect their responses, and, indeed, they may withhold information, particularly if it is sensitive.

Longitudinal research indicates the influence of biological factors over time (e.g. human development), environmental influences and intervention influences (Keeves, 1997a: 139) and their interactions. Addressing these, the appeal of longitudinal analysis is that it enables causal analysis to be undertaken. Time series studies in longitudinal research also enable emergent patterns to be observed over time, by examining a given range of variables over time, in addition to other factors. This permits individual and group profiles to be examined over time and development, indicating similarities and differences within and between individuals and groups in respect of given variables. As longitudinal studies do not concern themselves with time-specific infuences, only those naturally occurring influences are included (Keeves, 1997a: 142).

Longitudinal studies suffer several disadvantages (though the gravity of these weaknesses is challenged by supporters of cohort analysis). The disadvantages are first, that they are time- consuming and expensive, because the researcher is obliged to wait for growth data to accumulate. Second, there is the difficulty of sample mortality. Inevitably during the course of a long- term cohort study, subjects drop out, are lost or refuse further cooperation. Such attrition makes it unlikely that those who remain in the study are as representative of the population as the sample that was originally drawn. Sometimes attempts are made to lessen the effects of sample mortality by introducing aspects of cross- sectional study design, that is, ‘topping up’ the original cohort sample size at each time of retesting with the same number of respondents drawn from the same population. The problem here is that differences arising in the data from one survey to the next may then be accounted for by differences in the persons surveyed rather than by genuine changes or trends.

A third difficulty has been termed ‘control effect’ (sometimes referred to as measurement effect). Often, repeated interviewing results in an undesired and confusing effect on the actions or attitudes under study, influencing the behaviour of subjects, sensitizing them to matters that have hitherto passed unnoticed, or stimulating them to communication with others on unwanted topics (see Riley, 1963). Fourth, cohort studies can suffer from the interaction of biological, environmental and intervention influences (Keeves, 1997a: 139). Finally, cohort studies in education pose considerable problems of organization due to the continuous changes that occur in pupils, staff, teaching methods and the like. Such changes make it highly unlikely that a study will be completed in the way that it was originally planned.

Cohort studies, as we have seen, are particularly appropriate in research on human growth and development. Why then are so many studies in this area cross-sectional in design? The reason is that they have a number of advantages over cohort studies; they are less expensive; they produce findings more quickly; they are less likely to suffer from control effects; and they are more likely to secure the cooperation of respondents on a ‘one-off’ basis. Generally, cross-sectional designs are able to include more subjects than are cohort designs.

The strengths of cohort analysis are the weaknesses of the cross- sectional design. The cross- sectional study is a less effective method for the researcher who is concerned to identify individual variations in growth or to establish causal relationships between variables. Sampling in the cross- sectional study is complicated because different subjects are involved at each age level and may not be comparable. Further problems arising out of selection effects and the obscuring of irregularities in growth weaken the cross- sectional study so much that one observer dismisses the method as a highly unsatisfactory way of obtaining developmental data except for the crudest purposes. Douglas (1976), who pioneered the first national cohort study to be undertaken in any country, makes a spirited defence of the method against the common criticisms that are levelled against it – that it is expensive and time- consuming. His account of the advantages of cohort analysis over cross- sectional designs is summarized in Box 13.1.

Cross- sectional studies require attention to be given to sampling, to ensure that the information on which the sample is based is comprehensive (Lietz and Keeves, 1997: 124). Further, there is a risk that some potential participants may decline to take part, thereby weakening the sample, or that some respondents may not answer specific questions or, wittingly or unwittingly, give incorrect answers. Measurement error may also occur if the instrument is faulty, for example choosing inappropriate metrics or scales.

The comparative strengths and weaknesses of longitudinal studies (including retrospective studies), cross-section analysis and trend studies are summarized in Table 13.2 (see also Rose and Sullivan, 1993: 184–8).

Several of the strengths and weaknesses of retrospective longitudinal studies share the same characteristics as those of ex post facto research, discussed in Chapter 15.

BOX 13.1 ADVANTAGES OF COHORT OVER CROSS- SECTIONAL DESIGNS

1 Some types of information, for example on attitudes or assessment of potential ability, are only meaningful if collected contemporaneously. Other types are more complete or more accurate if collected during the course of a longitudinal survey, though they are likely to have some value even if collected retrospectively, for example length of schooling, job history, geographical movement.

2 In cohort studies, no duplication of information occurs, whereas in cross- sectional studies the same type of background information has to be collected on each occasion. This increases the interviewing costs.

3 The omission of even a single variable, later found to be important, from a cross- sectional study is a disaster, whereas it is usually possible in a cohort study to fill the gap, even if only partially, in a subsequent interview.

4 A cohort study allows the accumulation of a much larger number of variables, extending over a much wider area of knowledge than would be possible in a cross- sectional study. This is of course because the collection can be spread over many interviews. Moreover, information may be obtained at the most appropriate time, for example information on job entry may be obtained when it occurs even if this varies from one member of the sample to another.

5 Starting with a birth cohort removes later problems of sampling and allows the extensive use of subsamples. It also eases problems of estimating bias and reliability.

6 Longitudinal studies are free of one of the major obstacles to causal analysis, namely the reinterpretation of remembered information so that it conforms with conventional views on causation. It also provides the means to assess the direction of effect.

Source: Adapted from Douglas, 1976

Robson (1993) indicates strengths and difficulties with postal and interview surveys. Postal surveys can reach a large number of people, gather data at comparatively low cost and quite quickly, and can give assurances of confidentiality (Bailey, 1994: 148). Similarly they can be completed at the respondents’ own convenience and in their preferred surroundings and own time; this will enable them to check information if necessary (e.g. personal documents) and think about the responses. As standardized wording is used, there is a useful degree of comparability across the responses, and, as no interviewer is present, there is no risk of interviewer bias. Further, postal questionnaires enable widely scattered populations to be reached.

Postal surveys can also be used to gather detailed sensitive qualitative data (Beckett and Clegg, 2007), not least because the non- presence of another person (e.g. an interviewer) can increase the honesty and richness of the data (Beckett and Clegg, 2007), whereas the presence of an interviewer might inhibit the respondent. Further, in a postal survey, the relations of power between the researcher and the respondent are often more equal than in an interview situation (in which the former often controls the situation more than the latter) (Beckett and Clegg, 2007: 308).

On the other hand postal surveys typically suffer from a poor response rate, and, because one does not have any information about the non- respondents, one does not know whether the sample is representative of the wider population. Further, respondents may not take the care required to complete the survey carefully, and, indeed, may misunderstand the questions. There is no way of checking this. Bailey (1994: 149) suggests that the very issues that make postal surveys attractive might also render them less appealing, for example:

the standardization of wording;

the standardization of wording;

the inability to catch anything other than a verbal response;

the inability to catch anything other than a verbal response;

the lack of control over the environment in which the survey questionnaire is completed;

the lack of control over the environment in which the survey questionnaire is completed;