UNIX storage is looking more and more like a giant set of Lego blocks that you can put together in an infinite variety of configurations. What will you build? A fighter jet? A dump truck? An advanced technology helicopter with air bags and a night-vision camera?

Traditional hard disks remain the dominant medium for on-line storage, but they’re increasingly being joined by solid state drives (SSDs) for performance-sensitive applications. Running on top of this hardware are a variety of software components that mediate between the raw storage devices and the filesystem hierarchy seen by users. These components include device drivers, partitioning conventions, RAID implementations, logical volume managers, systems for virtualizing disks over a network, and the filesystem implementations themselves.

In this chapter, we discuss the administrative tasks and decisions that occur at each of these layers. We begin with “fast path” instructions for adding a basic disk to each of our example systems. We then review storage-related hardware technologies and look at the general architecture of storage software. Next, we work our way up the storage stack, from low-level formatting to the filesystem level. Along the way, we cover disk partitioning, RAID systems, logical volume managers, and systems for implementing storage area networks (SANs).

Although vendors all use standardized disk hardware, there’s a lot of variation among systems in the software domain. Accordingly, you’ll see a lot of vendor-specific details in this chapter. We try to cover each system in enough detail that you can at least identify the commands and systems that are used and can locate the necessary documentation.

Before we launch into many pages of storage architecture and theory, let’s first address the most common scenario: you want to install a hard disk and make it accessible through the filesystem. Nothing fancy: no RAID, all the drive’s space in a single logical volume, and the default filesystem type.

Step one is to attach the drive and reboot. Some systems allow hot-addition of disk drives, but we don’t address that case here. Beyond that, the recipes differ slightly among systems.

Regardless of your OS, it’s critically important to identify and format the right disk drive. A newly added drive is not necessarily represented by the highest-numbered device file, and on some systems, the addition of a new drive can change the device names of existing drives. Double-check the identity of the new drive by reviewing its manufacturer, size, and model number before you do anything that’s potentially destructive.

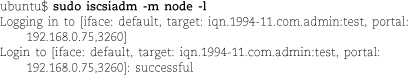

Run sudo fdisk -l to list the system’s disks and identify the new drive. Then run any convenient partitioning utility to create a partition table for the drive. For drives 2TB and below, install a Windows MBR partition table. cfdisk is the easiest utility for this, but you can also use fdisk, sfdisk, parted, or gparted. Larger disks require a GPT partition table, so you must partition with parted or its GNOME GUI, gparted. gparted is a lot easier to use but isn’t usually installed by default.

Put all the drive’s space into one partition of unspecified or “unformatted” type. Do not install a filesystem. Note the device name of the new partition before you leave the partitioning utility; let’s say it’s /dev/sdc1.

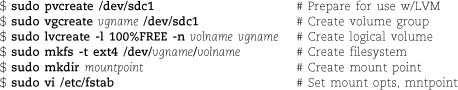

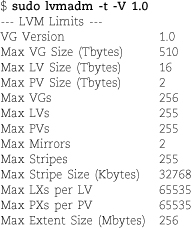

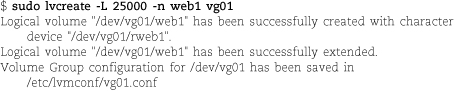

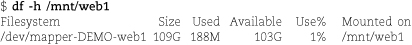

Next, run the following command sequence, selecting appropriate names for the volume group (vgname), logical volume (volname), and mount point. (Examples of reasonable choices: homevg, home, and /home.)

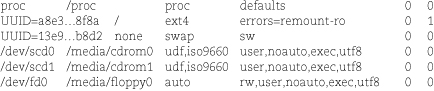

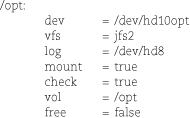
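For readers who want the sequence in text form, here is a minimal sketch of what such a command sequence typically looks like with the standard LVM2 tools. It only assembles and prints the commands; it does not run them. The function name, the ext4 filesystem type, and the sample names are our assumptions for illustration, so check them against your system's documentation before use.

```python
import shlex

def lvm_setup_commands(partition, vgname, volname, mountpoint, fstype="ext4"):
    """Return (but do not execute) commands for a one-partition LVM setup.

    The ext4 default is an assumption; substitute your system's
    default filesystem type.
    """
    volume = f"/dev/{vgname}/{volname}"
    return [
        ["pvcreate", partition],                                # label the partition as an LVM physical volume
        ["vgcreate", vgname, partition],                        # create a volume group on it
        ["lvcreate", "-l", "100%FREE", "-n", volname, vgname],  # one logical volume using all free space
        ["mkfs", "-t", fstype, volume],                         # build the filesystem
        ["mkdir", "-p", mountpoint],                            # make sure the mount point exists
    ]

commands = lvm_setup_commands("/dev/sdc1", "homevg", "home", "/home")
for argv in commands:
    print("sudo " + shlex.join(argv))
```

Printing the commands rather than executing them leaves room to double-check the device name, which matters because these steps are destructive if pointed at the wrong disk.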

In the /etc/fstab file, copy the line for an existing filesystem and adjust it. The device to be mounted is /dev/vgname/volname. If your existing fstab file identifies volumes by UUID, replace the UUID=xxx clause with the device file; UUID identification is not necessary for LVM volumes.

Finally, run sudo mount mountpoint to mount the filesystem.

See page 224 for more details on Linux device files for disks. See page 236 for partitioning information and page 251 for logical volume management. The ext4 filesystem family is discussed starting on page 255.

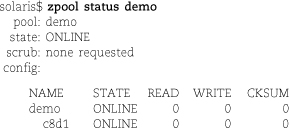

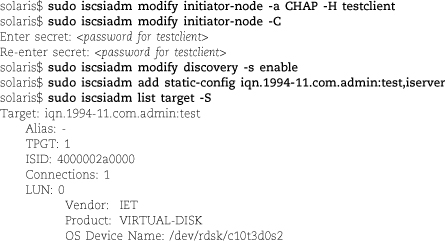

Run sudo format and inspect the menu of known disks to identify the name of the new device. Let’s say it’s c9t0d0. Type <Control-C> to abort.

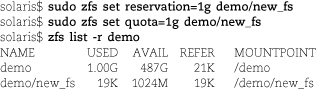

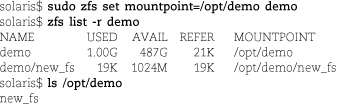

Run zpool create poolname c9t0d0. Choose a simple poolname such as “home” or “extra.” ZFS creates a filesystem and mounts it under /poolname.

See page 225 for more details on disk devices in Solaris. See page 264 for a general overview of ZFS.

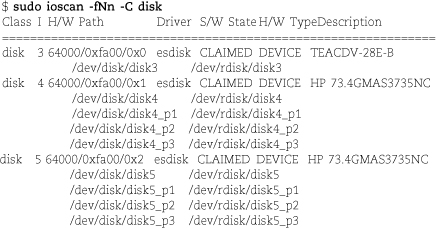

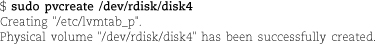

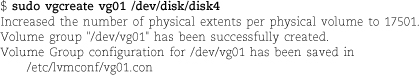

Run sudo ioscan -fNn -C disk to identify the device files for the new disk; let’s say they are /dev/disk/disk4 and /dev/rdisk/disk4.

Next, run the following command sequence, selecting appropriate names for the volume group (vgname), logical volume (volname), and mount point. (An example of reasonable choices: homevg, home, and /home.)

In the /etc/fstab file, copy the line for an existing filesystem and adjust it. The device to be mounted is /dev/vgname/volname.

Finally, run sudo mount mountpoint to mount the filesystem.

See page 225 for more details on HP-UX disk device files. See page 251 for logical volume management information. The VxFS filesystem is discussed starting on page 256.

Run lsdev -C -c disk to see a list of the disks the system is aware of, then run lspv to see which disks are already set up for volume management. The device that appears in the first list but not the second is your new disk. Let’s say it’s hdisk1.

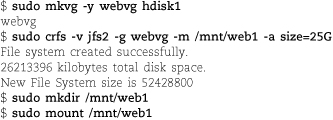

Next, run the following command sequence, selecting appropriate names for the volume group (vgname), logical volume (volname), and mount point. (Examples of reasonable choices: homevg, home, and /home.)

See page 226 for more details on AIX disk device files, and see page 253 for AIX logical volume management information. The JFS2 filesystem is discussed starting on page 257.

Even in today’s post-Internet world, there are only a few basic ways to store computer data: hard disks, flash memory, magnetic tapes, and optical media. The last two technologies have significant limitations that disqualify them from use as a system’s primary filesystem. However, they’re still commonly used for backups and for “near-line” storage—cases in which instant access and rewritability are not of primary concern.

See page 301 for a summary of current tape technologies.

After 40 years of hard disk technology, system builders are finally getting a practical alternative in the form of solid state disks (SSDs). These flash-memory-based devices offer a different set of tradeoffs from a standard disk, and they’re sure to exert a strong influence over the architectures of databases, filesystems, and operating systems in the years to come.

At the same time, traditional hard disks are continuing their exponential increases in capacity. Twenty years ago, a 60MB hard disk cost $1,000. Today, a garden-variety 1TB drive runs $80 or so. That’s 200,000 times more storage for the money, or double the MB/$ every 1.15 years—nearly twice the rate predicted by Moore’s Law. During that same period, the sequential throughput of mass-market drives has increased from 500 kB/s to 100 MB/s, a comparatively paltry factor of 200. And random-access seek times have hardly budged. The more things change, the more they stay the same.
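The arithmetic behind these growth figures is easy to reproduce. The sketch below plugs in the prices and capacities quoted above and derives the improvement in MB/$ and the implied doubling time; the function name is ours, and the 20-year span is taken at face value.

```python
import math

def storage_value_growth(old_mb, old_price, new_mb, new_price, years):
    """Return (improvement factor in MB/$, doubling time in years)."""
    old_ratio = old_mb / old_price          # MB per dollar, then
    new_ratio = new_mb / new_price          # MB per dollar, now
    improvement = new_ratio / old_ratio
    doubling_time = years / math.log2(improvement)
    return improvement, doubling_time

# 60MB for $1,000 twenty years ago versus 1TB (1,000,000MB) for $80 today
improvement, doubling = storage_value_growth(60, 1000, 1_000_000, 80, 20)
print(f"{improvement:,.0f}x more MB/$, doubling every {doubling:.2f} years")

# Sequential throughput over the same period: 500 kB/s to 100 MB/s
throughput_factor = 100_000 / 500
print(f"throughput improved by a factor of {throughput_factor:.0f}")
```

The doubling time comes out a little over 1.1 years, in line with the figure quoted above once the improvement factor is rounded to 200,000.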

A third—hybrid—category, hard disks with large flash-memory buffers, was widely touted a few years ago but never actually materialized in the marketplace. It’s not clear to us whether the drives were delayed by technical, manufacturing, or marketing concerns. They may yet appear on the scene, but the implications for system administrators remain unclear.

See page 14 for more information on IEC units (gibibytes, etc.).

Disk sizes are specified in gigabytes that are billions of bytes, as opposed to memory, which is specified in gigabytes (gibibytes, really) of 2^30 (1,073,741,824) bytes. The difference is about 7%. Be sure to check your units when estimating and comparing capacities.
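A quick conversion makes the size of the discrepancy concrete. This is a minimal sketch; the function name and the 1000 GB example drive are ours.

```python
GB = 10**9    # gigabyte as used for disk capacities
GIB = 2**30   # gibibyte as used for memory

def marketed_gb_to_gib(gigabytes):
    """Convert a disk capacity in decimal gigabytes to gibibytes."""
    return gigabytes * GB / GIB

difference_pct = (GIB / GB - 1) * 100
print(f"A GiB is {difference_pct:.1f}% larger than a GB")
print(f"A 1000 GB drive holds about {marketed_gb_to_gib(1000):.0f} GiB")
```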

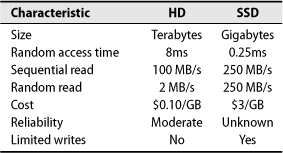

Hard disks and SSDs are enough alike that they can act as drop-in replacements for each other, at least at the hardware level. They use the same hardware interfaces and interface protocols. And yet they have different strengths, as summarized in Table 8.1. Performance and cost values are as of mid-2010.

Table 8.1 Comparison of hard disk and SSD technology

In the next sections, we take a closer look at each of these technologies.

A typical hard drive contains several rotating platters coated with magnetic film. They are read and written by tiny skating heads that are mounted on a metal arm that swings back and forth to position them. The heads float close to the surface of the platters but do not actually touch.

Reading from a platter is quick; it’s the mechanical maneuvering needed to address a particular sector that drives down random-access throughput. There are two main sources of delay.

First, the head armature must swing into position over the appropriate track. This part is called seek delay. Then, the system must wait for the right sector to pass underneath the head as the platter rotates. That part is rotational latency. Disks can stream data at tens of MB/s if reads are optimally sequenced, but random reads are fortunate to achieve more than a few MB/s.
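To see why random access is so much slower than streaming, it helps to add up the two delays for a single request. The sketch below is a rough model only: it assumes an average rotational latency of half a revolution, ignores the transfer time itself, and uses an 8.5 ms average seek time that is our illustrative assumption rather than a figure for any particular drive.

```python
def random_read_estimate(rpm, avg_seek_ms, request_kib):
    """Estimate random-read performance from seek delay and rotational latency.

    Returns (rotational latency in ms, requests per second, MB/s).
    """
    ms_per_revolution = 60_000 / rpm
    rotational_latency_ms = ms_per_revolution / 2   # on average, wait half a turn
    service_ms = avg_seek_ms + rotational_latency_ms
    requests_per_s = 1000 / service_ms
    mb_per_s = requests_per_s * request_kib * 1024 / 1e6
    return rotational_latency_ms, requests_per_s, mb_per_s

latency, iops, throughput = random_read_estimate(rpm=7200, avg_seek_ms=8.5, request_kib=4)
print(f"rotational latency {latency:.2f} ms, "
      f"{iops:.0f} requests/s, {throughput:.2f} MB/s")
```

Under these assumptions a 7,200 RPM drive services well under a hundred small random reads per second, which is why larger requests are needed to reach even a few MB/s.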

A set of tracks on different platters that are all the same distance from the spindle is called a cylinder. The cylinder’s data can be read without any additional movement of the arm. Although heads move amazingly fast, they still move much slower than the disks spin around. Therefore, any disk access that does not require the heads to seek to a new position will be faster.

Rotational speeds have increased over time. Currently, 7,200 RPM is the mass-market standard for performance-oriented drives, and 10,000 RPM and 15,000 RPM drives are popular at the high end. Higher rotational speeds decrease latency and increase the bandwidth of data transfers, but the drives tend to run hot.

Hard disks fail frequently. A 2007 Google Labs study of 100,000 drives surprised the tech world with the news that hard disks more than two years old had an average annual failure rate (AFR) of more than 6%, much higher than the failure rates manufacturers predicted based on their extrapolation of short-term testing. The overall pattern was a few months of infant mortality, a two-year honeymoon of annual failure rates of a few percent, and then a jump up to the 6%–8% AFR range. Overall, hard disks in the Google study had less than a 75% chance of surviving a five-year tour of duty.

Interestingly, Google found no correlation between failure rate and two environmental factors that were formerly thought to be important: operating temperature and drive activity. The complete paper can be found at tinyurl.com/fail-pdf.

Disk failures tend to involve either platter surfaces (bad blocks) or the mechanical components. The firmware and hardware interface usually remain operable after a failure, so you can query the disk for details (see page 230).

Drive reliability is often quoted by manufacturers in terms of mean time between failures (MTBF), denominated in hours. A typical value for an enterprise drive is around 1.2 million hours. However, MTBF is a statistical measure and should not be read to imply that an individual drive will run for 140 years before failing.

MTBF is the inverse of AFR in the drive’s steady-state period—that is, after break-in but before wear-out. A manufacturer’s MTBF of 1.2 million hours corresponds to an AFR of 0.7% per year. This value is almost, but not quite, concordant with the AFR range observed by Google (1%–2%) during the first two years of their sample drives’ lives.

Manufacturers’ MTBF values are probably accurate, but they are cherry-picked from the most reliable phase of each drive’s life. MTBF values should therefore be regarded as an upper bound on reliability; they do not predict your actual expected failure rate over the long term. Based on the limited data quoted above, you might consider dividing manufacturers’ MTBFs by a factor of 7.5 or so to arrive at a more realistic estimate of five-year failure rates.
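The conversions between MTBF and AFR used above can be sketched in a few lines. The function names are ours, and the simple reciprocal relationship is an approximation that holds when failure rates are small.

```python
HOURS_PER_YEAR = 24 * 365

def afr_from_mtbf(mtbf_hours):
    """Approximate annual failure rate (as a fraction) for a given MTBF."""
    return HOURS_PER_YEAR / mtbf_hours

def mtbf_in_years(mtbf_hours):
    """Express an MTBF in years, the figure that is so easy to misread."""
    return mtbf_hours / HOURS_PER_YEAR

quoted_mtbf = 1_200_000                 # hours, typical enterprise drive
derated_mtbf = quoted_mtbf / 7.5        # rule-of-thumb derating from the text

print(f"quoted MTBF  = {mtbf_in_years(quoted_mtbf):.0f} years, "
      f"AFR = {afr_from_mtbf(quoted_mtbf):.2%}")
print(f"derated MTBF = {derated_mtbf:,.0f} hours, "
      f"AFR = {afr_from_mtbf(derated_mtbf):.2%}")
```

The derated value lands near the low end of the failure rates observed for older drives, which is the point of the rule of thumb.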

Hard disks are commodity products, and one manufacturer’s model is much like another’s, given similar specifications for spindle speed, hardware interface, and reliability. These days, you need a dedicated qualification laboratory to make fine distinctions among competing drives.

SSDs spread reads and writes across banks of flash memory cells, which are individually rather slow in comparison to modern hard disks. But because of parallelism, the SSD as a whole meets or exceeds the bandwidth of a traditional disk. The great strength of SSDs is that they continue to perform well when data is read or written at random, an access pattern that’s predominant in real-world use.

Storage device manufacturers like to quote sequential transfer rates for their products because the numbers are impressively high. But for traditional hard disks, these sequential numbers have almost no relationship to the throughput observed with random reads and writes. For example, Western Digital’s high-performance Velociraptor drives can achieve nearly 120 MB/s in sequential transfers, but their random read results are more on the order of 2 MB/s. By contrast, Intel’s current-generation SSDs stay above 30 MB/s for all access patterns.

This performance comes at a cost, however. Not only are SSDs more expensive per gigabyte of storage than are hard disks, but they also introduce several new wrinkles and uncertainties into the storage equation.

Each page of flash memory in an SSD (typically 4KiB on current products) can be rewritten only a limited number of times (usually about 100,000, depending on the underlying technology). To limit the wear on any given page, the SSD firmware maintains a mapping table and distributes writes across all the drive’s pages. This remapping is invisible to the operating system, which sees the drive as a linear series of blocks. Think of it as virtual memory for storage.

A further complication is that flash memory pages must be erased before they can be rewritten. Erasing is a separate operation that is slower than writing. It’s also impossible to erase individual pages—clusters of adjacent pages (typically 128 pages or 512KiB) must be erased together. The write performance of an SSD can drop substantially when the pool of pre-erased pages is exhausted and the drive must recover pages on the fly to service ongoing writes.

Rebuilding a buffer of erased pages is harder than it might seem because filesystems typically do not mark or erase data blocks they are no longer using. A storage device doesn’t know that the filesystem now considers a given block to be free; it only knows that long ago someone gave it data to store there. In order for an SSD to maintain its cache of pre-erased pages (and thus, its write performance), the filesystem has to be capable of informing the SSD that certain pages are no longer needed. As of this writing, ext4 and Windows 7’s NTFS are the only common filesystems that offer this feature. But given the enormous interest in SSDs, other filesystems are sure to become more SSD-aware in the near future.

Another touchy subject is alignment. The standard size for a disk block is 512 bytes, but that size is too small for filesystems to deal with efficiently.1 Filesystems manage the disk in terms of clusters of 1KiB to 8KiB in size, and a translation layer maps filesystem clusters into ranges of disk blocks for reads and writes.

On a hard disk, it makes no difference where a cluster begins or ends. But because SSDs can only read or write data in 4KiB pages (despite their emulation of a hard disk’s traditional 512-byte blocks), filesystem cluster boundaries and SSD page boundaries should coincide. You wouldn’t want a 4KiB logical cluster to correspond to half of one 4KiB SSD page and half of another; with that layout, the SSD might have to read or write twice as many physical pages as it should to service a given number of logical clusters.

Since filesystems usually count off their clusters starting at the beginning of whatever storage is allocated to them, the alignment issue can be finessed by aligning disk partitions to a power-of-2 boundary that is large in comparison with the likely size of SSD and filesystem pages (e.g., 64KiB). Unfortunately, the Windows MBR partitioning scheme that Linux has inherited does not make such alignment automatic. Check the block ranges that your partitioning tool assigns to make sure they are aligned, keeping in mind that the MBR itself consumes a block. (Windows 7 aligns partitions suitably for SSDs by default.)
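Checking alignment is simple arithmetic on a partition's starting block. The sketch below assumes 512-byte blocks and the 64KiB boundary suggested above; the function names are ours, and the sample starting sectors (63, a common traditional MBR starting point, and 2048) are illustrative.

```python
SECTOR_BYTES = 512

def is_aligned(start_sector, boundary_bytes=64 * 1024):
    """True if a partition starting at start_sector begins on the boundary."""
    return (start_sector * SECTOR_BYTES) % boundary_bytes == 0

def next_aligned_sector(start_sector, boundary_bytes=64 * 1024):
    """Round start_sector up to the next sector that sits on the boundary."""
    sectors_per_boundary = boundary_bytes // SECTOR_BYTES
    # Ceiling division, written with integer arithmetic only
    return -(-start_sector // sectors_per_boundary) * sectors_per_boundary

for start in (63, 128, 2048):
    status = "aligned" if is_aligned(start) else "misaligned"
    print(f"start sector {start}: {status}, "
          f"next aligned sector is {next_aligned_sector(start)}")
```

Read the starting sector from your partitioning tool's listing in sector units; values reported in cylinders hide the information you need.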

The theoretical limits on the rewritability of flash memory are probably less of an issue than they might initially seem. Just as a matter of arithmetic, you would have to stream 100 MB/s of data to a 150GB SSD for more than four continuous years to start running up against the rewrite limit. The more general question of long-term SSD reliability is as yet unanswered, however. SSDs are an immature product category, and early adopters should expect quirks.
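The rewrite-limit estimate can be reproduced as follows. This is a sketch under idealized assumptions: wear leveling is taken to be perfect, every page tolerates exactly the quoted 100,000 rewrites, and the function name is ours.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

def years_to_wear_out(capacity_gb, rewrite_cycles, write_mb_per_s):
    """Years of continuous writing needed to use up every page's rewrite budget."""
    total_writable_mb = capacity_gb * 1000 * rewrite_cycles
    seconds = total_writable_mb / write_mb_per_s
    return seconds / SECONDS_PER_YEAR

years = years_to_wear_out(capacity_gb=150, rewrite_cycles=100_000, write_mb_per_s=100)
print(f"about {years:.1f} years of nonstop writing")
```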

The controllers used inside SSDs are rapidly evolving, and there are currently marked differences in performance among manufacturers. The market should eventually converge to a standard architecture for these devices, but that day is still a year or two off. In the short term, careful shopping is essential.

Anand Shimpi’s March 2009 article on SSD technology is a superb introduction to the promise and perils of the SSD. It can be found at tinyurl.com/dexnbt.

These days, only a few interface standards are in common use. If a system supports several different interfaces, use the one that best meets your requirements for speed, redundancy, mobility, and price.

• ATA (Advanced Technology Attachment), known in earlier revisions as IDE, was developed as a simple, low-cost interface for PCs. It was originally called Integrated Drive Electronics because it put the hardware controller in the same box as the disk platters and used a relatively high-level protocol for communication between the computer and the disks. This is now the way that all hard disks work, but at the time it was something of an innovation.

The traditional parallel ATA interface (PATA) connected disks to the motherboard with a 40- or 80-conductor ribbon cable. This style of disk is nearly obsolete, but the installed base is enormous. PATA disks are often labeled as “IDE” to distinguish them from SATA drives (below), but they are true ATA drives. PATA disks are medium to fast in speed, generous in capacity, and unbelievably cheap.

• Serial ATA, SATA, is the successor to PATA. In addition to supporting much higher transfer rates (currently 3 Gb/s, with 6 Gb/s soon to arrive), SATA simplifies connectivity with tidier cabling and a longer maximum cable length. SATA has native support for hot-swapping and (optional) command queueing, two features that finally make ATA a viable alternative to SCSI in server environments.

• Though not as common as it once was, SCSI is one of the most widely supported disk interfaces. It comes in several flavors, all of which support multiple disks on a bus and various speeds and communication styles. SCSI is described in more detail on page 216.

Hard drive manufacturers typically reserve SCSI interfaces for their highest-performing and most rugged drives. You’ll pay more for these drives, but mostly because of the drive features rather than the interface.

• Fibre Channel is a serial interface that is popular in the enterprise environment thanks to its high bandwidth and to the large number of storage devices that can be attached to it at once. Fibre Channel devices connect with a fiber optic or twinaxial copper cable. Speeds range from roughly 1–40 Gb/s depending on the protocol revision.

Common topologies include loops, called Fibre Channel Arbitrated Loops (FC-AL), and fabrics, which are constructed with Fibre Channel switches. Fibre Channel can speak several different protocols, including SCSI and even IP. Devices are identified by a hardwired, 8-byte ID number (a “World Wide Name”) that’s similar to an Ethernet MAC address.

• The Universal Serial Bus (USB) and FireWire (IEEE1394) serial communication systems have become popular for connecting external hard disks. Current speeds are 480 Mb/s for USB and 800 Mb/s for FireWire; both systems are too slow to accommodate a fast disk streaming data at full speed. Upcoming revisions of both standards will offer more competitive speeds (up to 5 Gb/s with USB 3.0).

Hard disks never provide native USB or FireWire interfaces—SATA converters are built into the disk enclosures that feature these ports.

ATA and SCSI are by far the dominant players in the disk drive arena. They are the only interfaces we discuss in detail.

PATA (Parallel Advanced Technology Attachment), also called IDE, was designed to be simple and inexpensive. It is most often found on PCs or low-cost workstations. The original IDE became popular in the late 1980s. A succession of protocol revisions culminating in the current ATA-7 (also known as Ultra ATA/133) added direct memory access (DMA) modes, plug and play features, logical block addressing (LBA), power management, self-monitoring capabilities, and bus speeds up to 133 MB/s. Around the time of ATA-4, the ATA standard also merged with the ATA Packet Interface (ATAPI) protocol, which allows CD-ROM and tape drives to work on an IDE bus.

The PATA connector is a 40-pin header that connects the drive to the interface card with a clumsy ribbon cable. ATA standards beyond Ultra DMA/66 use an 80-conductor cable with more ground pins and therefore less electrical noise. Some nicer cables that are available bundle up the ribbon into a thick cable sleeve, tidying up the chassis and improving air flow. Power cabling for PATA uses a chunky 4-conductor Molex plug.

If a cable or drive is not keyed, be sure that pin 1 on the drive goes to pin 1 on the interface jack. Pin 1 is usually marked with a small “1” on one side of the connector. If it is not marked, a rule of thumb is that pin 1 is usually the one closest to the power connector. Pin 1 on a ribbon cable is usually marked in red. If there is no red stripe on one edge of your cable, just make sure you have the cable oriented so that pin 1 is connected to pin 1 and mark the cable with a red sharpie.

Most PCs have two PATA buses, each of which can host two devices. If you have more than one device on a PATA bus, you must designate one as the master and the other as the slave. A “cable select” jumper setting on modern drives (which is usually the default) lets the devices work out master vs. slave on their own. Occasionally, it does not work correctly and you must explicitly assign the master and slave roles.

No performance advantage accrues from being the master. Some older PATA drives do not like to be slaves, so if you are having trouble getting one configuration to work, try reversing the disks’ roles. If things are still not working out, try making each device the master of its own PATA bus.

Arbitration between master and slave devices on a PATA bus can be relatively slow. If possible, put each PATA drive on its own bus.

As data transfer rates for PATA drives increased, the standard’s disadvantages started to become obvious. Electromagnetic interference and other electrical issues caused reliability concerns at high speeds. Serial ATA, SATA, was invented to address these problems. It is now the predominant hardware interface for storage.

SATA smooths many of PATA’s sharp edges. It improves transfer rates (potentially to 600 MB/s with the upcoming 6 Gb/s SATA) and includes superior error checking. The standard supports hot-swapping, native command queuing, and sundry performance enhancements. SATA eliminates the need for master and slave designations because only a single device can be connected to each channel.
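When converting SATA line rates to byte rates, keep in mind that the serial link uses 8b/10b encoding, so each data byte occupies 10 bits on the wire. Dividing a 6 Gb/s line rate by 8 gives 750 MB/s, but the usable ceiling is lower. A minimal sketch, with a function name of our own choosing:

```python
def sata_payload_mb_per_s(line_rate_gbps):
    """Convert a SATA line rate in Gb/s to the maximum payload rate in MB/s."""
    bits_on_wire_per_byte = 10   # 8b/10b encoding: 8 data bits sent as 10 line bits
    return line_rate_gbps * 1000 / bits_on_wire_per_byte

for rate in (1.5, 3, 6):
    print(f"{rate} Gb/s SATA carries at most {sata_payload_mb_per_s(rate):.0f} MB/s")
```

Protocol overhead reduces real-world throughput further, so treat these figures as upper bounds.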

SATA overcomes the 18-inch cable limitation of PATA and introduces new data and power cable standards of 7 and 15 conductors, respectively.2 These cables are infinitely more flexible and easier to work with than their ribbon cable predecessors—no more curving and twisting to fit drives on the same cable. They do seem to be a bit more quality-sensitive than the old PATA ribbon cables, however. We have seen several of the cheap pack-in SATA cables that come with motherboards fail in actual use.3

SATA cables slide easily onto their mating connectors, but they can just as easily slide off. Cables with locking catches are available, but they’re a mixed blessing. On motherboards with six or eight SATA connectors packed together, it can be hard to disengage the locking connectors without a pair of needle-nosed pliers.

SATA also introduces an external cabling standard called eSATA. The cables are electrically identical to standard SATA, but the connectors are slightly different. You can add an eSATA port to a system that has only internal SATA connectors by installing an inexpensive converter bracket.

Be leery of external multidrive enclosures that have only a single eSATA port. Some of these are smart (RAID) enclosures that require a proprietary driver. (The drivers rarely support UNIX or Linux.) Others are dumb enclosures that have a SATA port multiplier built in. These are potentially usable on UNIX systems, but since not all SATA host adapters support port multipliers, pay close attention to the compatibility information. Enclosures with multiple eSATA ports, one per drive bay, are always safe.

SCSI, the Small Computer System Interface, defines a generic data pipe that can be used by all kinds of peripherals. In the past it was used for disks, tape drives, scanners, and printers, but these days most peripherals have abandoned SCSI in favor of USB.

Many flavors of SCSI interface have been defined since 1986, when SCSI-1 was first adopted as an ANSI standard. Traditional SCSI uses parallel cabling with 8 or 16 conductors.

Unfortunately, there has been no real rhyme or reason to the naming conventions for parallel SCSI. The terms “fast,” “wide,” and “ultra” were introduced at various times to mark significant developments, but as those features became standard, the descriptors vanished from the names. The nimble-sounding Ultra SCSI is in fact a 20 MB/s standard that no one would dream of using today, so it has had to give way to Ultra2, Ultra3, Ultra-320, and Ultra-640 SCSI. For the curious, the following regular expression matches all the various flavors of parallel SCSI:

(Fast(-Wide)?|Ultra(( Wide)?|2( Wide)?|3|-320|-640)?) SCSI|SCSI-[1-3]
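A pattern like this is easy to try out. The sketch below uses a form of the expression in which each space sits inside its optional group, so that a name such as “Ultra2 SCSI” matches; that spacing is our assumption about the intended names, and the sample names are illustrative.

```python
import re

# Spaces are placed inside the optional groups (an assumption about the
# intended spacing), so "Ultra2 SCSI" and "Ultra2 Wide SCSI" both match.
PARALLEL_SCSI = re.compile(
    r"(Fast(-Wide)?|Ultra(( Wide)?|2( Wide)?|3|-320|-640)?) SCSI|SCSI-[1-3]"
)

def is_parallel_scsi_name(name):
    """True if the whole string is one of the parallel SCSI flavor names."""
    return PARALLEL_SCSI.fullmatch(name) is not None

samples = ["Fast-Wide SCSI", "Ultra SCSI", "Ultra2 SCSI", "Ultra-320 SCSI",
           "SCSI-2", "Ultra4 SCSI", "Serial Attached SCSI"]
for name in samples:
    print(f"{name!r}: {is_parallel_scsi_name(name)}")
```

Using fullmatch rather than search keeps the pattern from accepting longer strings that merely contain a valid name.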

Many different connectors have been used as well. They vary depending on the version of SCSI, the type of connection (internal or external), and the number of data bits sent at once. Exhibit A shows pictures of some common ones. Each connector is shown from the front, as if you were about to plug it into your forehead.

Exhibit A Parallel SCSI connectors (front view, male except where noted)

The only one of these connectors still being manufactured today is the SCA-2, which is an 80-pin connector that includes both power and bus connections.

Each end of a parallel SCSI bus must have a terminating resistor (“terminator”). These resistors absorb signals as they reach the end of the bus and prevent noise from reflecting back onto the bus. Terminators take several forms, from small external plugs that you snap onto a regular port to sets of tiny resistor packs that install onto a device’s circuit boards. Most modern devices are autoterminating.

If you experience seemingly random hardware problems on your SCSI bus, first check that both ends of the bus are properly terminated. Improper termination is one of the most common SCSI configuration mistakes on old SCSI systems, and the errors it produces can be obscure and intermittent.

Parallel SCSI buses use a daisy chain configuration, so most external devices have two SCSI ports.4 The ports are identical and interchangeable—either one can be the input. Internal SCSI devices (including those with SCA-2 connectors) are attached to a ribbon cable, so only one port is needed on the device.

Each device has a SCSI address or “target number” that distinguishes it from the other devices on the bus. Target numbers start at 0 and go up to 7 or 15, depending on whether the bus is narrow or wide. The SCSI controller itself counts as a device and is usually target 7. All other devices must have their target numbers set to unique values. It is a common error to forget that the SCSI controller has a target number and to set a device to the same target number as the controller.
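These addressing rules are simple enough to check mechanically before you power up a bus. The sketch below is a hypothetical helper of our own, not part of any SCSI tool; it assumes the controller sits at target 7 unless told otherwise.

```python
def check_targets(device_targets, wide=False, controller_target=7):
    """Return a list of problems with a proposed set of SCSI target numbers."""
    max_target = 15 if wide else 7      # narrow buses allow 0-7, wide buses 0-15
    problems = []
    seen = set()
    for target in device_targets:
        if not 0 <= target <= max_target:
            problems.append(f"target {target} is outside 0-{max_target}")
        elif target == controller_target:
            problems.append(f"target {target} collides with the controller")
        elif target in seen:
            problems.append(f"target {target} is assigned more than once")
        seen.add(target)
    return problems

print(check_targets([0, 1, 7]))               # a device set to the controller's number
print(check_targets([0, 3, 3]))               # two devices sharing a target
print(check_targets([0, 1, 8], wide=True))    # fine on a wide bus
```

An empty list means the proposed assignment satisfies the rules described above; it says nothing about termination or cabling.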

If you’re lucky, a device will have an external thumbwheel with which the target number can be set. Other common ways of setting the target number are DIP switches and jumpers. If it is not obvious how to set the target number on a device, look up the hardware manual on the web.

The SCSI standard supports a form of subaddressing called a “logical unit number.” Each target can have several logical units inside it. A plausible example is a drive array with several disks but only one SCSI controller. If a SCSI device contains only one logical unit, the LUN usually defaults to 0.

The use of logical unit numbers is generally confined to large drive arrays. When you hear “SCSI unit number,” you should assume that it is really a target number that’s being discussed until proven otherwise.

From the perspective of a sysadmin dealing with legacy SCSI hardware, here are the important points to keep in mind:

• Don’t worry about the exact SCSI versions a device claims to support; look at the connectors. If two SCSI devices have the same connectors, they are compatible. That doesn’t necessarily mean that they can achieve the same speeds, however. Communication will occur at the speed of the slower device.

• Even if the connectors are different, the devices can still be made compatible with an adapter if both connectors have the same number of pins.

• Many older workstations have internal SCSI devices such as tape and floppy drives. Check the listing of current devices before you reboot to add a new device.

• After you have added a new SCSI device, check the listing of devices discovered by the kernel when it reboots to make sure that everything you expect is there. Most SCSI drivers do not detect multiple devices that have the same SCSI address (an illegal configuration). SCSI address conflicts lead to strange behavior.

• If you see flaky behavior, check for a target number conflict or a problem with bus termination.

• Remember that your SCSI controller uses one of the SCSI addresses.
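The addressing rules above are easy to check mechanically before you power up a chain. The following Python sketch is only an illustration: the function name and the device labels are hypothetical, and it assumes that the controller sits at target 7, which is usual but not guaranteed.

```python
from collections import defaultdict


def check_scsi_targets(devices, wide=False, controller_target=7):
    """Return a list of problems found in a proposed target assignment.

    devices maps a (hypothetical) device label to its target number.
    Narrow buses allow targets 0-7; wide buses allow 0-15.
    """
    max_target = 15 if wide else 7
    by_target = defaultdict(list)
    # The controller occupies an address too, so include it in the tally.
    by_target[controller_target].append("controller")
    problems = []
    for name, target in sorted(devices.items()):
        if not 0 <= target <= max_target:
            problems.append(f"{name}: target {target} is outside 0-{max_target}")
        by_target[target].append(name)
    for target, names in sorted(by_target.items()):
        if len(names) > 1:
            problems.append(f"target {target} is shared by {', '.join(names)}")
    return problems


if __name__ == "__main__":
    # A tape drive set to the controller's address, and a disk whose
    # target number is legal only on a wide bus.
    for line in check_scsi_targets({"disk0": 0, "tape0": 7, "disk1": 9}):
        print(line)
```

An empty list means the assignment is at least self-consistent; it says nothing about termination or cable length.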

As in the PATA world, parallel SCSI is giving way to Serial Attached SCSI (SAS), the SCSI analog of SATA. From the hardware perspective, SAS improves just about every aspect of traditional parallel SCSI.

• Chained buses are passé. Like SATA, SAS is a point-to-point system. SAS allows the use of “expanders” to connect multiple devices to a single host port. They’re analogous to SATA port multipliers, but whereas support for port multipliers is hit or miss, expanders are always supported.

• SAS does not use terminators.

• SCSI target IDs are no longer used. Instead, each SAS device has a Fibre-Channel-style 64-bit World Wide Name (WWN) assigned by the manufacturer. It’s analogous to an Ethernet MAC address.

• The number of devices in a SCSI bus (“SAS domain,” really) is no longer limited to 8 or 16. Up to 16,384 devices can be connected.

SAS currently operates at 3 Gb/s, but speeds are scheduled to increase to 6 Gb/s and then to 12 Gb/s by 2012.

In past editions of this book, SCSI was the obvious interface choice for server applications. It offered the highest available bandwidth, out-of-order command execution (aka tagged command queueing), lower CPU utilization, easier handling of large numbers of storage devices, and access to the market’s most advanced hard drives.

The advent of SATA has removed or minimized most of these advantages, so SCSI simply does not deliver the bang for the buck that it used to. SATA drives compete with (and in some cases, outperform) equivalent SCSI disks in nearly every category. At the same time, both SATA devices and the interfaces and cabling used to connect them are cheaper and far more widely available.

SCSI still holds a few trump cards:

• Manufacturers continue to use the SATA/SCSI divide to stratify the storage market. To help justify premium pricing, the fastest and most reliable drives are still available with only SCSI interfaces.

• SATA is limited to a queue depth of 32 pending operations. SCSI can handle thousands.

• SAS can handle many storage devices (hundreds or thousands) on a single host interface. But keep in mind that all those devices share a single pipe to the host; you are still limited to 3 Gb/s of aggregate bandwidth.
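To put the shared-pipe caveat in numbers, here is a back-of-the-envelope calculation in Python. It assumes a single 3 Gb/s link, 8b/10b line encoding (10 bits on the wire for every byte of data), and an even split among drives that are all streaming at once. The function names are ours, and the figures are rough estimates rather than measurements.

```python
def usable_mb_per_s(link_gbps, bits_per_byte_on_wire=10):
    """Approximate payload bandwidth of a serial link in MB/s."""
    return link_gbps * 1000 / bits_per_byte_on_wire


def per_drive_share(link_gbps, active_drives):
    """Bandwidth each drive gets if all stream at once and share evenly."""
    return usable_mb_per_s(link_gbps) / active_drives


if __name__ == "__main__":
    link = 3  # Gb/s, the single host link assumed in the text
    print(f"usable: {usable_mb_per_s(link):.0f} MB/s")
    for n in (1, 3, 12, 100):
        print(f"{n:3d} drives streaming: {per_drive_share(link, n):6.1f} MB/s each")
```

By this estimate, a handful of busy drives that can each sustain about 100 MB/s is enough to saturate the link. Beyond that point, adding drives adds capacity but not throughput.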

The SAS vs. SATA debate may ultimately be moot because the SAS standard includes support for SATA drives. SAS and SATA connectors are similar enough that a single SAS backplane can accommodate drives of either type. At the logical layer, SATA commands are simply tunneled over the SAS bus.

This convergence is an amazing technical feat, but the economic argument for it is less clear. The expense of a SAS installation is mostly in the host adapter, backplane, and infrastructure; the SAS drives themselves aren’t outrageously priced. Once you’ve invested in a SAS setup, you might as well stick with SAS from end to end. (On the other hand, perhaps the modest price premiums for SAS drives are a result of the fact that SATA drives can easily be substituted for them.)

If you’re used to plugging in a disk and having your Windows system ask if you want to format it, you may be a bit taken aback by the apparent complexity of storage management on UNIX and Linux systems. Why is it all so complicated?

To begin with, much of the complexity is optional. On some systems, you can log in to your system’s desktop, connect that same USB drive, and have much the same experience as on Windows. You’ll get a simple setup for personal data storage. If that’s all you need, you’re good to go.

As usual in this book, we’re primarily interested in enterprise-class storage systems: filesystems that are accessed by many users (both local and remote) and that are reliable, high-performance, easy to back up, and easy to adapt to future needs. These systems require a bit more thought, and UNIX and Linux give you plenty to think about.

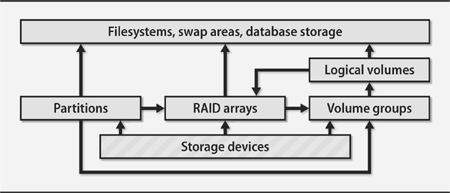

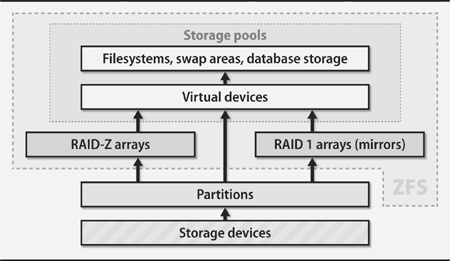

Exhibit B shows a typical set of software components that can mediate between a raw storage device and its end users. The specific architecture shown in Exhibit B is for Linux, but our other example systems include similar features, although not necessarily in the same packages.

The arrows in Exhibit B mean “can be built on.” For example, a Linux filesystem can be built on top of a partition, a RAID array, or a logical volume. It’s up to the administrator to construct a stack of modules that connect each storage device to its final application.

Sharp-eyed readers will note that the graph has a cycle, but real-world configurations do not loop. Linux allows RAID and logical volumes to be stacked in either order, but neither component should be used more than once (though it is technically possible to do this).

Here’s what the pieces in Exhibit B represent:

• A storage device is anything that looks like a disk. It can be a hard disk, a flash drive, an SSD, an external RAID array implemented in hardware, or even a network service that provides block-level access to a remote device. The exact hardware doesn’t matter, as long as the device allows random access, handles block I/O, and is represented by a device file.

Exhibit B Storage management layers

• A partition is a fixed-size subsection of a storage device. Each partition has its own device file and acts much like an independent storage device. For efficiency, the same driver that handles the underlying device usually implements partitioning. Most partitioning schemes consume a few blocks at the start of the device to record the ranges of blocks that make up each partition.

Partitioning is becoming something of a vestigial feature. Linux and Solaris drag it along primarily for compatibility with Windows-partitioned disks. HP-UX and AIX have largely done away with it in favor of logical volume management, though it’s still needed on Itanium-based HP-UX systems.

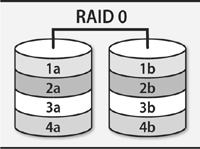

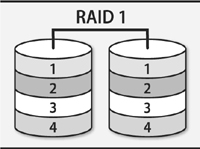

• A RAID array (a redundant array of inexpensive/independent disks) combines multiple storage devices into one virtualized device. Depending on how you set up the array, this configuration can increase performance (by reading or writing disks in parallel), increase reliability (by duplicating or parity-checking data across multiple disks), or both. RAID can be implemented by the operating system or by various types of hardware.

As the name suggests, RAID is typically conceived of as an aggregation of bare drives, but modern implementations let you use anything that acts like a disk as a component of a RAID array.

• Volume groups and logical volumes are associated with logical volume managers (LVMs). These systems aggregate physical devices to form pools of storage called volume groups. The administrator can then subdivide this pool into logical volumes in much the same way that disks of yore were divided into partitions. For example, a 750GB disk and a 250GB disk could be aggregated into a 1TB volume group and then split into two 500GB logical volumes. At least one volume would include data blocks from both hard disks.

Since the LVM adds a layer of indirection between logical and physical blocks, it can freeze the logical state of a volume simply by making a copy of the mapping table. Therefore, logical volume managers often provide some kind of a “snapshot” feature. Writes to the volume are then directed to new blocks, and the LVM keeps both the old and new mapping tables. Of course, the LVM has to store both the original image and all modified blocks, so it can eventually run out of space if a snapshot is never deleted.

• A filesystem mediates between the raw bag of blocks presented by a partition, RAID array, or logical volume and the standard filesystem interface expected by programs: paths such as /var/spool/mail, UNIX file types, UNIX permissions, etc. The filesystem determines where and how the contents of files are stored, how the filesystem namespace is represented and searched on disk, and how the system is made resistant to (or recoverable from) corruption.

Most storage space ends up as part of a filesystem, but swap space and database storage can potentially be slightly more efficient without “help” from a filesystem. The kernel or database imposes its own structure on the storage, rendering the filesystem unnecessary.
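The volume group and snapshot ideas described above can be made concrete with a toy model. The Python sketch below is not how any real logical volume manager is implemented; the class names, the 1GB extent size, and the first-fit allocation policy are all assumptions made for illustration. It pools the 750GB and 250GB disks from the example, carves out two 500GB volumes, and takes a snapshot by copying a mapping table.

```python
class VolumeGroup:
    """A pool of fixed-size (1GB) extents drawn from several disks."""

    def __init__(self, disks_gb):
        # One (disk, index) entry per free extent, in disk order.
        self.free = [(disk, i) for disk, size in disks_gb.items()
                     for i in range(size)]
        self.storage = {}  # physical extent -> contents (stand-in for the media)

    def create_volume(self, size_gb):
        if size_gb > len(self.free):
            raise ValueError("not enough free extents in the volume group")
        extents, self.free = self.free[:size_gb], self.free[size_gb:]
        return LogicalVolume(self, extents)

    def allocate_extent(self):
        if not self.free:
            raise RuntimeError("volume group is full")
        return self.free.pop(0)


class LogicalVolume:
    def __init__(self, group, extents):
        self.group = group
        self.mapping = list(extents)  # logical extent number -> physical extent
        self.snapshots = []           # frozen copies of the mapping table

    def disks_used(self):
        return sorted({disk for disk, _ in self.mapping})

    def snapshot(self):
        frozen = tuple(self.mapping)  # copying the table freezes the volume
        self.snapshots.append(frozen)
        return frozen

    def write(self, logical, contents):
        physical = self.mapping[logical]
        if any(snap[logical] == physical for snap in self.snapshots):
            # A snapshot still needs the old extent, so redirect the write.
            physical = self.group.allocate_extent()
            self.mapping[logical] = physical
        self.group.storage[physical] = contents

    def read(self, logical, mapping=None):
        table = self.mapping if mapping is None else mapping
        return self.group.storage.get(table[logical])


if __name__ == "__main__":
    vg = VolumeGroup({"diskA": 750, "diskB": 250})
    first, second = vg.create_volume(500), vg.create_volume(500)
    print(first.disks_used(), second.disks_used())

    small = VolumeGroup({"diskC": 4})
    lv = small.create_volume(2)
    lv.write(0, "old")
    snap = lv.snapshot()
    lv.write(0, "new")
    print(lv.read(0), lv.read(0, snap), len(small.free))
```

In this model the second 500GB volume necessarily spans both disks, and every post-snapshot write to a frozen extent consumes a free extent from the pool, which is why a snapshot that is never deleted can eventually exhaust the volume group.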

If it seems to you that this system has a few too many little components that simply implement one block storage device in terms of another, you’re in good company. The trend over the last few years has been toward consolidating these components to increase efficiency and remove duplication. Although logical volume managers did not originally function as RAID controllers, most have absorbed some RAID-like features (notably, striping and mirroring). As administrators get comfortable with logical volume management, partitions are disappearing, too.

On the cutting edge today are systems that combine a filesystem, a RAID controller, and an LVM system all in one tightly integrated package. Sun’s ZFS filesystem is the leading example, but the Btrfs filesystem in development for Linux has similar design goals. We have more to say about ZFS on page 264.

Most setups are relatively simple. Exhibit C illustrates a traditional partitions-and-filesystems schema as it might be found on a couple of data disks on a Linux system. (The boot disk is not shown.) Substitute logical volumes for partitions and the setup is similar on other systems.

In the next sections, we look in more detail at the steps involved in various phases of storage configuration: device wrangling, partitioning, RAID, logical volume management, and the installation of a filesystem. Finally, we double back to cover ZFS and storage area networking.

Exhibit C Traditional data disk partitioning scheme (Linux device names)

The way a disk is attached to the system depends on the interface that is used. The rest is all mounting brackets and cabling. Fortunately, SAS and SATA connections are virtually idiot-proof.

For parallel SCSI, double-check that you have terminated both ends of the SCSI bus, that the cable length is less than the maximum appropriate for the SCSI variant you are using, and that the new SCSI target number does not conflict with the controller or another device on the bus.

Even on hot-pluggable interfaces, it’s conservative to shut the system down before making hardware changes. Some older systems such as AIX default to doing device configuration only at boot time, so the fact that the hardware is hot-pluggable may not translate into immediate visibility at the OS level. In the case of SATA interfaces, hot-pluggability is an implementation option. Some host adapters don’t support it.

After you install a new disk, check to make sure that the system acknowledges its existence at the lowest possible level. On a PC this is easy: the BIOS shows you IDE and SATA disks, and most SCSI cards have their own setup screen that you can invoke before the system boots.

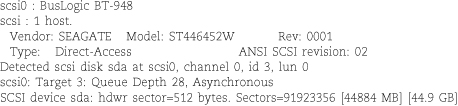

On other types of hardware, you may have to let the system boot and check the diagnostic output from the kernel as it probes for devices. For example, one of our test systems showed the following messages for an older SCSI disk attached to a BusLogic SCSI host adapter.

You may be able to review this information after the system has finished booting by looking in your system log files. See the material starting on page 352 for more information about the handling of boot-time messages from the kernel.

A newly added disk is represented by device files in /dev. See page 150 for general information about device files.

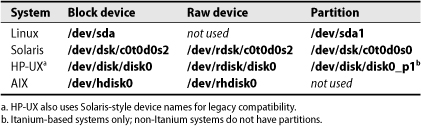

All our example systems create these files for you automatically, but you still need to know where to look for the device files and how to identify the ones that correspond to your new device. Formatting the wrong disk device file is a rapid route to disaster. Table 8.2 summarizes the device naming conventions for disks on our example systems. Instead of showing the abstract pattern according to which devices are named, Table 8.2 simply shows a typical example for the name of the system’s first disk.

Table 8.2. Device naming standard for disks

The block and raw device columns show the path for the disk as a whole, and the partition column shows the path for an example partition.

Linux disk names are assigned in sequence as the kernel enumerates the various interfaces and devices on the system. Adding a disk can cause existing disks to change their names. In fact, even rebooting the system can cause name changes.

Never make changes without verifying the identity of the disk you’re working on, even on a stable system.

Linux provides a couple of ways around the “dancing names” issue. Subdirectories under /dev/disk list disks by various stable characteristics such as their manufacturer ID or connection information. These device names (which are really just links back to /dev/sd*) are stable, but they’re long and awkward.
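If you want to see which short name a stable link currently points to, resolving the symbolic links is enough. The Python sketch below assumes a udev-based distribution on which /dev/disk/by-id is one of the subdirectories in question; the function name is our own, and the directory is a parameter so that you can point it at by-path or any other subdirectory instead.

```python
import os


def resolve_stable_names(directory="/dev/disk/by-id"):
    """Map each symlink in directory to the device file it points at."""
    names = {}
    for entry in sorted(os.listdir(directory)):
        path = os.path.join(directory, entry)
        if os.path.islink(path):
            names[entry] = os.path.realpath(path)
    return names


if __name__ == "__main__":
    # Only meaningful on a system that actually has the directory.
    if os.path.isdir("/dev/disk/by-id"):
        for name, device in resolve_stable_names().items():
            print(f"{name} -> {device}")
```

Running this before and after a hardware change shows which short names have moved while the long names stayed put.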

At the level of filesystems and disk arrays, Linux uses unique ID strings to persistently identify objects. In many cases, the existence of these long IDs is cleverly concealed so that you don’t have to deal with them directly.

Linux doesn’t have raw device files for disks or disk partitions, so just use the block device wherever you might be accustomed to specifying a raw device.

parted -l lists the sizes, partition tables, model numbers, and manufacturers of every disk on the system.

Solaris disk device names are of the form /dev/[r]dsk/cWtXdYsZ, where W is the controller number, X is the SCSI target number, Y is the SCSI logical unit number (or LUN, almost always 0), and Z is the partition (slice) number. There are a couple of subtleties: ATA drives show up as cWdYsZ (with no t clause), and disks can have a series of DOS-style partitions, signified by pZ, as well as the Solaris-style slices denoted by sZ.

These device files are actually just symbolic links into the /devices tree, where the real device files live. More generally, Solaris makes an effort to give continuity to device names, even in the face of hardware changes. Once a disk has shown up under a given name, it can generally be found at that name in the future unless you switch controllers or SCSI target IDs.

By convention, slice 2 represents the complete, unpartitioned disk. Unlike Linux, Solaris gives you device files for every possible slice and partition, whether or not those slices and partitions actually exist. Solaris also supports overlapping partitions, but that’s just crazy talk. Oracle may as well ship every Solaris system with a loaded gun.
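The Solaris naming pattern is regular enough to pull apart with a regular expression, which can be handy in scripts that need to double-check which controller and target a path refers to. The following Python sketch follows the cWtXdYsZ description given above; the function name and the dictionary keys are our own invention, not part of any Solaris tool.

```python
import re

SOLARIS_DISK = re.compile(
    r"^/dev/(?P<raw>r?)dsk/"
    r"c(?P<controller>\d+)"
    r"(?:t(?P<target>\d+))?"          # the t clause is absent for ATA drives
    r"d(?P<lun>\d+)"
    r"(?P<kind>[sp])(?P<index>\d+)$"  # s = Solaris slice, p = DOS-style partition
)


def parse_solaris_disk(path):
    """Split a Solaris disk device path into its numbered components."""
    m = SOLARIS_DISK.match(path)
    if m is None:
        raise ValueError(f"not a Solaris disk device path: {path}")
    fields = m.groupdict()
    return {
        "raw": fields["raw"] == "r",
        "controller": int(fields["controller"]),
        "target": None if fields["target"] is None else int(fields["target"]),
        "lun": int(fields["lun"]),
        "kind": "slice" if fields["kind"] == "s" else "partition",
        "index": int(fields["index"]),
    }


if __name__ == "__main__":
    print(parse_solaris_disk("/dev/dsk/c0t3d0s2"))
    print(parse_solaris_disk("/dev/rdsk/c1d0p1"))
```

A result with kind "slice" and index 2 refers, by the convention just mentioned, to the disk as a whole.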

Hot-plugging should work fine on Solaris. When you add a new disk, devfsadmd should detect it and create the appropriate device files for you. If need be, you can run devfsadm by hand.

HP-UX has traditionally used disk device names patterned after those of Solaris, which record a lot of hardware-specific information in the device path. As of HP-UX 11i v3, however, those pathnames have been deprecated in favor of “agile addresses” of the form /dev/disk/disk1. The latter paths are stable and do not change with the details of the system’s hardware configuration.

Before you boot UNIX, you can obtain a listing of the system’s SCSI devices from the PROM monitor. Unfortunately, the exact way in which this is done varies among machines. After you boot, you can list disks by running ioscan.

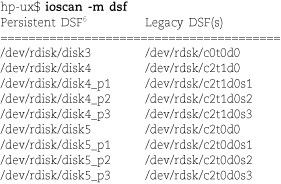

The old-style device names are still around in the /dev/dsk and /dev/rdsk directories, and you can continue to use them if you wish, at least for now. Run ioscan -m dsf to see the current mapping between old- and new-style device names.

Note that partitions are now abbreviated p rather than s, the Solaris abbreviation (for “slice”). Unlike Solaris, HP-UX uses names such as disk3 with no partition suffix to represent the entire disk. On Solaris systems, partition 2 represents the whole disk; on HP-UX, it’s just another partition.

The system from which this example comes is Itanium-based and so has disk partitions. Other HP systems use logical volume management instead of partitioning.

AIX’s /dev/hdiskX and /dev/rhdiskX paths are refreshingly simple. Disk names are unfortunately subject to change when the hardware configuration changes. However, most AIX disks will be under logical volume management, so the hardware device names are not that important. The logical volume manager writes a unique ID to each disk as part of the process of inducting it into a volume group. This labeling allows the system to sort out the disks automatically, so changes in device names are less troublesome than they might be on other systems.

You can run lsdev -C -c disk to see a list of the disks the system is aware of.

All hard disks come preformatted, and the factory formatting is at least as good as any formatting you can do in the field. It is best to avoid doing a low-level format if it’s not required. Don’t reformat new drives as a matter of course.

If you encounter read or write errors on a disk, first check for cabling, termination, and address problems, all of which can cause symptoms similar to those of a bad block. If after this procedure you are still convinced that the disk has defects, you might be better off replacing it with a new one rather than waiting long hours for a format to complete and hoping the problem doesn’t come back.

The formatting process writes address information and timing marks on the platters to delineate each sector. It also identifies bad blocks, imperfections in the media that result in areas that cannot be reliably read or written. All modern disks have bad block management built in, so neither you nor the driver need to worry about managing defects. The drive firmware substitutes known-good blocks from an area of backup storage on the disk that is reserved for this purpose.

Bad blocks that manifest themselves after a disk has been formatted may or may not be handled automatically. If the drive believes that the affected data can be reliably reconstructed, the newly discovered defect may be mapped out on the fly and the data rewritten to a new location. For more serious or less clearly recoverable errors, the drive aborts the read or write operation and reports the error back to the host operating system.

ATA disks are usually not designed to be formatted outside the factory. However, you may be able to obtain formatting software from the manufacturer, usually for Windows. Make sure the software matches the drive you plan to format and follow the manufacturer’s directions carefully.

SCSI disks format themselves in response to a standard command that you send from the host computer. The procedure for sending this command varies from system to system. On PCs, you can often send the command from the SCSI controller’s BIOS. To issue the SCSI format command from within the operating system, use the sg_format command on Linux, the format command on Solaris, and the mediainit command on HP-UX.

Various utilities let you verify the integrity of a disk by writing random patterns to it and then reading them back. Thorough tests take a long time (hours) and unfortunately seem to be of little prognostic value. Unless you suspect that a disk is bad and are unable to simply replace it (or you bill by the hour), you should skip these tests. Barring that, let the tests run overnight. Don’t be concerned about “wearing out” a disk with overuse or aggressive testing. Enterprise-class disks are designed for constant activity.

Since 2000, PATA and SATA disks have implemented a “secure erase” command that overwrites the data on the disk by using a method the manufacturer has determined to be secure against recovery efforts. Secure erase is NIST-certified for most needs. Under the U.S. Department of Defense categorization, it’s approved for use at security levels less than “secret.”

Why is this feature even needed? First, filesystems generally do no erasing of their own, so an rm -rf * of a disk’s data leaves everything intact and recoverable with software tools. It’s critically important to remember this fact when disposing of disks, whether their destination is eBay or the trash.

Second, even a manual rewrite of every sector on a disk may leave magnetic traces that are recoverable by a determined attacker with access to a laboratory. Secure erase performs as many overwrites as are needed to eliminate these shadow signals. Magnetic remnants won’t be a serious concern for most sites, but it’s always nice to know that you’re not exporting your organization’s confidential data to the world at large.

Finally, secure erase has the effect of resetting SSDs to their fully erased state. This reset may improve performance in cases in which the ATA TRIM command (the command to erase a block) cannot be issued, either because the filesystem used on the SSD does not know to issue it or because the SSD is connected through a host adapter or RAID interface that does not propagate TRIM.

Unfortunately, UNIX support for sending the secure erase command remains elusive. At this point, your best bet is to reconnect drives to a Windows or Linux system for erasure. DOS software for secure erasing can be found at the Center for Magnetic Recording Research at tinyurl.com/2xoqqw. The MHDD utility also supports secure erase through its fasterase command; see tinyurl.com/2g6r98.

Under Linux, you can use the hdparm command:

There is no analog in the SCSI world to ATA’s secure erase command, but the SCSI “format unit” command described under Formatting and bad block management on page 226 is a reasonable alternative. Another option is to zero out a drive’s sectors with dd if=/dev/zero of=diskdevice bs=8k.

Many systems have a shred utility that attempts to securely erase the contents of individual files. Unfortunately, it relies on the assumption that a file’s blocks can be overwritten in place. This assumption is invalid in so many circumstances (any filesystem on any SSD, any logical volume that has snapshots, perhaps generally on ZFS) that shred’s general utility is questionable.

For sanitizing an entire PC system at once, another option is Darik’s Boot and Nuke (dban.org). This tool runs from its own boot disk, so it’s not a tool you’ll use every day. It is quite handy for decommissioning old hardware, however.

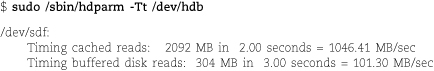

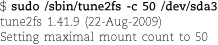

Linux’s hdparm command can do more than just send secure erase commands. It’s a general way to interact with the firmware of SATA, IDE, and SAS hard disks. Among other things, hdparm can set drive power options, enable or disable noise reduction options, set the read-only flag, and print detailed drive information. A few of the options work on SCSI drives, too (under current Linux kernels).

The syntax is

hdparm [options] device

Scores of options are available, but most are of interest only to driver and kernel developers. Table 8.3 shows a few that are relevant to administrators.

Table 8.3. Useful hdparm options for system administrators

Use hdparm -I to verify that each drive is using the fastest possible DMA transfer mode. hdparm lists all the disk’s supported modes and marks the currently active mode with a star, as shown in the example below.

On any modern system, the optimal DMA mode should be selected by default; if this is not the case, check the BIOS and kernel logs for relevant information to determine why not.

Many drives offer acoustic management, which slows down the motion of the read/write head to attenuate the ticking or pinging sounds it makes. Drives that support acoustic management usually come with the feature turned on, but that’s probably not what you want for production drives that live in a server room. Disable this feature with hdparm -M 254.

Most power consumed by hard disks goes to keep the platters spinning. If you have disks that see only occasional use and you can afford to delay access by 20 seconds or so as the motors are restarted, run hdparm -S to turn on the disks’ internal power management feature. The argument to -S sets the idle time after which the drive enters standby mode and turns off the motor. It’s a one-byte value, so the encoding is somewhat nonlinear. For example, values between 1 and 240 are in multiples of 5 seconds, and values from 241 to 251 are in units of 30 minutes. hdparm shows you its interpretation of the value when you run it; it’s faster to guess, adjust, and repeat than to look up the detailed coding rules.
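If you would rather not guess, the two ranges just described are simple to decode. The Python sketch below covers only those ranges; the function name is our own, and values outside 1 to 251 have special meanings that we leave to the hdparm man page rather than reproduce here.

```python
def standby_timeout_seconds(value):
    """Decode an hdparm -S argument into seconds.

    Returns None for values outside the two ranges described in the text;
    those have special meanings documented in the hdparm man page.
    """
    if not 0 <= value <= 255:
        raise ValueError("the -S argument must fit in one byte (0-255)")
    if 1 <= value <= 240:
        return value * 5              # multiples of 5 seconds
    if 241 <= value <= 251:
        return (value - 240) * 1800   # units of 30 minutes
    return None


if __name__ == "__main__":
    for v in (12, 120, 240, 241, 251):
        print(v, standby_timeout_seconds(v))
```

For example, a value of 120 corresponds to a 10-minute idle timeout, and 241 is the smallest value that yields a 30-minute timeout.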

hdparm includes a simple drive performance test to help evaluate the impact of configuration changes. The -T option reads from the drive’s cache and indicates the speed of data transfer on the bus, independent of throughput from the physical disk media. The -t option reads from the physical platters. As you might expect, physical reads are a lot slower.

100 MB/s or so is about the limit of today’s mass-market 1TB drives, so these results (and the information shown by hdparm -I above) confirm that the drive is correctly configured.

Hard disks are fault-tolerant systems that use error-correction coding and intelligent firmware to hide their imperfections from the host operating system. In some cases, an uncorrectable error that the drive is forced to report to the OS is merely the latest event in a long crescendo of correctable but inauspicious problems. It would be nice to know about those omens before the crisis occurs.

ATA devices, including SATA drives, implement a detailed form of status reporting that is sometimes predictive of drive failures. This standard, called SMART for “self-monitoring, analysis, and reporting technology,” exposes more than 50 operational parameters for investigation by the host computer.

The Google disk drive study mentioned on page 211 has been widely summarized in media reports as concluding that SMART data is not predictive of drive failure. That summary is not accurate. In fact, Google found that four SMART parameters were highly predictive of failure but that failure was not consistently preceded by changes in SMART values. Of failed drives in the study, 56% showed no change in the four most predictive parameters. On the other hand, predicting nearly half of failures sounds pretty good to us!

Those four sensitive SMART parameters are scan error count, reallocation count, off-line reallocation count, and number of sectors “on probation.” Those values should all be zero. A nonzero value in these fields raises the likelihood of failure within 60 days by a factor of 39, 14, 21, or 16, respectively.
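The rule of thumb for these four parameters is simple enough to express in a few lines. The Python sketch below assumes that you have already extracted the four counts from your drives by some means; the dictionary keys are hypothetical labels of our own and do not correspond to the attribute names that any particular drive or tool reports. The risk factors are the ones quoted above.

```python
# Factor by which a nonzero count raises the likelihood of failure
# within 60 days, as quoted in the text.
SENSITIVE_PARAMETERS = {
    "scan_errors": 39,
    "reallocations": 14,
    "offline_reallocations": 21,
    "probational_sectors": 16,
}


def smart_warnings(readings):
    """Return (parameter, count, risk_factor) for each nonzero sensitive value."""
    return [
        (name, readings[name], factor)
        for name, factor in SENSITIVE_PARAMETERS.items()
        if readings.get(name, 0) > 0
    ]


if __name__ == "__main__":
    sample = {"scan_errors": 2, "reallocations": 0, "offline_reallocations": 5}
    for name, count, factor in smart_warnings(sample):
        print(f"{name} = {count}: failure risk up by a factor of {factor}")
```

An empty result is not a clean bill of health, since many drives fail without any change in these values.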

To take advantage of SMART data, you need software that queries your drives to obtain it and then judges whether the current readings are sufficiently ominous to warrant administrator notification. Unfortunately, reporting standards vary by drive manufacturer, so decoding isn’t necessarily straightforward. Most SMART monitors collect baseline data and then look for sudden changes in the “bad” direction rather than interpreting absolute values. (According to the Google study, taking account of these “soft” SMART indicators in addition to the Big Four predicts 64% of all failures.)

The standard software for SMART wrangling on UNIX and Linux systems is the smartmontools package from smartmontools.sourceforge.net. It’s installed by default on SUSE and Red Hat systems; on Ubuntu, you’ll have to run apt-get install smartmontools. The package does run on Solaris systems if you build it from the source code.

The smartmontools package consists of a smartd daemon that monitors drives continuously and a smartctl command you can use for interactive queries or for scripting. The daemon has a single configuration file, normally /etc/smartd.conf, which is extensively commented and includes plenty of examples.

SCSI has its own system for out-of-band status reporting, but unfortunately the standard is much less granular in this respect than is SMART. The smartmontools attempt to include SCSI devices in their schema, but the predictive value of the SCSI data is less clear.

Partitioning and logical volume management are both ways of dividing up a disk (or pool of disks, in the case of LVM) into separate chunks of known size. All our example systems support logical volume management, but only Linux, Solaris, and sometimes HP-UX allow traditional partitioning.

You can put individual partitions under the control of a logical volume manager, but you can’t partition a logical volume. Partitioning is the lowest possible level of disk management.

On Solaris, partitioning is required but essentially vestigial; ZFS hides it well enough that you may not even be aware that it’s occurring. This section contains some general background information that may be useful to Solaris administrators, but from a procedural standpoint, the Solaris path diverges rather sharply from that of Linux, HP-UX, and AIX. Skip ahead to ZFS: all your storage problems solved on page 264 for details. (Or don’t: zpool create newpool newdevice pretty much covers basic configuration.)

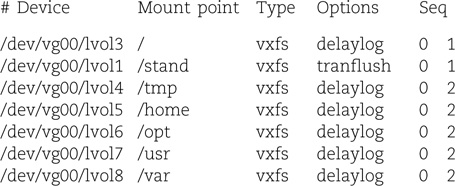

Both partitions and logical volumes make backups easier, prevent users from poaching each other’s disk space, and confine potential damage from runaway programs. All systems have a root “partition” that includes / and most of the local host’s configuration data. In theory, everything needed to bring the system up to single-user mode is part of the root partition. Various subdirectories (most commonly /var, /usr, /tmp, /share, and /home) may be broken out into their own partitions or volumes. Most systems also have at least one swap area.

Opinions differ on the best way to divide up disks, as do the defaults used by various systems. Here are some general points to guide you:

• It’s a good idea to have a backup root device that you can boot to if something goes wrong with the normal root partition. Ideally, the backup root lives on a different disk from the normal root so that it can protect against both hardware problems and corruption. However, even a backup root on the same disk has some value.9

• Verify that you can boot from your backup root. The procedure is often nontrivial. You may need special boot-time arguments to the kernel and minor configuration tweaks within the alternate root itself to get everything working smoothly.

• Since the root partition is often duplicated, it should also be small so that having two copies doesn’t consume an unreasonable amount of disk space. This is the major reason that /usr is often a separate volume; it holds the bulk of the system’s libraries and data.

• Putting /tmp on a separate filesystem limits temporary files to a finite size and saves you from having to back them up. Some systems use a memory-based filesystem to hold /tmp for performance reasons. The memory-based filesystems are still backed by swap space, so they work well in a broad range of situations.

• Since log files are kept in /var, it’s a good idea for /var to be a separate disk partition. Leaving /var as part of a small root partition makes it easy to fill the root and bring the machine to a halt.

• It’s useful to put users’ home directories on a separate partition or volume. Even if the root partition is corrupted or destroyed, user data has a good chance of remaining intact. Conversely, the system can continue to operate even after a user’s misguided shell script fills up /home.

• Splitting swap space among several physical disks increases performance. This technique works for filesystems, too; put the busy ones on different disks. See page 1129 for notes on this subject.

• As you add memory to your machine, you should also add swap space. See page 1124 for more information about virtual memory.

• Backups of a partition may be simplified if the entire partition can fit on one piece of media. See page 294.

• Try to cluster quickly-changing information on a few partitions that are backed up frequently.

Systems that allow partitions implement them by writing a “label” at the beginning of the disk to define the range of blocks included in each partition. The exact details vary; the label must often coexist with other startup information (such as a boot block), and it often contains extra information such as a name or unique ID that identifies the disk as a whole. Under Windows, the label is known as the MBR, or master boot record.

The device driver responsible for representing the disk reads the label and uses the partition table to calculate the physical location of each partition. Typically, one or two device files represent each partition (one block device and one character device; Linux has only block devices). Also, a separate set of device files represents the disk as a whole.

Solaris calls partitions “slices,” or more accurately, it calls them slices when they are implemented with a Solaris-style label and partitions when they are implemented with a Windows-style MBR. Slice 2 includes the entire expanse of the disk, illustrating the rather frightening truth that more than one slice can claim a given disk block. Perhaps the word “slices” was selected because “partition” suggests a simple division, whereas slices can overlap. The terms are otherwise interchangeable.

Despite the universal availability of logical volume managers, some situations still require or benefit from traditional partitioning.

• On PC hardware, the boot disk must have a partition table. Most systems require MBR partitioning (see Windows-style partitioning, next), but Itanium systems require GPT partitions (page 235). Data disks may remain unpartitioned.

See page 85 for more information about dual booting with Windows.

• Installing a Windows-style MBR makes the disk comprehensible to Windows, even if the contents of the individual partitions are not. If you want to interoperate with Windows (say, by dual booting), you’ll need to install a Windows MBR. But even if you have no particular ambitions along those lines, it may be helpful to consider the ubiquity of Windows and the likelihood that your disk will one day come in contact with it.

Current versions of Windows are well behaved and would never dream of writing randomly to a disk they can’t decipher. However, they will certainly suggest this course of action to any administrator who logs in. The dialog box even sports a helpful “OK, mess up this disk!” button.10 Nothing bad will happen unless someone makes a mistake, but safety is a structural and organizational process.

• Partitions have a defined location on the disk, and they guarantee locality of reference. Logical volumes do not (at least, not by default). In most cases, this fact isn’t terribly important. However, short seeks are faster than long seeks, and the throughput of a disk’s outer cylinders (those containing the lowest-numbered blocks) can exceed the throughput of its inner cylinders by 30% or more.11 For situations in which every ounce of performance counts, you can use partitioning to gain an extra edge. (You can always use logical volume management inside partitions to regain some of the lost flexibility.)

• RAID systems (see page 237) use disks or partitions of matched size. A given RAID implementation may accept entities of different sizes, but it will probably only use the block ranges that all devices have in common. Rather than letting extra space go to waste, you can isolate it in a separate partition. If you do this, however, you should use the spare partition for data that is infrequently accessed; otherwise, use of the partition will degrade the performance of the RAID array.
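The last point, about mismatched RAID members, comes down to simple arithmetic. The sketch below assumes the common behavior described above, in which an array uses only the block range that every member has in common; the function name and the sizes are hypothetical, and a particular RAID implementation may behave differently.

```python
def raid_usable_extent(device_sizes_gb):
    """Return (common_size, leftovers) for a set of prospective RAID members.

    Assumes the array uses only the extent shared by all members, so the
    per-device contribution is the size of the smallest device.
    """
    common = min(device_sizes_gb)
    # Space beyond the common extent could be isolated in a spare partition.
    leftovers = [size - common for size in device_sizes_gb]
    return common, leftovers


common, leftovers = raid_usable_extent([500, 500, 750])
print(common)     # 500
print(leftovers)  # [0, 0, 250]
```

Here the 750GB disk would contribute only 500GB to the array, leaving 250GB that could be carved off as a separate partition for infrequently accessed data.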

The Windows MBR occupies a single 512-byte disk block, most of which is consumed by boot code. Only enough space remains to define four partitions. These are termed “primary” partitions because they are defined directly in the MBR.

You can define one of the primary partitions to be an “extended” partition, which means that it contains its own subsidiary partition table. The extended partition is a true partition, and it occupies a defined physical extent on the disk. The subsidiary partition table is stored at the beginning of that partition’s data.

Partitions that you create within the extended partition are called secondary partitions. They are proper subsets of the extended partition.

Keep the following rules of thumb in mind when setting up Windows-partitioned disks. The first is an actual rule. The others exist only because certain BIOSes, boot blocks, or operating systems may require them.

• There can be only one extended partition on a disk.

• The extended partition should be the last of the partitions defined in the MBR; no primary partitions should come after it.

• Some older operating systems don’t like to be installed in secondary partitions. To avoid trouble, stick to primary partitions for OS installations.

The Windows partitioning system lets one partition be marked “active.” Boot loaders look for the active partition and try to load the operating system from it.

Each partition also has a one-byte type attribute that is supposed to indicate the partition’s contents. Generally, the codes represent either filesystem types or operating systems. These codes are not centrally assigned, but over time some common conventions have evolved. They are summarized by Andries E. Brouwer at tinyurl.com/part-types.
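To make the layout concrete, here is a minimal sketch of how a program might decode the four primary entries of an MBR. It relies on the conventional on-disk format (a 64-byte table at offset 446, 16 bytes per entry, and a two-byte 0x55 0xAA signature at the end of the sector); the function names and the sample sector are ours and purely illustrative. The sample is built in memory, so nothing here touches a real disk.

```python
import struct

SECTOR_SIZE = 512
TABLE_OFFSET = 446          # boot code occupies the bytes before the table
ENTRY_SIZE = 16
SIGNATURE = b"\x55\xaa"     # last two bytes of a valid MBR
EXTENDED_TYPES = {0x05, 0x0F}

# Entry layout: status(1) CHS-start(3) type(1) CHS-end(3) LBA-start(4) count(4)
ENTRY_FORMAT = "<B3xB3xII"


def parse_mbr(sector):
    """Decode the primary partition entries from a 512-byte MBR sector."""
    if len(sector) != SECTOR_SIZE or sector[510:512] != SIGNATURE:
        raise ValueError("not a valid MBR sector")
    partitions = []
    for i in range(4):
        offset = TABLE_OFFSET + i * ENTRY_SIZE
        status, ptype, start, count = struct.unpack(
            ENTRY_FORMAT, sector[offset:offset + ENTRY_SIZE])
        if ptype == 0:      # type 0 marks an unused slot
            continue
        partitions.append({
            "slot": i + 1,
            "active": status == 0x80,
            "type": ptype,
            "extended": ptype in EXTENDED_TYPES,
            "start_lba": start,
            "sectors": count,
        })
    return partitions


def make_entry(active, ptype, start, count):
    """Build one 16-byte table entry (CHS fields left as zeros)."""
    return struct.pack(ENTRY_FORMAT, 0x80 if active else 0x00,
                       ptype, start, count)


# Hypothetical sample: one active Linux partition and one extended partition.
sector = bytearray(SECTOR_SIZE)
sector[446:462] = make_entry(True, 0x83, 2048, 1_000_000)
sector[462:478] = make_entry(False, 0x05, 1_002_048, 500_000)
sector[510:512] = SIGNATURE

for part in parse_mbr(bytes(sector)):
    print(part)
```

Walking the secondary partitions would require following the subsidiary table stored at the start of the extended partition, which this sketch does not attempt.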

The MS-DOS command that partitioned hard disks was called fdisk. Most operating systems that support Windows-style partitions have adopted this name for their own partitioning commands, but there are many variations among fdisks. Windows itself has moved on: the command-line tool in recent versions is called diskpart. Windows also has a partitioning GUI that’s available through the Disk Management plug-in of mmc.

It does not matter whether you partition a disk with Windows or some other operating system. The end result is the same.

Intel’s extensible firmware interface (EFI) project aims to replace the rickety conventions of PC BIOSes with a more modern and functional architecture.12 Although systems that use full EFI firmware are still uncommon, EFI’s partitioning scheme has gained widespread support among operating systems. The main reason for this success is that MBR does not support disks larger than 2TB in size. Since 2TB disks are already widely available, this problem has become a matter of some urgency.
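The 2TB ceiling falls out of the MBR entry format: each partition's starting block and length are stored as 32-bit sector numbers. The short calculation below shows the arithmetic, assuming the traditional 512-byte sector. The function name is ours, and the 4,096-byte case is included only as a hypothetical comparison.

```python
LBA_FIELD_BITS = 32   # width of the start and length fields in an MBR entry


def mbr_capacity_limit(sector_bytes=512):
    """Largest extent, in bytes, addressable by a 32-bit sector count."""
    return (2 ** LBA_FIELD_BITS) * sector_bytes


limit = mbr_capacity_limit()
print(limit)             # 2199023255552
print(limit // 2 ** 40)  # 2 (binary terabytes)

# Hypothetical comparison: the same fields with 4,096-byte sectors.
print(mbr_capacity_limit(4096) // 2 ** 40)  # 16
```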

The EFI partitioning scheme, known as a “GUID partition table” or GPT, removes the obvious weaknesses of MBR. It defines only one type of partition, and you can create arbitrarily many of them. Each partition has a type specified by a 16-byte ID code (the globally unique ID, or GUID) that requires no central arbitration.
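As a rough illustration of the type codes, the sketch below decodes a 16-byte type field into the familiar textual GUID form. GPT stores the first three GUID fields in little-endian order, which Python's uuid module handles through its bytes_le parameter. The two entries in the lookup table are widely published type GUIDs, but the table is a tiny illustrative subset, and the function name is ours.

```python
import uuid

# A small, illustrative subset of well-known partition type GUIDs.
KNOWN_TYPES = {
    uuid.UUID("C12A7328-F81F-11D2-BA4B-00A0C93EC93B"): "EFI system partition",
    uuid.UUID("0FC63DAF-8483-4772-8E79-3D69D8477DE4"): "Linux filesystem data",
}


def decode_type_guid(raw):
    """Convert the 16 on-disk bytes of a GPT type field to (GUID, name)."""
    if len(raw) != 16:
        raise ValueError("a GUID is exactly 16 bytes")
    guid = uuid.UUID(bytes_le=raw)   # first three fields are little-endian
    return guid, KNOWN_TYPES.get(guid, "unknown")


# The Linux filesystem type GUID as it would appear on disk.
raw = bytes.fromhex("af3dc60f838472478e793d69d8477de4")
guid, name = decode_type_guid(raw)
print(guid)  # 0fc63daf-8483-4772-8e79-3d69d8477de4
print(name)  # Linux filesystem data
```

Because anyone can generate a new GUID without fear of collision, vendors can define their own partition types without consulting a registry.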

Significantly, GPT retains primitive compatibility with MBR-based systems by dragging along an MBR as the first block of the partition table. This “fakie” MBR makes the disk look like it’s occupied by one large MBR partition (at least, up to the 2TB limit of MBR). It isn’t useful per se, but the hope is that the decoy MBR may at least prevent naïve systems from attempting to reformat the disk.
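A GPT-aware tool can tell the two label types apart by looking past the decoy. The following sketch assumes 512-byte logical sectors and relies on two conventions: the protective MBR entry carries type code 0xEE, and the GPT header in the second sector begins with the ASCII signature "EFI PART". The function name and the in-memory sample are hypothetical.

```python
SECTOR_SIZE = 512              # assumed logical sector size
MBR_SIGNATURE = b"\x55\xaa"
GPT_SIGNATURE = b"EFI PART"    # first 8 bytes of the GPT header
PROTECTIVE_TYPE = 0xEE         # type code of the decoy MBR partition


def classify_label(first_two_sectors):
    """Return 'gpt', 'mbr', or 'unknown' for the first 1,024 bytes of a disk."""
    mbr = first_two_sectors[:SECTOR_SIZE]
    header = first_two_sectors[SECTOR_SIZE:2 * SECTOR_SIZE]
    if len(header) < SECTOR_SIZE or mbr[510:512] != MBR_SIGNATURE:
        return "unknown"
    # The type byte sits at offset 4 within each 16-byte table entry.
    types = [mbr[446 + 16 * i + 4] for i in range(4)]
    if header[:8] == GPT_SIGNATURE and PROTECTIVE_TYPE in types:
        return "gpt"
    return "mbr"


# Hypothetical sample: a protective MBR followed by a GPT header signature.
disk = bytearray(2 * SECTOR_SIZE)
disk[446 + 4] = PROTECTIVE_TYPE
disk[510:512] = MBR_SIGNATURE
disk[512:520] = GPT_SIGNATURE
print(classify_label(bytes(disk)))  # gpt
```

A naïve MBR-only tool sees just the first sector, and therefore a disk that appears to be fully allocated to a single partition of an unfamiliar type.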

Versions of Windows from the Vista era forward support GPT disks for data, but only systems with EFI firmware can boot from them. Linux and its GRUB boot loader have fared better: GPT disks are supported by the OS and bootable on any system. Intel-based Mac OS systems use both EFI and GPT partitioning. Solaris understands GPT partitioning, and ZFS uses it by default. However, Solaris boot disks cannot use GPT partitioning.

Although GPT has already been well accepted by operating system kernels, its support among disk management utilities is still spotty. GPT remains a “bleeding edge” format. There is no compelling reason to use it on disks that don’t require it (that is, disks 2TB in size or smaller).

Linux systems give you several options for partitioning. fdisk is a basic command-line partitioning tool. GNU’s parted is a fancier command-line tool that understands several label formats (including Solaris’s native one) and can move and resize partitions in addition to simply creating and deleting them. A GUI version, gparted, runs under GNOME. Another possibility is cfdisk, which is a nice, terminal-based alternative to fdisk.

parted and gparted can theoretically resize several types of filesystems along with the partitions that contain them, but the project home page describes this feature as “buggy and unreliable.” Filesystem-specific utilities are likely to do a better job of adjusting filesystems, but unfortunately, parted does not have a “resize the partition but not the filesystem” command. Go back to fdisk if this is what you need.

In general, we recommend gparted over parted. Both are simple, but gparted lets you specify the size of the partitions you want instead of specifying the starting and ending block ranges. For partitioning the boot disk, most distributions’ graphical installers are the best option since they typically suggest a partitioning plan that works well with that particular distribution’s layout.
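If you do need to work in terms of starting and ending blocks, the conversion from sizes is mechanical. The sketch below is one way to do it, assuming 512-byte sectors and a 2,048-sector (1MiB) alignment boundary; both values, along with the function name, are our assumptions rather than anything a particular tool mandates.

```python
SECTOR_BYTES = 512       # assumed sector size
ALIGN_SECTORS = 2048     # assumed alignment boundary (1 MiB)


def plan_partitions(sizes_mib, first_sector=ALIGN_SECTORS):
    """Turn a list of partition sizes (MiB) into (start, end) sector ranges."""
    plan = []
    start = first_sector
    for size in sizes_mib:
        sectors = size * 1024 * 1024 // SECTOR_BYTES
        end = start + sectors - 1          # ranges are inclusive
        plan.append((start, end))
        # Round the next start up to the nearest alignment boundary.
        start = -(-(end + 1) // ALIGN_SECTORS) * ALIGN_SECTORS
    return plan


for start, end in plan_partitions([512, 4096]):
    print(start, end)
# 2048 1050623
# 1050624 9439231
```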

ZFS automatically labels disks for you, applying a GPT partition table. However, you can also partition disks manually with the format command. On x86 systems, an fdisk command is also available. Both interfaces are menu driven and relatively straightforward.

format gives you a nice list of disks to choose from, while fdisk requires you to specify the disk on the command line. Fortunately, format has an fdisk command that runs fdisk as a subprocess, so you can use format as a kind of wrapper to help you pick the right disk.

Solaris understands three partitioning schemes: Windows MBR, GPT, and old-style Solaris partition tables, known as SMI. You must use MBR or SMI for the boot disk, depending on the hardware and whether you are running Solaris or OpenSolaris. For now, it’s probably best to stick to these options for all manually partitioned disks under 2TB.

HP uses disk partitioning only on Itanium (Integrity) boot disks, on which a GPT partition table and an EFI boot partition are required. The idisk command prints and creates partition tables. Rather than being an interactive partitioning utility, it reads a partitioning plan from a file or from standard input and uses that to construct the partition table.

HP uses disk partitioning only on Itanium (Integrity) boot disks, on which a GPT

partition table and an EFI boot partition are required. The idisk command prints and creates partition tables. Rather than being an interactive partitioning

utility, it reads a partitioning plan from a file or from standard input and uses

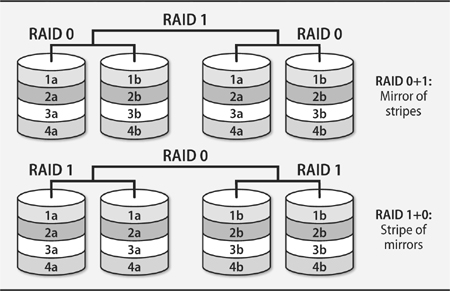

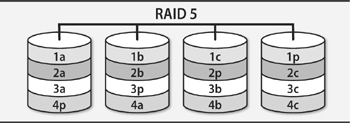

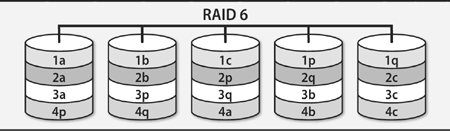

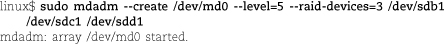

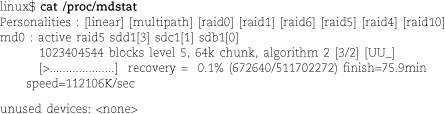

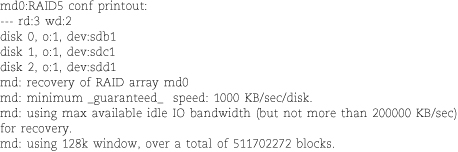

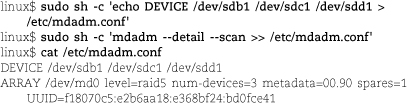

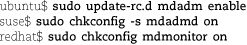

that to construct the partition table.