, and a probability measure P(·) defined for each event E ∈

, and a probability measure P(·) defined for each event E ∈  .

.The concept of a random variable has been introduced as a mathematical device for representing a physical quantity whose value is characterized by a quality of “randomness,” in the sense that there is uncertainty as to which of the possible values will be observed. The concept is essentially static in nature, for it does not take account of the fact that, in real-life situations, phenomena are usually dependent upon the passage of time. Most of the problems to which probability concepts are applied involve a discrete sequence of observations in time or a continuous process of observing the behavior of a dynamic system. At any one instant of time, the outcome has the quality of “randomness.” Knowledge of the outcomes at previous times may or may not reduce the uncertainty. The quantity under observation may be the fluctuating magnitude of a signal from a communication system, the quotations on the stock market, the incidence of particles from a radiation source, a critical parameter of a manufactured item, or any one of a large number of other items that one can readily call to mind. There are, of course, situations in which the outcome to be observed may depend upon a parameter other than (or in addition to) time. The random quantity to be observed may depend in some manner upon such parameters as position, temperature, age of a patient, etc. Mathematically, these dependences are not essentially different from the dependence upon time, and it is helpful to think in terms of a time variable and to develop our notation as if the parameter indicated time.

The problem is to find a generalization of the notion of random variable which allows the extension of probability models to dynamic systems. We have, in fact, already moved in this direction in considering vector-valued random variables or, equivalently, finite families of random variables considered jointly. The components of the vector or the members of the family can be conceived to represent observed values of a physical quantity at successive instants of time. The full generalization of these ideas leads naturally to the concept of a random, or stochastic, process.

The treatment of random processes in this chapter must of necessity be introductory and incomplete. Full-sized treatises on the subject usually have an introductory or prefatory remark to the effect that only selected topics can be treated therein. We introduce the general concept and discuss its meaning as a mathematical model of actual processes. Then we consider briefly some special processes and classes of processes which are often encountered in practice and which illustrate concepts, relationships, and properties that play an important role in the theory.

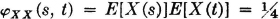

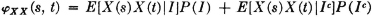

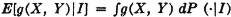

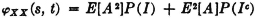

The general concept of a random process is a simple extension of the idea of a random variable. We suppose there is defined a suitable probability space, consisting of a basic space S, a class of events  , and a probability measure P(·) defined for each event E ∈

, and a probability measure P(·) defined for each event E ∈  .

.

Definition 7-1a

Let T be an infinite set of real numbers (countable or uncountable). A random process X is a family of random variables {X(·, t): t ∈ T}. T is called the index set, or the parameter set, for the process.

The terms stochastic process and random function are used by various authors instead of the term random process. Some authors include finite families of random variables in the category of random processes, but they then point out that the term is usually reserved for the infinite case. For most of the processes discussed in this chapter, T is either the set of positive integers, the set of positive integers and zero, the set of all integers, or an interval on the real line (possibly the whole real line). In the case of a countable index set, the term random, sequence is sometimes employed. We shall use the term random process to designate either the countable or uncountable case.

A random process X may be considered from three points of view, each of which is natural for certain considerations.

1. As a function X·,·) on S × T.

To know the value of a random process as defined above, it is necessary to determine the parameter t and the choice variable  . Thus, from a mathematical point of view, it is entirely natural to view the process as a function. Mathematically, the manner in which

. Thus, from a mathematical point of view, it is entirely natural to view the process as a function. Mathematically, the manner in which  and t are determined is of no consequence.

and t are determined is of no consequence.

2. As a family of random variables {X(·, t): t ∈ T}. It is in these terms that the definition is framed. For each choice of t ∈ T, a random variable is chosen. It is usual to think of the choice of t as somehow deterministic. If t represents the time of observation, the choice of this time is taken to be deliberate and premeditated. The choice of  is, however, in some sense “random.” This means that there is some uncertainty before the choice as to which of the possible

is, however, in some sense “random.” This means that there is some uncertainty before the choice as to which of the possible  will be chosen. It is this choice which is the mathematical counterpart of selection from a jar, sampling from a population, or otherwise selecting by a trial or experiment. For this reason, it is helpful to think of

will be chosen. It is this choice which is the mathematical counterpart of selection from a jar, sampling from a population, or otherwise selecting by a trial or experiment. For this reason, it is helpful to think of  as the random choice variable, or simply the choice variable. Selection of subsets (finite or infinite) of the index set T amounts to selection of subfamilies of the process. One important way to study random processes is to study the joint distributions of finite subfamilies of the process.

as the random choice variable, or simply the choice variable. Selection of subsets (finite or infinite) of the index set T amounts to selection of subfamilies of the process. One important way to study random processes is to study the joint distributions of finite subfamilies of the process.

3. As a family of functions on T, {X( ,·):

,·): ∈S). For each choice of

∈S). For each choice of  , a function X(

, a function X( ,·) is determined. The domain of this function is T. Should T be countable, the function so defined is a sequence. Each such function is called a sample function, or a realization of the process, or a member of the process.

,·) is determined. The domain of this function is T. Should T be countable, the function so defined is a sequence. Each such function is called a sample function, or a realization of the process, or a member of the process.

It is the sample function which is observed in practice. The random process can serve as a model of a physical process only if, in observing the behavior of the physical process, one can take the point of view that the function observed is one of a family of possible realizations of the process. The concept of randomness is associated precisely with the uncertainty of the choice of one of the sample functions in the family of such functions. The mechanism of this choice is represented in the choice of  from the basic space. The concept of randomness has nothing to do with the character of the function chosen. This sample function may be quite smooth and regular, or it may have the wild fluctuations popularly and naively associated with “random” phenomena. One can conceive of a simple generator of a specific curve which has this “random” character; each time the generator is set in motion, the same curve is produced. There is nothing random about the function represented by the curve. The outcome of the operation is completely determined.

from the basic space. The concept of randomness has nothing to do with the character of the function chosen. This sample function may be quite smooth and regular, or it may have the wild fluctuations popularly and naively associated with “random” phenomena. One can conceive of a simple generator of a specific curve which has this “random” character; each time the generator is set in motion, the same curve is produced. There is nothing random about the function represented by the curve. The outcome of the operation is completely determined.

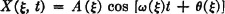

As an example of a simple random process whose sample functions have quite regular characteristics, consider the following

Example 7-1-1 Random Sinusoid

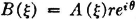

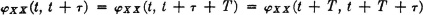

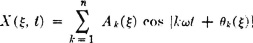

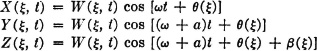

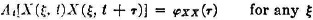

Let A(·), ω(·), and  (·) be random variables. Define the process X by the relation

(·) be random variables. Define the process X by the relation

For each choice of  a choice of amplitude A(

a choice of amplitude A( ), angular frequency ω(

), angular frequency ω( ), and phase angle

), and phase angle  (

( ) is made. Once this choice is made, a perfectly regular sinusoidal function of time is determined. The uncertainty preceding the choice is the uncertainty as to which of the possible functions in the family is to be chosen. On the other hand, if a value of t is determined, each choice of

) is made. Once this choice is made, a perfectly regular sinusoidal function of time is determined. The uncertainty preceding the choice is the uncertainty as to which of the possible functions in the family is to be chosen. On the other hand, if a value of t is determined, each choice of  determines a value of X(·, t). As a Borel function of three random variables, X(·, t) is itself a random variable.

determines a value of X(·, t). As a Borel function of three random variables, X(·, t) is itself a random variable.

Before continuing to further examples, we take note of some common variations of notation used in the literature. Most authors suppress the choice variable, since it plays no role in practical computations. They write Xt or X(t) where we have used X(·, t), above. Since there are distinct conceptual advantages in thinking of  as the choice variable, we shall commonly use the notation X(·, t) to emphasize that, for any given determination of the parameter t, we have a function of

as the choice variable, we shall commonly use the notation X(·, t) to emphasize that, for any given determination of the parameter t, we have a function of  (i.e., a random variable). In some situations, however, particularly in the writing of expectations, we find it notationally convenient to suppress the choice variable. In designating mathematical expectations we shall usually write E[X(t)].

(i.e., a random variable). In some situations, however, particularly in the writing of expectations, we find it notationally convenient to suppress the choice variable. In designating mathematical expectations we shall usually write E[X(t)].

The following examples indicate some ways in which random processes may arise in practice and how certain analytical or stochastic assumptions give character to the process.

Example 7-1-2 A Coin-flipping Sequence

A coin is flipped at regular time intervals t = k, k = 0, 1, 2, …. If a head appears at t = k, the sample function has the value 1 for k ≤ t < k + 1. The value during this interval is 0 if a tail is thrown at t = k.

SOLUTION Each sample function is a step function having values 0 or 1, with steps occurring only at integral values of time. If the sequence of 0’s or 1’s resulting from a sequence of trials is written down in succession after the binary point, the result is a number in binary form in the interval [0, 1]. To each element  there corresponds a point on the unit interval and a sample function X(

there corresponds a point on the unit interval and a sample function X( , ·). We may conveniently take the unit interval to be the basic space S and let

, ·). We may conveniently take the unit interval to be the basic space S and let  be the number whose binary equivalent describes the sample function X(

be the number whose binary equivalent describes the sample function X( ,·). If we let An = {

,·). If we let An = { : X(

: X( , t) = 1, n ≤ t < n + 1}, we have, under the usual assumptions, P(An) =

, t) = 1, n ≤ t < n + 1}, we have, under the usual assumptions, P(An) =  for any n. It may be shown that the probability mass is distributed uniformly on the unit interval, so that the probability measure coincides with the Lebesgue measure, which assigns to each interval a mass equal to the length of that interval.

for any n. It may be shown that the probability mass is distributed uniformly on the unit interval, so that the probability measure coincides with the Lebesgue measure, which assigns to each interval a mass equal to the length of that interval.

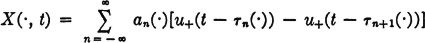

Example 7-1-3 Shot Effect in a Vacuum Tube

Suppose a vacuum tube is connected to a linear circuit. Let the response of the system to a single electron striking the plate at t = 0 be given at any time by the value g(t), where g(·) is a suitable response function. Then the resulting current in the tube is

where {τK(·): − ∞ < k <∞} is a random sequence which describes the arrival times of the electrons. A choice of  amounts to a choice of the sequence of arrival times. Once these are determined, the value of the sample function (representing the current in the tube) is determined for each t. Ways of describing in probability terms the arrival times in terms of counting processes are considered in Sec. 7-3.

amounts to a choice of the sequence of arrival times. Once these are determined, the value of the sample function (representing the current in the tube) is determined for each t. Ways of describing in probability terms the arrival times in terms of counting processes are considered in Sec. 7-3.

Example 7-1-4 Signal from a Communication System

An important branch of modern communication theory applies the theory of random processes to the analysis and design of communication systems. To use this approach, one must take the point of view that the signal under observation is one of the set of signals that are conceptually possible from such a system; that is, the signal is a sample signal from the process. Signals from communication systems can usually be characterized in terms of a variety of analytical properties. The transmitted signal may be taken from a known class of signals, as in the case of certain types of pulse-code modulation schemes. The dynamics of the transmission system place certain restrictions on the character of the signals. Certainly they must be bounded. The “frequency spectrum” of the signals is limited in specified ways. The mechanism producing unwanted “noise” can often be described in probabilistic terms. Utilization of these analytical properties enables the communication theorist to develop and study various processes as models of communication systems.

This simple list of examples does not begin to indicate the extent of the applications of random processes to physical and statistical problems. For a more adequate picture of the scope of the theory and its applications, one should examine such works as those by Parzen [1962], Rosenblatt [1962], Bharucha-Reid [1960], Wax [1954], and Middleton [1960]. Examples included in these works, as well as the voluminous literature cited therein, should serve to convey an impression of the extent and importance of the field.

The simplest kind of a random process is a sequence of discrete random variables. The simplest sequences to deal with are those in which the random variables form an independent family. Much of the early work on sequences of trials has dealt with the case of independent random variables. There are many systems, however, for which the independence assumption is not satisfactory. In a sequence of trials, the outcome of any trial may be influenced by the outcome for one or more previous trials in the sequence. Early in this century, A, A. Markov (1856–1922) considered an important case of such dependence in which the outcome of any trial in a sequence is conditioned by the outcome of the trial immediately preceding, but by no earlier ones. Such dependence has come to be known as chain dependence.

Such a chain of dependences is common in many important practical situations. The result of one choice affects the next. This is true in many games of chance, when a sequence of moves is considered rather than a single, isolated play. Many physical, psychological, biological, and economic phenomena exhibit such a chain dependence. Even when the dependence extends further back than one step, a reasonable approximation may result from considering only one-step dependence. Some simple examples are given later in this section.

The systems to be studied are described in terms of the results of a sequence of trials. Each trial in the sequence is performed at a given transition time. During the period between two transition times, the system is in one of a discrete set of states, corresponding to one of the possible outcomes of any trial in the sequence. This set of states is called the state space  . We thus have a random process {X(·, n):0 ≤ n < ∞}, in which the value of the random variable X(·, n) is the state of the system (or a number corresponding to that state) in the period following the nth trial in the sequence. We take the zeroth period to be the initial period, and the value of X(·,0) is the initial state of the system. The process may be characterized by giving the probabilities of the various states in the initial period [i.e., the distribution of X(·, 0)] and the appropriate conditional probabilities of the various states in each succeeding period, given the states of the previous periods. The precise manner in which this is done is formulated below.

. We thus have a random process {X(·, n):0 ≤ n < ∞}, in which the value of the random variable X(·, n) is the state of the system (or a number corresponding to that state) in the period following the nth trial in the sequence. We take the zeroth period to be the initial period, and the value of X(·,0) is the initial state of the system. The process may be characterized by giving the probabilities of the various states in the initial period [i.e., the distribution of X(·, 0)] and the appropriate conditional probabilities of the various states in each succeeding period, given the states of the previous periods. The precise manner in which this is done is formulated below.

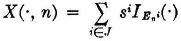

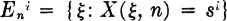

Suppose the state space  is the set {si: i ∈ J}, where J is a finite or countably infinite index set. The elements si of the state space represent possible states of the system and appear in the model as possible values of the various X(·, n); that is,

is the set {si: i ∈ J}, where J is a finite or countably infinite index set. The elements si of the state space represent possible states of the system and appear in the model as possible values of the various X(·, n); that is,  is the range set for each of the random variables in the process. We may take the si to be real numbers without loss of generality. The random variables may be written

is the range set for each of the random variables in the process. We may take the si to be real numbers without loss of generality. The random variables may be written

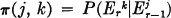

where  . In words,

. In words,  is the event that the system is in the ith state during the nth period or interval. The probability distributions for the X(·, n) are determined by specifying (1) the initial probabilities P(E0i) for each i ∈ J and (2) the conditional probabilities

is the event that the system is in the ith state during the nth period or interval. The probability distributions for the X(·, n) are determined by specifying (1) the initial probabilities P(E0i) for each i ∈ J and (2) the conditional probabilities  for all the possible combinations of the indices.

for all the possible combinations of the indices.

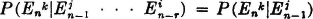

A wide variety of dependency arrangements is possible, according to the nature of the conditional probabilities. We shall limit consideration to processes in which the probabilities for the state after a transition are conditioned only by the state immediately before the transition. This may be stated precisely as follows:

(MCI) Markov property.  for each permissible n, r, k, and j.

for each permissible n, r, k, and j.

Definition 7-2a

The conditional probabilities in property (MCI) are called the transition probabilities for the process.

In most of the processes studied, it is assumed further that the transition probabilities do not depend upon n; that is, we have the

(MC2) Constancy property.  for each permissible n, r, k, and j.

for each permissible n, r, k, and j.

It is convenient, in the case of constant transition probabilities, to designate them by the shorter notation

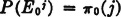

In a similar manner, the total probability for any state i in the rth period is designated by

These probabilities define the probability distribution for the random variable X(·, r).

Definition 7-2b

A random process {X(·, n): 0 ≤ n < ∞} with finite or countably infinite state space  = {si: i ∈ j} and which has the Markov property (MCI) and the constancy property (MC2) is called a Markov chain with constant transition probabilities (or more briefly, a constant Markov chain). If the state space

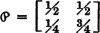

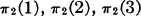

= {si: i ∈ j} and which has the Markov property (MCI) and the constancy property (MC2) is called a Markov chain with constant transition probabilities (or more briefly, a constant Markov chain). If the state space  is finite, the process is called a finite Markov chain. The set of probabilities {π0(i):i ∈ J} is called the set of initial state probabilities. The matrix

is finite, the process is called a finite Markov chain. The set of probabilities {π0(i):i ∈ J} is called the set of initial state probabilities. The matrix  = [π(j, k)] is called the transition matrix.

= [π(j, k)] is called the transition matrix.

Constant Markov chains are also called homogeneous chains, or stationary chains. Most often the reference is simply to Markov chains, the constancy property being tacitly assumed.

A very extensive literature has developed on the subject of constant Markov chains. We can touch upon only a few basic ideas and results in a manner intended to introduce the subject and to facilitate further reference to the pertinent literature.

Since a conditional probability measure, given a fixed event, is itself a probability measure, and since the events  form a partition for each r, we must have, for each i,

form a partition for each r, we must have, for each i,

The last equation means that the elements on each row of the transition matrix  sum to 1, and hence form a probability vector. Such matrices are of sufficient importance to be given a name as follows:

sum to 1, and hence form a probability vector. Such matrices are of sufficient importance to be given a name as follows:

Definition 7-2c

A square matrix (finite or infinite) whose elements are nonnegative and whose sum along any row is unity is called a stochastic matrix. If, in addition, the sum of the elements along any column is unity, the matrix is called doubly stochastic.

The transition matrix for a constant Markov chain is a stochastic matrix. In special cases, it may be doubly stochastic.

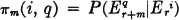

We now note that a constant Markov process is characterized by the initial probabilities and the transition matrix. As a direct consequence of properties (CP1), (MC1), and (MC2), we have

If we put r = 0, we see that specification of the initial state probabilities and of the matrix of transition probabilities serves to specify the joint probability mass distribution for any finite subfamily of random variables in the process. In particular, we may use property (P6) to derive the following expression for the stats probabilities in the nth period:

(MC4) State probabilities

PROOF This follows from (MC3) and the fundamental relation

Before developing further the theoretical apparatus, we consider some simple examples of Markov processes and their characteristic probabilities. To simplify writing, we shall consider finite chains.

Example 7-2-1 Urn Model

Feller [1957] has shown that a constant Markov chain is equivalent to the following urn model. There is an urn for each state of the system. Each urn has balls marked with numbers corresponding to the states. The probability of drawing a ball marked k from urn j is π (j, k). Sampling is with replacement, so that the distribution in any given urn is not disturbed by the sampling. The process consists in making a sequence of samplings according to the following procedure. The first sampling, to start the process, consists of making a choice of an urn according to some prescribed set of probabilities. Call this the zeroth choice, and let  be the event that the jth urn is chosen on this initial step. The choice of urn j is made with probability π0(J) so that

be the event that the jth urn is chosen on this initial step. The choice of urn j is made with probability π0(J) so that  . From this urn make the first choice of a ball. A ball numbered k is chosen, on a random basis, from urn j with probability π (j, k). The next choice is made from urn k. A ball numbered h is chosen with probability π (k, h). The process, once started, continues indefinitely. If we let

. From this urn make the first choice of a ball. A ball numbered k is chosen, on a random basis, from urn j with probability π (j, k). The next choice is made from urn k. A ball numbered h is chosen with probability π (k, h). The process, once started, continues indefinitely. If we let  be the event that a ball numbered k is chosen at the nth trial, then because of the independence of the successive samples, we have

be the event that a ball numbered k is chosen at the nth trial, then because of the independence of the successive samples, we have

This is exactly the Markov property with constant transition probabilities.

Example 7-2-2

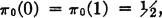

Any square stochastic matrix plus a set of initial probabilities defines a constant Markov chain. Consider a finite chain with three states. Let

SOLUTION The set of initial probabilities is chosen so that, with probability 1, the system starts in state 3. Thus, in the first period, it is sure that the system is in state 3. At the first transition time, the system remains in state 3 with probability 0.4, moves into state 1 with probability 0.3, or into state 2 with probability 0.3. Once in state 1 or state 2, the probability of returning to state 3 is zero. Thus, once the system leaves the state 3, into which it is forced initially, it oscillates between states 1 and 2. If it is in state 1, it remains there with probability 0.5 and changes to state 2 with probability 0.5. When in state 2, it remains there with probability 0.2 or changes to state 1 with probability 0.8. The probability of being in states 3, 3, 1, 2, 2, 1 in that order is π0(3) π (3, 3)π(3, 1)π(1, 2)π(2, 2)π(2, 1) = 1 × 0.4 × 0.3 × 0.5 × 0.2 × 0.8.

For the next example, we consider a simple problem of the “random-walk” type, which is important in the analysis of many physical problems. The example is quite simple and schematic, but it illustrates a number of important ideas and suggests possibilities for processes.

Example 7-2-3

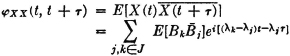

A particle is positioned on the grid shown in Fig. 7-2-1 in a “random manner” as follows. If it is in position 1 or position 9, it remains there with probability 1. If it is in any other position, at the next transition time it moves with equal probability to one of its adjacent neighbors along one of the grid lines (but not diagonally). In position 5 there are four possible moves, each with probability  . In positions 2, 4, 6, or 8 there are three possible moves, each with probability

. In positions 2, 4, 6, or 8 there are three possible moves, each with probability  . In positions 3 and 7 there are two possible moves, each with probability

. In positions 3 and 7 there are two possible moves, each with probability  .

.

Fig. 7-2-1 Grid for the random-walk problem of Example 7-2-3.

The numbers outside the matrix are simply an aid to identifying the rows and columns. To formulate (or to read) such a matrix, the following procedure is helpful. Go to a position on the main diagonal, say, in the jth row and column. Enter (or read) π(j, j), the conditional probability of remaining in that position once in it. For the present example, this is zero except in positions 1, 1 and 9, 9 on the matrix, corresponding to positions 1 and 9 on the grid. Then consider various possible positions to be reached from position j. Suppose position k is such a position; this corresponds to the matrix element in the kth column but on the same (i.e., the jth) row. Enter π(j, k), the conditional probability of moving to position k, given that the present position is j. For example, in the fifth row of the transition matrix above (corresponding to present position 5), π(5, 5) is zero; but π(5, 2), π(5, 4), π(5, 6), and π(5, 8) each has the same value  . Thus the number a is entered into the positions in columns 2, 4, 6, and 8 on the fifth row. In the third row there are only two nonzero entries, each of value

. Thus the number a is entered into the positions in columns 2, 4, 6, and 8 on the fifth row. In the third row there are only two nonzero entries, each of value  , corresponding to the fact that from position 3 only two moves are possible. The matrix should be examined to see that the other entries correspond to the physical system assumed.

, corresponding to the fact that from position 3 only two moves are possible. The matrix should be examined to see that the other entries correspond to the physical system assumed.

Once the initial state probabilities are specified, the Markov chain is determined.

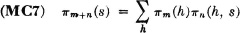

Higher-order transition probabilities

By the use of elementary probability relations, we may develop formulas for conditional probabilities which span several periods.

The mth-order transition probability from state si to state sq is the quantity

It is apparent that, for constant chains, the higher-order transition probabilities are independent of the beginning period and depend only upon m and the two states involved. For m = 1, the first-order transition probability is just the ordinary transition probability; that is, π(i, q) = π(i, q). A number of basic relations are easily derived.

This property can be derived easily by applying properties (CP1), (MC1), and (P6). In a similar way, the following result can be developed.

This equation is a special case of the Chapman-Kolmogorov equation. The state probabilities in the (n + m)th period can be derived from those for the mth period by the following formula:

Use of the definitions and application of fundamental probability theorems suffices to demonstrate the validity of this expression.

In the case of finite Markov processes, in which the state space index set J = {1, 2, …, N}, several of the properties for Markov processes can be expressed compactly in terms of the matrix of transition probabilities.

Let  r be the row matrix of state probabilities in the rth period, and let

r be the row matrix of state probabilities in the rth period, and let  be the matrix of transition probabilities. Since the latter matrix is square, the nth power

be the matrix of transition probabilities. Since the latter matrix is square, the nth power  =

=  ×

×  × · · · ×

× · · · ×  (n factors) is defined. We may then write in matrix form

(n factors) is defined. We may then write in matrix form

Because of this matrix formulation, it has been possible to exploit properties of matrix algebra to develop a very general theory of finite chains. Also, a major tool for analysis is a type of generating function which is identical with the z transform, much used in the theory of sampled-data control systems. We shall not attempt to describe or exploit these special techniques, although we shall assume familiarity with the simple rules of matrix multiplication. For theoretical developments and applications employing these techniques, see Feller [1957], Kemeny and Snell [1960], and Howard [1960].

Example 7-2-4 The Success-Failure Model

The model is characterized by two states: state 1 may correspond to “success,” and state 2 to “failure.” This may be used as a somewhat crude model of marketing success. A manufacturer features a specific product in each marketing period. If he is successful in sales during one period, he has a reasonable probability of being successful in the succeeding period; if he is unsuccessful, he may have a high probability of remaining unsuccessful. For example, if he is successful, he may have a 50-50 chance of remaining successful; if he is unsuccessful in a given period, his probability of being successful in the next period may only be 1 in 4. Under these assumptions, suppose he is successful in a given sales period. What are the probabilities of success and lack of it in the first, second, and third subsequent sales periods?

SOLUTION Under the assumptions stated above, π(1, 1) = π(1, 2) =  , π(2, 1) =

, π(2, 1) =  π, and π(2, 2) =

π, and π(2, 2) =  . The matrix of transition probabilities is thus

. The matrix of transition probabilities is thus

Direct matrix multiplication shows that

As a check, we note that  2 and

2 and  3 are stochastic matrices. The assumption that the system is initially in state 1 is equivalent to the assumption π0(1) = 1 and π0(2) = 0. Thus

3 are stochastic matrices. The assumption that the system is initially in state 1 is equivalent to the assumption π0(1) = 1 and π0(2) = 0. Thus  0 = [1 0], so that

0 = [1 0], so that

In the first period (after the initial one) the system is equally likely to be in either state. In the second and third periods, state 2 becomes the more likely. The probabilities for subsequent periods may be calculated similarly.

Example 7-2-5 Alternative Routing in Communication Networks

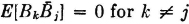

A communication system has trunk lines connecting five cities, which we designate by numbers. There are central-office switching facilities in each city. If it is desired to establish a line from city 1 to city 5, the switching facilities make a search for a line. Three possibilities exist: (1) a direct line is available, (2) a line to an intermediate city is found, or (3) no line is available. In the first case, the line is established. In the second alternative, a new search procedure is set up in the intermediate city to find a line to city 5 or to another intermediate city. In the third alternative, the system begins a new search after a prescribed delay (which may be quite short in a modern, high-capacity system). A fundamental problem is to design the system so that the waiting time (in terms of numbers of searching operations) is below some prescribed level. Searching operations mean not only delay in establishing communication, but also use of extensive switching equipment in the central offices. Traffic conditions and the number of trunk lines available determine probabilities that various connections will be made on any search operation. We may set up a Markov-chain model by assuming that the state of the system corresponds to the connection established. Consider the network shown in Fig. 7-2-2. The system is in state 1 if no line has been found at the originating city. The system is in state 5 if a connecting link—either direct or through intermediate cities—is established with city 5. The system is in state 4 if a connecting link is established to city 4, etc. Suppose the probabilities of establishing connections are those indicated in the transition matrix

Fig. 7-2-2 Possible trunk-line connections for establishing communication from city 1 to city 5.

Since it is determined that the call originates in city 1, the initial probability matrix is [1 0 0 0 0]. The transition matrix reflects the fact that in city 1 and in each intermediate city the probability is 0.1 of not finding a line on any search operation. Cities 3 and 4 are assumed to be closer to city 5 and to have more connecting lines, so that the probabilities of establishing connection to that city are higher than for cities 1 and 2. The connection network is such as to provide connections to higher-numbered cities. The probability of establishing connection in n or fewer search operations is πn(5). Since π0(1) = 1, we must have πn(5) = πn(1, 5). Using the fundamental relations, we may calculate the following values:

Thus only about 1 call in 19 takes more than three search operations; and only about 1 percent of the calls take more than 4 search operations. About 1 call in 5 (0.192 = 0.942 – 0.750) takes three search operations. Other probabilities may be determined similarly.

Closed state sets and irreducible chains

In the analysis of constant Markov chains, it is convenient to classify the various states of the system in a variety of ways. We introduce some terminology to facilitate discussion and then consider the concept of closure.

Definition 7-2e

We say that state sk is accessible from state si (indicated si → sk) iffi there is a positive integer n such that πn(i, k) > 0. States si and sk are said to communicate iffi both si → sk and sk → si. We designate this condition by writing si ↔ sk.

It should be noted that the case i = k is included in the definition above. From the basic Markov relation (MC6) it is easy to derive the following facts:

Theorem 7-2A

1. si → sj and sj→ sk implies si → sk.

2. si ↔ sj and sj ↔ sk implies si ↔ sk.

3. si ↔ sj for some j implies si ↔ si.

In the case of finite chains (or even some special infinite chains), these relations can often be visualized with the aid of a transition diagram.

Definition 7-2f

A transition diagram for a finite chain is a linear graph with one node for each state and one directed branch for each one-step transition with positive probability. It is customary to indicate the transition probability along the branch.

Figure 7-2-3 shows a transition diagram corresponding to the three-state process described in Example 7-2-2. The nodes are numbered to correspond to the number of the state represented. The probability π(i, j) is placed along the branch directed from node i to node j. If for any i and j the transition probability π(i, j) = 0, the branch directed from node i to node j is omitted.

Fig. 7-2-3 The transition diagram for the process of Example 7-2-2.

With such a diagram, the relation si→ sk is equivalent to the existence of at least one path through the diagram (traced in the direction of the arrows) which leads from node i to node k. It is apparent in Fig. 7-2-3 that nodes 1 and 2 communicate and that each node can be reached from node 3; but node 3 cannot be reached from node 1 or from node 2.

Definition 7-2g

A set C of states is said to be closed if no state outside C may be reached from any state in C. If a closed set C consists of only one member state si, that state is called an absorbing state, or a trapping state. A chain is called irreducible (or indecomposable) iffi there are no closed sets of states other than the entire state space.

In the chain represented in Fig. 7-2-3, the set of states {s1, s2} is a closed set, since the only other state in the state space cannot be reached from either member. The chain is thus reducible. It has no absorbing states, however. In order to be absorbing, a state sk must be characterized by π(k, k) = 1. In terms of the transition diagram, this means that the only branch leaving node k must be a self-loop branch beginning and ending on node k. The transition probability associated with this branch is unity.

We note that a set of states C is closed iffi si ∈ C and sk  C implies πn(i, k) = 0 for all positive integers n. In this case we must have π(i, k) = 0. This means that the nonzero entries in row i of a transition matrix of any order must lie in columns corresponding to states in C. This statement is true for every row corresponding to a state in C. If from each

C implies πn(i, k) = 0 for all positive integers n. In this case we must have π(i, k) = 0. This means that the nonzero entries in row i of a transition matrix of any order must lie in columns corresponding to states in C. This statement is true for every row corresponding to a state in C. If from each  n we form the submatrix

n we form the submatrix  cn containing only rows and columns corresponding to states in C, this submatrix is a stochastic matrix. In fact, from the basic properties it follows that:

cn containing only rows and columns corresponding to states in C, this submatrix is a stochastic matrix. In fact, from the basic properties it follows that:

Theorem 7-2B

If C is a closed set, a stochastic matrix  cn may be derived from each matrix

cn may be derived from each matrix  n by taking the rows and columns corresponding to the states in C. The elements of

n by taking the rows and columns corresponding to the states in C. The elements of  c and

c and  cn satisfy the fundamental relations (MC1) through (MC7).

cn satisfy the fundamental relations (MC1) through (MC7).

This theorem implies that a Markov chain is defined on any closed state set. Once the closed set is entered, it is never left. The behavior of the system, once this closed set of states is entered, can be studied in isolation, as a separate problem.

It is easy to show that the set Ri of all states which can be reached from a given state si is closed. From this fact, the following theorem is an immediate consequence.

Theorem 7-2C

A constant Markov chain is irreducible iffi all states communicate.

For finite chains (i.e., finite state spaces) a systematic check for irreducibility can be made to discover if every state can be reached from each given state. This may be done either with the transition matrix or with the aid of the transition diagram for the chain. If the chain is reducible, it is usually desirable to identify the closed subsets of the state space.

Waiting-time distributions

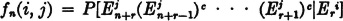

Suppose the system starts in state si at the rth period and reaches state sj for the first time in the (r + n)th period. For each n, i, and j we can designate a conditional probability which we define as follows:

Definition 7-2h

The waiting-time distribution function is given by

In words, fn(i, j) is the conditional probability that, starting with the system in state si, it will reach state si for the first time n steps later. It is apparent that for constant Markov processes these conditional probabilities are independent of the choice of r. The waiting-time distribution is determined by the following recursion relations:

The first expression is obvious. The second expression can be derived by recognizing that the system moves from state si to state sj in n steps by reaching sj for the first time in n steps; or by reaching sj for the first time in n − 1 steps and returning to sj in one more step; or by reaching sj for the first time in n − 2 steps and then returning in exactly two more steps; etc. The product relation in each of the terms of the final sum can be derived by considering carefully the events involved and using the fundamental Markov properties.

Let f(i, j) be the conditional probability that, starting from state si the system ever reaches state sj. It is apparent that

If the initial state and final state are the same, it is customary to call the waiting times recurrence times. We shall define some quantities which serve as the basis of useful classifications of states.

Definition 7-2i

The quantity f(i, i) is referred to as the recurrence probability for si The mean recurrence time τ(i) for a state si is given by

If state si communicates with itself, the periodicity parameter ω(i) is the greatest integer such that πn(i, i) can differ from zero only for n = kω(i), k an integer; if si does not communicate with itself, ω(i) = 0.

In terms of the quantities just derived, we may classify the states of a Markov process as follows:

Definition 7-2j

A state is said to be

1. Transient iffi f(i, i) < 1.

2. Persistent iffi f(i, i) = 1.

3. Periodic with period ω(i) iffi ω(i) > 1.

4. A periodic iffi ω(i) ≤ 1.

5. Null iffi f(i, i) = 1, τ(i) = ∞.

6. Positive iffi f(i, i) = 1, τ(i) < ∞.

7. Ergodic iffi f(i, i) = 1, τ(i) < ∞, ω(i) = 1.

Classify the states in the process described in Example 7-2-2 and Fig. 7-2-3.

SOLUTION We may use the transition diagram as an aid to classification and in calculating waiting-time distributions.

State 3. This state is obviously transient, for once the system leaves this state, it does not return. We thus have

From this it follows that  . It is obvious that ω(3) = 1, so that the state is aperiodic. By definition, the mean recurrence time τ(3) = ∞.

. It is obvious that ω(3) = 1, so that the state is aperiodic. By definition, the mean recurrence time τ(3) = ∞.

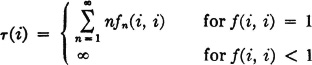

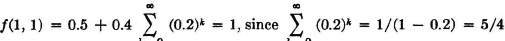

State 1. It would appear that this state is persistent and aperiodic. For one thing, it is obvious that ω(1) = 1. We also note that the possibility of a return for the first time to state 1 after starting in that state must follow a simple pattern. There is either (1) a return in one step, (2) a transition to state 2, then back on the second step, or (3) a transition to state 2, one or more returns to state 2, and an eventual return to state 1. We thus have

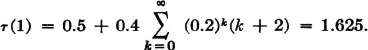

Now  . The mean recurrence time τ(1) is given by

. The mean recurrence time τ(1) is given by

In obtaining this result, we put k + 2 = (k + 1) + 1 = n + 1, and write

The other state has similar properties, and the numerical results for that state may be obtained by similar computations. The return probability f(2, 2) = 1, and the mean recurrence time τ(2) = 2.6.

Two fundamental theorems

Next we state two fundamental theorems which give important characterizations of constant Markov chains. These are stated without proof; for proofs based on certain analytical, nonprobabilistic properties of the so-called renewal equation, see Feller [1957].

Theorem 7-2D

In an irreducible, constant Markov chain, all states belong to the same class: they are either all transient, all null, or all positive. If any state is periodic, all states are periodic with the same period.

In every constant Markov chain, the persistent states can be partitioned uniquely into closed sets C1 C2, …, such that, from any state in a given set Ck, all other states in that set can be reached. All states belonging to a given closed set Ck belong to the same class.

In addition to the states in the closed sets, the chain may have transient states from which the states of one or more of the closed sets may be reached.

According to Theorem 7-2B, each of the closed sets Ck described in the theorem just stated may be studied as a subchain. The behavior of the entire chain may be studied by examining the behavior of each of the subchains, once its set of states is entered, and by studying the ways in which the subchains may be entered from the transient states.

Before stating the next theorem, we make the following

Definition 7-2k

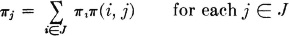

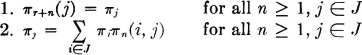

A set of probabilities {πi: i ∈ J}, where J is the index set for the state space  , is called a stationary distribution for the process iffi

, is called a stationary distribution for the process iffi

The significance of this set of probabilities and the justification for the terminology may be seen by noting that if πr(j) = πj for some r and all j ∈ J, then

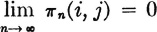

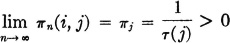

Theorem 7-2E

An irreducible, aperiodic, constant Markov chain is characterized by one of two conditions: either

1. All states are transient or null, in which case  for any pair i, j and there is no stationary distribution for the process, or

for any pair i, j and there is no stationary distribution for the process, or

2. All states are ergodic, in which case

for all pairs i, j, and {πj: j ∈ J} is a stationary distribution for the chain.

In the ergodic case, we have  regardless of the choice of initial probabilities π0(i), i ∈ J.

regardless of the choice of initial probabilities π0(i), i ∈ J.

The validity of the last statement, assuming condition 2, may be seen from the following argument:

If, for some n, all |πn(i, j) − πj| < , then

, then

since the π0(i) sum to unity.

To determine whether or not an irreducible, aperiodic chain is ergodic, the problem is to determine whether there is a set of πj to satisfy the equation in Definition 7-2k. In the case of finite chains, this may be approached algebraically.

Example 7-2-7

Consider once more the two-state process described in Example 7-2-4. This process is characterized by the transition matrix

The equation in the definition for stationary distributions can be written

where  is the unit matrix. In addition, we have the condition π1+π2 = 1. Writing out the matrix equation, we get

is the unit matrix. In addition, we have the condition π1+π2 = 1. Writing out the matrix equation, we get

From this we get π1 = π2/2, so that  and

and  . A check shows that this set is in fact a stationary distribution. It is interesting to compare these stationary probabilities with those in

. A check shows that this set is in fact a stationary distribution. It is interesting to compare these stationary probabilities with those in  2,

2,  3,

3,  2, and

2, and  3 in Example 7-2-4. It appears that the convergence to the limiting values is quite rapid. After a relatively few periods, the probability of being in state 2 (the failure state) during any given period approaches

3 in Example 7-2-4. It appears that the convergence to the limiting values is quite rapid. After a relatively few periods, the probability of being in state 2 (the failure state) during any given period approaches  . This condition prevails regardless of the initial probabilities.

. This condition prevails regardless of the initial probabilities.

As a final example, we consider a classical application which has been widely studied (cf. Feller [1957, pp. 343 and 358f] or Kemeny and Snell [1960, chap. 7, sec. 3]).

Example 7-2-8 The Ehrenfest Diffusion Model

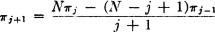

This model assumes N + 1 states, s0, s1, …, sN. The transition probabilities are π(i, i) = 0 for all i, π(i, j) = 0 for |i − j| > 1, π(i, i −1) = i/N, and π(i, i + 1) = 1πi/N. This model was presented in a paper on statistical mechanics (1907) as a representation of a conceptual experiment in which N molecules are distributed in two containers labeled A and B. At any given transition time, a molecule is chosen at random from the total set and moved from its container to the other. The state of the system is taken to be the number of molecules in container A. If the system is in state i, the molecule chosen is taken from A and put into B, with probability i/N, or is taken from B and put into A, with probability 1 − i/N. The first case changes the state from si to si−1; the second case results in moving to state si+1.

SOLUTION The defining equations for the stationary distributions become

Solving recursively, we can obtain expressions for each of the πj in terms of π0. We have immediately

The basic equation may be rewritten in the form

so that

It may be noted that this is of the form  . If πr =

. If πr =  for 0 ≤ r ≤ j, the same formula must hold for r = j + 1. We have

for 0 ≤ r ≤ j, the same formula must hold for r = j + 1. We have

Use of the definitions and some easy algebraic manipulations show that the expression on the right reduces to the desired form. Hence, by induction,  for all j = 1. 2, …, N. Now

for all j = 1. 2, …, N. Now

so that the stationary distribution is the binomial distribution with  The mean value of a random variable with this distribution is thus N/2 (Example 5-2-2).

The mean value of a random variable with this distribution is thus N/2 (Example 5-2-2).

Feller [1957] has given an alternative physical interpretation of this random process. For a detailed study of the characteristics of the probabilities, waiting times, etc., the work by Kemeny and Snell [1960], cited above, may be referred to.

A wide variety of physical and economic problems have been studied with Markov-chain models. The present treatment has sketched some of the fundamental ideas and results. For more details of both application and theory, the references cited in this section and at the end of the chapter may be consulted. Extensive bibliographies are included in several of these works, notably that by Bharucha-Reid [1960].

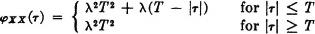

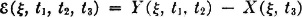

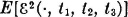

In some applications it is natural to consider the differences between values taken on by a sample function at specific instants of time. For such purposes, it is natural to make the following

Definition 7-3a

If X is a random process and t1 and t2 are any two distinct values of the parameter set T, then the random variable X(·, t2) − X(·, t1) is called an increment of the process.

Increments of random processes are frequently assumed to have one or both of two properties, which we consider briefly.

Definition 7-3b

A random process X is said to have independent increments iffi, for any n and any t1 < t2 < · · · < tn, with each ti ∈ T, it is true that the increments X(·, t2) − X(·, t1), X(·, t3) − X(·, t2), …, X(·, tn) − X(·, tn−1) form an independent class of random variables.

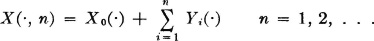

One may construct a simple example of a process with independent increments as follows. Begin with any sequence of independent random variables {Yi(·): 1 ≤ i < ∞ } and an arbitrary random variable X0(·). Put

The resulting process {X(·, n): 1 ≤ n < ∞} has independent increments.

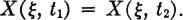

Definition 7-3c

A random process X is said to have stationary increments iffi, for each t1 < t2 and h > 0 such that t1, t2, t1 + h, t2 + h all belong to T, the increments X(·, t2) − X(·, t1) and X(·, t2 + h) − X(·, h + h) have the same distribution.

The Poisson process considered below has increments that are both independent and stationary.

Counting processes

A counting process N is one in which the value N( , t) of a sample function “counts” the number of occurrences of a specified phenomenon in an interval [0, t). For each choice of

, t) of a sample function “counts” the number of occurrences of a specified phenomenon in an interval [0, t). For each choice of  , a particular sequence of occurrences of this phenomenon results. The sample function N(

, a particular sequence of occurrences of this phenomenon results. The sample function N( ,·) for the process is the counting function corresponding to this particular sequence. The number of occurrences in the interval [t1, t2) is given by the increment N(

,·) for the process is the counting function corresponding to this particular sequence. The number of occurrences in the interval [t1, t2) is given by the increment N( , t2) − N(

, t2) − N( , t1) of that particular sample function. Obviously, a counting process has values which are integers. Counting processes have been derived which count a wide variety of phenomena, e.g., the number of telephone calls in a given time interval, the number of errors in decoding a message from a communication channel in a given time period, the number of particles from a radioactive source striking a target in a given time, etc.

, t1) of that particular sample function. Obviously, a counting process has values which are integers. Counting processes have been derived which count a wide variety of phenomena, e.g., the number of telephone calls in a given time interval, the number of errors in decoding a message from a communication channel in a given time period, the number of particles from a radioactive source striking a target in a given time, etc.

The Poisson process

One of the most common of the counting processes is the Poisson process, so named because of the nature of the distributions for its random variables. Since it is a counting process, it has integer values. Its parameter or index set T is usually taken to be the nonnegative real numbers [0, ∞). The process appears in situations for which the following assumptions are valid.

1. N(·, 0) = 0 [P]; that is, P[N(·, 0) = 0] = 1.

2. The increments of the process are independent.

3. The increments of the process are stationary.

4. P[N(·, t) > 0] > 0 for all t > 0

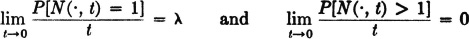

5.

We have thus described a counting process with stationary and independent increments which has unit probability of zero count in an interval of length zero. The probability of a positive count in an interval of positive length is positive, and the probability of more than one count in a very short interval is of smaller order than the probability of a single count.

With the aid of a standard theorem from analysis, it may be shown that assumptions 4 and 5 may be replaced with the following:

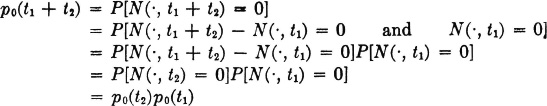

In order to see this, consider

Then

If we consider g(t) = − loge p0(t), for t ≥ 0, we must have

This linear functional equation is known to have the solution

Putting g(1) = λ, a positive constant, we have

This being the case,

which implies

Assumption 5 then requires

It is also apparent that conditions 1, 2, 3, 4′, and 5′ imply the original set.

We now wish to determine the probabilities

We begin by noting that we may have i occurrences in an interval of length t + Δt by having

i occurrences in the first interval of length t and

0 occurrences in the second interval of length Δt,

or i − 1 in the first interval and 1 in the second,

or i − 2 in the first interval and 2 in the second,

etc., to the case

0 in the first interval and i in the second.

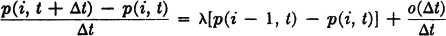

These events are mutually exclusive, so that their probabilities add. Thus

We have used conditions 2 and 3 to obtain the last expression. Conditions 4′ and 5′ imply

where o(Δt) indicates a quantity which goes to zero faster than Δt as the latter goes to zero. Substituting these expressions into the sum for p(i, t + Δt) and rearranging, we obtain

or

Taking the limit as Δt goes to zero, we obtain the right-hand derivatives. A slightly more complicated argument holds for Δt < 0, so that we have the differential equations

Condition 1 implies the boundary conditions

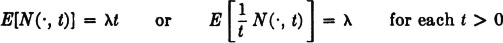

The solution of the set of differential equations under the boundary conditions imposed is readily found to be

which means that, for each positive t, the random variable N(·, t) has the Poisson distribution with  = λt (Example 3-4-7). Since the mean value for the Poisson distribution is

= λt (Example 3-4-7). Since the mean value for the Poisson distribution is  , we have

, we have

so that λ is the expected frequency, or mean rate, of occurrence of the phenomenon counted by the process.

The Poisson process has served as a model for a surprising number of physical and other phenomena. Some of these which are commonly found in the literature are

Radiation phenomena: the number of particles emitted in a given time t

Accidents, traffic counts, misprints

Demands for service, maintenance, sales of units of merchandise, admissions, etc.

Counts of particles suspended in liquids

Shot noise in vacuum tubes

A fortunate feature of the Poisson process is the fact that it is governed by a single parameter which has a simple interpretation, as noted above.

A number of generalizations and modifications of the Poisson process have been studied and applied in a variety of situations. For a discussion of some of these, one may refer to Parzen [1962, chap. 4] or Bharucha-Reid [1960, sec. 2.4] and to references cited in these works.

In Sec. 7-1 it was pointed out that one way of describing and studying random processes is by using joint distributions for finite subfamilies of random variables in the process. Analytically, this description is made in terms of distribution functions, just as in the case of several random variables considered jointly.

Definition 7-4a

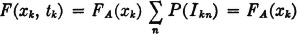

The first-order distribution function F(·,·) for a random process X is the function defined by

for every real x and every t ∈ T

for every real x and every t ∈ T

The second-order distribution function for the process is the function defined by

F(x, t; y, s) = P[X(·, t) ≤ x, X(·, s) ≤ y] for every pair of real x and y and every pair of numbers t and s from the index set T.

Distribution functions of any order n are defined similarly.

The first-order distribution function for any fixed t is the ordinary distribution function for the random variable X(·, t) selected from the family that makes up the process. The second-order distribution is the ordinary joint distribution function for the pair of random variables X(·, t) and X(·, s) selected from the process. These distribution functions have the properties generally possessed by joint distribution functions, as discussed in Sees. 3-5 and 3-6.

The following schematic example may be of some help in grasping the significance of the distribution functions for the process.

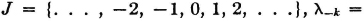

A process has four sample functions (hence the basic space may be considered to have four elementary events). The functions are shown in Fig. 7-4-1. We let

SOLUTION Examination of the figure shows that none of the sample functions lies below the value a at t = t1, so that F (a, t1) = 0. Only the function X( 1,·) lies below b at t = t1 so that F(b, t1) = p1. Examination of the other cases indicated shows that

1,·) lies below b at t = t1 so that F(b, t1) = p1. Examination of the other cases indicated shows that

For the second-order distribution, some values are

In the first case, none of the sample functions lies below a at both t1 and t2; in the second case, only X( 1,·) lies below b at t1 and c at t2; in the third case, only X(

1,·) lies below b at t1 and c at t2; in the third case, only X( 2,·) lies below c at t2 and below b at t3. Other cases may be evaluated similarly.

2,·) lies below c at t2 and below b at t3. Other cases may be evaluated similarly.

That this process is not realistic is obvious; almost any process in which the sample functions are continuous will have an infinity of such sample functions.

It is apparent that if a process is defined, the distribution functions of all finite orders are defined. Kolmogorov [1933, 1956] has shown that if a family of such distribution functions is defined, there exists a process for which they are in fact the distribution functions. The events involved in the definition are of the form

Fig. 7-4-1 A simple schematic random process with four sample functions

The distribution functions of finite order assign probabilities to these events for any finite subset T0 of the parameter set. Fundamental measure theory shows that the probability measure so defined ensures the unique definition of probability for all such sets when T0 is countably infinite. Serious technical mathematical problems arise when T0 is uncountably infinite. It usually occurs in practice, however, that analytic conditions upon the sample functions are such that they are determined by values at a countable number of points t in the index set T. A discussion of these conditions is well beyond the scope of the present treatment. For a careful development of these mathematical conditions, the treatise by Doob [1953, chap. 2] may be consulted. Fortunately, in practice it is rarely necessary to be concerned with these problems, so that we can forgo a discussion of them in an introductory treatment.

In this section we consider a random process (actually a class of processes) whose sample functions have a step-function character; i.e., the graph of a member function has jumps in value at discrete instants of time and remains constant between these transition times. The distribution of amplitudes in the various time intervals and the distribution of transition, or jump, times at which the sample function changes value is described in rather general terms, so that a class of processes is thus included.

Such a step process is commonly assumed in practice, e.g., in the theory of control systems with “randomly varying” inputs. The derivation of the first and second distribution functions illustrates a number of interesting points of theory. These processes are used in later sections to illustrate a number of concepts and ideas developed there.

Description of the process

Consider the step-function process

where u+(t) = 0 for t < 0 and = 1 for t ≥ 0. For any choice of  , a step function is defined. The steps occur at times t = τn(

, a step function is defined. The steps occur at times t = τn( ). The value of the function in the nth interval [τn(

). The value of the function in the nth interval [τn( ), τn+1(

), τn+1( )) is an(

)) is an( ). We make the following assumptions about the random sequences which characterize the process.

). We make the following assumptions about the random sequences which characterize the process.

1. {an(·): − ∞ < n < ∞} is a random process whose members form an independent class; each of these random variables has the same distribution, described by the distribution function FA(·).

2. {τn(·): − ∞ < n <∞} is a random process satisfying the following conditions:

a. For every  and every n, τn(

and every n, τn( ) < τn+1(

) < τn+1( ).

).

b. The distribution of the jump points τn( ) is described by a counting process N(·, t). For any pair of real numbers t1, t2 we put N(

) is described by a counting process N(·, t). For any pair of real numbers t1, t2 we put N( , t1, t2) = |N(

, t1, t2) = |N( , t2) −N(

, t2) −N( , t1)| and suppose

, t1)| and suppose

is a known function of t1 t2. For example, if the counting process is Poisson,  . We must also have

. We must also have

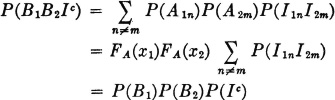

3. The an(·) process and the τn(·) process must be independent in an appropriate sense. We may state the independence conditions precisely in terms of certain events defined as follows. Let t1, t2 be any two arbitrarily chosen real numbers (t1 ≠ t2). Let

If x1, x2 are any two real numbers, let

Then {A1m, A2n, I1pI2q} is an independent class for each choice of the indices m, n, p, q, provided m ≠ n. The significance of the independence assumption in this particular form will appear in the subsequent analysis.

Determination of the distribution functions

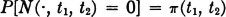

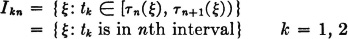

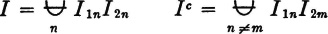

We wish to determine the first and second distribution functions for the process X. In order to state the arguments with precision, it is expedient to define some events. Let

We shall use the relation

Under the assumed independence conditions, it seems intuitively likely that P[X(·, t1) ≤ X1] = FA(x1). A problem arises, however, from the fact that, for each  , the point t1 may be in a different interval. To validate the expected result, we establish a series of propositions.

, the point t1 may be in a different interval. To validate the expected result, we establish a series of propositions.

1. For fixed k, {Ikn: −∞ < n < ∞} is a partition, since, for each  , tk belongs to one and only one interval. We may then assert that for each k, j, n, fixed, {IknIjm: − ∞ < m < ∞ } is a partition of Ikn

, tk belongs to one and only one interval. We may then assert that for each k, j, n, fixed, {IknIjm: − ∞ < m < ∞ } is a partition of Ikn

2.

This follows from the fact that t1 and t2 belong to the same interval iffi, for some n, I1n and I2n both occur. The disjointedness follows from property 1.

3. Use of Theorem 2-6G shows that for each permissible j, k, m, n, each class {Ajm, Ikn}, {Ajm, I}, and {A1mA2n, I} is an independent class.

4.

5. F(xk, tk) = FA(xk). We may argue as follows:

Now P(Akn) = FA(xk) for any n; hence

We have thus established the first assertion to be proved. A somewhat more complicated argument, along the same lines, is used to develop an expression for the second distribution. We shall argue in terms of the conditional probabilities in expression (C), above.

6. Bk and I are independent for k = 1 or 2.

from which the result follows as in the previous arguments. An exactly similar argument follows for B2I.

We note that I1nI2nIc =  and I1nI2n ⊂ Ic for n ≠ m; hence

and I1nI2n ⊂ Ic for n ≠ m; hence

By the independence assumptions

Thus

For the last assertion we have used the independence of Bk and Ic, which follows from the independence of Bk and I.

If I occurs,

For x1 ≤ x2, B1I ⊂ B2I and B1B2I = B1I; similarly, for x2 ≤ x1, B2I ⊂ B1I and B1B2I = B2I. Because of the independence shown earlier, if x1 ≤ x2, P(B1B2I) = P(B1I) = P(B1)P(I) = FA(x1)P(I). For x2 ≤ x1, interchange subscripts 1 and 2 in the previous statements. Thus

from which the asserted relation follows immediately.

We thus may assert

When the counting process describing the distribution of jump points is known, the probabilities P(I) = π(t1 t2) and P(Ic) = 1 − π(t1, t2) are known. If the distribution of jump points is Poisson, we have

It is of interest to note that the first distribution function is independent of t and the second depends upon the difference between the two values t1 and t2 of the parameter. The significance of this fact is discussed in Sec. 7-8.

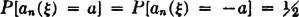

The following special case represents a situation found in many pulse-code transmission systems and computer systems. We deal with it as a special case, although it could be dealt with directly by simple arguments based on fundamentals.

Example 7-5-1

Suppose each member of the random process consists of a voltage wave whose value in any interval is either ±a volts. Switching may occur at any of the prescribed instants τn = nT, where T is a positive constant. The values ±a are taken on with probability ½ each. Values in different intervals are independent. This process may be considered a special case of the general step process. The τ process consists of the constants τn(·) = nT. The process  is an independent class with

is an independent class with

Since constant random variables are independent of any combination of other random variables, the required independence conditions follow. For any t1 t2 P[N(·, t1 t2) = 0] = π(t1, t2) is either 0 or 1 and is a known function of t1 t2. Thus

π(t, u) = 1, whenever t, u are in the same interval, and has the value 0 whenever t, u are in different intervals. Note that in this case we do not have π(t, u) a function of the difference t − u alone, as in the case of a Poisson distribution of the transition times.

In many control situations, say, an on-off control, the value of the control signal may be limited to two values, as in the pulse-code example above, but the transition times are not known in advance. In this case, it is necessary to assume (usually on the basis of experience) a counting-function distribution. When the expected number of occurrences in any interval is a constant, the assumptions leading to the Poisson counting function are often valid.

Example 7-5-2

The first stage of an electronic binary counter produces a voltage wave which takes on one of two values. Each time a “count” occurs, the stage switches from one of its two states to the other. The output is a constant voltage corresponding to the state. The state, and hence the voltage, is maintained until the next count. If the counter should be counting cars passing a given point, the output wave would have the character of a two-value step function with a Poisson distribution of the transition times.

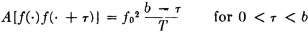

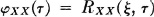

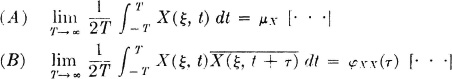

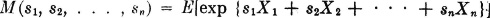

It should not be surprising that the important role of mathematical expectations in the study of random variables should extend to random processes, since the latter are infinite families of random variables. In this section, we shall consider briefly some special expectations which are important in the analytical treatment of many random processes. In particular, we shall be concerned with the covariance function and with the closely related correlation functions. These functions play a central role in the celebrated theory of extrapolation and smoothing of “time series” developed somewhat independently in Russia by A. N. Kolmogorov and in the United States by Norbert Wiener, about 1940. The first applications of this theory were to wartime problems of “fire control” of radar and antiaircraft guns and to related problems of communicating in the presence of noise. Through his teaching and writing on the subject, Y. W. Lee pioneered in the task of making this abstruse theory available to engineers. In this country the work is often referred to as the Wiener-Lee statistical theory of communication. The original theoretical development laid the groundwork for further intensive development of the theory, as well as for applications of the theory to a wide range of real-world problems. Unfortunately, to develop this theory to the point that significant applications could be made would require the development of topics outside the scope of this book. Technique becomes difficult, even for simple problems. The complete theory requires the use of spectral methods based on the Fourier, Laplace, and other related transforms. We shall limit our discussion to an introduction of the probability concepts which underlie the theory. Examples must therefore be limited essentially to simple illustrations of the concept. For applications, one may consult any of a number of books in the field of statistical theory of communication and control (e.g., Laning and Battin [1956] or Davenport and Root [1958]).

We proceed to define and discuss several concepts.

Definition 7-6a

A process X is said to be of order  if, for each

if, for each  (

( is a positive integer).

is a positive integer).

From an elementary property of random variables it follows that if the process X is of order  , it is of order k for all positive integers k <

, it is of order k for all positive integers k <  .

.

Definition 7-6b

The mean-value function for a process is the first moment  X(t) = E[X(t)]

X(t) = E[X(t)]

The mean-value function for a process X exists if the process is of first order (or higher).

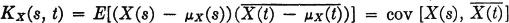

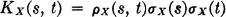

In the consideration of any two real-valued random variables of order 2, a convenient measure of their relatedness is provided by the covariance factor, defined as follows:

Definition 7-6c

The covariance factor cov [X, Y] for two random variables is given by

Simple manipulations show cov [X, Y] = E[X Y] −  X

X Y. If the random variables are independent, cov [X, Y] = 0; if one of the random variables is a linear function of the other—say, Y(·) = aX(·) + b—then

Y. If the random variables are independent, cov [X, Y] = 0; if one of the random variables is a linear function of the other—say, Y(·) = aX(·) + b—then

The covariance factor may be normalized in a useful way to give the correlation coefficient, defined as follows:

Definition 7-6d

The correlation coefficient for two random variables is given by

Use of the Schwarz inequality (E5) shows that

so that − 1 ≤  [X, Y] ≤ 1. When

[X, Y] ≤ 1. When  [X, Y] = 0, we say the random variables are uncorrelated. Independent random variables are always uncorrelated; however, if two random variables are uncorrelated, it does not follow that they are independent, as may be shown by simple examples. By the use of properties (V2) and (V4), one may show that

[X, Y] = 0, we say the random variables are uncorrelated. Independent random variables are always uncorrelated; however, if two random variables are uncorrelated, it does not follow that they are independent, as may be shown by simple examples. By the use of properties (V2) and (V4), one may show that

Equality can occur iffi  , where c is an appropriate constant. This means that

, where c is an appropriate constant. This means that

The plus sign corresponds to  =

=  [X, Y] = 1, and the minus sign corresponds to

[X, Y] = 1, and the minus sign corresponds to  = − 1.

= − 1.

Let us look further at the significance of the correlation coefficient by considering the joint probability mass distribution. If  = +1, the probability mass must all lie on the straight line

= +1, the probability mass must all lie on the straight line

Fig. 7-6-1 Geometric relations for interpreting the correlation coefficient  .

.

This line is shown in Fig. 7-6-1. Now suppose 0 <  < 1. Image points for the mapping, and hence probability mass, may lie at points off the straight line. Consider the image point t1 u1 shown in Fig. 7-6-1. The distance of the point from the line is proportional to the vertical distance |υ1|, where

< 1. Image points for the mapping, and hence probability mass, may lie at points off the straight line. Consider the image point t1 u1 shown in Fig. 7-6-1. The distance of the point from the line is proportional to the vertical distance |υ1|, where

It is convenient, in fact, to consider the quantity w1 proportional to υ1, determined by the relation

An examination of the argument above shows that we must have

so that

Since t1 and u1 are supposed to be simultaneous observations of the random variables X(·) and Y(·), respectively, w1 must be a corresponding observation of the random variable

This is the difference between the standardized random variables X′(·) and Y′(·). The mean value  w = 0, and the variance σW2 = 2(1 −

w = 0, and the variance σW2 = 2(1 −  ). Thus

). Thus  serves to measure the tendency of the standardized random variables X′(·) and Y′(·) to take on different values. The expression for σW2 holds for negative as well as positive values of

serves to measure the tendency of the standardized random variables X′(·) and Y′(·) to take on different values. The expression for σW2 holds for negative as well as positive values of  .

.

As an illustration of these ideas, consider two random variables distributed uniformly on the interval [−a, a]. Several joint distributions are shown in Fig. 7-6-2. For  = 1, the mass is concentrated along the line u = t. For