The concept of mathematical expectation seems to have been introduced first in connection with the development of probability theory in the early study of games of chance. It also arose in a more general form in the applications of probability theory to statistical studies, where the idea of a probability-weighted average appears quite naturally. The concept of a probability-weighted average finds general expression in terms of the abstract integrals studied in Chap. 4. Use of the theorems on the transformation of integrals, developed in Sec. 4-6, makes it possible to derive from a single basic expression a variety of special forms of mathematical expectation employed in practice; the topic is thus given unity and coherence. The abstract integral provides the underlying basic concept; the various forms derived therefrom provide the tools for actual formulation and analysis of various problems. Direct application of certain basic properties of abstract integrals yields corresponding properties of mathematical expectation. These properties are expressed as rules for a calculus of expectations. In many arguments and manipulations it is not necessary to deal directly with the abstract integral or with the detailed special forms.

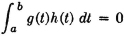

The clear identification of the character of a mathematical expectation as a probability-weighted average makes it possible to give concrete interpretations, in terms of probability mass distributions, to some of the more commonly employed expectations.

Brief attention is given to the concept of random sampling, which is fundamental to statistical applications. Some of the basic ideas of information theory are presented in terms of random variables and mathematical expectations. Moment-generating functions and the closely related characteristic functions for random variables are examined briefly.

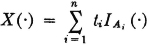

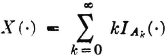

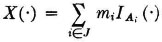

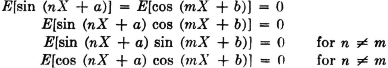

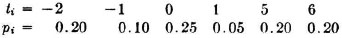

The concept of mathematical expectation arose early in the history of the theory of probability. It seems to have been introduced first in connection with problems in gambling, which served as a spur to much of the early effort. Suppose a game has a number of mutually exclusive ways of being realized. Each way has its probability of occurring, and each way results in a certain return (or loss, which may be considered a negative return) to the gambler. If one sums the various possible returns multiplied by their respective probabilities, one obtains a number which, by intuition and a certain kind of experience, may be called the expected return. In terms of our modern model, this situation could be represented by introducing a simple random variable X(·) whose value is the return realized by the gambler. Suppose X(·) is expressed in canonical form as follows:

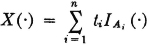

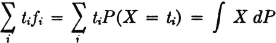

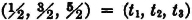

The values ti are the possible returns, and the corresponding Ai are the events which result in these values of return. The expected return for the game described by this random variable is the sum

This is a probability-weighted average of the possible values of X(·).

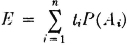

The concept of a weighted average plays a significant role in sampling statistics. A sample of size n is taken. The members of the sample are characterized by numbers. Either these values are from a discrete set of possible values or they are classified in groups, by placing all values in a given interval into a single classification. Each of these classifications is represented by a single number in the interval. Suppose the value assigned to the ith classification is ti and the number of members of the sample in this classification is ni. The average value is given by

where fi = ni/n is the relative frequency of occurrence of the ith classification in the sample.

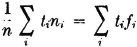

To apply probability theory to the sampling problem, we may suppose that we are dealing with a random variable X(·) whose range T is the set of ti. To a first approximation, at least, we suppose P(X = ti) = fi. In that case, the sum becomes

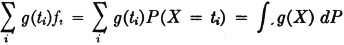

In a similar way, if we deal with a function g(·) of the values of the sample, the weighted average becomes

The notion of mathematical expectation arose out of such considerations as these. Because of the manner in which integrals are defined in Chap. 4, it seems natural to make the following definition in the general case.

Definition 5-1a

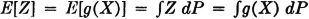

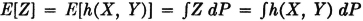

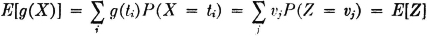

If X(·) is a real-valued random variable and g(·) a Borel function, the mathematical expectation E[g(X)] = E[Z] of the random variable Z(·) = g[X(·)] is given by

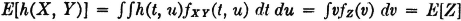

Similarly, if X(·) and Y(·) are two real-valued random variables and h(·) is a Borel function of two real variables, the mathematical expectation E[h(X, Y)] = E[Z] of the random variable Z(·) = h[X(·), Y(·)] is given by

The terms ensemble average (ensemble being a synonym for set) and probability average are sometimes used. The term expectation is frequently suggestive and useful in attempting to visualize or anticipate results. But as in the case of names for other concepts, such as independence, the name may tempt one to bypass a mathematical argument in favor of some intuitively or experientially based idea of “what may be expected.” It is probably most satisfactory to think in terms of probability-weighted averages, in the manner discussed after the definitions of the integral in Secs. 4-2 and 4-4.

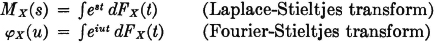

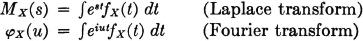

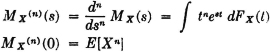

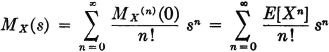

By virtue of the theoretical development of Chap. 4, we may proceed to state immediately a number of equivalent expressions and relations for mathematical expectations. These are summarized below:

Single real-valued random variable X(·): Mapping t = X( ) from S to R1

) from S to R1

Probability measure PX(·) induced on Borel sets of R1

Probability distribution function FX(·) on R1

Probability density function fX(·) in the absolutely continuous case Mapping υ = g(t) from R1 to R2 produces mapping υ = Z( ) = g[X(

) = g[X( )] from S to R2

)] from S to R2

Probability measure PZ(·) induced on the Borel sets of R2

Probability distribution function FZ(·) on R2

Probability density function fZ(·) in the absolutely continuous case

In dealing with continuous distributions and mixed distributions, we shall assume throughout this chapter that there is no singular continuous part (Sec. 4-5). Thus the continuous part is the absolutely continuous part, for which a density function exists.

General case:

Discrete case:

Absolutely continuous case:

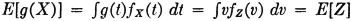

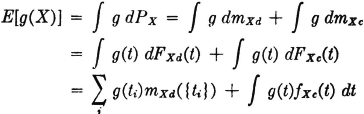

Mixed case: Consider the probability measure PX(·) on R1. We may express PX(·) = mXd(·) + mXc(·). The measure mXd(·) describes the discrete mass distribution on R1, and the measure mXc(·) describes the (absolutely) continuous mass distribution on R1. Corresponding to the first, there is a distribution function FXd(·), and corresponding to the second, there is a distribution function FXc(·) and a density function fXc(·). We may then write

We note that mXd({ti}) = PX({ti}) = P(X = ti).

A similar development may be made for the mass distribution on the line R2 corresponding to PZ(·).

We have adopted the notational convention that integrals over the whole real line (or over the whole euclidean space in the case of several random variables) are written without the infinite limits, in order to simplify the writing of expressions. Integrals over subsets are to be designated as before.

Let us illustrate the ideas presented above with some simple examples.

Example 5-1-1

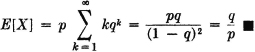

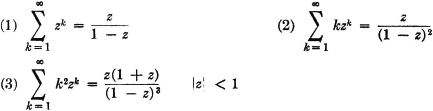

Determine E[X] when X(·) has the geometric distribution (Example 3-4-6). The range is {k: 0 ≤ k < ∞}, with pk = P(X = k) = pqk, where q = 1 − p.

As an example of the absolutely continuous case, consider

Example 5-1-2

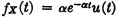

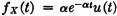

Let g(t) = tn, and let X(·) have the exponential distribution with fX(t) = αe−αtu(t), α > 0.

The lower limit of the integral is set to zero because the integrand is zero for negative t.

In the mixed case, each part is handled as if it were the expectation for a discrete or a continuous random variable, with the exception that the probabilities, distribution functions, and density functions are modified according to the total probability mass which is discretely or continuously distributed. As the next example shows, it is usually easier to calculate the expectation as E[g(X)] rather than as E[Z], since it is then not necessary to derive the mass distribution for Z(·) from that given for X(·).

Example 5-1-3

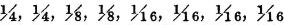

Suppose g(t) = t2 and suppose X(·) produces a mass distribution PX(·) on R1 which may be described as follows. A mass of  is uniformly distributed in the interval [−2, 2]. A point mass of

is uniformly distributed in the interval [−2, 2]. A point mass of  is located at each of the points t = −2, −1, 0, 1, 2.

is located at each of the points t = −2, −1, 0, 1, 2.

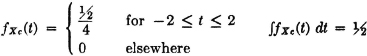

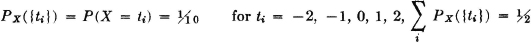

DISCUSSION The density function for the continuous part of the distribution for X(·) is given by

The probability masses for the discrete part of the distribution for X(·) are described by

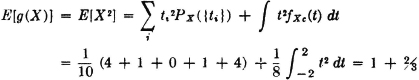

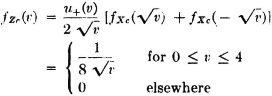

We may make an alternative calculation by dealing with the mass distribution induced on R2 by Z(·) = g[X(·)], as follows. Using the result of Example 3-9-1 for the continuous part, we have

For the discrete part, we have range υk = 0, 1, 4, with probabilities  , and

, and  respectively.

respectively.

The situation outlined above for a single random variable may be extended to two or more random variables. We write out the case for two random variables. As in the case of real-valued random variables, we shall assume that there is no continuous singular part, so that continuous distributions or continuous parts of distributions are absolutely continuous and have appropriate density functions.

Two random variables X(·) and Y(·) considered jointly:

Mapping (t, u) = [X, Y]( ) from S to the plane R1 × R2 Probability measure PXY(·) induced on the Borel sets of R1 × R2

) from S to the plane R1 × R2 Probability measure PXY(·) induced on the Borel sets of R1 × R2

Joint probability distribution function FXY(·, ·) on R1 × R2

Joint probability density function fXY(·, ·) in the continuous case Mapping υ = h(t, u) from R1 × R2 to R3 produces the mapping υ = Z( ) = h[X(

) = h[X( ), Y(

), Y( )] from S to R3

)] from S to R3

Probability measure PZ(·) induced on the Borel sets of R3

Probability distribution function FZ(·) on R3

Probability density function fZ(·) in the continuous case

General case:

Discrete case:

Mixed cases for point masses and continuous masses may be handled in a manner analogous to that for the single variable. Suitable joint distributions must be described by the appropriate functions. We illustrate with a simple mixed case.

Example 5-1-4

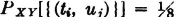

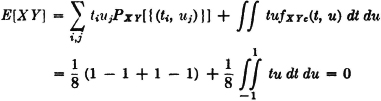

Let h(t, u) = tu so that Z(·) = X(·)Y(·). The random variables are those in Example 3-9-8 (Fig. 3-9-5). A mass of  is continuously and uniformly distributed over the square, which has corners at (t, u) = (·1, −1), (−1, 1), (1, 1), (1, −1). A point mass of

is continuously and uniformly distributed over the square, which has corners at (t, u) = (·1, −1), (−1, 1), (1, 1), (1, −1). A point mass of  is located at each of these corners.

is located at each of these corners.

DISCUSSION The discrete distribution is characterized by  for each pair (ti, uj) corresponding to a corner of the square. The continuous distribution can be described by a joint density function

for each pair (ti, uj) corresponding to a corner of the square. The continuous distribution can be described by a joint density function

Thus

Calculations for E[Z] are somewhat more complicated because of the more complicated expressions for the mass distribution on R2.

Before considering further examples, we develop some general properties of mathematical expectation which make possible a considerable calculus of mathematical expectation, without direct use of the integral and summation formulas. This is the topic of the next section.

A wide variety of mathematical expectations are encountered in practice. Two very important ones, known as the mean value and the standard deviation, are studied in the next two sections. Before examining these, however, we discuss in this section some general properties of mathematical expectation which do not depend upon the nature of any given probability distribution. These properties are, for the most part, direct consequences of the properties of integrals developed in Chap. 4. These properties provide the basis for a calculus of expectations which makes it unnecessary in many arguments and manipulations to deal directly with the abstract integral or with the detailed special forms derived therefrom.

Unless special conditions—particularly the use of inequalities—indicate otherwise, the properties developed below hold for complex-valued random variables as well as for real-valued ones. For a brief discussion of the complex-valued case, see Appendix E. The statements of the properties given below tacitly assume the existence of the indicated expectations. This is equivalent to assuming the integrability of the random variables.

We first obtain a list of basic properties and then give some very simple examples to illustrate their use in analytical arguments. As a first property, we utilize the definition of the integral of a simple function to obtain

(E1) E[aIA] = aP(A) where a is a real or complex constant

A constant may be considered to be a random variable whose value is the same for all  . If the random variable is almost surely equal to a constant, then for most purposes in probability theory it is indistinguishable from that constant. As an expression of the linearity property of the integral, we have

. If the random variable is almost surely equal to a constant, then for most purposes in probability theory it is indistinguishable from that constant. As an expression of the linearity property of the integral, we have

(E2) Linearity. If a and b are real or complex constants,

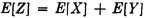

E[aX + BY] = aE[X] + bE[Y]

By a simple process of mathematical induction, the linearity property may be extended to linear combinations of any finite number of random variables. The mathematical expectation thus belongs to the class of linear operators and hence shares the properties common to this important class of operators.

Members of the class of linear operators which have the positivity property share a number of important properties. If X(·) and Y(·) are real-valued or complex-valued random variables, the mathematical expectation  has the character of an inner product, which plays an important role in the theory of linear metric spaces. Note that the bar over Y indicates the complex conjugate.

has the character of an inner product, which plays an important role in the theory of linear metric spaces. Note that the bar over Y indicates the complex conjugate.

A restatement of property (I4) for integrals (as extended to the complex case in Appendix E) yields the important result

(E4) E[X] exists iffi E[|X|] does, and [E|X|] ≤ E[|X|].

The importance of this inequality in analysis is well known.

Another inequality of classical importance is the Schwarz inequality, characteristic of linear operators with the positivity property.

(E5) Schwarz inequality. |E[XY)|2 ≤ E|[X|2]E[|Y|2]. In the real case, equality holds iffi there is a real constant λ such that λX(·) + Y(·) = 0 [P].

PROOF We first prove the theorem in the real case and then extend it to the complex case. By the positivity property (E3), for any real λ, E[(λX + Y)2] ≥ 0, with equality iffi λX(·) + Y(·) = 0 [P], Expanding the squared term and using linearity, we get

This is of the form Aλ2 + Bλ + C ≥ 0. The strict inequality holds iffi there is no real zero for the quadratic in λ. Equality holds iffi there is a second-order zero for the quadratic, which makes it a perfect square. Use of the quadratic formula for the zeros shows there is no real zero iffi B2 − 4AC < 0 and a pair of real zeros iffi B2 = 4AC. Examination shows these statements are equivalent to the Schwarz inequality. To extend to the complex case, we use property (E4) and the result for the real case as follows:

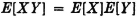

The product rule for integrals of independent random variables may be restated to give the

(E6) Product rule for independent random variables. If X(·) and Y(·) are independent, integrable random variables, then E[XY] = E[X]E[Y].

By a process of mathematical induction, this may be extended to any finite, independent class of random variables.

The next property is not a direct consequence of the properties of integrals, but may be derived with the aid of property (E3), above. It is so frequently useful in analytical arguments that we list it among the basic properties of mathematical expectation.

(E7) If g(·) is a nonnegative Borel function and if A = { : g(X) ≥ σ}, then E[g(X)] ≥ aP(A).

: g(X) ≥ σ}, then E[g(X)] ≥ aP(A).

PROOF If we show g[X( )] ≥ aIA(

)] ≥ aIA( ) for all

) for all  , the statement follows from property (E3). For

, the statement follows from property (E3). For  ∈ A, g[X(

∈ A, g[X( )] ≥ a while aIA(

)] ≥ a while aIA( ) = a; for

) = a; for  ∈ Ac, g[X(

∈ Ac, g[X( )] ≥ 0 while aIA(

)] ≥ 0 while aIA( ) = 0. Thus the inequality holds for all

) = 0. Thus the inequality holds for all  .

.

This elementary inequality is useful in deriving certain classical inequalities, such as the celebrated Chebyshev inequality in Theorem 5-4B.

A somewhat similar inequality is an extension of an inequality attributed to A. A. Markov.

(E8) If g(·) is a nonnegative, strictly increasing, Borel function of a single real variable and c is a nonnegative constant, then

PROOF |X| ≥ c iffi g(|X|) ≥ g(c). In property (E7), replace the constant a by the constant g(c).

For the special case g(t) = tk, we have the so-called Markov inequality.

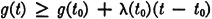

The next inequality deals with convex functions, which are defined as follows:

Definition 5-2a

A Borel function g(·) defined on a finite or infinite open interval I on the real line is said to be convex (on I) iffi for every pair of real numbers a, b belonging to I we have

It is known that a convex function must be continuous on I in order to be a Borel function. If g(·) is continuous, it is convex on I iffi to every t0 in I there is a number λ(t0) such that for all t in I

In terms of the graph of the function, this means that the graph of g(·) lies above a line passing through the point (t0, g(t0)). Using this inequality, we may extend a celebrated inequality for sums and integrals to mathematical expectation.

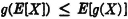

(E9) Jensen’s inequality. If g(·) is a convex Borel function and X(·) is a random variable whose expectation exists, then

PROOF In the inequality above, let t0 = E[X] and t = X(·); then take expectations of both sides, utilizing property (E3).

We now consider some simple examples designed to illustrate how the basic properties may be used in analytical arguments. The significance of mathematical expectation for real-world problems will appear more explicitly in later sections.

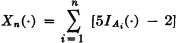

Example 5-2-1 Discrete Random Variables

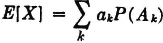

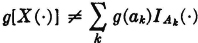

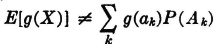

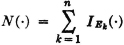

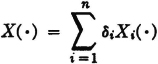

Let  . By the linearity property (E2) and property (E1), we have

. By the linearity property (E2) and property (E1), we have  , whether or not the expression is in canonical form. In general,

, whether or not the expression is in canonical form. In general,  if the Ak do not form a partition (Example 3-8-1); therefore, in general,

if the Ak do not form a partition (Example 3-8-1); therefore, in general,  . By virtue of the formulas in Sec. 3-8 for the canonical form, equality does hold if the Ak form a partition.

. By virtue of the formulas in Sec. 3-8 for the canonical form, equality does hold if the Ak form a partition.

Example 5-2-2 Sequences of n Repeated Trials

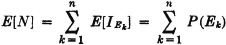

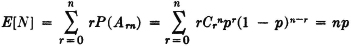

Let Ek be the event of a success on the kth trial. Then

is the number of successes in a sequence of n trials. According to the result in Example 5-2-1,

If P(Ek) = p for all k, this reduces to E[N] = np. No condition of independence is required. If, however, the Ek form an independent class, we have the case of Bernoulli trials studied in Examples 2-8-3 and 3-1-4. In that case, if we let Arn be the event of exactly r successes in the sequence of n trials, we know that the Arn form a partition and P(Arn) = Crnpr(1 − p)n−r. Hence

The series is summed in Example 2-8-10, in another connection, to give the same result. For purposes of computing the mathematical expectation, the first form for N(·) given above is the more convenient.

Example 5-2-3

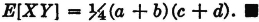

Two real-valued random variables X(·) and Y(·) have a joint probability mass distribution which is continuous and uniform over the rectangle a ≤ t ≤ b and c ≤ u ≤ d. Find the mathematical expectation E[XY].

SOLUTION The marginal mass distributions are uniform over the intervals a ≤ t ≤ b and c ≤ u ≤ d, respectively. The induced measures have the product property, which ensures independence of the two random variables. Hence, by property (E6), we have

Now

Similarly, E[Y] = (c + d)/2, so that

The next example is of interest in studying the variance and standard deviation, to be introduced in Sec. 5-4.

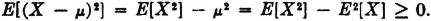

Suppose X(·) and X2(·) are integrable and E[X] =  . Then

. Then

SOLUTION

Another result which is sometimes useful is the following:

Example 5-2-5

For any two random variables X(·) and Y(·) whose squares are integrable, |E[XY]| ≤ max {E[|X|2], E[|Y|2]}.

SOLUTION By the Schwarz inequality, |E[XY]|2 ≤ E[|X|2]E[|Y|2]. The inequality is strengthened by replacing the smaller of the factors on the right-hand side by the larger. Hence |E[XY]|2 ≤ max {E2[|X|2], E2[|Y|2]). The asserted inequality follows immediately from elementary properties of inequalities for real numbers.

We turn, in the next two sections, to a study of two special expectations which are useful in describing the probability distributions induced by random variables.

Discussions in earlier sections show that a real-valued random variable is essentially described for many purposes, if the mass distribution which it induces on the real line is properly described. Complete analytical descriptions are provided by the probability distribution function. In many cases, a complete description of the distribution is not possible and may not be needed. Certain parameters provide a partial description, which may be satisfactory for many investigations. In this and the succeeding section, we consider two of the most common and useful parameters of the probability distribution induced by a random variable.

The simplest of the parameters is described in the following

Definition 5-3a

If X(·) is a real-valued random variable, its mean value, denoted by one of the following symbols  ,

,  X, or

X, or  [X], is denned by

[X], is denned by  [X] = E[X].

[X] = E[X].

We shall use PX(·) to denote the probability measure induced on the real line R, as in the previous sections. The mean value can be given a very simple geometrical interpretation in terms of the mass distribution. According to the fundamental forms for expectations, we may write

If {ti: i ∈ J} is the set of values of a simple random variable which approximates X(·) and if Mi = [ti, ti+1), then the approximating integral is

As the subdivisions become finer and finer, this approaches the first moment of the mass distribution on the real line about the origin. Since the total mass is unity, this moment is also the coordinate of the center of mass. Thus the mean value is the coordinate of the center of mass of the probability mass distribution. This mechanical picture is an aid in visualizing the location of the possible values of the random variable. As an example of the use of this interpretation, consider first the uniform distribution over an interval.

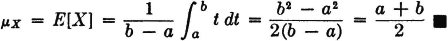

Example 5-3-1

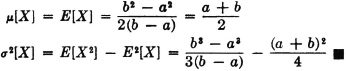

Suppose X(·) is uniformly distributed over the interval [a, b]. This means that probability mass is uniformly distributed along the real line between the points t = a and t = b. The center of mass, and hence the mean value, should be given by  X = (a + b)/2. This fact may be checked analytically as follows:

X = (a + b)/2. This fact may be checked analytically as follows:

As a second example, we may consider the gaussian, or normal, distribution.

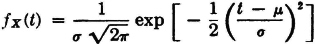

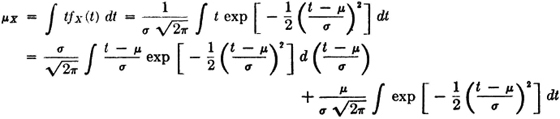

Example 5-3-2 The Normal Distribution

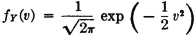

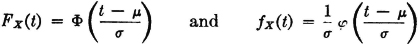

A real-valued random variable X(·) is said to have the normal, or gaussian, distribution if it is continuously distributed with probability density function

where  is a constant and σ is a positive constant. A graph of the density function is shown in Fig. 3-4-4. This is seen to be a curve symmetrical about the point t =

is a constant and σ is a positive constant. A graph of the density function is shown in Fig. 3-4-4. This is seen to be a curve symmetrical about the point t =  , as can be checked easily from the analytical expression. This means that the probability mass is distributed symmetrically about t =

, as can be checked easily from the analytical expression. This means that the probability mass is distributed symmetrically about t =  and hence has its center of mass at that point. The mean value of a random variable with the normal distribution is therefore the parameter

and hence has its center of mass at that point. The mean value of a random variable with the normal distribution is therefore the parameter  . Again, we may check these results by straightforward analytical evaluation. We have

. Again, we may check these results by straightforward analytical evaluation. We have

The integrand in the first integral in the last expression is an odd function of t −  ; since the integral exists, its value must be zero. The second term in this expression is

; since the integral exists, its value must be zero. The second term in this expression is  times the area under the graph for the density function and therefore must have the value

times the area under the graph for the density function and therefore must have the value  .

.

It is not always possible to use the center-of-mass interpretation so easily. Since  [X] is a mathematical expectation, the various forms of expression for and properties of the mean value are obtained directly from the expressions and properties of the mathematical expectation. Some of the examples in Sec. 5-2 are examples of calculation of the mean value. Example 5-2-1 gives a general formula for the mean value of a simple random variable. Example 5-2-2 gives the mean value for the number of successes in a sequence of n trials. In the Bernoulli case, in which the random variable has the binomial distribution (Example 3-4-5), the mean value is np. The constant

[X] is a mathematical expectation, the various forms of expression for and properties of the mean value are obtained directly from the expressions and properties of the mathematical expectation. Some of the examples in Sec. 5-2 are examples of calculation of the mean value. Example 5-2-1 gives a general formula for the mean value of a simple random variable. Example 5-2-2 gives the mean value for the number of successes in a sequence of n trials. In the Bernoulli case, in which the random variable has the binomial distribution (Example 3-4-5), the mean value is np. The constant  in Example 5-2-4 is the mean value for the random variable considered in that example.

in Example 5-2-4 is the mean value for the random variable considered in that example.

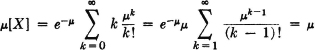

Example 5-3-3 Poisson’s Distribution

A random variable having the Poisson distribution is described in Example 3-4-7. This random variable has values 0, 1, 2, …, with probabilities

The mean value is thus

since the last series expression converges to e . Again, the choice of the symbol

. Again, the choice of the symbol  for the parameter in the formulas is based on the fact that this is a traditional symbol for the mean value.

for the parameter in the formulas is based on the fact that this is a traditional symbol for the mean value.

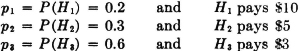

Example 5-3-4

A man plays a game in which he wins an amount t1 with probability p or loses an amount t2 with probability q = 1 − p. The net winnings may be expressed as a random variable W(·) = t1IA(·) − t2IAc(·), where A is the event that the man wins and Ac is the event that he loses. The game is considered a “fair game” if the mathematical expectation, or mean value, of the random variable is zero. Determine p in terms of the amounts t1 and t2 in order that the game be fair.

SOLUTION We set  [W] = t1p − t2q = 0. Thus p/q = t2/t1 = r, from which we obtain, by a little algebra, p = r/(1 + r) = t2/(t1 + t2).

[W] = t1p − t2q = 0. Thus p/q = t2/t1 = r, from which we obtain, by a little algebra, p = r/(1 + r) = t2/(t1 + t2).

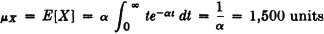

Example 5-3-5

The sales demand in a given period for an item of merchandise may be represented by a random variable X(·) whose distribution is exponential (Example 3-4-8). The density function is given by

Suppose 1/α = 1,500 units of merchandise. What amount must be stocked at the beginning of the period so that the probability is 0.99 that the sales demand will not exceed the supply? What is the “expected number” of sales in the period?

SOLUTION The first question amounts to asking what value of t makes FX(t) = u(t)[1 − e−αt] = 0.99. This is equivalent to making e−αt = 0.01. Use of a table of exponentials shows that this requires a value αt = 4.6. Thus t = 4.6/α = 6,900 units. The expected number of sales is interpreted as the mathematical expectation, or mean value

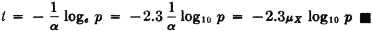

Note that this expectation may be obtained from the result of Example 5-1-2, with n = 1. The result above may be expressed in a general formula for the exponential distribution. If it is desired to make the probability of meeting sales demand equal to 1 − p, then e−αt = p or αt = −loge p, so that

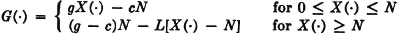

The next example involves some assumptions that may not be realistic in many situations, but it points to the role of probability theory in decision making. Even such an oversimplified model may often give insight into the alternatives offered in a decision process.

Example 5-3-6

A government agency has m research and development problems it would like to have solved in a given period of time and n contracts to offer n equally qualified laboratories that will work essentially independently on the problems assigned to them. Each laboratory has a probability p of solving any one of the problems which may be assigned to it. The agency is faced with the decision whether to assign contracts with a uniform number n/m (assumed to be an integer) of laboratories working on each problem or to let the laboratories choose (presumably in a manner that is essentially random) the problem that it will attack. Which policy offers the greater prospect of success in the sense that it will result in the solution of the larger number of problems?

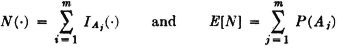

SOLUTION AND DISCUSSION We shall approach this problem by defining the random variable N(·) whose value is the number of problems solved and determine E[N] under each of the assignment schemes. If we let Aj be the event that the jth problem is solved, then

The problem reduces to finding the P(Aj). Consider the events

Bij = event ith laboratory works on jth problem

Ci = event ith laboratory is successful

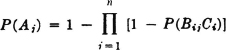

We suppose P(Ci) = p and P(Bij) depends upon the method of assignment. We suppose further that {Ci, Bij: 1 ≤ i ≤ n, 1 ≤ j ≤ m} is an independent class of events. Now

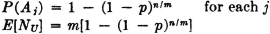

Uniform assignment: P(Bij) = 1 for n/m of the i for any j and 0 for the others; this means that 1 − P(BijCi) = 1 − p for n/m factors and = 1 for the others in the expression for P(Aj). Thus

Random assignment: P(Bij) = 1/m for each i and j. P(Bij Ci) = p/m. Therefore P(Aj) = 1 − (1 − p/m)n, and

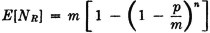

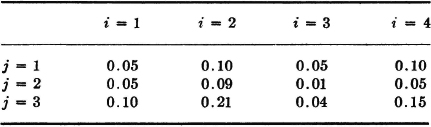

The following numerical values may be of some interest.

It would appear that for small p there is little advantage in a uniform assignment. When one allows for the fact that the probability of success is likely to increase if the laboratory chooses its problem, one would suspect that for difficult problems with low probability of success it might be better to allow the laboratories to choose. On the other hand, one problem might be attractive to all laboratories and the others unattractive to them all, resulting in several solutions to one problem and no attempt to solve the others. A variety of assumptions as to probabilities and corresponding methods of assignments must be tested before a sound decision can be made.

We turn next to a second parameter which is important in characterizing most probability distributions encountered in practice.

The mean value, introduced in the preceding section, determines the location of the center of mass of the probability distribution induced by a real-valued random variable. As such, it gives a measure of the central tendency of the values of the random variable. We next introduce a parameter which gives an indication of the spread, or dispersion, of values of the random variable about the mean value.

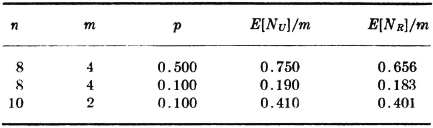

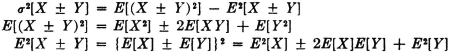

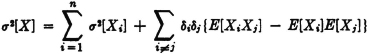

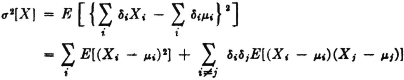

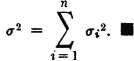

Consider a real-valued random variable X(·) whose square is integrable. The variance of X(·), denoted σ2[X], is given by

where  =

=  [X] is the mean value of X(·).

[X] is the mean value of X(·).

The standard deviation for X(·), denoted σ[X], is the positive square root of the variance.

We sometimes employ symbols σ or σX, to simplify writing.

A mechanical interpretation

As in the case of the mean value, we may give a simple mechanical interpretation of the variance in terms of the mass distribution induced by X(·). In integral form

To form an approximating integral, we divide the t axis into small intervals; the mass located in each interval is multiplied by the square of the distance from the center of mass  to a point in the interval; the sum is taken over all the intervals. The integral is thus the second moment about the center of mass; this is the moment of inertia of the mass about its center of mass. Since the total mass is unity, this is equal to the square of the radius of gyration of the mass distribution. The standard deviation is thus equal to the radius of gyration, and the variance to its square. As the mass is scattered more widely about the mean, the values of these parameters increase. Small variance, or standard deviation, indicates that the mass is located near its center of mass; in this case the probability that the random variable will take on values in a small interval about the mean value tends to be large.

to a point in the interval; the sum is taken over all the intervals. The integral is thus the second moment about the center of mass; this is the moment of inertia of the mass about its center of mass. Since the total mass is unity, this is equal to the square of the radius of gyration of the mass distribution. The standard deviation is thus equal to the radius of gyration, and the variance to its square. As the mass is scattered more widely about the mean, the values of these parameters increase. Small variance, or standard deviation, indicates that the mass is located near its center of mass; in this case the probability that the random variable will take on values in a small interval about the mean value tends to be large.

Some basic properties

Utilizing properties of mathematical expectation, we may derive a number of properties of the variance which are useful. We suppose the variances indicated in the following expressions exist; this means that the squares of the random variables are integrable.

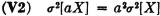

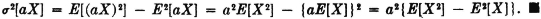

A restatement of the result obtained in Example 5-2-4 shows that

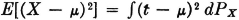

Multiplication by a constant produces the following effect on the variance.

PROOF

This property may be visualized in terms of the mass distribution. If |a| > 1, the variable aX(·) spreads the probability mass more widely about the mean than does X(·), with a consequent increase of the moment of inertia. For |a| < 1, the opposite condition is true. A change in sign produced by a negative value of a does not alter the spread of the probability mass about the center of mass.

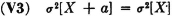

Adding a constant to a random variable does not change the variance. That is,

PROOF

The difference is equal to σ2[X] by (V1).

In terms of the mass distributions, the random variable X(·) + a induces a probability mass distribution which differs from that for X(·) only in being shifted by an amount a. This shifts the center of mass by an amount a. The spread about the center of mass is the same in both cases.

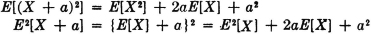

PROOF

Taking the difference and using (V1) gives the desired result.

An important simplification of the formula (V4) results if the random variables are independent, so that E[XY] = E[X]E[Y]. More generally, we have

(V5) If {Xi(·): 1 ≤ i ≤ n} is a class of random variables and  , where each δi has one of the values +1 or −1, then

, where each δi has one of the values +1 or −1, then

PROOF

If the product rule E[XiXj] = E[Xi]E[Xj] holds for all i ≠ j, then each of the terms in the final summation in (V5) is zero. In particular, this is the case if the class is independent. Random variables satisfying this product law for expectations are said to be uncorrelated.

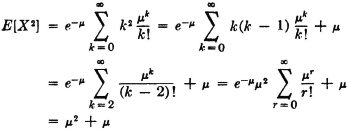

SOLUTION In Example 5-3-3 it is shown that E[X] =  .

.

From this it follows that

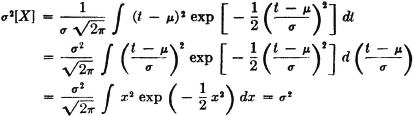

The normal distribution

We next obtain the variance for the normal, or gaussian, distribution and obtain some properties of this important distribution.

Example 5-4-5 The Normal Distribution

Find σ2[X] if X(·) has the normal distribution, as defined in Example 5-3-2.

SOLUTION

as may be verified from a table of integrals.

Definition 5-4b

A random variable X(·) is said to be normal ( , σ) iffi it is continuous and has the normal density function

, σ) iffi it is continuous and has the normal density function

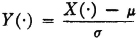

If X(·) is normal ( , σ), we may standardize it in the manner indicated in (V6). It is of considerable interest and importance that the standardized variable is also normal.

, σ), we may standardize it in the manner indicated in (V6). It is of considerable interest and importance that the standardized variable is also normal.

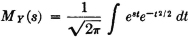

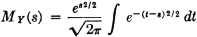

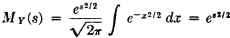

Theorem 5-4A

If X(·) is normal ( , σ), the standardized random variable

, σ), the standardized random variable

is normal (0, 1).

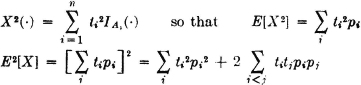

Let X(·) be a simple random variable given in canonical form by the expression

Let pi = P(Ai) and qi = 1 − pi. Determine σ2[X].

SOLUTION

Using (V1), we get

In the last summation, the index notation i < j is intended to indicate the sum of all distinct pairs of integers, with the pair (i, j) considered the same as the pair (j, i). The factor 2 takes account of the two possible ways any pair can appear.

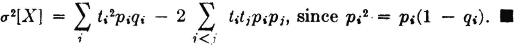

Example 5-4-3

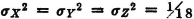

Suppose {Ai: 1 ≤ i ≤ n} is a class of pairwise independent events. Let  . Put P(Ai) = pi and qi.= 1 − pi. Determine σ2[X].

. Put P(Ai) = pi and qi.= 1 − pi. Determine σ2[X].

SOLUTION Since by Example 3-7-1, we may assert {aiIAi,(·): 1 ≤ i ≤ n} is a class of pairwise independent random variables, we may apply (V5), (V2), and the result of Example 5-4-1 to give

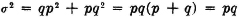

For the random variable giving the number of successes in Bernoulli trials (Example 5-2-2), Ai = Ei, ai = 1, and pi = p. Hence the random variable N(·) with the Binomial distribution has σ2[N] = npq.

Example 5-4-4 Poisson Distribution

The random variable  , with the Ak forming a disjoint class and with

, with the Ak forming a disjoint class and with  , has the Poisson distribution. Determine σ2[X].

, has the Poisson distribution. Determine σ2[X].

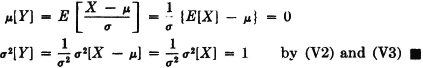

It is frequently convenient to make a transformation from a random variable X(·) having a specified mean and variance to a related random variable having zero mean and unit variance. This may be done as indicated in the following:

(V6) Consider the random variable X(·) with mean  [X] =

[X] =  and standard deviation σ[X] = σ. Then the random variable

and standard deviation σ[X] = σ. Then the random variable

has mean  [Y] = 0 and standard deviation σ[Y] = 1.

[Y] = 0 and standard deviation σ[Y] = 1.

PROOF

Some examples

We now consider a number of simple but important examples.

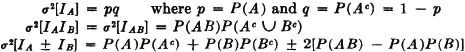

Example 5-4-1 The Indicator Function IA (·)

PROOF AND DISCUSSION σ2[IA] = E[IA2] − E2[IA]. Since IA(·) has values only zero or one, IA2(·) = IA(·). Using the fact that E[IA] = P(A) = p, we have σ2[IA] = p − p2 = p(1 − p) = pq. This problem may also be viewed in terms of the mass distribution with the aid of Fig. 5-4-1. Mass p = P(A) is located at t = 1, and mass q is located at t = 0. The center of mass is at t = p. Summing the products of the mass times the square of its distance from the center of mass yields

The second case follows from the first and the fact that (AB)c = Ac ∪ Bc.

The third relation comes from a direct application of (V4).

Fig. 5-4-1 Variance for the indicator function IA(·).

PROOF This result may be obtained easily from the result of Example 3-9-4. Putting a = 1/σ, which is positive, and b = − /σ, we have

/σ, we have

Substituting συ +  for t in the expression for fX(t) gives

for t in the expression for fX(t) gives

which is the condition required.

It is customary to use special symbols for the distribution and density functions for a random variable X(·) which is normal (0, 1). In this case it is customary to designate FX(·) by Φ(·) and fX(·) by  (·). Extensive tables of these functions are found in many places. The general shape of the graphs for these functions is given in Fig. 3-4-4. By letting

(·). Extensive tables of these functions are found in many places. The general shape of the graphs for these functions is given in Fig. 3-4-4. By letting  = 0 and adjusting the scale for σ = 1, these become curves for Φ(t) and

= 0 and adjusting the scale for σ = 1, these become curves for Φ(t) and  (t) as shown in Fig. 5-4-2.

(t) as shown in Fig. 5-4-2.

Fig. 5-4-2 (a) Distribution function Φ(·) and (b) density function  (·) for a random variable normal (0, 1).

(·) for a random variable normal (0, 1).

so that tables of Φ(·) and  (·) suffice for any normally distributed random variable. As an illustration of this fact, consider the following

(·) suffice for any normally distributed random variable. As an illustration of this fact, consider the following

Example 5-4-6

A random variable X(·) is normal ( , σ). What is the probability that it does not differ from its mean value by more than k standard deviations?

, σ). What is the probability that it does not differ from its mean value by more than k standard deviations?

SOLUTION  . Since (X −

. Since (X −  )/σ is normal (0, 1), the desired probability is Φ(k) − Φ (−k). This may be put into another form which requires only values of Φ(·) for positive k, by utilizing the symmetries of the distribution or density function. From the curves in Fig. 5-4-2, it is apparent that Φ(−k) = 1 − Φ(k), so that Φ(k) − Φ(−k) = 2Φ(k) − 1.

)/σ is normal (0, 1), the desired probability is Φ(k) − Φ (−k). This may be put into another form which requires only values of Φ(·) for positive k, by utilizing the symmetries of the distribution or density function. From the curves in Fig. 5-4-2, it is apparent that Φ(−k) = 1 − Φ(k), so that Φ(k) − Φ(−k) = 2Φ(k) − 1.

This example merely serves to illustrate how the symmetries of the normal distribution and density functions may be exploited to simplify or otherwise modify expressions for probabilities of normally distributed random variables. Once these symmetries are carefully noted, the manner in which they may be utilized becomes apparent in a variety of situations. Extensive tables of Φ(·),  (·), and related functions are to be found in most handbooks, as well as in books on statistics or applied probability. The table below provides some typical values which are helpful in making estimates.

(·), and related functions are to be found in most handbooks, as well as in books on statistics or applied probability. The table below provides some typical values which are helpful in making estimates.

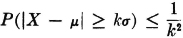

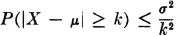

The inequality of Chebyshev

We have seen that the standard deviation gives an indication of the spread of possible values of a random variable about the mean. This fact has been given analytical expression in the following inequality of Chebyshev (spelled variously in the literature as Tchebycheff or Tshebysheff).

Theorem 5-4B Chebyshev Inequality

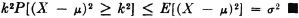

Let X(·) be any random variable whose mean  and standard deviation σ exist. Then

and standard deviation σ exist. Then

or, equivalently,

PROOF Since |X −  | ≥ k iffi (X −

| ≥ k iffi (X −  )2 ≥ k2, we may use property (E7) with g(X) = (X −

)2 ≥ k2, we may use property (E7) with g(X) = (X −  )2 to assert

)2 to assert

The first form of the inequality is interesting in that it shows the effective spread of probability mass to be measured in multiples of σ. Thus the standard deviation provides an important unit of measure in evaluating the spread of probability mass. This fact is also illustrated in Example 5-4-6. It should be noted that the Chebyshev inequality is quite general in its application. It has played a significant role in providing the estimates needed to prove various limit theorems (Chap. 6). Numerical examples show, however, that it does not provide the best possible estimate of the probability in special cases. For example, in the case of the normal distribution, Chebyshev’s estimate for k = 2 is  , whereas from the table above for the normal distribution, it is apparent that

, whereas from the table above for the normal distribution, it is apparent that

Another comparison is made for an important special case in Sec. 6-2. The general inequality is useful, however, for estimates in situations where detailed information concerning the distribution of the random variable is not available.

A number of other special mathematical expectations are used extensively in mathematical statistics to describe important characteristics of a probability mass distribution. For a careful treatment of some of the more important of these, one may consult any of a number of works in mathematical statistics (e.g., Brunk [1964], Cramér [1946], or Fisz [1963]).

It may be well at this point to make a new comparison of the situation in the real world, in the mathematical model, and in the auxiliary model, as we have done previously.

The process of sampling is fundamental in statistics. In Examples 2-8-9 and 2-8-10, the idea of random sampling is touched upon briefly. In this section, we examine the idea in terms of random variables.

Suppose a physical quantity is under observation in some sort of experiment. A sequence of n observations (t1, t2, …, tn) is recorded. Under appropriate conditions, these observations may be considered to be n observations, or “samples,” of a single random variable X(·), having a given probability distribution. A more natural way to consider these observations, from the viewpoint of our mathematical model, is to think of them as a single observation of the values of a class of random variables {X1(·), X2(·), …, Xn(·)}, where t1 = X1( ), t2 = X2(

), t2 = X2( ), etc.

), etc.

Definition 5-5a

If the conditions of the physical sampling or observation process described above are such that

1. The class {X1(·), X2(·), …, Xn(·)} is an independent class, and

2. Each random variable Xi(·) has the same distribution as does X(·), the set of observations is called a random sample of size n of the variable X(·).

This model seems to be a satisfactory one in many physical sampling processes. The concept of stochastic independence is the mathematical counterpart of an assumed “physical independence” between the various members of the sample. The condition that the Xi(·) have the same distribution seems to be met when the sampling is from an infinite population (that is, one so large that the removal of a unit does not appreciably affect the distribution in the population) or from a finite population with replacement.

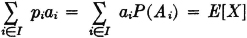

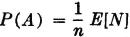

When a sample is taken, it is often desirable to average the set of values observed. The significance of this operation from the point of view of the probability model may be obtained as follows. First we make the

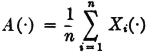

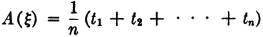

The random variable A(·) given by the expression

is known as the sample mean.

Each choice of an elementary outcome  corresponds to the choice of a set of values (t1, t2, …, tn). But this also corresponds to the choice of the number

corresponds to the choice of a set of values (t1, t2, …, tn). But this also corresponds to the choice of the number

which is the sample mean. The following simple but important theorem shows the significance of the sample mean in statistical analysis.

Theorem 5-5A

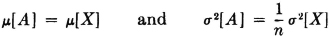

If A(·) is the sample mean for a sample of size n of the random variable X(·), then

provided  [X] and σ2[X] exist

[X] and σ2[X] exist

PROOF The first relation is a direct result of the linearity property for expectations, and the second is a direct result of the additivity property (V5) for variances of independent random variables. Thus

This theorem says that the mean of the sample mean is the same as the mean of the random variable being sampled. The variance of the sample mean, however, is only 1/n times the variance of the random variable being sampled. In view of the role of the variance in measuring the spread of possible values of the random variable about its mean, this indicates that the values of the sample mean can be concentrated about the mean by taking large enough samples. This may be given analytical formulation with the help of the Chebyshev inequality (Theorem 5-4B). If we put  [X] =

[X] =  and σ2[X] = σ2, we have

and σ2[X] = σ2, we have

For any given k we can make the probability that A(·) differs from  by more than kσ as small as we please by making the sample size n sufficiently large.

by more than kσ as small as we please by making the sample size n sufficiently large.

In various situations of practical interest, much better estimates are available than that provided by Chebyshev inequality. One of the central problems of mathematical statistics is to study such estimates. For an introduction to such studies, one may refer to any of a number of standard works on statistics (e.g., Brunk [1964] or Fisz [1963]).

Probability ideas are being applied to problems of communication, control, and data processing in a variety of ways. One of the important aspects of this statistical communication theory is the so-called information theory, whose foundations were laid by Claude Shannon in a series of brilliant researches carried out in the 1940s at Bell Telephone Laboratories. In this section, we sketch briefly some of the central ideas of information theory and see how they may be given expression in terms of the concepts of probability theory.

We suppose the communication system under study transmits signals in terms of some known, finite set of symbols. These may be the symbols of an alphabet, words chosen from a given code-word list, or messages from a prescribed set of possible messages. For convenience, we refer to the unit of signal under consideration as a message. The situation assumed is this: when a signal conveying a message is received, it is known that one of the given set of possible messages has been sent. The usual communication channel is characterized by noise or disturbances of a “random” character which perturb the transmitted signal. The actual physical process may be quite complicated.

The behavior of such a system is characterized by uncertainties. The problem of communication is to remove these uncertainties. If there were no uncertainty about the message transmitted over a communication system, there would be no real reason for the system. All that is needed in this case is a device to generate the appropriate signal at the receiving station. There are two sources of uncertainty in the communication system:

1. The message source (whether a person or a physical device under observation). It is not known with certainty which of the possible messages will be sent at any given transmission time.

2. The transmission channel. Because of the effects of noise, it is not known with certainty which signal was transmitted even when the received signal is known.

This characterization of a communication system points to two fundamental ideas underlying the development of a model of information theory.

1. The signal transmitted (or received) is one of a set of conceptually possible signals. The signal actually sent (or received) is chosen from this set.

2. The receipt of information is equivalent to the removal of uncertainty.

The problem of establishing a model for information theory requires first the identification of a suitable measure of information or uncertainty.

Shannon, in a remarkable work [1948], identified such a concept and succeeded in establishing the foundations of a mathematical theory of information. In this theory, uncertainty is related to probabilities. Shannon showed that a reasonable model for a communication source describes the source in terms of probabilities assigned to the various messages and subsets of messages in the basic set. The effects of noise in the communication channel are characterized by conditional probabilities. A given signal is transmitted. The signal is perturbed in such a manner that one can assign, in principle at least, conditional probabilities to each of the possible received signals, given the particular signal transmitted.

In this theory, the receipt of information is equivalent to the removal of uncertainty about which of the possible signals is transmitted. The concept of information employed has nothing to do with the meaning of the message or with its value to the recipient. It simply deals with the problem of getting knowledge of the correct message to the person at the receiving end.

For a review of the ideas which led up to the formulation of the mathematical concepts, Shannon’s original papers are probably the best source available. We simply begin with some definitions, due principally to Shannon, but bearing the mark of considerable development by various workers, notably R. M. Fano and a group of associates at the Massachusetts Institute of Technology (Fano [1961] or Abramson [1963]). The importance of the concepts defined rests upon certain properties and the interpretation of these properties in terms of the communication problem.

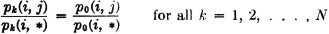

Probability schemes and uncertainty

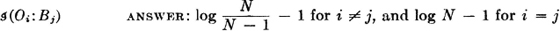

The output of an information source or of a communication channel at any given time can be considered to be a random variable. This may be a random variable whose “values” are nonnumerical, but this causes no difficulty. The random variable indicates in some way which of the possible messages is chosen (transmitted or received). We limit ourselves to the case where the number of possible messages is finite. Information theory depends upon the identification of which of the possible outcomes has been realized. Once this is known, it is assumed that the proper signal can be generated (i.e., that the “value” is known). If the random variable is

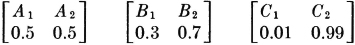

where mi represents the ith message, and Ai is the event that the message mi is sent, there is uncertainty about which message mi will be sent. This means that there is uncertainty about which event in the partition {Ai: i ∈ J} will occur. This uncertainty must be related in some manner to the set of probabilities {P(Ai): i ∈ J} for the events in the partition. For example, consider three possible partitions of the basic space into two events (corresponding to two possible messages):

It is intuitively obvious that the first system, or scheme, indicates the greatest uncertainty as to the outcome; the next two schemes indicate decreasing amounts of uncertainty as to which event may be expected to occur. One may test his intuition by asking himself how he would bet on the outcome in each case. There is little doubt how one would bet in the third case; one would not bet with as much confidence in the second case; and in the first case the outcome would be so uncertain that he may reasonably bet either way. In evaluating more complicated schemes, or in comparing schemes with different numbers of events, the amounts of uncertainty may not be obvious. The problem is to find a measure which assigns a number to the uncertainty of the outcome. Actually, Shannon’s measure is a measure of the average uncertainty for the various events in the partition; and as the examples above suggest, this measure must be a function of the set of probabilities of the events in the partition. A number of intuitive and theoretical considerations led to the introduction of a measure of uncertainty which depends upon the logarithms of the probabilities. We shall not attempt to trace the development that led to this choice, but shall simply introduce the measure discovered by Shannon (and anticipated by others) and see that the properties of such a measure admit of an appropriate interpretation in terms of uncertainty.

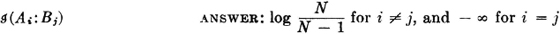

Mutual and self-information of individual events

Shannon’s measure refers to probability schemes consisting of partitions and the associated set of probabilities. It is helpful, however, to begin with the idea of the mutual information in any two events; these events may or may not be given as members of a partition. At this point we simply make a mathematical definition. The justification of this definition must rest on its mathematical consequences and on the interpretations which can be made in terms of uncertainties among events in the real world.

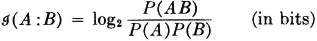

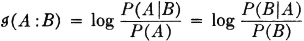

Definition 5-6a

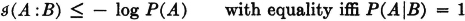

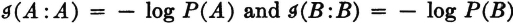

The mutual information in two events A and B is given by the expression

It should be noted that the logarithm in the definition is to the base 2. If any other base had been chosen, the result would be to multiply the expression above by an appropriate constant. This is equivalent to a scale change. Unless otherwise indicated, logarithms in this section are taken to base 2, in keeping with the custom in works on information theory. With this choice of base, the unit of information is the bit; the term was coined as a contraction of the term binary digit. Reasons for the choice of this term are discussed in practically all the introductions to information theory.

A number of facts about the properties of mutual information are easily derived. First we note a symmetry property which helps justify the term mutual.

The mutual information in events A and B is the same as the mutual information in events B and A. The order of considering or listing is not important. The function may also be written in a form which hides the symmetry but which leads to some relationships that are important in the development of the topic.

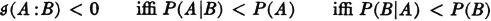

An examination of the possibilities shows that mutual information may take on any real value, positive, negative, or zero. That is,

The phenomenon of negative mutual information usually surprises the beginner. The following argument shows the significance of this condition. From the form above, which uses conditional probabilities, it is apparent that

Use of elementary properties of conditional probabilities verifies the equivalence of the last two inequalities as well as the fact that

so that

We may thus say that the mutual information between A and B is negative under the condition that the occurrence of B makes the nonoccurrence of A more likely or the occurrence of A makes the nonoccurrence of B more likely. It is also easy to see from the definition that

The mutual information is zero if the occurrence of one event does not influence (or condition) the probability of the occurrence of the other.

We may rewrite the expression, using conditional probabilities in the following manner to exhibit an important property.

It is apparent that

In particular,  . The mutual information of an event with itself is naturally interpreted as an information property of that event. It is thus natural to make the

. The mutual information of an event with itself is naturally interpreted as an information property of that event. It is thus natural to make the

Definition 5-6b

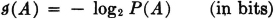

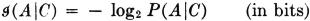

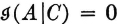

The self-information in an event A is given by

The self-information is nonnegative; it has value zero iffi P(A) = 1, which means there is no uncertainty about the occurrence of A. In this case, its occurrence removes no uncertainty, hence conveys no information.

Parallel definitions may be made when the probabilities involved are conditional probabilities.

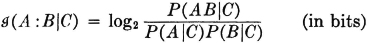

The conditional mutual information in A and B, given C, is

The conditional self-information in A, given C, is

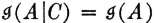

Properties of the conditional-information measures are similar to those for the information, since conditional probability is a probability measure. Some interpretations are of interest. The conditional self-information is nonnegative.  iffi P(A|C) = 1. This means that there is no uncertainty about the occurrence of A, given that C is known to have occurred.

iffi P(A|C) = 1. This means that there is no uncertainty about the occurrence of A, given that C is known to have occurred.  iffi A and C are independent. Independence of the events A and C implies that knowledge of the occurrence of C gives no knowledge of the occurrence of A.

iffi A and C are independent. Independence of the events A and C implies that knowledge of the occurrence of C gives no knowledge of the occurrence of A.

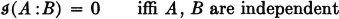

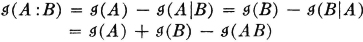

Some simple but important relations between the concepts defined above are of interest.

The last relation may be rewritten

These relations may be given interpretations in terms of uncertainties. We suppose  is the uncertainty about the occurrence of event A (i.e., the information received when event A occurs). If event B occurs, it may or may not change the uncertainty about event A. We suppose

is the uncertainty about the occurrence of event A (i.e., the information received when event A occurs). If event B occurs, it may or may not change the uncertainty about event A. We suppose  is the residual uncertainty about A when one has knowledge of the occurrence of B. We may interpret

is the residual uncertainty about A when one has knowledge of the occurrence of B. We may interpret  and

and  similarly. Then, according to the first relation,

similarly. Then, according to the first relation,  is the information about A provided by the occurrence of the event B; similarly, the mutual information is the information about B provided by the occurrence of A. The last relation above may then be interpreted to mean that the information in the joint occurrence of events A and B is the information in the occurrence of A plus that in the occurrence of B minus the mutual information. Since

is the information about A provided by the occurrence of the event B; similarly, the mutual information is the information about B provided by the occurrence of A. The last relation above may then be interpreted to mean that the information in the joint occurrence of events A and B is the information in the occurrence of A plus that in the occurrence of B minus the mutual information. Since  iffi A and B are independent, the information in the joint occurrence AB is the sum of the information in A and in B iffi the two events are independent.

iffi A and B are independent, the information in the joint occurrence AB is the sum of the information in A and in B iffi the two events are independent.

We turn next to the problem of measuring the average uncertainties in systems or schemes in which any one of several outcomes is possible. In order to be able to speak with precision and economy of statement, we formalize some notions and introduce some terminology.

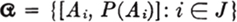

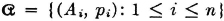

The concept of a partition has played an important role in the development of the theory of probability. For a given probability measure defined on a suitable class of events, there is associated with each partition a corresponding set of probabilities. If the partition is

there is a corresponding set of probabilities {pi = P(Ai): i ∈ J} with the properties

It should be apparent that, if we were given any set of numbers pi satisfying these two conditions, we could define a probability space and a partition such that these are the probabilities of the members of the partition. It is frequently convenient to use the following terminology in dealing with such a set of numbers:

Definition 5-6d

If {pi: i ∈ J} is a finite or countably infinite set of nonnegative numbers whose sum is 1, we refer to this indexed or ordered set as a probability vector. If there is a finite number n of elements, it is sometimes useful to refer to the set as a probability n-vector.

The combination of a partition and its associated probability vector we refer to according to the following

Definition 5-6e

If  is a finite or countably infinite partition with associated probability vector {P(Ai): i ∈ J}, we speak of the collection

is a finite or countably infinite partition with associated probability vector {P(Ai): i ∈ J}, we speak of the collection  as a probability scheme. If the partition has a finite number of members, we speak of a finite (probability) scheme.

as a probability scheme. If the partition has a finite number of members, we speak of a finite (probability) scheme.

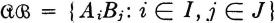

The simple schemes used earlier in this section to illustrate the uncertainty associated with such schemes are special cases having two member events each. It is easy to extend this idea to joint probability schemes in which the partition is a joint partition  of the type discussed in Sec. 2-7.

of the type discussed in Sec. 2-7.

If  is a joint partition, the collection

is a joint partition, the collection  is called a joint probability scheme.

is called a joint probability scheme.

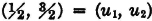

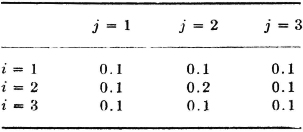

This concept may be extended in an obvious way to the joint scheme for three or more schemes. In the case of the joint scheme  , we must have the following relationships between probability vectors. We let

, we must have the following relationships between probability vectors. We let

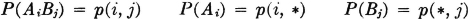

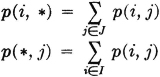

Then (p(i, j): i ∈ I, j ∈ J}, {p(i, *): i ∈ I}, and {p(*, j):j ∈ J} are all probability vectors. They are related by the fact that

Definition 5-6g

Two schemes  and

and  are said to be independent iffi

are said to be independent iffi

P(AiBj) = P(Ai)P(Bj)

for all i ∈ I and j ∈ J.

Average uncertainty for probability schemes

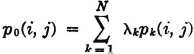

We have already illustrated the fact that the uncertainty as to the outcome of a probability scheme is dependent in some manner on the probability vector for the scheme. Also, we have noted that in a communication system the probability scheme of interest is that determined by the choice of a message from among a set of possible messages. Shannon developed his very powerful mathematical theory of information upon the following basic measure for the average uncertainty of the scheme associated with the choice of a message.

Definition 5-6h

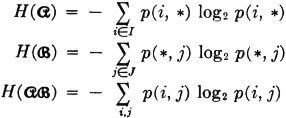

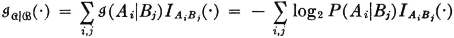

For schemes  , and

, and  , described above, we define the entropies

, described above, we define the entropies

The term entropy is used because the mathematical functions are the same as the entropy functions which play a central role in statistical mechanics. Numerous attempts have been made to relate the entropy concept in communication theory to the entropy concept in statistical mechanics. For an interesting discussion written for the nonspecialist, one may consult Pierce [1961, chaps. 1 and 10]. For our purposes, we shall be concerned only to see that the functions introduced do have the properties that one would reasonably demand of a measure of uncertainty or information.

Since the partitions of interest in the probability schemes are related to random variables X(·) and Y(·) whose values are messages (or numbers representing the messages), the corresponding entropies are often designated H(X), H(Y), and H(XY). The random variables rather than the schemes are displayed by this notation. For our purposes, it seems desirable to continue with the notation designating the scheme.

We have alluded to the fact that the value of the entropy in some sense measures the average uncertainty for the scheme. We wish to see that this is the case, provided the self- and mutual-information concepts introduced earlier measure the uncertainty associated with any single event or pair of events.

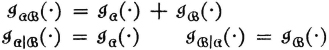

First let us note that if we have a sum of the form

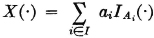

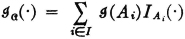

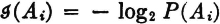

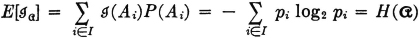

where the pi are components of the probability vector for a scheme, this sum is in fact the mathematical expectation of a random variable. We suppose {Ai: i ∈ I} is a partition and that pi = P(Ai) for each i. Then if we form the random variable (in canonical form)

it follows immediately from the definition of mathematical expectation that

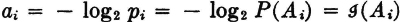

Now suppose we let  . That is, ai is the self-information of the event Ai. We define the random variable

. That is, ai is the self-information of the event Ai. We define the random variable  by

by

This is the random variable whose value for any  is the self-information

is the self-information  for the event Ai which occurs when this

for the event Ai which occurs when this  is chosen. The mathematical expectation or probability-weighted average for this random variable is

is chosen. The mathematical expectation or probability-weighted average for this random variable is

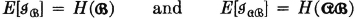

We may define similar random variables  and

and  for the schemes

for the schemes  and

and  and have as a result

and have as a result

We may also define the random variable  whose value for any

whose value for any  ∈ AiBj is the conditional self-information in Ai, given Bj. That is,

∈ AiBj is the conditional self-information in Ai, given Bj. That is,

In a similar manner the variable  may be defined. It is also convenient to define the random variable

may be defined. It is also convenient to define the random variable

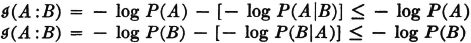

Conditions for independence of the schemes  and

and  show that the following relations hold iffi

show that the following relations hold iffi  and

and  are independent:

are independent:

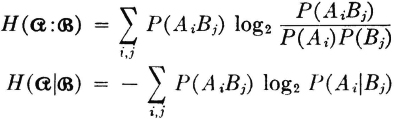

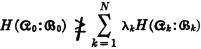

In terms of these functions we may define two further entropies:

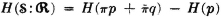

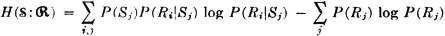

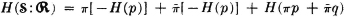

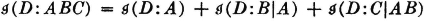

These entropies may be expanded in terms of the probabilities as follows:

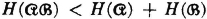

Linearity properties of the mathematical expectation show that various relations developed between information expressions for events can be extended to the entropies. Thus

Interpretations for these expressions parallel those for the information expressions, with the addition of the term average.

The following very simple example deals with a system studied extensively in information theory. It shows how the entropy concepts appear in this theory.

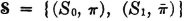

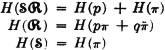

Example 5-6-1 Binary Symmetric Channel

In information theory, a binary channel is one which transmits a succession of binary symbols, which may be represented by 0 and 1 (see also Example 2-5-7). We suppose the system deals independently with each successive symbol, so that we may study the behavior in terms of one symbol at a time. Let S0 be the event a 0 is sent and S1 be the event a 1 is sent; let R0 and R1 be the events of receiving a 0 or a 1, respectively. If the probabilities of the two input events are P(S0) = π and P(S1) =  , then the input scheme (which characterizes the message source) consists of the collection

, then the input scheme (which characterizes the message source) consists of the collection  . The channel is characterized by the conditional probabilities of the outputs, given the inputs. The channel is referred to as a binary symmetric channel (BSC) if P(R1|S0) = P(R0|S1) = p and P(R0|S0) = P(R1|S1) = q, where q = 1 − p; that is, the probability that an input digit is changed into its opposite is p and the probability that an input digit is transmitted unchanged is q. These probability relations are frequently represented schematically as in Fig. 5-6-1.

. The channel is characterized by the conditional probabilities of the outputs, given the inputs. The channel is referred to as a binary symmetric channel (BSC) if P(R1|S0) = P(R0|S1) = p and P(R0|S0) = P(R1|S1) = q, where q = 1 − p; that is, the probability that an input digit is changed into its opposite is p and the probability that an input digit is transmitted unchanged is q. These probability relations are frequently represented schematically as in Fig. 5-6-1.

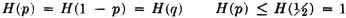

The binary entropy function H(p) = −p log2 p − q log2 q plays an important role in the theory of binary systems. [Note that we have used H(·) in a different sense here. This is in keeping with a custom that need cause no real difficulty]. A sketch of this function against the value of the transition probability p is shown in Fig. 5-6-2. Two characteristics important for our purposes in this example are evident in that figure. These are easily checked analytically.

If we let  be the output scheme consisting of the partition {R0, R1} and the associated probability vector, we may calculate the average mutual information between the input and output schemes and show it to be

be the output scheme consisting of the partition {R0, R1} and the associated probability vector, we may calculate the average mutual information between the input and output schemes and show it to be

To see this, we write

Fig. 5-6-1 Schematic representation of the binary symmetric channel.

Fig. 5-6-2 The binary entropy function

Substituting and recognizing the form for the binary entropy function, we obtain

from which the desired result follows because  . This function gives the average information about the input provided by the receipt of symbols at the output. If we set the input probabilities equal to

. This function gives the average information about the input provided by the receipt of symbols at the output. If we set the input probabilities equal to  , so that either input symbol is equally likely, the resulting value is

, so that either input symbol is equally likely, the resulting value is

which is a maximum, since the maximum value of H(·) is 1 and it occurs when the argument is  .

.

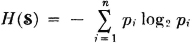

The entropy for an input-message scheme plays a central role in the theory of information. If a source produces one of n messages {mi: 1 ≤ i ≤ n} with corresponding probabilities {pi: 1 ≤ i ≤ n}, the source entropy  is given by

is given by

A basic coding theorem shows that these messages may be represented by a binary code in which the probability-weighted average L of the word lengths satisfies

By considering coding schemes in which several messages are coded in groups, it is possible to make the average code-word length as close to the source entropy as desired. This is done at the expense of complications in coding, of course. Roughly speaking, then, the source entropy in bits (i.e., when logarithms are to base 2) measures the minimum number of binary digits per code word required to encode the source. It is interesting to note that the codes which achieve this minimum length do so by assigning to each message mi a binary code word whose length is the integer next larger than the self-information −log2 pi for that message. In the more sophisticated theory of coding for efficient transmission in the presence of noise, the source entropy and the mutual and conditional entropies for channel input and output play similar roles. It is outside the scope of this book to deal with these topics, although some groundwork has been laid. For a clear, concise, and easily readable treatment, one may consult the very fine work by Abramson [1963], who deals with simple cases in a way that illuminates the content and essential character of the theory.

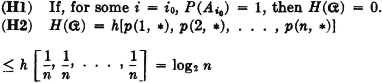

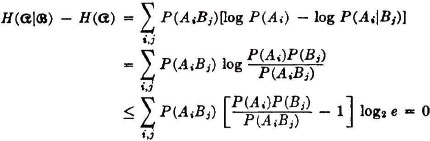

We shall conclude this section by deriving several properties of the entropy functions which strengthen the interpretation in terms of uncertainty or information. In doing so, we shall find the following inequality and some inequalities derived from it very useful.

with equality iffi x = 1. This inequality follows from the fact that the curve y = loge x is tangent to the line y = x − 1 at x = 1 while the slope of y = loge x is a decreasing function of x.

In calculations with entropy expressions, we note that x log x is 0 when x has the value 1. Since x log x approaches 0 as x goes to 0, we define 0 log 0 to be 0.

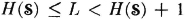

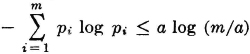

Consider two probability vectors {pi: 1 ≤ i ≤ n} and {qi: 1 ≤ i ≤ n}. Then we show that

with equality iffi pi = qi for each i. To show this, we note

Equality holds iffi qi/pi = 1 for each i.

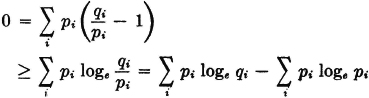

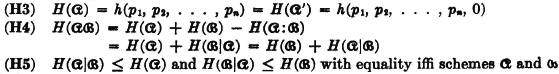

We now collect some properties and relations for entropies which are important in information theory. Some of these properties have already been considered above.

with equality iffi p(i, *) = 1/n for each i.

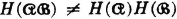

are independent schemes.

Equality holds iffi P(AiBj) = P(Ai)P(Bj) for each i, j.

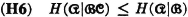

PROOF The proof is similar to that in (H5), but the details are somewhat more complicated. We omit the proof.

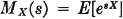

Property (H1) indicates that the average uncertainty in a scheme is zero if there is probability 1 that one of the events  will occur. Property (H2) says that the average uncertainty is a maximum iffi the events of the scheme are all equally likely. Property (H3) indicates that no change in the uncertainty of a scheme is made if an event of zero probability is split off as a separate event. Property (H4) has been discussed above. Property (H5) says that conditioning (i.e., giving information about one scheme) cannot increase the average uncertainty about the other. Property (H6) extends this result to conditioning by several schemes.

will occur. Property (H2) says that the average uncertainty is a maximum iffi the events of the scheme are all equally likely. Property (H3) indicates that no change in the uncertainty of a scheme is made if an event of zero probability is split off as a separate event. Property (H4) has been discussed above. Property (H5) says that conditioning (i.e., giving information about one scheme) cannot increase the average uncertainty about the other. Property (H6) extends this result to conditioning by several schemes.