Network Function Virtualization

Abstract

In this chapter, we define what Network Function Virtualization is in our view. We also take the time to discuss what its key components are and, more importantly, what they are not.

Keywords

Network function virtualization; NFV; COTS; service function chaining; SFC

Introduction

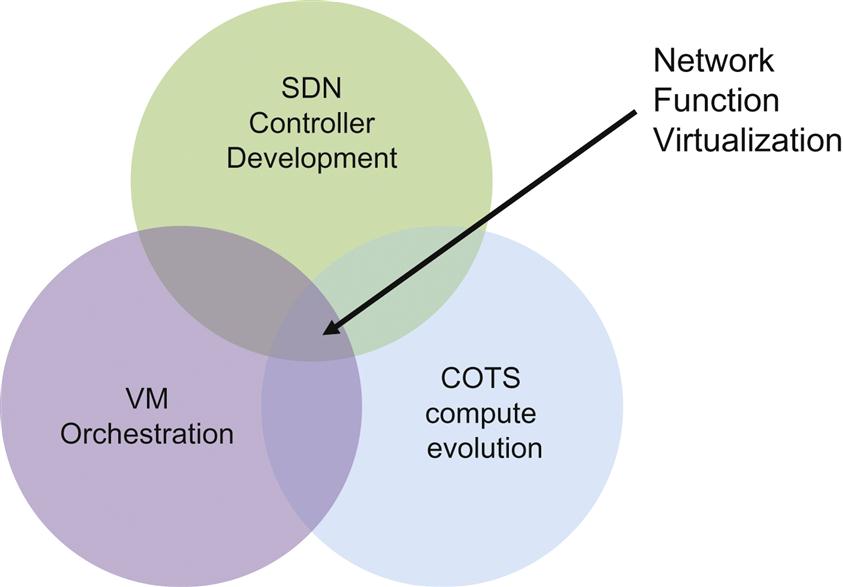

Arguably, Network Function Virtualization (NFV) builds on some of the basic and now salient concepts of SDN. These include control/data plane separation, logical centralization, controllers, network virtualization (logical overlays), application awareness, application intent control, and the trend of running all of these on commodity (Commercial Off-The-Shelf (COTS)) hardware platforms. NFV expands these concepts with new methods in support of service element interconnectivity such as Service Function Chaining (SFC) and new management techniques that must be employed to cope with its dynamic, elastic capabilities.

NFV is a complex technical and business topic. This book is structured to cover the key topics in both of these areas that continue to fuel discussions around both proofs of concept and ultimate deployments of NFV.

In this chapter, we will first review the evolution of NFV from its origins in proprietary physical hardware, through its first stage of evolution with the onset of virtualization and then SDN concepts, to what exists at present. We will also show how the most recent SDN requirements, and NFV requirements specifically, have evolved over the short course of two years into a consistent set of requirements that are now appearing on network operator RFPs/RFQs.

By the end of the chapter, we will define what NFV is fundamentally about (and support that definition through the material in the remaining chapters).

As was the case with SDN, our definition may not include the hyperbolic properties you hear espoused by invested vendors or endorse the architectures proposed by ardent devotees of newly evolved interest groups attempting to “standardize” NFV. Rather, we will offer a pragmatic assessment of the value proposition of NFV.

Background

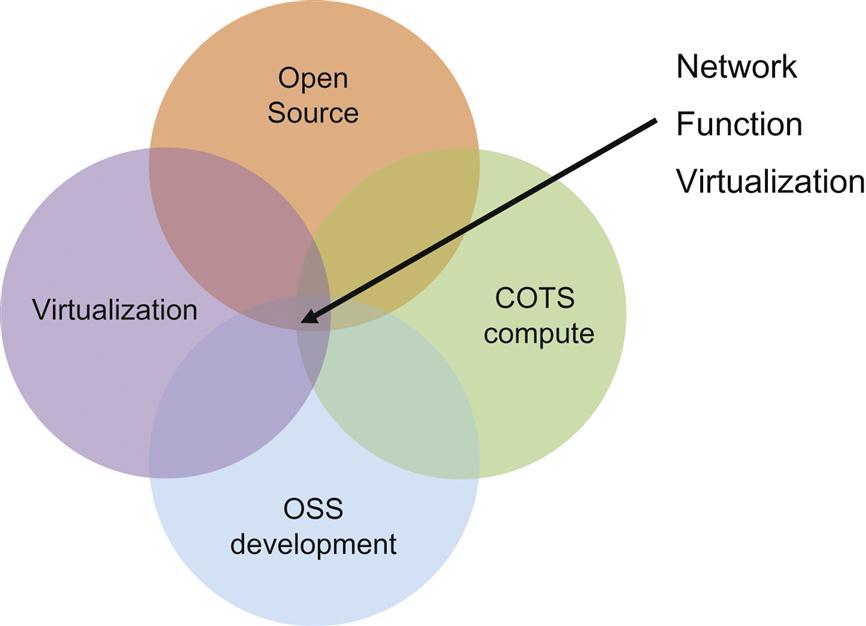

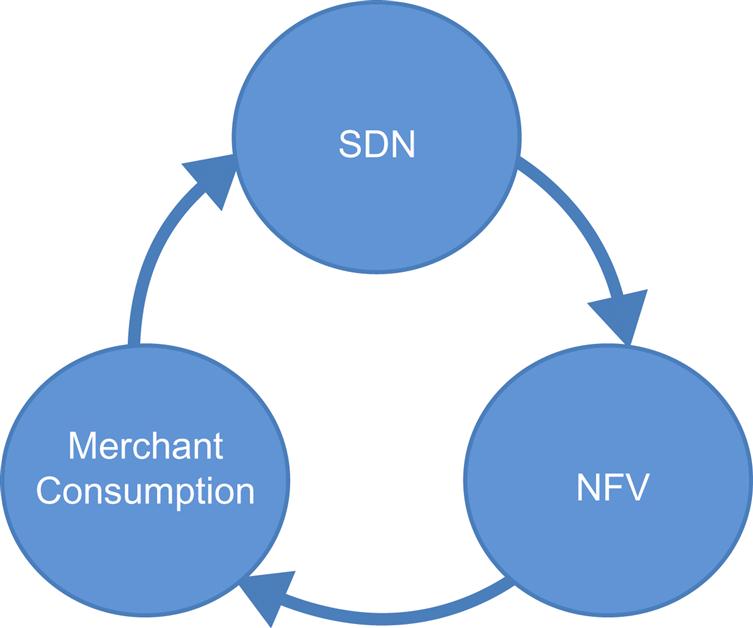

In 2013, in our first book covering SDN1 we described NFV as an application that would manage the splicing together of virtual machines that were deployed as virtualized services (eg, vFirewall) in collaboration with an SDN controller. While the NFV concept continues to have Enterprise application relevance, the evolution of the idea was triggered by a broader set of political/economic realities in the Service Provider industry and technical innovations in areas adjacent and critical to NFV (shown in Fig. 1.1).

The pressure that network operators had begun to feel when we wrote about SDN in 2013 accelerated into real challenges to their businesses.

• What began as an Over The Top (OTT) video and social media incursion into their broadband customer base expanded into OTT service offerings to their business customers. The outsourcing of Enterprise IT to cloud providers positioned these new competitors as potentially more relevant IT partners. Many were now seeing competition in traditional services like virtual private networking (VPN).

• Wireline operators in particular faced large and long-delayed transitions in copper-based services.

• Wireless operators were also facing a potentially expensive architecture change in the move to LTE to accommodate the growing demand for data services. Complicating this was the growing presence of WiFi, while competition put pressure on pricing.

These circumstances led investors to pressure traditional service providers to increase operational agility, particularly around service agility (reducing costs through automation, just-in-time provisioning, resource pooling, etc.) and innovation (creating new, sticky, and potentially individualized services). The intended result was twofold: to compete with (or at least find a stable position against) the OTT threat and avoid relegation to mere transport providers, and to provide new revenue sources.

Many operators had both wireline and wireless operations that they were seeking to consolidate to cut costs and increase efficiency. Here the impacts are seen largely in reducing operational and capital expense (OPEX and CAPEX).

Meanwhile, virtualization concepts evolved from more Enterprise-centric virtual machine operations (eg, VM-motion) to more compose-able and scalable constructs like containers (in public and private clouds), and open source alternatives in orchestration matured.

Optimized performance for virtualization infrastructure started to receive a huge push through efforts like Intel’s Data Plane Development Kit (DPDK)-enabled version of Open vSwitch (OVS), making the potential throughput of virtualized network functions more attractive.

During this time, cloud computing continued to attract enterprise customers. This not only continued to drive down COTS costs, but also created an environment in which more service outsourcing might be palatable.

Some highly compute-centric network applications were already beginning to appear as ready-to-run on COTS hardware (eg, IMS, PCRF, and other elements of mobile networks), either from incumbents or from startups that sensed an easy entry opportunity. Network operators, particularly Tier 1 telcos, were encouraged by the technological gains in virtualization and orchestration over this period and chastened by these business challenges. They responded by working toward what they called next-generation network evolutions. Some declared these publicly (eg, AT&T Domain 2.0 or Verizon's SDN/NFV Reference Architecture (https://www.sdxcentral.com/articles/news/verizon-publishes-sdnnfv-reference-architecture/2016/04/)) while others held their cards closer to their vests. In either case, these approaches were all predicated on the promises of SDN and NFV.

Redrawing NFV and Missing Pieces

In our previous book, the functionality spawning the interest in NFV was depicted as the intersection of technological revolutions (Fig. 1.2).

The difference between the view projected in 2013 (Fig. 1.2) and today (Fig. 1.1) is explained by the growth of open source software for infrastructure and some oversights in the original NFV discussion.

Since 2013, interest in highly collaborative, multivendor open source environments has expanded. Open source software emerged as a very real and viable ingredient of networking and compute environments, both in the development of orchestration components and in SDN controllers based on the Linux operating system, which was already a big part of cloud compute. Open source environments are changing how compute and network solutions are standardized, packaged, and introduced to the marketplace. So much so that this interest is often co-opted in what we refer to as “open-washing” of products targeted at NFV infrastructure. To put a finer point on this, many of these first-phase products or efforts were merely proprietary offerings covered by a thin veil of “open,” with a vendor-only or single-vendor-dominated community that used the term “open” as a marketing strategy.

This growing “open mandate” is explored more fully in Chapter 5, The NFV Infrastructure Management (OpenDaylight and OpenStack), Chapter 6, MANO: Management, Orchestration, OSS, and Service Assurance (MANO), and Chapter 7, The Virtualization Layer—Performance, Packaging, and NFV (virtualization) and has replaced the SDN component of the original drawing (as a super-set).

Other chapters will deal with some missing pieces from the original concept of NFV:

• While the virtualization aspects of those early NFV definitions included the concepts of orchestrated overlays (virtual networking), they did not have a firm plan for service chaining. It was the addition of the SFC concept that finally offered a true service overlay (this is covered in Chapter 4: IETF Related Standards: NETMOD, NETCONF, SFC and SPRING).

• Another critical area that was not included in the original calculus of NFV proponents was the Operations Support Systems (OSS) used to operate and manage the networks where NFV will be used. While a very old concept dating back to networks in the 1960s and 1970s, the OSS is undergoing a potential rebirth as an SDN-driven and highly programmable system (this is covered in Chapter 6: MANO: Management, Orchestration, OSS, and Service Assurance).

Without these critical components, NFV is merely virtualized physical devices (or emulations thereof) dropped onto COTS hardware. Ultimately, it is the combination of a modernized OSS, advances in COTS computing that support virtualization and high-speed network forwarding at far lower cost points, the latest open source network software components, and virtualization itself that together form the complete NFV (and later SFC) story.

Defining NFV

Before we explore each of these areas to see how they combine together as the basis for NFV, we should start by defining NFV.

NFV describes and defines how network services are designed, constructed, and deployed using virtualized software components and how these are decoupled from the hardware upon which they execute.

NFV allows for the orchestration of virtualized services or service components, along with their physical counterparts. Specifically, this means the creation, placement, interconnection, modification, and destruction of those services.
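The lifecycle operations named above can be pictured as a small state machine. The sketch below is a hypothetical model (the class, state, and operation names are ours, not taken from any NFV specification): constructing a `ServiceComponent` corresponds to creation, and each later operation is only legal from certain states. The same object can stand for a virtual or a physical component.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class State(Enum):
    DEFINED = auto()    # created: described, but not yet running anywhere
    PLACED = auto()     # assigned to a host (virtual) or a device (physical)
    CONNECTED = auto()  # interconnected into the service graph
    DESTROYED = auto()  # resources released


# Legal transitions per lifecycle operation (hypothetical model, not a standard).
TRANSITIONS = {
    "place": {State.DEFINED: State.PLACED},
    "interconnect": {State.PLACED: State.CONNECTED},
    "modify": {State.PLACED: State.PLACED, State.CONNECTED: State.CONNECTED},
    "destroy": {State.DEFINED: State.DESTROYED, State.PLACED: State.DESTROYED,
                State.CONNECTED: State.DESTROYED},
}


@dataclass
class ServiceComponent:
    name: str
    physical: bool = False        # NFV orchestrates physical counterparts as well
    state: State = State.DEFINED  # construction plays the role of "create"
    history: list = field(default_factory=list)

    def apply(self, op: str) -> None:
        allowed = TRANSITIONS.get(op, {})
        if self.state not in allowed:
            raise ValueError(f"cannot {op} {self.name} in state {self.state.name}")
        self.state = allowed[self.state]
        self.history.append(op)


fw = ServiceComponent("vFirewall")
for op in ("place", "interconnect", "modify", "destroy"):
    fw.apply(op)
print(fw.state.name, fw.history)
```

Encoding the transitions as data rather than as scattered `if` statements keeps the lifecycle rules in one place, which is the kind of thing an orchestrator needs to audit.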

Even though there is an opportunity for some potential CAPEX savings and OPEX reduction due to the elasticity of these orchestrated virtual systems, without SFC and a requisite OSS reboot, the same inherent operational issues and costs would exist in NFV that plagued the original services, systems, and networks supporting them.

At its heart, NFV is about service creation. The true value of NFV, particularly in its early phases, is realized in combination with SFC and modernization of the OSS. This may also involve the deconstruction and reconstructed optimization of existing services in defiance of box-based component-to-function mappings dictated by standards or common practice today. Ultimately, it may result in the proliferation of Web-inspired Over-The-Top service implementations.

Is NFV SDN?

SDN is a component of NFV. In some ways it is a related enabler of NFV, but one is not the other, despite some currently confused positioning in the marketplace. With the advent of SDN, orchestration techniques, and virtualization advances on COTS hardware, NFV is now being realized, albeit slowly. Indications of its utility are the numerous early deployments in production networks today.2 Just as they prompted some rethinking of the control plane, SDN concepts and constructs press service providers and users to rethink the assumptions built into the current method of providing a service plane, and to deliver services using new virtualized, flexible, COTS hardware-based service platforms. The existence of SDN also affords service providers potentially more flexible ways of manipulating services than they had before.

Virtualization alone does not solve all service deployment problems and actually introduces new reliability problem vectors that a service orchestration system or architecture has to mitigate. Virtualization, like any tool, put into nonskilled hands or deployed improperly, can result in unexpected or disastrous results.

While virtualization is the focus of the NFV effort, the reality is that the orchestration and chaining involved need a scope that includes present and future fully integrated service platforms (at least up to the point where the I/O characteristics of fully virtualized solutions eclipse them, plus some “tail” period over which they would amortize). Here, SDN can provide a “glue” layer of enabling middleware.

Even though the role of SDN in the control of service virtualization appears to be universally accepted, the type of control point (or points) has been, and will continue to be, much debated. This is a vestige of earlier debates about the nature of SDN itself: whether SDN was single protocol or polyglot, and whether SDN was completely centralized control or a hybrid of centralized and distributed. In NFV this manifests in debates around stateless and proxy control points, or around when inline or imputed metadata is employed.

Orchestration may allocate the service virtual machines or containers while SDN provides the connectivity in these NFV architectures or models, including some potential abstractions that hide some of the complexity that comes with elasticity.
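A minimal sketch of that division of labor, assuming a hypothetical `Orchestrator` that owns the instance pools and a hypothetical `SdnController` that exposes one logical hop per function; neither corresponds to a real product API. The controller hides elasticity by hashing each flow onto whichever instances currently exist.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Orchestrator:
    """Hypothetical orchestrator: allocates the VM/container instances of each function."""
    instances: dict = field(default_factory=dict)

    def scale(self, function: str, count: int) -> list:
        # Replace the pool outright; a real system would add or drain instances gracefully.
        self.instances[function] = [f"{function}-{i}" for i in range(count)]
        return self.instances[function]


@dataclass
class SdnController:
    """Hypothetical SDN controller: provides connectivity, one logical hop per function."""
    orch: Orchestrator

    def next_hop(self, function: str, flow_id: str) -> str:
        pool = self.orch.instances[function]
        # Hash the flow ID so every packet of a flow reaches the same instance.
        digest = hashlib.sha256(flow_id.encode()).digest()
        return pool[int.from_bytes(digest[:4], "big") % len(pool)]

    def path(self, chain: list, flow_id: str) -> list:
        return [self.next_hop(fn, flow_id) for fn in chain]


orch = Orchestrator()
orch.scale("vFW", 2)
orch.scale("vLB", 3)
ctl = SdnController(orch)
print(ctl.path(["vFW", "vLB"], "10.0.0.1:5000->10.0.1.9:80"))
```

The chain is written in terms of functions, not instances, so the orchestrator can resize a pool without the chain definition changing. Plain modulo hashing remaps many flows when a pool resizes; consistent hashing is a common refinement.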

SDN will be a key early enabler of the IETF Network Service Header (NSH) for service chaining (see Chapter 4: IETF Related Standards: NETMOD, NETCONF, SFC and SPRING).
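To make NSH concrete, the sketch below packs the fixed part of an NSH header (the 4-byte base header plus the 4-byte service path header) for MD Type 2 with no context headers, following our reading of the field layout in RFC 8300. It is an illustration for readers, not a validated encoder; the function names are hypothetical.

```python
import struct

NEXT_PROTO_IPV4 = 0x1  # RFC 8300 Next Protocol value for an inner IPv4 packet


def nsh_header(spi: int, si: int, ttl: int = 63, next_proto: int = NEXT_PROTO_IPV4) -> bytes:
    """Pack an NSH base header + service path header (MD Type 2, no metadata)."""
    if not (0 <= spi < 2**24 and 0 <= si < 2**8 and 0 <= ttl < 2**6):
        raise ValueError("field out of range")
    version, o_bit, md_type = 0, 0, 0x2
    length = 2  # header length in 4-byte words: base header + service path header
    # Base header bit layout: Ver(2) O(1) U(1) TTL(6) Length(6) U(4) MDType(4) NextProto(8)
    word0 = ((version << 30) | (o_bit << 29) | (ttl << 22) | (length << 16)
             | (md_type << 8) | next_proto)
    word1 = (spi << 8) | si  # 24-bit Service Path Identifier, 8-bit Service Index
    return struct.pack("!II", word0, word1)


def decrement_si(header: bytes) -> bytes:
    """What a service function does after processing: decrement the Service Index."""
    word0, word1 = struct.unpack("!II", header[:8])
    si = word1 & 0xFF
    if si == 0:
        raise ValueError("service index exhausted")
    return struct.pack("!II", word0, (word1 & ~0xFF) | (si - 1)) + header[8:]


hdr = nsh_header(spi=42, si=255)
print(hdr.hex())                # 0fc2020100002aff
print(decrement_si(hdr).hex())  # 0fc2020100002afe
```

The Service Path Identifier names the chain and the Service Index records the position along it, which is what lets forwarders steer traffic through an ordered set of functions without per-hop configuration.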

Orchestration and SDN components will have to work cooperatively to provide high availability and a single management/operations view. In particular, the concept of service models that abstract away (for network operators) the details of the underlying network is coming onto the scene independent of, but certainly applicable to, the NFV movement.

NFV is the Base Case

The original NFV SDN use case was born from the issues surrounding the construction and composition of new services, or the modification or decomposition of existing ones on traditional proprietary network hardware, or the often-proprietary platform operating systems that ran on these platforms. The challenge of service creation is covered in depth in Chapter 2, Service Creation and Service Function Chaining.

At the time when the “NFV as an SDN use case” was initially proposed, most, if not all, service providers owned and operated some sort of cloud service. Their cloud was used to offer public services, internal services or both. A common theme was the use of some form of virtual machine that hosted a duplicate of a service that used to run on proprietary hardware. To make an even finer point, the service was often really a virtualized image of an entire router or switch.

The basic network orchestration to support this service was built around one of the obvious and new commercial hypervisor offerings on the market, but the provisioning systems used to actually manage or provision these services were often the same ones that had been used in the past. These services usually ran on commodity hardware with processors and basic designs from either Intel or AMD.

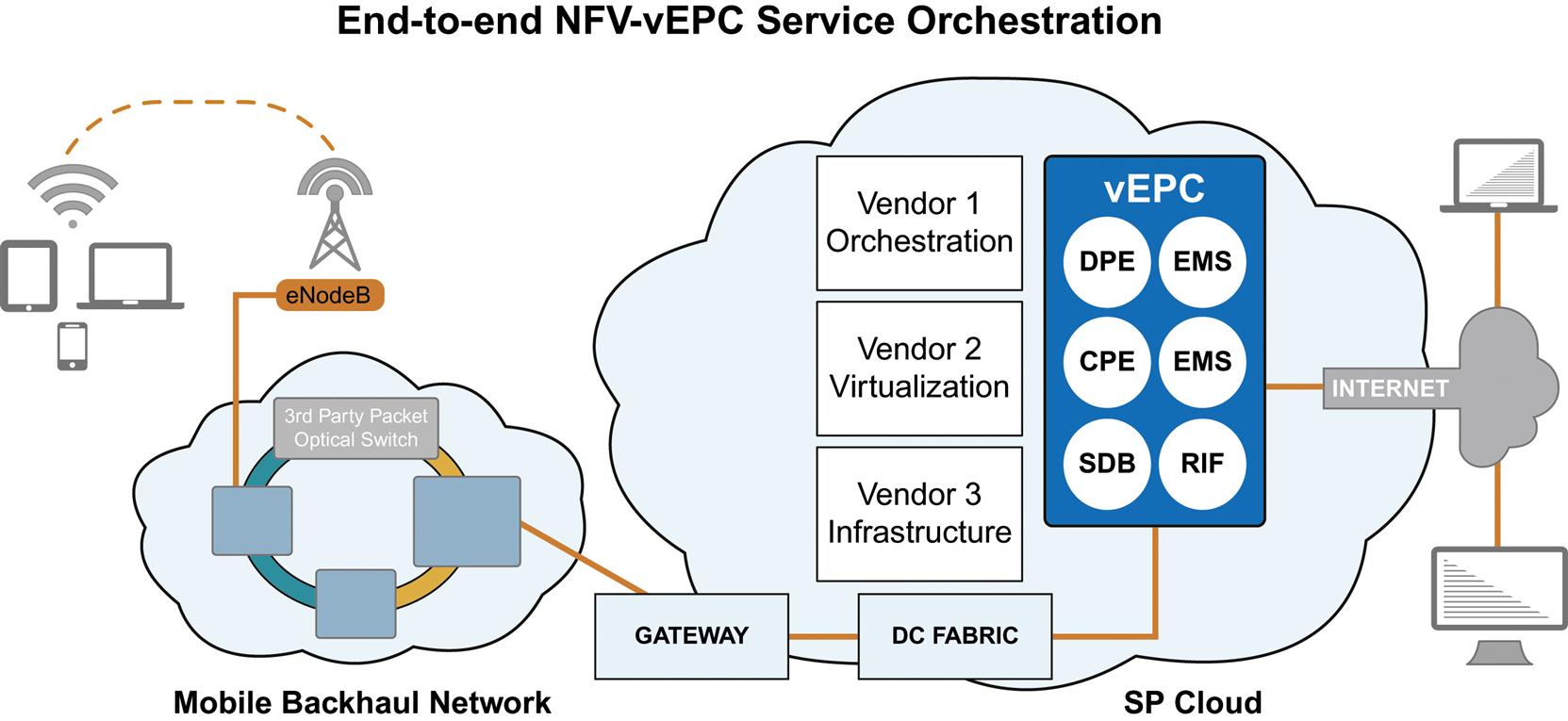

An example of this is shown in Fig. 1.3, where an Evolved Packet Core (EPC) that was traditionally offered on proprietary hardware has been broken into its constituent software components and offered to execute on commodity hardware within the Service Provider Cloud.

While orchestration and management of the system are shown here using some more modern components to facilitate control and management of the constituent components, if these were offered as a simple virtualized version of the vendor's proprietary EPC hardware, they could easily be managed by that vendor's traditional management software, except that the software would also need to deploy the virtual machine that executed the EPC system software.

The motivations for that trend were more or less straightforward: network operators wanted to reduce CAPEX by moving from proprietary hardware to commodity hardware. They also wanted to reduce operational expenses such as power and cooling, as well as space management expenses (ie, real estate). Racking servers in a common lab consolidates and compacts various compute loads more efficiently than servers dedicated to a specific service, or the proprietary routers and switches that preceded them. This can easily reduce the amount of idle resources by instituting an infrastructure that encourages more efficient utilization of system resources while at the same time powering down unused resources.

The economics of the Cloud were an important basis of the NFV use case, as was the elasticity of web services.

However, as we all know and understand, the key component missing here is that simply taking an identical (or near identical) copy of, say, the route processor software that used to run on bespoke hardware and running it on commodity hardware yields relatively small actual cost savings. In that model, the operator still manages and operates essentially the same monolithic footprint of various processes within a single, large container. Optimal bin packing of workloads, and the corresponding cost savings, can only be achieved through disaggregation of the numerous services and processes that execute within that canonical envelope, so that only the ones necessary for a particular service are executed. Another, perhaps less obvious, component missing from this approach is the way these disaggregated components are orchestrated.
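The bin-packing argument can be illustrated with first-fit decreasing, a standard heuristic for bin packing. All numbers here are hypothetical: a 16-core server, a monolithic router image that reserves 10 cores whether or not a given service needs all of its processes, and a disaggregated version in which each service runs only a 4-core forwarding component and a 2-core control component.

```python
def first_fit_decreasing(demands: list, capacity: int) -> list:
    """Pack per-workload core demands onto servers of `capacity` cores (FFD heuristic)."""
    servers = []
    for d in sorted(demands, reverse=True):
        if d > capacity:
            raise ValueError(f"demand {d} exceeds server capacity {capacity}")
        for s in servers:
            if sum(s) + d <= capacity:  # first server with room wins
                s.append(d)
                break
        else:
            servers.append([d])  # no existing server fits: power on another one
    return servers


CORES_PER_SERVER = 16  # hypothetical COTS server
SERVICES = 6

# Monolithic: each service is a full router image, including processes it never uses.
monolithic = first_fit_decreasing([10] * SERVICES, CORES_PER_SERVER)

# Disaggregated: only the needed components run, and each is placed independently.
disaggregated = first_fit_decreasing([4, 2] * SERVICES, CORES_PER_SERVER)

print(len(monolithic), len(disaggregated))  # 6 3
```

Under these assumptions the same six services need half as many servers once disaggregated. The gain comes from two places: unneeded processes no longer consume cores, and small components can fill gaps that a large monolithic image leaves stranded.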

One could argue that the NFV marketplace, at least the virtualization of services, predated the push made by operators in 2013. Virtualization of a singleton service (a single function) was not a foreign concept, as pure-software (non-dataplane) services like Domain Name System (DNS) servers were common (at least in service provider operations). Services like policy and security were also leaning heavily toward virtualized, software-only implementations.

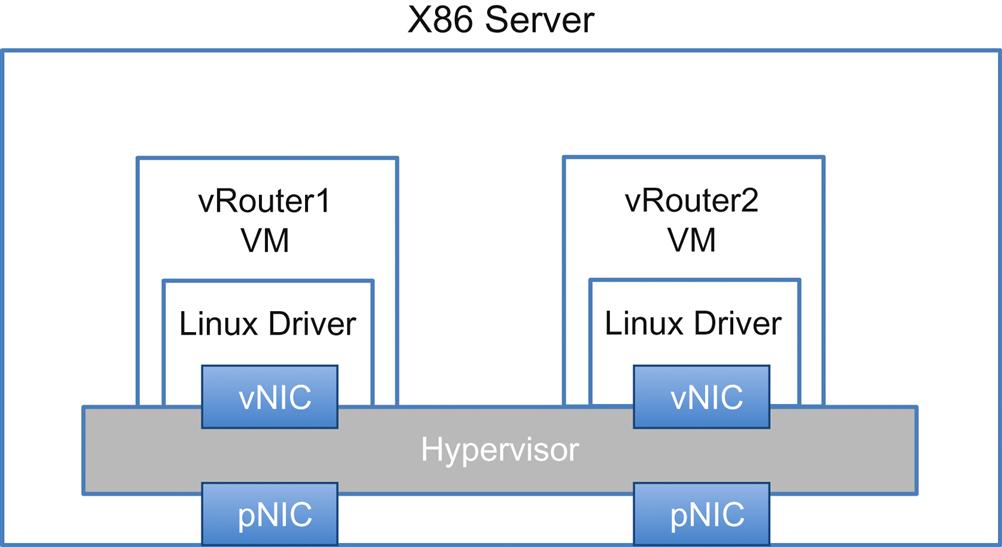

The low-hanging fruit that we pointed to in 2013 were processes like Route Reflection (generally speaking, control and management processes), but this was still far from a “virtual router” (Fig. 1.4) that may have a considerable dataplane component. Similarly, the virtual Firewall appliance provided an easy “victory” for first deployments, provided the dataplane requirements were low (as in managed security services for small and medium businesses).

This is actually only the beginning of what is possible with SDN and NFV. While one could argue that, technically speaking, moving composite services from proprietary hardware to COTS hardware in a virtualized form is in fact a decomposition of services, what made the decomposition even more interesting was the potential flexibility in the location and position of the individual components that comprised the service running on those platforms. These fledgling virtualized services enjoyed the added benefit of SDN-based network controls, which provided the missing link between the original concepts of Software Engineered Paths3 and virtualization that became NFV. It is the latter that is the real key to unlocking the value of disaggregated service components: it allows operators (or their operational systems) to optimally and maximally load commodity servers with workload components, thereby maximizing their utilization while at the same time enjoying the flexibility of rapid deployment, change, or destruction of service components.

Strengthening “NFV as SDN Use Case”

What really made the use case seem more viable in such a short time were the aforementioned improvements in virtualization and dataplane performance on COTS compute. The combination of the two should theoretically place downward pressure on the cost per bit to process network traffic in a virtualized environment.

Improving Virtualization

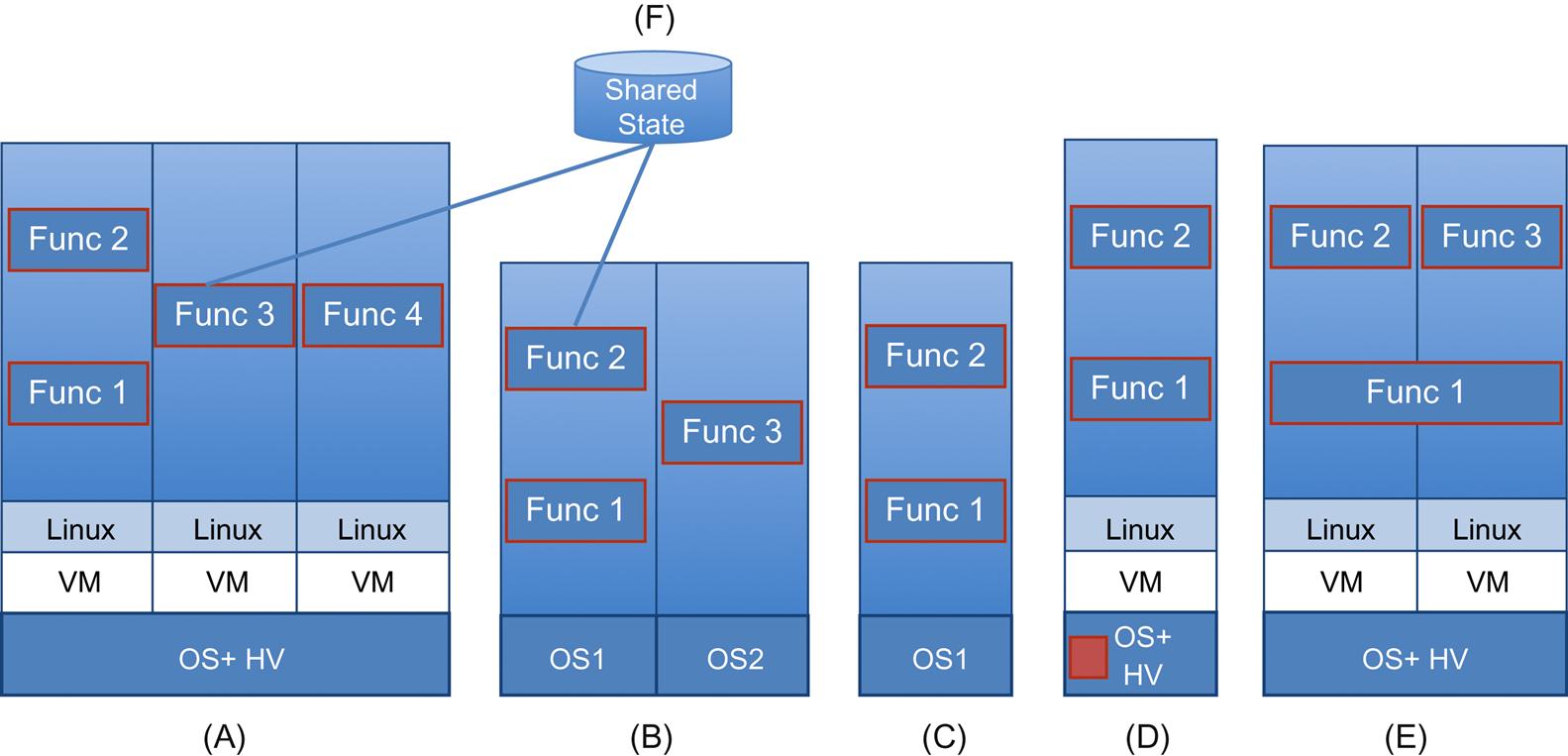

In 2013, we showed the following taxonomy for virtualizing services (shown in Fig. 1.5):

• services implemented in a machine with multiple/compartmentalized OS(s)

• services implemented within the hypervisor

• services implemented as distributed or clustered as composites

At the time, we pointed out that virtual machines provide tremendous advantages with respect to management,4 and thus were pursued as a primary vehicle for NFV, but also had some hurdles to be considered (eg, contention, management visibility, security, resource consumption). Since then, the two most significant changes have been the rise of alternative forms of service virtualization and the maturation of orchestration.

Containers have now entered the mainstream and have recently begun forays into true open source. Although they potentially face more challenges than virtual machines in shared-tenant environments, they offer more efficient packaging alternatives and can be used to build (potentially) more compose-able services. Other technologies that use hybrids of the VM/container environments and micro-kernels have also been introduced. We will cover these alternatives in a section of Chapter 7, The Virtualization Layer—Performance, Packaging, and NFV.

The orchestration of virtual compute solidified over this timeframe, with multiple releases of OpenStack Nova and other projects giving NFV planners more confidence in an Open Source alternative to the traditional Enterprise orchestration dominance of companies like VMware. The additions of projects like Tacker have real potential to give operators all the tools necessary to realize these goals. These technologies are discussed in detail within the context of the OpenStack movement in Chapter 6, MANO: Management, Orchestration, OSS, and Service Assurance and Chapter 7, The Virtualization Layer—Performance, Packaging, and NFV.

Data Plane I/O and COTS Evolution

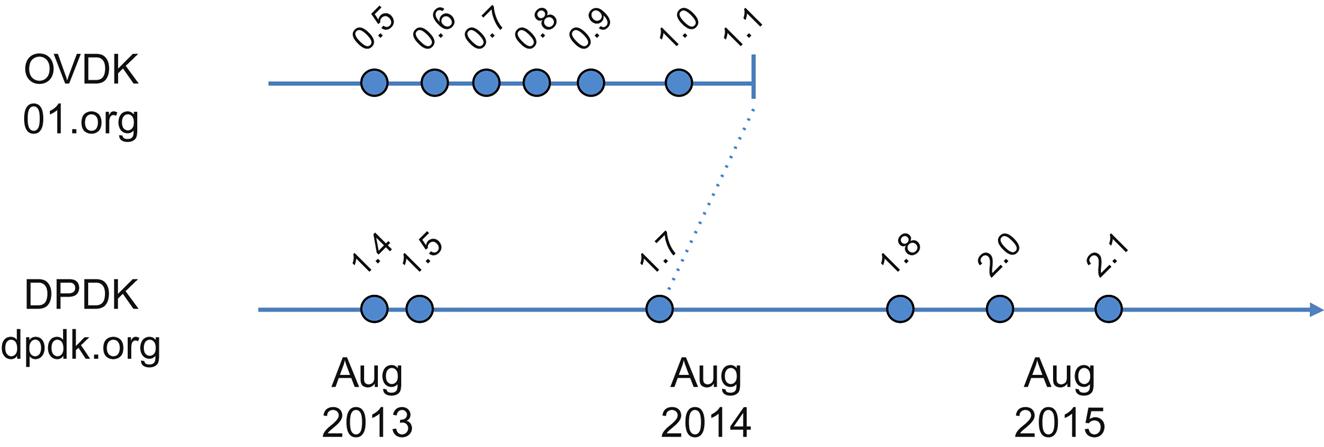

In our last book we made the statement that “Generally speaking, advances in data-plane I/O have been key enablers to running services on COTS hardware.” The original use of DPDK that we cited to offset the performance overhead of the hypervisor virtual switch (OVS) (OVDK via https://01.org/) has since evolved multiple times (Fig. 1.6).

The DPDK5 is Intel’s contribution to improving I/O performance in virtualized environments. DPDK provides data plane libraries and optimized poll-mode NIC drivers (for Intel NICs). These run in Linux user space and provide advanced queue and buffer management as well as flow classification through a simple API (supported by a standard tool chain: gcc/icc, gdb, profiling tools). They have been shown to improve the performance of nonoptimized systems significantly.
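DPDK itself is a C library (its real interfaces include `rte_ring` and `rte_eth_rx_burst`); the Python below does not use DPDK. It is only a conceptual model, with hypothetical names, of two ideas from the paragraph above: a fixed-size ring queue with power-of-two index masking, and a poll-mode loop that pulls packets in bursts instead of waiting for interrupts. It models a single producer and single consumer and omits the lock-free synchronization a real ring needs.

```python
class Ring:
    """Fixed-size ring queue with power-of-two sizing (conceptual SPSC model, no DPDK)."""

    def __init__(self, size: int):
        if size <= 0 or size & (size - 1):
            raise ValueError("size must be a power of two")
        self.slots = [None] * size
        self.mask = size - 1  # index & mask replaces a costlier modulo
        self.head = 0         # total packets ever enqueued (producer index)
        self.tail = 0         # total packets ever dequeued (consumer index)

    def enqueue_burst(self, pkts: list) -> int:
        free = len(self.slots) - (self.head - self.tail)
        n = min(free, len(pkts))
        for p in pkts[:n]:
            self.slots[self.head & self.mask] = p
            self.head += 1
        return n  # packets beyond n did not fit; the caller decides whether to drop them

    def dequeue_burst(self, max_burst: int) -> list:
        n = min(max_burst, self.head - self.tail)
        out = [self.slots[(self.tail + i) & self.mask] for i in range(n)]
        self.tail += n
        return out


def poll_loop(rx: Ring, process, max_burst: int = 32, iterations: int = 4) -> int:
    """Busy-poll the ring and handle packets in bursts; returns packets handled."""
    handled = 0
    for _ in range(iterations):  # a real poll-mode worker spins indefinitely on its own core
        burst = rx.dequeue_burst(max_burst)
        for pkt in burst:
            process(pkt)
        handled += len(burst)
    return handled


rx = Ring(64)
accepted = rx.enqueue_burst(list(range(100)))  # only 64 fit
handled = poll_loop(rx, process=lambda pkt: None)
print(accepted, handled)  # 64 64
```

Batching amortizes per-call overhead across many packets, and polling avoids interrupt and context-switch costs at the price of dedicating a core to the loop.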

When we released our SDN book in August 2013, the version at the Intel-sponsored site was 0.5. Eight releases later, 1.1 shipped (August 2014). Intel subsequently ceased its fork of OVS and sought to integrate into the main distribution of OVS. That version integrated the 1.7 release of the DPDK, which continues development. This has been aided by an Intel processor architecture that has evolved through a full “tick-tock” cycle6 in the intervening 2 years.

In 2014, Intel introduced their Haswell micro-architecture and accompanying 40 Gbps and 4×10 Gbps NICs, increasing core density and changing cache/memory architectures to be more effective at handling network I/O.7

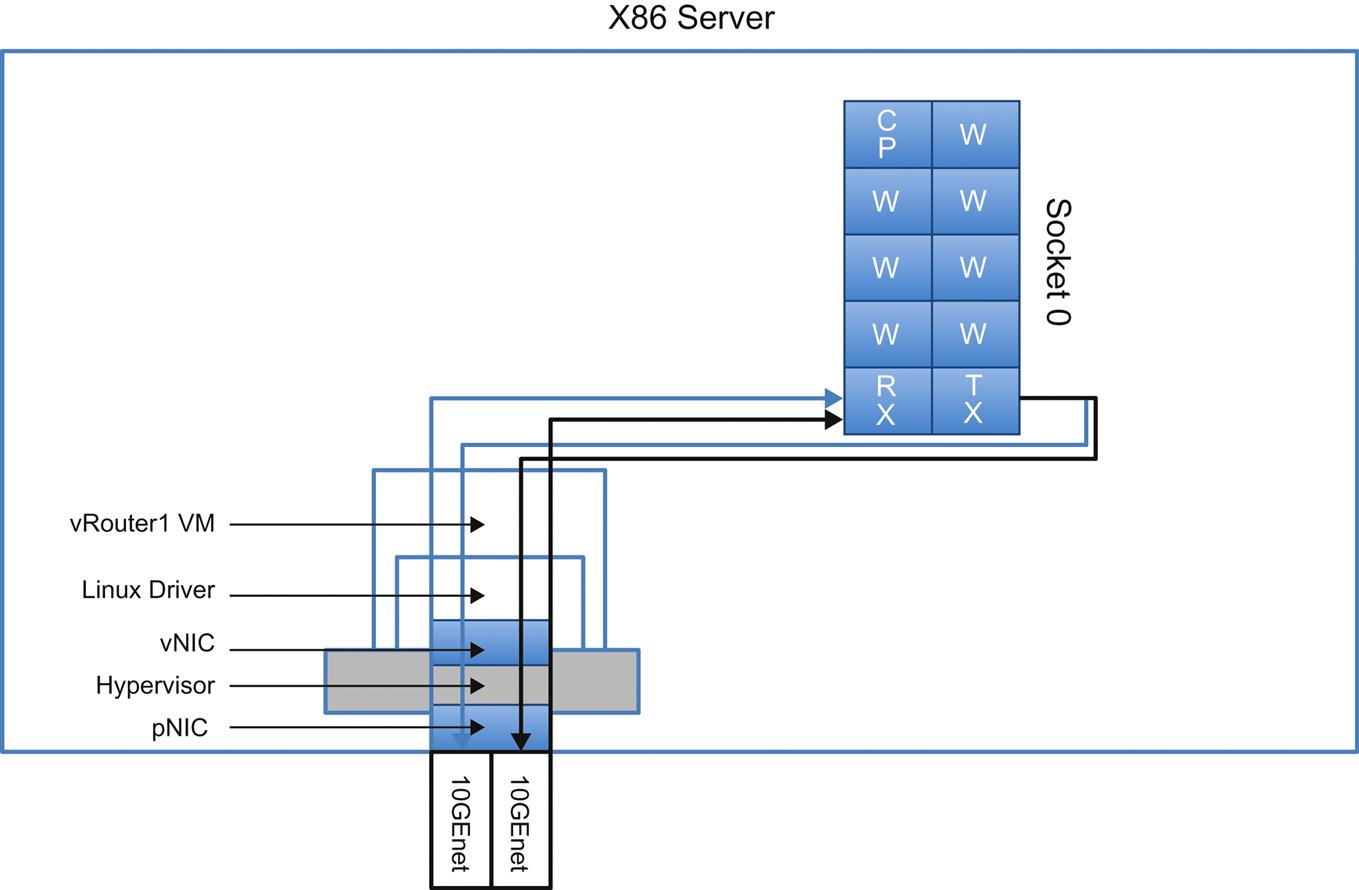

This has been particularly useful and influential in driving the scale-up performance of the virtualized router or switch in our base use case. In Fig. 1.7, the appliance uses ten cores in a socket to support 20 Gbps throughput (a core/management thread, seven worker threads, a receive thread, and a transmit thread). Another important development has been the entry of other processor vendors, positioning themselves either as PCIe-based offload engines for the Intel architecture or as direct challengers to Intel as THE processor for NFV.
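To see why core counts matter, it helps to work out the packet rates involved. The sketch assumes worst-case 64-byte frames, 20 bytes of per-frame Ethernet overhead on the wire (preamble, start-of-frame delimiter, and inter-frame gap), load spread evenly across the seven worker threads, and a hypothetical 2.5 GHz core clock; none of these figures come from Fig. 1.7.

```python
ETH_OVERHEAD_BYTES = 20  # preamble (7) + start-of-frame delimiter (1) + inter-frame gap (12)


def line_rate_pps(gbps: float, frame_bytes: int) -> float:
    """Maximum Ethernet frames per second for a given link rate and frame size."""
    return gbps * 1e9 / ((frame_bytes + ETH_OVERHEAD_BYTES) * 8)


WORKERS = 7       # worker threads in the appliance described above
CORE_HZ = 2.5e9   # hypothetical clock speed, not taken from the figure

total_pps = line_rate_pps(20, 64)
per_worker_pps = total_pps / WORKERS
cycles_per_packet = CORE_HZ / per_worker_pps

print(f"{total_pps / 1e6:.2f} Mpps total, {per_worker_pps / 1e6:.2f} Mpps per worker, "
      f"~{cycles_per_packet:.0f} cycles per packet")
# 29.76 Mpps total, 4.25 Mpps per worker, ~588 cycles per packet
```

A budget of a few hundred cycles per packet is why poll-mode drivers, batching, and cache-friendly data structures matter; larger average frame sizes relax that budget considerably.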

These advancements are described in Chapter 8, NFV Infrastructure—Hardware Evolution and Testing.

Both Intel and the software vendors using its architecture claim support for theoretical dataplane throughput in excess of 100 Gbps (typically, 120 Gbps for Sandy Bridge and up to 180 Gbps for Ivy Bridge variants). Alternative “forwarders” for the dataplane have also emerged (both proprietary and open).

The evolution of the state of the art in forwarding (and its continuing challenges) on the Intel architecture is described in both Chapter 7, The Virtualization Layer—Performance, Packaging, and NFV and Chapter 8, NFV Infrastructure—Hardware Evolution and Testing.

Standardizing an NFV Architecture

The most critical standards for NFV are only now evolving, and some may never evolve in a traditional SDO environment at all, emerging instead through open source projects and de facto standardization.

Today, from a standards perspective, the horizon has broadened. As the original ETSI NFV process concluded with no real API definitions or standards, work to fill some of the necessary gaps has begun in the IETF (see Chapter 4: IETF Related Standards: NETMOD, NETCONF, SFC and SPRING), starting with SFC.

The data modeling that we predicted in 2013 would be a critical outcome of MANO work is being realized more in OpenDaylight and IETF NETMOD than anywhere else (and is now trickling into other projects like OpenStack). In fact, an explosion of modeling is occurring as of 2015 in areas like routing and service definition.

Many of the same actors that participated in that early ETSI work moved into the ONF to propose architecture workgroups on L4-L7 services in an attempt to define APIs for service chaining and NFV (albeit around a single protocol, OpenFlow).

The growth of neo-SDOs like the ONF, the attempt to move the work of groups like the ETSI NFV WG into “normative” status, and the rise of open source communities bring forward the question of governance. Many of the newly minted “open” products can readily be shown, by third parties (or with tools like “git”), to actually be closed: all or most of the contributions are made and controlled by a single company positioning itself as the sole source of support/revenue from the project.

A great talk on the need for the cooperation of standards and open source as well as governance was given by Dave Ward at the IETF 91 meeting, and is captured in his blog.8

The Marketplace Grew Anyway

In 2013, we noted that the existence of the ETSI workgroup should not imply that this is the only place service virtualization study and standards development are being conducted. Even then, without standards, production service virtualization offerings were already coming to market.

This remains the case, although we have already gone through some early progression in virtualization of traditional network products and some shakeout of startups focused on this market. For example:

• The startups we mentioned in our previous book, LineRate and Embrane, have both been acquired (by F5 Networks and Cisco Systems, respectively), and their products and, to some degree, their teams appear to have been assimilated into the operations of these companies. The controllers they were originally positioning for Layer 2 through Layer 7, which worked with their own virtualized services, have either yet to gain market traction or have disappeared entirely.

• Almost every vendor of routing products now offers a virtualized router product. In fact, VNFs for most service functions now proliferate, and new startups are being acquired by larger, existing vendors or named as partners to build VNF catalogues in market segments in which those vendors may not have competed before (eg, Brocade's acquisition of Connectem, which gave it a vEPC product,9 and Juniper's partnership with Acclaim).

• Service orchestration products like those of Tail-f (acquired by Cisco Systems) that leverage the aforementioned service-model concept saw widespread adoption.

• Interest in VIM-related open source components like OpenDaylight and OpenStack emerged.

Academic Studies are Still Relevant

The academic “middlebox” studies10,11 that we pointed to in 2013 continue to inform ongoing NFV work in the areas of service assurance, performance, and placement. Some of these studies12 provide hints that may be applicable to future compose-ability and to making the concept of “function” more fungible.

While academic studies can at times make assumptions that are less applicable in actual operation, academic work buttressing NFV understanding has expanded in the areas of resource management (including bandwidth allocation in WAN), telemetry/analytics, high-availability13 and policy—all of which were under-explored in early NFV work.

NFV at ETSI

The attempt by network operators (largely from the telco community) to define NFV architecture is covered in detail in Chapter 3, ETSI NFV ISG. Be forewarned—it is an ongoing story. While many of its contributors may refer to ETSI NFV as a “standard,” it is (at best) an “incomplete” architecture.

In our original description of the activity we pointed with hope to three workgroups as the keys to success of the architecture effort—MANO, INF, and SWA (Fig. 1.8).

Today, even though the desired functionality has been defined, many of the practical definitions expected of these architectural components remain undone, particularly in the challenge areas of performance, resource management, high-availability, and integration.

Some of these may be settled in a future phase of ETSI work, but a timing issue for early adopters looms.

Management and Orchestration (MANO) work did not lead directly to new management tools to augment traditional OAM&P models to handle virtualized network services. Chapter 6 covers the oversights in NFV Orchestration related to interfaces with legacy OSS/BSS and the complete lack of Service Assurance. Individual vendors and their customers are sorting through this today. The roles of various managers (VIM and NFVO) are still being debated/defined in an ongoing phase of ETSI work.

Resource management that was envisioned as part of the orchestration layer was defined in terms of capacity but not capability—the latter a problem that emerges in the heterogeneous environments described in Chapter 7, The Virtualization Layer—Performance, Packaging, and NFV and Chapter 8, NFV Infrastructure—Hardware Evolution and Testing.
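The difference between capacity and capability can be shown with a hypothetical placement filter. Hosts advertise free cores and memory (capacity) plus feature labels such as `dpdk` or `sriov` (capability); the labels, classes, and function are illustrative and are not any VIM's API. A capacity-only check happily places a DPDK-dependent vRouter on a host that cannot accelerate it.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    free_cores: int
    free_mem_gb: int
    capabilities: set = field(default_factory=set)  # hypothetical labels, e.g. {"dpdk"}


@dataclass
class VnfRequest:
    cores: int
    mem_gb: int
    needs: set = field(default_factory=set)


def fits_capacity(h: Host, r: VnfRequest) -> bool:
    return h.free_cores >= r.cores and h.free_mem_gb >= r.mem_gb


def place(hosts: list, r: VnfRequest, check_capability: bool = True):
    """Return the first suitable host name, optionally also requiring capabilities."""
    for h in hosts:
        if fits_capacity(h, r) and (not check_capability or r.needs <= h.capabilities):
            return h.name
    return None  # nothing suitable: a real orchestrator would report or queue the request


hosts = [Host("generic-1", 32, 128), Host("nfv-1", 16, 64, {"dpdk", "sriov"})]
vrouter = VnfRequest(cores=8, mem_gb=16, needs={"dpdk"})
print(place(hosts, vrouter, check_capability=False))  # generic-1: fits, but no DPDK
print(place(hosts, vrouter))                          # nfv-1
```

In a heterogeneous NFV infrastructure the capability check is what separates "the VNF started" from "the VNF performs as designed."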

Minimization of context switching happened organically as a requirement to expand Intel’s market (previously described), through research projects in academia uncoupled from the influence of ETSI NFV Infrastructure (INF) workgroup and through competition. The identification of a “best practice” has proven to be a moving target as a result.

ETSI Software Architecture (NFV SWA) published work remained VM-centric. While they chanced into describing the decomposition of functions into subcomponents, they did not define the anticipated descriptors that would be used to communicate the relationships and communication between the parts, their operational behavior, and constraints (eg, topology). The approach here was anticipated to be model driven, and follow-on “open source” activities in MANO, such as the Open-O effort, have attempted to define these descriptors. More details on these are given in Chapter 7, The Virtualization Layer—Performance, Packaging, and NFV.

These anticipated function descriptors would act like the familiar concept of policies attached to SDN “applications” as expressed-to/imposed-on SDN controllers. Such policies specify each application’s routing, security, performance, QoS, “geo-fencing,” access control, consistency, availability/disaster-recovery, and other operational expectations/parameters.

Such descriptors were to be defined in a way that allows flow-through provisioning. That is, while the orchestration system and SDN controller can collaborate on placement, path placement and instantiation of the network functions, their individual and composite configurations can be quite complex—and are currently vendor-specific.
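A hypothetical sketch of how such a descriptor might drive flow-through provisioning: the descriptor lists components, their dependencies (a simple stand-in for topology), and service-wide policy, and a small function turns it into an ordered, per-component plan with the policy merged in. The field names are illustrative and are not taken from ETSI, TOSCA, or any YANG model. It uses the standard-library `graphlib` module, which requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Hypothetical service descriptor: components, dependencies, and service-wide policy.
descriptor = {
    "service": "managed-security",
    "policy": {"qos_class": "gold", "geo_fence": "EU", "availability": "active-standby"},
    "components": {
        "vFW": {"image": "fw-2.1", "depends_on": []},
        "vIDS": {"image": "ids-5.0", "depends_on": ["vFW"]},
        "vRouter": {"image": "rtr-16.3", "depends_on": ["vFW", "vIDS"]},
    },
}


def provisioning_plan(desc: dict) -> list:
    """Order components by dependency and merge service-wide policy into each one."""
    # graphlib expects a mapping of node -> predecessors.
    graph = {name: set(c["depends_on"]) for name, c in desc["components"].items()}
    plan = []
    for name in TopologicalSorter(graph).static_order():
        comp = desc["components"][name]
        plan.append({"name": name, "image": comp["image"], **desc["policy"]})
    return plan


for step in provisioning_plan(descriptor):
    print(step["name"], step["image"], step["geo_fence"])
```

The point of the exercise is that nothing here is vendor-specific: once descriptors are standardized, the same plan could be handed to any orchestrator and SDN controller without manual translation, which is what flow-through provisioning requires.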

Much of this work ultimately fell through to OpenStack, to YANG modeling in other SDOs, and to projects in OPNFV, or remains undefined.

Some basic attempts to inject policy in the NFV process came about through the Group Based Policy project in the OpenDaylight Project and an experimental plugin to OpenStack Neutron.
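The core idea behind group-based policy can be sketched in a few lines: endpoints are classified into groups, and traffic between two endpoints is permitted only if a contract between their groups allows it. This is a deliberately simplified, hypothetical model; the actual OpenDaylight and OpenStack GBP data models are richer (for example, rules built from classifiers and actions).

```python
from dataclasses import dataclass

# Hypothetical endpoint-to-group membership (in practice assigned or learned dynamically).
ENDPOINT_GROUP = {"10.0.1.5": "web", "10.0.2.7": "db", "10.0.3.9": "guest"}


@dataclass(frozen=True)  # frozen makes instances hashable, so they can live in a set
class Contract:
    consumer: str  # group allowed to initiate traffic
    provider: str  # group offering the service
    protocol: str
    port: int


CONTRACTS = {Contract("web", "db", "tcp", 5432)}


def allowed(src: str, dst: str, protocol: str, port: int) -> bool:
    """Permit traffic only if the endpoints' groups are joined by a matching contract."""
    src_group, dst_group = ENDPOINT_GROUP.get(src), ENDPOINT_GROUP.get(dst)
    if src_group is None or dst_group is None:
        return False  # unclassified endpoints get no access by default
    return Contract(src_group, dst_group, protocol, port) in CONTRACTS


print(allowed("10.0.1.5", "10.0.2.7", "tcp", 5432))  # True: web -> db is contracted
print(allowed("10.0.3.9", "10.0.2.7", "tcp", 5432))  # False: no guest -> db contract
```

Because policy is written against groups rather than addresses, adding another web server means adding one membership entry, not new rules; that intent-level expression is what made GBP attractive as a policy input to NFV.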

NFV: Why Should I Care?

The potential benefits to network operators are implicit motivations to adopt NFV. Again, in this book we are not questioning whether NFV will be a success in both Service Provider and Enterprise networks, but rather the forms of its architecture and evolution, the original assumptions behind it and how they have changed, the degree to which it is or can be standardized (and other topics). Most importantly we are concerned with increasing your understanding of the topic.

If you are reading this book, you are more than likely directly or indirectly affected by the future of NFV.

The personnel implications of these initiatives cannot be ignored within the companies that champion NFV. To be successful, companies aggressively deploying NFV will have to shift the mindset and skills of their employees from Network Operator to Network DevOps.

The idea is to use automation to "do more with less" in the face of competitive pressure for scale and agility. NFV will affect careers in networking at both enterprises and service providers going forward. Though there may be other observable ways to measure the impact of NFV, because OPEX is the largest contributor to costs for operators and OPEX is often equated with "people costs," perhaps the most visible realization of NFV will be through the reduction of headcount.

Similarly, the industry serving these companies will also have to change.

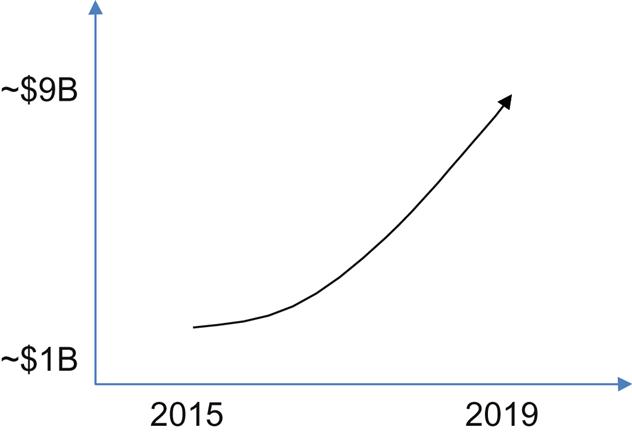

For equipment manufacturers, software companies, and integrators, the implications are potentially just as drastic. Various research firms have published forecasts of the market for NFV-related products and services.

Though analysts divide the attribution of revenue differently between SDN, VNFs, Infrastructure software (SDN), and Orchestration, the movement has been steadily “up and to the right” for market predictions. The more recent the estimate, the more optimistic the predictions get.

Growth is estimated to rise from a $1B market in 2014, at a compound annual growth rate (CAGR) north of 60% over the next 5 years, to (in the most optimistic case) a double-digit billion dollar overall market (Fig. 1.9).14–16
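To see why a 60% compound rate leads to a double-digit billion dollar figure, compound the 2014 base over five years. This worked arithmetic assumes a base of exactly $1B and treats 60% as a floor on the annual rate $r$, so the result is a lower bound on the 2019 market $M_{2019}$:

$$
M_{2019} = M_{2014}\,(1 + r)^{5} \;\ge\; \$1\,\text{B} \times (1.60)^{5} = \$1\,\text{B} \times 10.49 \approx \$10.5\,\text{B}
$$

Compounding, not the headline rate alone, is what carries a $1B market past the $10B mark within five years.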

Parts of the underlying predictions are not quite what one would expect from the early discussions of NFV.

• Physical infrastructure (switches, compute, storage) represents a small part of these revenues. The market shift for equipment, from traditional purpose-built service appliances to NFV-based equipment, is of about the same relative magnitude as the shift across the network equipment market overall. The projected magnitude of that shift (between 10% and 20%), while not as drastic as some NFV advocates imagine, is enough to get the attention of the incumbent vendors.

• The big expenditures are expected in MANO and the VNFs themselves. It is humorous to note that most of the discussion in 2015 centers on Infrastructure software components (SDN and virtualization management), perhaps because it is the only well-understood section of the proposed NFV architecture and vendors may see it as a key control point (to control competitive ingress into accounts). While SDN and Orchestration are not insignificant, the lion's share of the predicted software market is driven by VNFs, particularly wireless service virtualization (IMS, PCRF, EPC, and GiLAN components like DPI).

• A significant amount of separately tracked revenue is associated with outsourcing services. It is approximately equivalent in size to the Infrastructure piece (with similar growth rates) and goes hand-in-hand with the personnel challenges of the target NFV market.

The analysts seem to converge on the view that (contrary to the belief that NFV is creating vast new market segments) NFV represents a "shift" in expenditure, largely into already existing segments.

Enabling a New Consumption Model

For the provider, a side effect of NFV is that it enables a new consumption model for network infrastructure. By moving services off their respective edge termination platforms (EPG, BNG, CMTS), providers are positioned to transition to the consumption of “merchant” based network equipment.

Although the definition of “merchant” is contentious, it is not associated with high touch feature capabilities or high session state scale. Instead, the focus of this equipment becomes termination, switching and transport with a minimum feature capability (like QoS and multicast) to support the services in the service overlay. Arguably, providers cannot consume “merchant” without a parallel NFV plan to remove features and states from their equipment.

Strangely enough, once this consumption begins, the control plane and management plane flexibility (mapping control and management 1:1 or 1:N in a merchant underlay) potentially brings us back to SDN (this cycle is illustrated in Fig. 1.10).

Often, the move to NFV and the subsequent realization of merchant platforms, coupled with SDN control, makes the network more access agnostic and leads to an end-to-end re-evaluation of the IP network used for transport. In turn, this leads to new means of translating the network's value to applications, such as Segment Routing.17

Conclusions

Providers are currently announcing the pilot implementations of what are mostly “low hanging fruit” transformations of integrated service platforms emulating existing service offerings.

None of it is yet the transformative service creation engine that portends a true change in operation and competitiveness.

Deployment of the first functions and some NFVI has indeed started, yet NFV standardization is incomplete and its future is now spread across multiple non-ETSI initiatives for more practical definitions—including open source.

The pace of COTS and virtualization innovation is outstripping the ability of any organization to recommend a relevant “best practice” for deployment.

And, while "service chaining" is often conflated with NFV, we have been careful to define NFV as the base case of any service-chained NFV component: a single NFV service is a Service Chain with a single element, connected to a network at least once. As straightforward as this observation may be, getting that base case right is critical to the efficacy of SFC.
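The base-case definition can be captured in a small data model. The following is a minimal Python sketch in which `ServiceElement`, `ServiceChain`, and the element and network names are hypothetical illustrations, not an SFC standard or any controller's API. One type covers both the single-element base case and longer chains, including chains that mix virtual and nonvirtual (physical) elements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceElement:
    name: str
    virtual: bool = True  # False for a physical (nonvirtual) appliance


@dataclass(frozen=True)
class ServiceChain:
    ingress: str                          # network attachment where traffic enters
    elements: tuple[ServiceElement, ...]  # ordered service functions
    egress: str                           # attachment where traffic leaves; may equal ingress

    def __post_init__(self) -> None:
        # Every chain, including the base case, has at least one element.
        if not self.elements:
            raise ValueError("a service chain needs at least one element")

    @property
    def is_base_case(self) -> bool:
        """True for a single NFV service: a chain with exactly one element."""
        return len(self.elements) == 1

    def hops(self) -> list[str]:
        """Ordered points a flow traverses, from ingress to egress."""
        return [self.ingress, *(e.name for e in self.elements), self.egress]


# Base case: one virtual firewall, attached to the same network on both sides.
single = ServiceChain("net-a", (ServiceElement("vFW"),), "net-a")

# A longer chain mixing virtual and nonvirtual elements.
chain = ServiceChain(
    "access",
    (ServiceElement("vFW"), ServiceElement("DPI", virtual=False), ServiceElement("vNAT")),
    "core",
)

print(single.is_base_case, single.hops())  # True ['net-a', 'vFW', 'net-a']
print(chain.is_base_case, chain.hops())    # False ['access', 'vFW', 'DPI', 'vNAT', 'core']
```

Because the single service is just a chain of length one, anything that handles placement, scaling, or monitoring correctly for that case is a prerequisite for handling the multi-element case.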

The NFV improvements currently being leveraged are primarily in scaling services up and down (in situ). We will discuss this further in the chapter on SFC, but for now we hope that we have given you a sufficient and detailed introduction to NFV.

The overall number of functional elements, service chains and the constraints on how those chains are constructed and operate need to be defined and may only be known through trial deployments and experimentation.

However, these chains will have to incorporate both virtual and nonvirtual service elements. There is no ready greenfield for most NFV deployments unless they are incredibly simple monotonic functions.

All the while, behind the scenes, traditional OSS/BSS is not really designed to manage the highly decomposed services of NFV, and the NFV Orchestration/SDN pairings will have to provide a transition. These systems will need to evolve and be adapted to the new future reality of virtualized network services that NFV promises.

More than two years have elapsed since operators began announcements detailing Next Generation business and architecture plans that hinged on NFV.

This discontinuity between readiness (the absence of standards, the apparently incomplete understanding of the complexities in service chaining and OSS integration, and a potential misunderstanding of the actual market) and willingness (planned rapid adoption) leads to the conclusion that the process of "getting NFV right" will be iterative.

Throughout the coming chapters, a number of important questions arise (hopefully reading the book will help you develop your own answers). Here are a few high-level questions around the NFV proposition for the end of our first chapter:

• Are the assumptions and model originally behind NFV still valid?

• How big will the ultimate savings be and how much of the costs will just be shifted?

• With so much unspecified or under-specified, how “fixed” is the architecture of NFV after ETSI and is dogmatic pursuit of its architecture adding any value? Are we “crossing the chasm” too soon? Will the missing definitions from the phase one ETSI model be somehow defined in a later phase in time for consumers to care?

• What will be the role of Open Source software going forward?

• Are network operators REALLY going to change fundamentally?

We cannot promise to answer them all, but we hope to provide some observations of our own and pointers to material that will help you form your own opinions.