1. As described by Arrow and Debreu (1954).

2. A complete market is a market for everything. For instance, a market in which there does not exist, for each company, a series of loans offering all types of contracts at all maturities cannot be a complete market.

3. Pareto optimal: One cannot increase the utility of one agent in the economy without simultaneously reducing the utility of another. A Walrasian market fulfilling Pareto optimality is:

Perfect. That is, agent atomicity, free movement of production factors, no restriction to free trade, product homogeneity, and information transparency.

Efficient. All available information is immediately incorporated in prices.

Complete. Markets exist for all goods, all possible states of the world, and all maturities.

4. Various important authors dealing with banking microeconomics have illustrated this approach: Douglas Diamond, Raghuram Rajan, Franklin Allen, Douglas Gale, etc. See the references at the end of the book.

5. See the surveys in the books by Freixas and Rochet (1997) and de Servigny and Zelenko (1999) and in the articles by Bhattacharya and Thakor (1993) and Allen and Santomero (1999).

6. For further details on this way to split banking microeconomics, see de Servigny and Zelenko (1999).

7. The terms “moral hazard” and “adverse selection” are defined later.

8. The initial article on owner/manager issues is Berle and Means (1932).

9. Instrumental consideration.

10. The market value of a firm is independent of its financial structure and is obtained through expected future cash flows discounted at a rate that corresponds to the risk category of the firm. Modigliani and Miller (1958) assume that markets are perfect: no taxes, no transaction costs, no bankruptcy costs, etc. Another important assumption is that the asset side of a firm’s balance sheet does not change as leverage changes. This rules out moral hazard inside the firm. Asymmetric information pertains to the investor/firm relationship.

11. See Aghion and Bolton (1992).

12. We think specifically of models by Aghion and Bolton (1992) and Dewatripont and Tirole (1994) in a situation of incomplete contracts.

13. A popular example in the literature is about “lemons” (used cars); see Akerlof (1970).

14. This should translate into the entrepreneur’s utility function.

15. See Blazenko (1987) and Poitevin (1989).

16. For example, projects with positive net present value.

17. The notion has been introduced by Myers (1984) and Myers and Majluf (1984).

18. See seminal articles from Holmström (1979), Grossman and Hart (1982), Tirole (1988), Fudenberg and Tirole (1991), and Salanié (1997).

19. See Jensen (1986). The manager overinvests to legitimize his role.

20. A standard debt contract (SDC) is an optimal incentive-compatible contract (Gale and Hellwig, 1985; Townsend, 1979). An incentive-compatible contract is a contract in which the entrepreneur is always encouraged to declare the true return of a project. Such a contract supposes direct disclosure—that is:

The debtor pays the creditor on the basis of a report written by the debtor on the performance of the project.

An audit rule specifies how the report can be controlled. Such a control will be at the expense of the creditor (costly state verification).

A penalty is defined when there is a difference between the report and the audit.

21. See Dionne and Viala (1992, 1994). In a model including asymmetric information both on the return of a project and on the entrepreneur’s involvement, they show that an optimal loan contract contains a bonus contract based on an observable criterion of effort intensity.

22. Banking theory includes a dynamic perspective between the monitor and the debtor. As soon as we operate in a dynamic environment, the appropriate framework becomes incomplete contracts.

23. Entrepreneur, debtor, creditor, shareholder, etc.

24. This approach to incomplete contracts and contingent allocation of control rights has been developed by Grossman and Hart (1982), Holmström and Tirole (1989), Aghion and Bolton (1992), Dewatripont and Tirole (1994), and Hart and Moore (1998).

25. Aghion and Bolton (1992) observe that debt is not the only channel to reallocate control rights. If, for example, it is efficient to give control to the entrepreneur despite low first-period returns and to the investors even though first-period returns are stronger than expected, then the ideal type of contract would be debt convertible to equity.

26. They mainly focused on bank runs.

27. The liquidation cost is assumed to be zero.

28. For instance, a cost linked with providing incentive to the top management.

30. These very significant categories have been introduced by Niehans (1978).

31. Santomero (1995) mentions three other incentives for reducing profit volatility: benefits for the managers, tax issues, and deterrent costs linked with bankruptcy.

32. Froot and Stein (1998) explain that risk management should focus on pricing policy particularly for illiquid assets, as a bank should always try to bring back liquid ones to the market.

33. Chang (1990) finds Diamond’s results in an intertemporal scenario.

34. The case of short-term debt with repeated renegotiation has been covered by Boot, Thakor, and Udell (1987) and Boot and Thakor (1991). Rajan (1992) shows that when a loan includes renegotiation, the bank is able to extract additional economic surplus.

35. Mookherjee and P’ng (1989) show that when the entrepreneur is risk-averse and the bank is risk-neutral, optimal monitoring consists of random audits.

36. In Stiglitz and Weiss (1981) credit rationing can occur when banks do not coordinate themselves. For an example of credit rationing, see Appendix 1A.

37. The topic of informational rents has been developed in Sharpe (1990). Ex ante, banks tend to set low prices in order to attract new customers and then increase prices sharply once the customer is secured. Von Thadden (1990) shows that when debtors can anticipate such a move, they tend to focus primarily on short-term lending to reduce their dependency on the bank. Such an approach can be damaging for the whole economy, as it tends to set a preference for short-term projects over long-term ones. Rajan (1996) believes that universal banks tend to avoid extracting surplus from their customers too quickly in order to secure their reputation with their customers and therefore sell them their whole range of financial products.

38. Many academic authors tend to acknowledge this point, such as Allen and Santomero (1999) and Diamond and Rajan (1999).

39. As these types of large firms typically have a relationship with several banks/lenders, the argument of efficiency from bank monitoring vis-à-vis multiple lenders presented in Diamond (1984) can be used in the same way in considering a rating agency vis-à-vis a large number of banks.

40. Boot and Thakor (1991) try to identify the best split between bank and market roles. They find that markets are more efficient in dealing with adverse selection than banks, especially when industries require a high level of expertise. But banks are better at reducing moral hazard through monitoring. Their model does not reflect the impact of reputation.

41. See Kaplan and Stromberg (2000).

1. We use the more common term “rating agencies,” rather than “rating organizations,” bearing in mind, however, that they are not linked with any government administration.

2. The presentation of the rating process is based on Standard & Poor’s corporate rating criteria, 2000.

3. A notching down may be applied to junior debt, given relatively worse recovery prospects. Notching up is also possible.

4. Quantitative, qualitative, and legal.

5. This table is for illustrative purposes and may not reflect the actual weights and factors used by one agency or another.

6. For some industries, observed long-term default rates can differ from the average figures. This type of difference can be explained by major business changes, for example regulatory changes within the industry. Statistical effects such as a sample that is too small and nonrepresentative can also bias results.

7. Mainly the United States, but also including Europe and Japan.

8. Obligor-specific, senior unsecured ratings data from Moody’s. Changes in the rated universe should be noted:

1970: 98 percent U.S. firms (utilities, 27.8 percent; industries, 57.9 percent; banks, very low).

1997: 66 percent U.S. firms (utilities, 9.1 percent; industries, 59.5 percent; banks, 15.8 percent).

9. When working on the same time period, the two transition matrices obtained were similar.

10. This dependence has been incorporated in the credit portfolio view model (see Chapter 6).

11. A Markov chain is defined by the fact that the information known at (t − 1) and used in the chain is sufficient to determine the value at (t). In other words, it is not necessary to know the entire past path in order to obtain the value at (t).
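One practical consequence of the Markov property is that a multiyear transition matrix can be obtained by multiplying the one-year matrix by itself. The following is a minimal sketch under stated assumptions: the three-state chain (A, B, default) and all its probabilities are hypothetical, chosen only for illustration, and the chain is assumed to be time-homogeneous.

```python
def mat_mul(a, b):
    """Multiply two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def n_year_matrix(one_year, years):
    """Raise the one-year transition matrix to the given power."""
    n = len(one_year)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(years):
        result = mat_mul(result, one_year)
    return result

# Hypothetical one-year matrix; rows and columns are A, B, default.
# Default is treated as an absorbing state.
ONE_YEAR = [
    [0.90, 0.08, 0.02],
    [0.10, 0.80, 0.10],
    [0.00, 0.00, 1.00],
]

two_year = n_year_matrix(ONE_YEAR, 2)
# Two-year default probability of an A-rated firm:
# 0.90 * 0.02 + 0.08 * 0.10 + 0.02 * 1.00 = 0.046
print(round(two_year[0][2], 6))
```

Only the current rating row is needed to read off the two-year probabilities, which is exactly what the note describes.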

12. Monotonicity rule: Probabilities are decreasing when the distance to the diagonal of the matrix increases. This property is a characteristic derived from the trajectory concept: Migrations occur through regular downgrades or upgrades rather than through a big shift.
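The monotonicity rule can be checked mechanically on any transition matrix. The sketch below is one possible reading of the rule (probabilities must not increase as one moves away from the diagonal within a row); the function name and both example matrices are hypothetical.

```python
def is_monotone(matrix):
    """Return True if, in every row, probabilities never increase
    when moving away from the diagonal in either direction."""
    for i, row in enumerate(matrix):
        for j in range(i, len(row) - 1):   # moving right of the diagonal
            if row[j + 1] > row[j]:
                return False
        for j in range(i, 0, -1):          # moving left of the diagonal
            if row[j - 1] > row[j]:
                return False
    return True

# Hypothetical matrices, for illustration only.
REGULAR = [
    [0.90, 0.07, 0.03],
    [0.10, 0.80, 0.10],
    [0.02, 0.08, 0.90],
]
WITH_JUMP = [
    [0.90, 0.03, 0.07],   # a two-notch move is more likely than a one-notch move
    [0.10, 0.80, 0.10],
    [0.02, 0.08, 0.90],
]

print(is_monotone(REGULAR), is_monotone(WITH_JUMP))
```

Empirical matrices may fail such a strict check in the rows closest to default, so in practice the test would probably be applied with some tolerance.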

13. See Chapter 6 for a review of this model.

14. “Rating Migration and Credit Quality Correlation 1920–1996,” a study largely based on the U.S. universe.

15. For this reason, several authors have tried to define “good or generally accepted rating principles” (see, for example, Krahnen and Weber, 2001).

16. Portfolio models that are based on internal ratings as inputs, but that rely on external information (often based on information from rating agencies) to determine ratings volatility, tend to bias their results significantly.

17. The two graphs represent the concentration or dispersion of probabilities across transition matrices.

18. And where it is difficult to define criteria because of the limited size of the sample within the bank.

19. A large sample with a sufficient number of defaults is necessary to reach reasonable accuracy. When the number of rating classes increases, the number of necessary observations increases too.

20. The fact that for AAA the number of years is lower only reflects a very limited and unrepresentative sample.

21. Basel II requires 5 years of history, but a lot of banks have less data.

22. See, for example, Allen and Saunders (2002), Borio, Furfine, and Lowe (2002), Chassang and de Servigny (2002), Danielsson et al. (2001), and Strodel (2002).

23. For very good ratings, a longer observation period is required, given the scarcity of defaults.

24. EL (expected loss) = EAD (exposure at default) × PD (probability of default) × LGD (loss given default)
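As a quick worked example of this identity, the sketch below computes the expected loss for a single exposure. The function name and the input figures (an exposure of 1,000,000, a 2 percent PD, and a 45 percent LGD) are hypothetical.

```python
def expected_loss(ead, pd, lgd):
    """EL = EAD x PD x LGD, with PD and LGD expressed as fractions."""
    if not 0.0 <= pd <= 1.0 or not 0.0 <= lgd <= 1.0:
        raise ValueError("PD and LGD must lie between 0 and 1")
    return ead * pd * lgd

# Hypothetical exposure: 1,000,000 at default, 2% PD, 45% LGD.
el = expected_loss(1_000_000, 0.02, 0.45)
print(round(el, 2))  # about 9,000
```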

25. Von Thadden (1990).

26. Continuous/discrete.

27. Rating agencies have also complemented their rating-scale choice with dynamic information such as “outlooks.”

28. Note that a generator does not always exist. Israel, Rosenthal, and Wei (2001) identify the conditions for the existence of a generator. They also show how to obtain an approximate generator when a “true” one does not exist.

29. This property means that the current rating is sufficient to determine the probability of terminating in a given rating class at a given horizon. Previous ratings or other past information is irrelevant.

30. Kijima (1998) provides technical conditions for transition matrices to capture the rating momentum and other stylized desirable properties such as monotonicities.

31. BBBu, BBBs, and BBBd denote BBB-rated firms that were upgraded (to BBB), had a stable rating, or were downgraded over the previous period.

32. In terms of mean default rate.

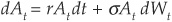

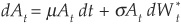

1. A geometric Brownian motion is a stochastic process with lognormal distribution. µ is the growth rate, while σv is the volatility of the process. Z is a standard Brownian motion whose increments dZ have mean zero and variance equal to time. The term µV dt is the deterministic drift of the process, and the other term σvV dZ is the random volatility component. See Hull (2002) for a simple introduction to geometric Brownian motion.
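Written out, the two terms described in this note give the following dynamics. The closed-form solution on the right is the standard result for a geometric Brownian motion; the symbols $V_0$ (initial value) and $T$ (horizon) are notation assumed here for illustration.

```latex
dV = \mu V\,dt + \sigma_V V\,dZ
\quad\Longrightarrow\quad
V_T = V_0 \exp\!\left[\left(\mu - \tfrac{1}{2}\sigma_V^{2}\right)T + \sigma_V Z_T\right],
\qquad Z_T \sim N(0,\,T)
```

Because $\ln V_T$ is normally distributed, $V_T$ is lognormal, which is the property stated at the start of the note.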

2. We drop the time subscripts to simplify notations.

3. Recent articles and papers focus on the stochastic behavior of this default threshold. See, for example, Hull and White (2000) and Avellaneda and Zhu (2001).

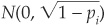

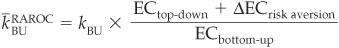

4. This is the probability under the historical measure. The risk-neutral probability is  , as described in the equity pricing formula noted earlier.

5. For businesses with gross sales of less than $5 million and loan face values up to $250,000.

6. Loans under $100,000.

7. Front-end users are salespeople who look for a fast and robust model that performs well for new customers. These new customers may have slightly different characteristics from those customers already in the database that was used to calibrate the model.

8. Linear discriminant analysis assumes an ellipsoidal distribution of factors. In practice, however, experience shows that linear discriminant analysis performs well without any distributional assumptions.

9. For variables selection, see Appendix 3B.

10. Logistic analysis is also optimal if a multivariate normal distribution is assumed. However, linear discriminant analysis is more efficient than logistic regression if the distributions are really ellipsoidal (e.g., multivariate normal), and logistic regression is less biased if they are not.

11. ROC and other classification performance measures are explained later.

12. In this case, xi has to be positive.

13. The k-nearest neighbor (kNN) methodology can also be seen as a particular kernel approach. The appeal of kNN is that the equivalent kernel width varies according to the local density.
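A minimal kNN classifier can be sketched as follows. The training points, expressed as (ROA, leverage) pairs, and their labels are hypothetical. The distance to the k-th neighbor plays the role of the kernel width, so the width shrinks automatically where observations are dense.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of (features, label) pairs; distances are Euclidean.
    """
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical (ROA, leverage) observations, for illustration only.
TRAIN = [
    ((0.08, 0.30), "non-default"),
    ((0.10, 0.25), "non-default"),
    ((0.06, 0.40), "non-default"),
    ((-0.02, 0.85), "default"),
    ((0.00, 0.90), "default"),
    ((-0.05, 0.75), "default"),
]

print(knn_predict(TRAIN, (0.01, 0.80)))
print(knn_predict(TRAIN, (0.07, 0.35)))
```

In practice the variables would normally be rescaled first, since raw Euclidean distance is dominated by whichever variable has the largest range.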

14. Class-conditional distributions and a priori distributions are identified.

15. For a more detailed analysis of these techniques, we recommend Webb (2002) and references therein.

16. The key point about support vector machines is that they find the best separating hyperplane in a space of very high dimensionality obtained by transforming and combining the raw predictor variables. Methods using the best separating hyperplane have been around since the late 1950s. (See, e.g., the literature on perceptrons, including the book by Minsky and Papert, 1988.)

17. In our example there are only two variables (ROA and leverage). Therefore the hyperplane boils down to a line.

18. ||w|| denotes the norm of vector w.

19. For example, Blochwitz, Liebig, and Nyberg (2000).

20. Compared with the area corresponding to a “perfect model.”

21. To give an example of unequal misclassification costs, let us consider someone who is sorting mushrooms. Classifying a poisonous mushroom in the edible category is far worse than throwing away an edible mushroom.
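The mushroom example can be turned into a simple expected-cost rule: choose the action whose expected misclassification cost is lower. The sketch below is illustrative only; the function name, the probability, and the cost figures are hypothetical.

```python
def cheapest_decision(p_poisonous, cost_eat_poisonous, cost_discard_edible):
    """Pick the action with the lower expected misclassification cost."""
    expected_cost_keep = p_poisonous * cost_eat_poisonous
    expected_cost_discard = (1.0 - p_poisonous) * cost_discard_edible
    return "discard" if expected_cost_discard < expected_cost_keep else "keep"

# With a 5% chance of poison and a cost ratio of 1000 to 1, discarding is cheaper.
print(cheapest_decision(0.05, 1000.0, 1.0))
# With equal costs, the same mushroom would be kept.
print(cheapest_decision(0.05, 1.0, 1.0))
```

The same logic applies to credit scoring, where accepting a future defaulter typically costs far more than rejecting a sound borrower.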

22. The ROC convex hull (ROCCH) decouples classifier performance from specific class and cost distribution by determining an efficient frontier curve corresponding to minimum error rate, whatever the class distribution is (see Provost and Fawcett, 2000).

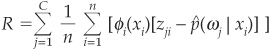

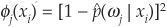

23. Hand (1994, 1997) has focused on reliability. He called it imprecision. His observation is that knowing that p(ωi|x) is the greatest is not sufficient. Being able to compare the true posterior probability p(ωi|x) with the estimated one $\hat{p}(\omega_i|x)$ is also very significant in order to evaluate the precision of the model. Hand (1997) suggests a statistical measure of imprecision, for a given sample:

$$R = \frac{1}{n}\sum_{j=1}^{C}\sum_{i=1}^{n}\varphi_j(x_i)\,\bigl[z_{ji} - \hat{p}(\omega_j|x_i)\bigr]$$

where $z_{ji} = 1$ if $x_i \in \omega_j$ and 0 otherwise. φj is a function that determines the test statistic, for example $\varphi_j(x_i) = \bigl(1 - \hat{p}(\omega_j|x_i)\bigr)^2$.

24. See D’Agostino and Stephens (1986).

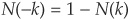

25.

converges to  as N tends to ∞ with

and therefore

converges toward a

26. A utility function U (W) is a function that maps an agent’s wealth W to the satisfaction (utility) she can derive from it. It is a way to rank payoffs of various actions or investment strategies. Utilities are chosen to be increasing (so that more wealth is associated with more utility) and concave (the utility of one extra dollar when the agent is poor is higher than when she is rich).
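Logarithmic utility is one common choice that is both increasing and concave. The sketch below uses it purely as an illustration (the note does not prescribe a functional form) and compares the utility gain from one extra dollar at two hypothetical wealth levels.

```python
import math

def log_utility(wealth):
    """Logarithmic utility: increasing and concave for positive wealth."""
    return math.log(wealth)

# Utility gained from one extra dollar at two hypothetical wealth levels.
gain_poor = log_utility(1_001) - log_utility(1_000)
gain_rich = log_utility(1_000_001) - log_utility(1_000_000)

print(gain_poor > gain_rich > 0)
```

Both gains are positive (more wealth, more utility), and the gain is larger at the lower wealth level, which is what concavity means.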

27. Variables with subscripts such as py, Oy, or by are the scalar constituents of vectors p, O, and b.

28. These can be derived analytically in some cases but depend on q (the vector of qy).

29. The relative performance measure becomes the logarithm of the likelihood ratio for two models.

30. Calibration describes how predicted PDs correspond to effective outcomes.

31. The standard deviation of the rating distributions over several years shows a peak in the subinvestment-grade part (see Figure 3-17b).

32. Galindo and Tamayo (2000) show that for a fixed-size training sample, the speed of convergence of out-of-sample error can define a criterion.

33. One thing to bear in mind is that the error estimate of a classifier, being a function of the training and testing sets, is a random variable.

34. Vapnik (1998) says that the optimal prediction for a given model is achieved when the in-sample error is close to the out-of-sample error.

35. The apparent error rate is the error rate obtained by using the design set.

36. This is particularly interesting when the underlying sample is limited.

37. The problem with this type of solution is that in the end it is very easy to remove defaulting companies, considered as outliers.

38. As obtaining a clear and stable definition of misclassification costs can prove difficult, a way to compensate for this is to maximize the performance of the classifier. The ROC convex hull decouples classifier performance from specific class and cost distribution by determining an efficient frontier curve corresponding to the minimum error rate, whatever the class distribution is. (See Provost and Fawcett, 2000.)

1. See, for example, Jensen (1991).

2. Manove, Padilla, and Pagano (2001).

3. Jackson (1986), Berglof et al. (1999), Hart (1995), Hart and Moore (1998a), and Welch (1997).

4. Franks and Sussman (2002).

5. Elsas and Krahnen (2002).

6. In this respect we recommend access to the document “A Review of Company Rescue and Business Reconstruction Mechanisms—Report by the Review Group” from the Department of Trade and Industry and HM Treasury, May 2000.

7. Such as U.S. Chapter 11.

8. This type of measurement can be performed even if information on all the underlying collateral is not precisely known. It can be estimated.

9. Default is defined as interest or principal past due.

10. Before moving on to reviewing what we think are the key drivers of recovery rates, we want to stress that all the recovery rates considered here do not include the cost of running a bank’s distress unit. Statistics shown in this chapter and in most other studies therefore tend to underestimate the real cost of a default for a bank.

11. Debt cushion—how much debt is below a particular instrument in the balance sheet.

12. $H = \sum_{i} s_i^2$, where $s_i$ corresponds to the market share of firm $i$.

13. The negative link is, however, captured in Merton’s (1974) firm value–based model. When the value of the firm decreases, the probability of default increases and the expected recovery rate falls.

14. A similar graph can be found in Frye (2000b).

15. To go into more detail, collateral types include cash, equipment, intellectual property, intercompany debt, inventories, receivables, marketable securities, oil and gas reserves, real estate, etc.

16. The model Portfolio Risk Tracker presented in Chapter 6 allows for a dependence of the value of collateral on systematic factors. This is a significant step toward linking PDs and LGDs.

17. By Gilson (1990), Gilson, John, and Lang (1990), and Franks and Torous (1993, 1994).

18. Get hold of the equity.

19. In Spain, for example, the bankruptcy rate is 0.5 percent in the SME sector. This is abnormally low and suggests that most defaults lead to private settlements.

20. Portfolio losses are measured by credit value at risk. See Chapter 6 on portfolio models for an introduction to this concept.

21. PD and LGD are assumed uncorrelated.

22. The recovery rate in CreditRisk+ is, however, assumed to be constant.

23. There does not exist any algebraic solution of the maximum-likelihood equations, but several approximations have been proposed in the literature (see, e.g., Balakrishnan, Johnson, and Kotz, 1995).

24. This is not only a theoretical exercise. Asarnow and Edwards (1995), for example, report a bimodal distribution for the recovery rates on defaulted bank loans.

25. Beta kernels have been introduced by Chen (1999) in the statistics literature.

26. The experiment is carried out as follows: We draw 10,000 independent random variables from the “true” bimodal distribution, which is a mixture of two beta distributions, and we apply the beta kernel estimator.
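The experiment can be sketched as follows. The mixture weights, the two beta parameter pairs, and the bandwidth are hypothetical, since the note does not report them. The estimator is the first beta kernel estimator of Chen (1999), which evaluates a Beta(x/b + 1, (1 − x)/b + 1) density at each observation and averages the results.

```python
import math
import random

def beta_pdf(x, a, b):
    """Density of a Beta(a, b) distribution at x in (0, 1)."""
    x = min(max(x, 1e-12), 1.0 - 1e-12)  # guard against log(0)
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1.0) * math.log(x)
                    + (b - 1.0) * math.log(1.0 - x))

def draw_bimodal_sample(n, rng, weight=0.5, low=(2.0, 8.0), high=(8.0, 2.0)):
    """Draw n values from a mixture of two beta distributions (hypothetical parameters)."""
    return [rng.betavariate(*low) if rng.random() < weight
            else rng.betavariate(*high) for _ in range(n)]

def beta_kernel_density(x, sample, bandwidth):
    """Beta kernel estimate of the density at x, for x in [0, 1]."""
    a = x / bandwidth + 1.0
    b = (1.0 - x) / bandwidth + 1.0
    return sum(beta_pdf(obs, a, b) for obs in sample) / len(sample)

rng = random.Random(0)                       # fixed seed for reproducibility
sample = draw_bimodal_sample(10_000, rng)    # 10,000 draws, as in the note
estimates = {x: beta_kernel_density(x, sample, bandwidth=0.02)
             for x in (0.125, 0.5, 0.875)}
for x, density in estimates.items():
    print(x, round(density, 3))
```

The estimated density should be visibly higher near the two modes than in the middle of the unit interval, reproducing the bimodal shape.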

27. Their optimization criterion is based on an “excess wealth growth rate” performance measure, close to maximum likelihood. It is described in Chapter 2.

28. The 1993–2003 Japanese crisis of the banking system stands as a good example for such risk of correlation between default rates and real estate–based recovery rates.

29. In the Basel II framework, loan-to-value requirements are specified.

30. Based on 37,259 defaulted lease contracts.

31. Data from 12 leasing companies.

1. Correlation is directly related to the covariance of two variables.

2. Correlation here refers to factor correlation. The graph in Figure 5-1 was created using a factor model of credit risk and assuming that there are 100 bonds in the portfolio and that the probability of default of all bonds is 5 percent. More details on the formula used to calculate the probabilities of default in the portfolio are provided in Appendix 5A.
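The effect of factor correlation on a portfolio like the one described here (100 bonds, 5 percent default probability) can be illustrated with a one-factor Gaussian model. This is an assumption made for the sketch: the formula in Appendix 5A may differ in its details, and the function names, the correlation of 0.20, and the integration grid are hypothetical.

```python
from math import comb, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution

def conditional_pd(pd, rho, z):
    """Default probability given the common factor z (assumed one-factor Gaussian model)."""
    threshold = STD_NORMAL.inv_cdf(pd)
    return STD_NORMAL.cdf((threshold - sqrt(rho) * z) / sqrt(1.0 - rho))

def default_count_distribution(n_bonds, pd, rho, grid=2001, z_max=8.0):
    """P(k defaults) for k = 0..n_bonds, integrating the factor out on a grid."""
    step = 2.0 * z_max / (grid - 1)
    binomial = [comb(n_bonds, k) for k in range(n_bonds + 1)]
    probs = [0.0] * (n_bonds + 1)
    for g in range(grid):
        z = -z_max + g * step
        weight = STD_NORMAL.pdf(z) * step
        p = conditional_pd(pd, rho, z)
        # Conditional on z, defaults are independent, hence binomial.
        for k in range(n_bonds + 1):
            probs[k] += binomial[k] * p**k * (1.0 - p) ** (n_bonds - k) * weight
    return probs

def tail_probability(probs, k_min):
    """Probability of at least k_min defaults."""
    return sum(probs[k_min:])

independent = default_count_distribution(100, 0.05, rho=0.0)
correlated = default_count_distribution(100, 0.05, rho=0.20)
print(tail_probability(independent, 15), tail_probability(correlated, 15))
```

The expected number of defaults is the same in both cases (about 5), but the probability of 15 or more defaults is much larger when the factor correlation is positive.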

3. Embrechts et al. (1999a, 1999b) give a very clear treatment of the limitations of correlations.

4. X and Y are comonotonic if we can write $Y = G(X)$, where G(.) is an increasing function. They are countermonotonic if G(.) is a decreasing function.

5. An in-depth analysis of copulas can be found in Nelsen (1999).

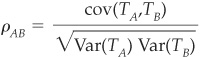

6. Rather than considering the correlation of default events, Li (2000) introduced the notion of correlation of times-until-default for two assets A and B:

$$\rho_{AB} = \frac{\operatorname{cov}(T_A, T_B)}{\sqrt{\operatorname{var}(T_A)\,\operatorname{var}(T_B)}}$$

where $T_A$, $T_B$ correspond to the survival times of assets A and B. Survival-time correlation offers significant empirical perspectives regarding default and also rating migration events.

7. A 1-year default correlation involving AAA issuers cannot be calculated as there has never been any AAA-rated company defaulting within a year.

8. Appendix 5A provides more details on factor models of credit risk. Commercial models of portfolio credit risk are reviewed in Chapter 6.

9. Recall from the earlier section on copulas that we could choose other bivariate distributions while keeping Gaussian marginals.

10. The AAA curve cannot be computed as there has never been an AAA default within a year.

11. This is also consistent with the empirical findings presented in the following paragraph.

12. In distributions with little tail dependency (e.g., the normal distribution), correlations are concentrated in the middle of the distribution. Thus correlations decrease very steeply as one considers higher exceedance levels. In general, the flatter the exceedance correlation line, the higher the tail dependency.

1. Some of those are provided on a regular basis by RiskMetrics.

2. CreditMetrics relies on equity index correlations as proxies for factor correlations. The correlation matrix is supplied by RiskMetrics on a regular basis.

3. A database from Standard & Poor’s Risk Solutions.

4. Unlike CreditMetrics, Portfolio Manager does not consider equity index correlation but tries to capture asset correlation.

5. All these factors are diagonalized in order to reduce the dimensionality of the problem.

6. In the Asset Manager’s version of the product.

7. Useful references on this topic are Rogers and Zane (1999) and Browne et al. (2001a, 2001b).

8. A risk measure ρ is said to be coherent according to Artzner et al. (1999) if it satisfies four properties:

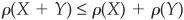

Subadditivity, for all bounded random variables X and Y: $\rho(X + Y) \le \rho(X) + \rho(Y)$.

Monotonicity, for all bounded variables X and Y, such that $X \le Y$: $\rho(X) \ge \rho(Y)$.

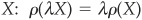

Positive homogeneity, for all $\lambda \ge 0$ and for all bounded variables $X$: $\rho(\lambda X) = \lambda\,\rho(X)$.

Translation invariance, for all $\alpha \in \mathbb{R}$ and for all bounded variables $X$: $\rho(X + \alpha r_f) = \rho(X) - \alpha$, where $r_f$ corresponds to a riskless discount factor.

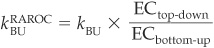
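Subadditivity is the property most often violated in practice. A classic illustration, sketched below, uses value at risk on two independent bonds. The choice of value at risk as the risk measure, the 4 percent default probability, the loss of 100, and the 95 percent confidence level are assumptions made for this example and do not come from the note.

```python
from itertools import product

def value_at_risk(loss_distribution, confidence):
    """Smallest loss level whose cumulative probability reaches `confidence`."""
    cumulative = 0.0
    for loss, prob in sorted(loss_distribution.items()):
        cumulative += prob
        if cumulative >= confidence - 1e-12:
            return loss
    return max(loss_distribution)

def sum_of_independent(dist_a, dist_b):
    """Loss distribution of the sum of two independent discrete losses."""
    total = {}
    for (loss_a, p_a), (loss_b, p_b) in product(dist_a.items(), dist_b.items()):
        total[loss_a + loss_b] = total.get(loss_a + loss_b, 0.0) + p_a * p_b
    return total

# Hypothetical bond: loses 100 with probability 4%, nothing otherwise.
BOND = {0.0: 0.96, 100.0: 0.04}

var_single = value_at_risk(BOND, 0.95)
var_portfolio = value_at_risk(sum_of_independent(BOND, BOND), 0.95)
print(var_single, var_portfolio)
```

Each bond alone has a 95 percent value at risk of zero, yet the two-bond portfolio has a value at risk of 100, because the probability of at least one default (about 7.8 percent) exceeds 5 percent. The measure of the sum exceeds the sum of the measures, so subadditivity fails.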

9. RAROC was introduced by Bankers Trust in the late 1970s and adopted in one form or another by the entire financial community.

10. As defined by the NBER.

11. These figures correspond to the mean and standard deviation of the loss rate for senior unsecured bonds reported in Keisman and Van de Castle (1999).

12. In this example we are ignoring country effects. Country factors could also be incorporated in the same way.

13. Recall that asset returns are scaled to have unit variance.

14.  in the summation means that we are summing over all exposures having size vj L—that is, all exposures in bucket j.

15. Subscripts i now denote specific bonds in the portfolio.

16. For a thorough discussion of Fourier transforms, see, for example, Brigham (1988).

1. From the facility level to the bank level (see Figure 7-1).

2. Within banks, the most common use of such bottom-up techniques has instead focused on sensitivity analyses, linked to back testing and stress testing.

3. See, for example, Crouhy, Galai, and Mark (2001).

4. Such an exclusive focus on the confidence level for the calculation of economic capital may reduce the incentive for the bank to integrate in its internal system the monitoring of what is precisely followed by rating agencies.

5. The converse proposition is also true.

6. De Servigny (2001, p. 122) lists 10 different horizons that could alternatively be retained for various reasons.

7. More than what equity-driven correlation models would suggest.

8. Capital, and in particular equity, is a buffer against shocks, but capital is also the fuel for the core activity of the bank: lending money.

9. “In summary, the optimal capital structure for a bank trades off three effects of capital—more capital increases the rent absorbed by the banker, increases the buffer against shocks, and changes the amount that can be extracted from borrowers. . . . financial fragility is essential for banks to create liquidity” (Diamond and Rajan, 2000).

10. Business that is kept on the book of the bank.

11. Coupon minus funding costs, minus expected loss.

12. Leland (1997) shows how an adjusted beta should be calculated when asset returns are not normally distributed.

13. In what follows, we use Turnbull (2002) notations.

14. What is considered the current value has to be clearly defined. Several options are possible: it could be a market value, a fair value, an accounting value, etc.

15. See Ross (1976).

16. There is correlation between banks, but not between business units. Returns of business units are taken as independent correlation factors. Surprisingly, the level of correlation seems to be time-independent.

17. This assumes that the cost of capital for each of the business units of the bank is observed.

18. An idea could be to have the NAV distribution for each business partially depend on exogenous macrofactors in the context of a multifactor model.

19. From a theoretical standpoint it is sensible, but there are strong technical constraints associated with the rigidity of costs within the bank.

20. They do not consider the impact of expected loss.

22. Corresponding to the requirement of the shareholders.

23. Such a measure looks at a facility level only, with the myopic bias of considering a static balance sheet, and not at a business level where the balance sheet is dynamic.

24. Based on the fact that the amount of economic capital that should be allocated to such business units is typically very low, as no credit risk, market risk, or operational risk is incurred.

25. This case can happen if, for example, the presence of a business unit within a bank damages the whole value of this bank. Practically, let us consider a large retail bank that owns a small investment bank business unit. The investment bank division is not profitable. Because of the uncertainty generated by this business unit, the price-earnings ratio of the bank is significantly lower than that of its peers.

26. Or alternatively

27. Or alternatively

28. See Froot and Stein (1998).

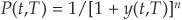

1. If time to maturity $(T - t)$ corresponds to $n$ years and a discrete interest rate compounding is adopted, we could use $P(t,T) = 1/(1+y)^n$, where y is the discretely compounded yield.

2. For example, the German firm Daimler may have issued a bond with maturity 3/20/2010, which would be associated with a Bund maturing 3/31/2010.

3. Some further adjustments would have to be made to incorporate option features.

4. See, for example, Sundaresan (1997).

5. And therefore also bond prices using Equation (8-2).

6. See, e.g., Garbade (1996).

7. http://www.federalreserve.gov.

8. September 14 was the first trading day after the tragedy.

9. The vega (or kappa) of an option is the sensitivity of the option price to changes in the volatility of the underlying. The vega is higher for options near the money, i.e., when the price of the underlying is close to the exercise price of the option (see, for example, Hull, 2002).
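The claim that vega peaks near the money can be checked numerically. The sketch below assumes the Black-Scholes setting for a European option on a non-dividend-paying underlying, which the note does not specify; the strike, rate, volatility, and maturity are hypothetical.

```python
from math import exp, log, pi, sqrt

def bs_vega(spot, strike, rate, vol, maturity):
    """Black-Scholes vega of a European option (no dividends assumed)."""
    d1 = (log(spot / strike) + (rate + 0.5 * vol**2) * maturity) / (vol * sqrt(maturity))
    density = exp(-0.5 * d1**2) / sqrt(2.0 * pi)  # standard normal density at d1
    return spot * density * sqrt(maturity)

# Hypothetical option: strike 100, 3% rate, 20% volatility, one year to expiry.
for spot in (60.0, 100.0, 160.0):
    print(spot, round(bs_vega(spot, 100.0, 0.03, 0.20, 1.0), 2))
```

The at-the-money vega is many times larger than the vega of the deep in-the-money or deep out-of-the-money option.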

10. Appendix 8C provides a summary of intensity-based models of credit risk.

11. See Appendix 8B for a brief introduction to this concept.

12. Their specification is actually in discrete time. This stochastic differential equation is the equivalent specification in continuous time.

13. Models estimated by PRS are under the historical measure and cannot be directly compared with the risk-neutral processes discussed earlier.

14. Brady bonds are securities issued by developing countries as part of a negotiated restructuring of their external debt. They were named after U.S. Treasury Secretary Nicholas Brady, whose plan aimed at permanently restructuring outstanding sovereign loans and arrears into liquid debt instruments. Brady bonds have a maturity of between 10 and 30 years, and some of their interest payments are guaranteed by a collateral of high-grade instruments (typically the first three coupons are secured by a rolling guarantee). They are among the most liquid instruments in emerging markets.

15. A more thorough investigation of this case can be found in Anderson and Renault (1999).

16. We assume the investor has a long position in the security.

17. We will use interchangeably the terms “structural model,” “firm value–based model,” or “equity-based model.”

18. A structural model will enable one to price debt and equity. If equity data are available and if the focus is on bond pricing, then one can use the volatility that fits the equity price perfectly into the bond pricing formulas.

19. We can nonetheless mention Wei and Guo (1997), who compare the performance of the Longstaff and Schwartz (LS, 1995a) and Merton (1974) models on Eurodollar bond data. Although more flexible, the LS model does not seem to enable a better calibration of the term structure of spreads than Merton’s model.

20. Strategic models are a subclass of structural models in which debt and equity holders are engaged in strategic bargaining. In the presence of bankruptcy costs, equity holders may force bondholders to accept a debt service lower than the contractual flow of coupons and principal. If the firm value deteriorates, it may indeed be more valuable for the debt holders to accept a lower coupon rather than force the firm into liquidation and have to pay bankruptcy costs. For an example of strategic models, see Anderson and Sundaresan (1996).

21. Two measures are said to be equivalent when they share the same null sets, i.e., when all events with zero probability under one measure have also zero probability under the other.

22. A martingale is a driftless process, i.e., a process whose expected future value conditional on its current value is the current value. More formally: $E[X_s \mid X_t] = X_t$ for $s \geq t$.

23. This appendix is inspired by the excellent survey by Schönbucher (2000).

24. For Δt sufficiently small, the probability of multiple jumps is negligible.

25. Given that  and  .

26. We drop the time subscripts in $r_t$ and $\lambda_t$ to simplify notation.

27. See Schönbucher (2000) for details of the steps.

28. Loosely speaking, the matrix of intensities.

29. See Moraux and Navatte (2001) for pricing formulas for this type of option.

30. Or identically that recovery occurs at the time of default but is a fraction δ of a T-maturity riskless bond.

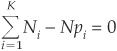

31. i, h,  , and  denote, respectively, the rating, the horizon, the number of bonds rated i in year t that defaulted h years after t, and the number of bonds in rating i at the beginning of the period t. The rating system has K + 1 categories, with 1 being the best quality and K + 1 the default class.

32. That is, we have  under Q and  under P.

33. We drop the superscript i in the probabilities for notational convenience.
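The statement in note 9 that vega is highest for options near the money can be checked numerically. The sketch below assumes the standard Black-Scholes setting (European option, no dividends, constant volatility), which the note itself does not specify; the function name `bs_vega` and all input values are illustrative assumptions.

```python
import math


def bs_vega(spot, strike, vol, maturity, rate=0.0):
    """Black-Scholes vega: sensitivity of the option price to volatility.

    Assumes a European option on a non-dividend-paying underlying.
    The value is identical for calls and puts.
    """
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / (
        vol * math.sqrt(maturity)
    )
    # Standard normal density evaluated at d1.
    pdf_d1 = math.exp(-0.5 * d1 ** 2) / math.sqrt(2.0 * math.pi)
    return spot * pdf_d1 * math.sqrt(maturity)


# Hypothetical inputs: strike 100, 20% volatility, 1 year to maturity.
strike = 100.0
vegas = {
    spot: bs_vega(spot, strike, vol=0.2, maturity=1.0)
    for spot in (60.0, 80.0, 100.0, 120.0, 140.0)
}

for spot, vega in vegas.items():
    print(f"spot={spot:6.1f}  vega={vega:6.2f}")
```

Under these assumptions, vega falls off as the underlying moves away from the exercise price in either direction.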

1. Securitization is the process by which a pool of assets is gathered to back up the issuance of bonds. These bonds are called asset-backed securities. The supporting assets can be anything from mortgages to credit card loans, bonds, or any other type of debt.

2. International Swaps and Derivatives Association.

3. For the buyer.

4. An American option can be exercised at any time between the inception of the contract and maturity, whereas a European option can only be exercised at maturity. The terms “American” and “European” do not refer to where these options are traded or issued.

5. See Chapter 1 for a definition of complete markets.

6. For more details, see BIS Quarterly Review, May 27, 2002, Chapter 4.

7. See Chapter 10 for more details.

8. Securitization is the process of converting a pool of assets into securities (bonds) secured by those assets.

9. Within synthetic transactions, there are funded and unfunded structures.

10. Loan terms vary. The lack of uniformity in the manner in which rights and obligations are transferred results in a lack of standardized documentation for these transactions.

11. The typical maturity for a synthetic CDO is 5 years.

12. This is similar to the rationale for investing in a fund.

13. See Chapter 1 for similar results in the context of corporate securities.

14. Corresponding to a highly diversified pool of 50 corporate bonds with a 10-year maturity and the same principal balance.

15. In practice, LGD is incorporated based on the seniority and security of the asset (see Chapter 4).

16. This PD is determined by the rating of the asset, using average cumulative default rates in that specific rating class.

17. We can check that the sum of  is indeed equal to  .

18. See Chapters 5 and 6 for an introduction to factor models of credit risk.

1. There are now 13 countries that are members of the committee: the United States, Canada, France, Germany, United Kingdom, Italy, Belgium, the Netherlands, Luxembourg, Japan, Sweden, Switzerland, and Spain.

2. See Bhattacharya, Boot, and Thakor (1998), Rochet (1992), and Rajan (1998).

3. This result is interesting from a theoretical standpoint. It is, however, doubtful that in a very competitive banking environment such analysis can offer much discriminating power.

4. That is, the fact that the degree of liquidity in the banking book may be very different for various institutions. The ability of banks to refinance quickly will vary accordingly.

5. In Basel II, regulatory capital is calculated at a 1-year horizon only.

6. Smoothing earnings is very easy for banks, based on their use of provisioning and of latent profits and losses. This leads to greater opacity.

7. The capital measurement corresponded to the 10-day VaR at a 99 percent confidence level.

8. Article 42 of the third consultative paper (Basel Committee, 2003) should be mentioned. It allows regulators to accept that banks with a regional coverage keep the risk treatment corresponding to the Basel I Accord.

9. The capital invested in insurance entities will be deducted from the consolidated balance sheet of the bank and will be placed out of the banking regulatory perimeter.

10. For the most up-to-date version of the capital requirements, the reader can visit the BIS web site: http://www.bis.org.

11. The concept of risk-weighted assets existed before Basel II; in fact it was already a Basel I concept.

12. These agencies are called ECAIs—external credit assessment institutions. The assessment they provide has to rely on a review of quantitative and qualitative information.

13. The choice of option 1 or option 2 for bank exposures depends on each regulator.

14. Corporate exposures, specialized lending, sovereign exposures, bank exposures, retail exposures, and equity exposures.

15. One minus the recovery rate.

16. The fact that correlation depends on PD contributes to this concavity.

17. In some cases such as for corporates, banks, and sovereigns.

18. For further details, see Chapter 9.

19. Defined as the ratio of the IRB capital requirement for the underlying exposures in the pool to the notional or loan equivalent amount of exposures in the pool.

20. “A small number of very large banks operating across a wide range of product and geographic markets.”

21. We thank Dr. Dirk Tasche for a fruitful discussion on this topic.

22. The different treatment for SMEs can be seen as a way to take into account, at least partially, this granularity effect.

23. The IRB approach.

24. The rationale for such conservatism is that, in some countries, the 1-year horizon may not be sufficient for the calculation of economic capital, given the absence of a straightforward refinancing strategy in the context of bank distress.

25. Technical risk: the risk that credit risk, market risk, or operational risk exceeds the level of capital retained by the bank.

26. Business risk: the risk that the value of the bank collapses.

27. Indeed, whether fair value is used instead of the historical basis will depend on whether the facilities in the banking book can potentially be traded or are held to maturity only.
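Note 7 refers to a 10-day VaR at a 99 percent confidence level. As a rough illustration of what such a number represents, the sketch below uses a simple parametric approach that assumes normally distributed, zero-mean, independent daily returns and square-root-of-time scaling. These are simplifying assumptions made here for illustration, not the regulatory calculation, and the function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist


def parametric_var(position_value, daily_vol, horizon_days=10, confidence=0.99):
    """Parametric (normal) value at risk.

    Assumes zero-mean, normally distributed, independent daily returns,
    so volatility scales with the square root of the horizon.
    """
    z = NormalDist().inv_cdf(confidence)  # one-sided quantile, about 2.33 at 99%
    return position_value * daily_vol * math.sqrt(horizon_days) * z


# Hypothetical position: 1,000,000 in value with 1% daily return volatility.
var_10d = parametric_var(1_000_000.0, 0.01)
var_1d = parametric_var(1_000_000.0, 0.01, horizon_days=1)

print(f"1-day 99% VaR:  {var_1d:,.0f}")
print(f"10-day 99% VaR: {var_10d:,.0f}")
```

In this setup the 10-day figure is the 1-day figure multiplied by the square root of 10, which is the usual shortcut when daily returns are treated as independent.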